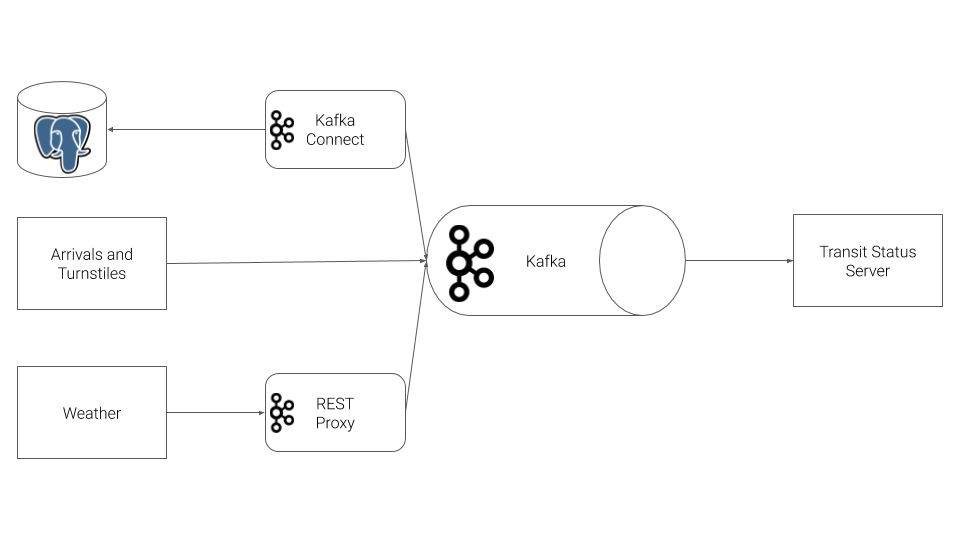

This project is a streaming event pipeline built on Apache Kafka and its ecosystem. Using public data from the Chicago Transit Authority, we constructed an event pipeline that simulates the Chicago train system and displays real-time status updates. The pipeline uses Kafka together with ecosystem tools such as REST Proxy, Kafka Connect, Faust, and KSQL.

The architecture is shown below:

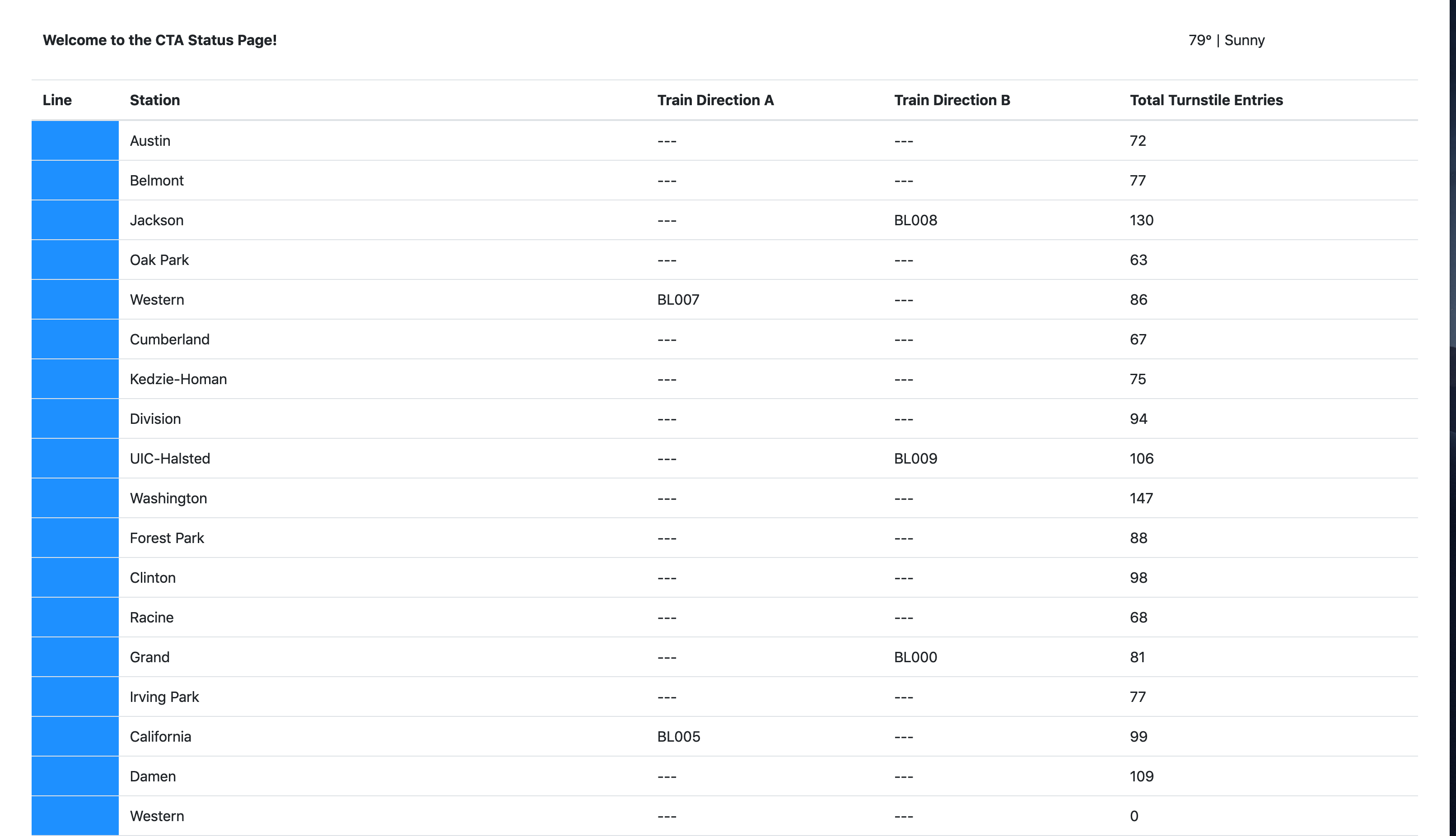

The UI displays the status of each train line.

The following are required:

- Docker

- Python 3.7

- A computer with at least 16 GB of RAM and a 4-core CPU to run the simulation

To run the simulation, you must first start up the Kafka ecosystem:

%> docker-compose up

Docker Compose takes 3-5 minutes to start, depending on your hardware. Please be patient and wait for the docker-compose logs to slow down or stop before beginning the simulation.
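Instead of watching the logs, you can poll a few of the services until they answer. The following is a minimal sketch, not part of the project: it assumes the Host URLs from the service table in this README, and the helper names `is_up` and `wait_for_services` are hypothetical. It treats any HTTP response, including an error status, as a sign that the service is listening.

```python
import http.client
import time
import urllib.error
import urllib.request

# Host URLs taken from the service table in this README.
SERVICES = {
    "Schema Registry": "http://localhost:8081",
    "REST Proxy": "http://localhost:8082",
    "Kafka Connect": "http://localhost:8083",
}


def is_up(url, timeout=2.0):
    """Return True if the service answers an HTTP request at all."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with a non-2xx status, so it is listening.
        return True
    except (urllib.error.URLError, OSError, http.client.HTTPException):
        # Connection refused, timed out, or dropped: not ready yet.
        return False


def wait_for_services(services, probe=is_up, max_wait=600.0, interval=5.0,
                      sleep=time.sleep, clock=time.monotonic):
    """Poll until every service responds or max_wait seconds elapse.

    Returns the sorted names of services that never responded
    (an empty list means everything is up).
    """
    pending = dict(services)
    deadline = clock() + max_wait
    while pending:
        # Keep only the services that still fail the probe.
        pending = {name: url for name, url in pending.items() if not probe(url)}
        if not pending or clock() >= deadline:
            break
        sleep(interval)
    return sorted(pending)


# Example call (uncomment to run against a live docker-compose stack):
# missing = wait_for_services(SERVICES)
# print("All services responding" if not missing else "Still down: %s" % missing)
```

A service that accepts HTTP requests may still be finishing its own startup, so treat an empty result as a lower bound on readiness rather than a guarantee.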

Once docker-compose is ready, the following services will be available:

| Service | Host URL | Docker URL | Username | Password |
|---|---|---|---|---|
| Public Transit Status | http://localhost:8888 | n/a | | |
| Landoop Kafka Connect UI | http://localhost:8084 | http://connect-ui:8084 | | |
| Landoop Kafka Topics UI | http://localhost:8085 | http://topics-ui:8085 | | |
| Landoop Schema Registry UI | http://localhost:8086 | http://schema-registry-ui:8086 | | |
| Kafka | PLAINTEXT://localhost:9092,PLAINTEXT://localhost:9093,PLAINTEXT://localhost:9094 | PLAINTEXT://kafka0:9092,PLAINTEXT://kafka1:9093,PLAINTEXT://kafka2:9094 | | |
| REST Proxy | http://localhost:8082 | http://rest-proxy:8082/ | | |
| Schema Registry | http://localhost:8081 | http://schema-registry:8081/ | | |
| Kafka Connect | http://localhost:8083 | http://kafka-connect:8083 | | |
| KSQL | http://localhost:8088 | http://ksql:8088 | | |
| PostgreSQL | jdbc:postgresql://localhost:5432/cta | jdbc:postgresql://postgres:5432/cta | cta_admin | chicago |

To access these services from your own machine, always use the address in the Host URL column.

When configuring services that run within Docker Compose, such as Kafka Connect, you must use the Docker URL.
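The distinction is easiest to see when both kinds of URL appear in the same task. The sketch below builds a request body for registering a JDBC source connector: the script runs on your machine, so it addresses Kafka Connect by its Host URL, while the database address inside the connector config uses the Docker URL because Kafka Connect resolves it from inside Docker Compose. The connector name, table name, ID column, and topic prefix are hypothetical placeholders, and the sketch assumes the Confluent JDBC connector plugin is available in the Kafka Connect container.

```python
import json

# Host URL: this script runs on your own machine, so it reaches
# Kafka Connect through localhost.
CONNECT_HOST_URL = "http://localhost:8083/connectors"

# Docker URL: Kafka Connect runs inside Docker Compose, so the database
# address handed to it must use the Compose service name, not localhost.
POSTGRES_DOCKER_URL = "jdbc:postgresql://postgres:5432/cta"


def build_jdbc_source_config(name="stations", table="stations", id_column="stop_id"):
    """Build the JSON body for a POST to the Kafka Connect REST API.

    name, table, and id_column are hypothetical placeholders; replace
    them with values that match your database.
    """
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
            "connection.url": POSTGRES_DOCKER_URL,
            # Credentials from the PostgreSQL row of the service table.
            "connection.user": "cta_admin",
            "connection.password": "chicago",
            "table.whitelist": table,
            "mode": "incrementing",
            "incrementing.column.name": id_column,
            # Hypothetical prefix for the topics the connector writes to.
            "topic.prefix": "connect-",
        },
    }


payload = json.dumps(build_jdbc_source_config(), indent=2)
print(payload)
```

Sending `payload` as a POST request with a `Content-Type: application/json` header to `CONNECT_HOST_URL` would register the connector. If `connection.url` pointed at `localhost` instead, Kafka Connect would look for PostgreSQL inside its own container and fail to connect.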

The simulation has four pieces: the producer, the Faust stream processing application, the KSQL stream processing application, and the consumer web server. Open a terminal window for each of the following components and run them at the same time:

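To run the producer:

%> cd producers

%> virtualenv venv

%> . venv/bin/activate

%> pip install -r requirements.txt

%> python simulation.py

To run the Faust stream processing application:

%> cd consumers

%> virtualenv venv

%> . venv/bin/activate

%> pip install -r requirements.txt

%> faust -A faust_stream worker -l info

To run the KSQL stream processing application:

%> cd consumers

%> virtualenv venv

%> . venv/bin/activate

%> pip install -r requirements.txt

%> python ksql.py

To run the consumer web server:

%> cd consumers

%> virtualenv venv

%> . venv/bin/activate

%> pip install -r requirements.txt

%> python server.py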