A kubectl plugin to access Kubernetes nodes or remote services using an SSH jump Pod

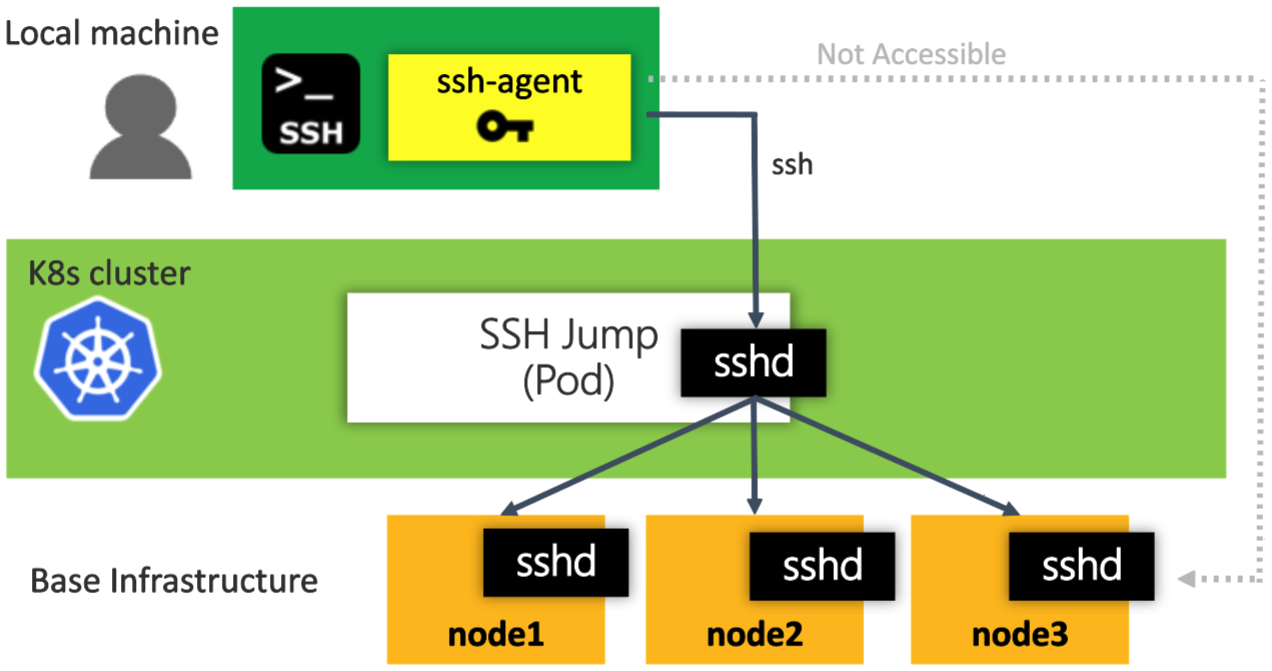

A jump host Pod is an intermediary Pod or an SSH gateway to Kubernetes node machines, through which a connection can be made to the node machines or remote services.

Consider a scenario where you want to connect to Kubernetes nodes or remote services but have to go through a jump host Pod because of firewalling, access privileges, etc. There are a number of valid reasons why jump hosts are needed.

CASE 1: SSH into Kubernetes nodes via SSH jump Pod

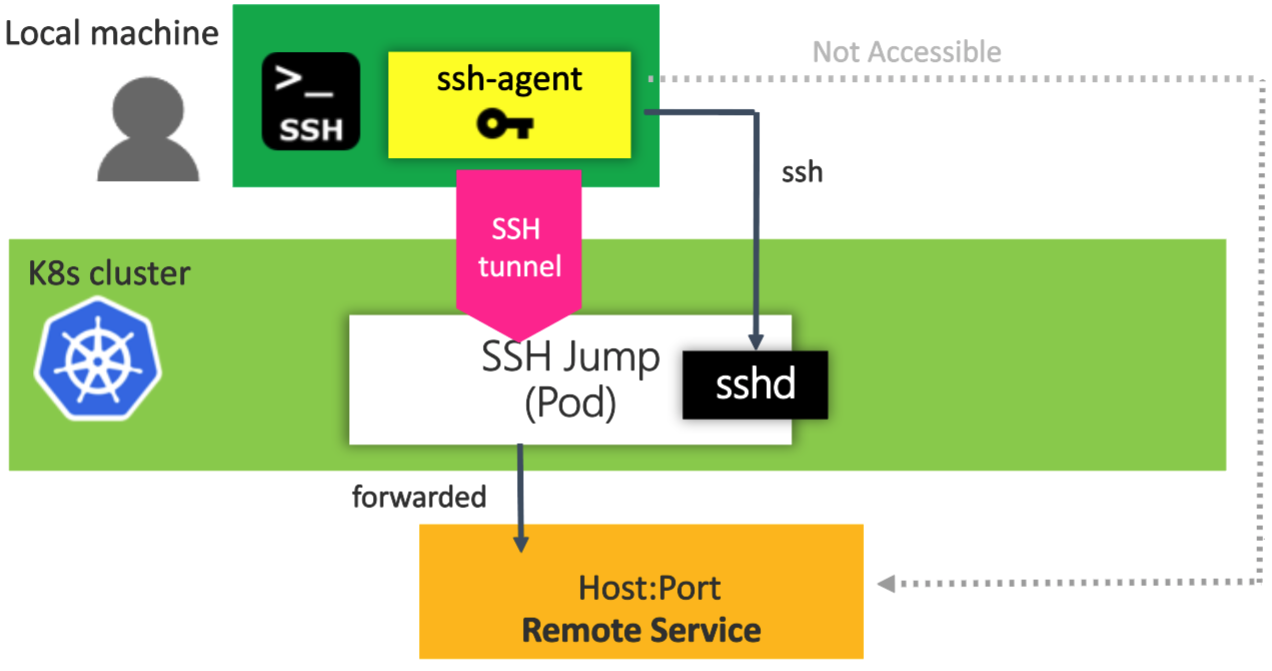

CASE 2: Connect to remote services via SSH local port forwarding. SSH local port forwarding forwards traffic from the local machine to the SSH jump Pod, which then forwards it to the remote services (host:port).

[NOTE]

- Kubectl versions >= 1.12.0 (Preferred)

  - As of Kubernetes 1.12, kubectl now allows adding external executables as subcommands. For more detail, see Extend kubectl with plugins

  - You can run the plugin with kubectl ssh-jump ...

- Kubectl versions < 1.12.0

  - You still can run the plugin directly with kubectl-ssh-jump ...
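The version rule in the note above can be sketched as a small shell helper. This is only an illustration: the function name plugin_command_for_version is hypothetical and not part of the plugin, and it assumes a plain numeric version string such as 1.12.0 (optionally prefixed with "v").

```shell
# Hypothetical helper (not part of the plugin): pick the invocation style
# from a kubectl client version string such as "1.11.3" or "v1.12.0".
plugin_command_for_version() {
  version="${1#v}"          # drop a leading "v" if present
  major="${version%%.*}"    # text before the first dot
  rest="${version#*.}"      # text after the first dot
  minor="${rest%%.*}"       # text before the next dot
  if [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 12 ]; }; then
    echo "kubectl ssh-jump"   # kubectl discovers the plugin as a subcommand
  else
    echo "kubectl-ssh-jump"   # older kubectl: call the script directly
  fi
}

plugin_command_for_version "1.12.0"   # prints: kubectl ssh-jump
plugin_command_for_version "1.11.3"   # prints: kubectl-ssh-jump
```

Version strings with suffixes on the minor number (for example "12+") are not handled by this sketch.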


This plugin needs the following programs:

- ssh(1)

- ssh-agent(1)

- ssh-keygen(1)
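One way to confirm that these programs are available before running the plugin is a quick check with command -v. The helper name missing_programs below is an assumption made for this sketch and is not something the plugin provides.

```shell
# Print the name of every listed program that is not found on PATH.
# Returns 0 when all programs are present, 1 otherwise.
missing_programs() {
  status=0
  for prog in "$@"; do
    if ! command -v "$prog" >/dev/null 2>&1; then
      echo "$prog"
      status=1
    fi
  done
  return "$status"
}

if missing="$(missing_programs ssh ssh-agent ssh-keygen)"; then
  echo "all required programs found"
else
  echo "missing programs:"
  echo "$missing"
fi
```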

This is a way to install kubectl-ssh-jump through krew. After installing krew by following its installation instructions, you can install kubectl-ssh-jump like this:

$ kubectl krew install ssh-jump

Expected output would be like this:

Updated the local copy of plugin index.

Installing plugin: ssh-jump

CAVEATS:

\

| This plugin needs the following programs:

| * ssh(1)

| * ssh-agent(1)

|

| Please follow the documentation: https://github.com/yokawasa/kubectl-plugin-ssh-jump

/

Installed plugin: ssh-jump

Once it's installed, run:

$ kubectl plugin list

The following kubectl-compatible plugins are available:

/Users/yoichi.kawasaki/.krew/bin/kubectl-krew

/Users/yoichi.kawasaki/.krew/bin/kubectl-ssh_jump

$ kubectl ssh-jump

Alternatively, install the plugin manually by copying the script to a directory in the $PATH of your shell.

# Get source

$ git clone https://github.com/yokawasa/kubectl-plugin-ssh-jump.git

$ cd kubectl-plugin-ssh-jump

$ chmod +x kubectl-ssh-jump

# Add kubectl-ssh-jump to the install path.

$ sudo cp -p kubectl-ssh-jump /usr/local/bin

Once in the $PATH, run:

$ kubectl plugin list

The following kubectl-compatible plugins are available:

/usr/local/bin/kubectl-ssh-jump

$ kubectl ssh-jump

Usage:

  kubectl ssh-jump <dest_node> [options]

Options:
  <dest_node>                     Destination node name or IP address
                                  dest_node must start from the following letters:
                                  ASCII letters 'a' through 'z' or 'A' through 'Z',
                                  the digits '0' through '9', or hyphen ('-').
                                  NOTE: Setting dest_node as 'jumphost' allows to
                                  ssh into SSH jump Pod as 'root' user
  -u, --user <sshuser>            SSH User name
  -i, --identity <identity_file>  Identity key file, or PEM(Privacy Enhanced Mail)
  -p, --pubkey <pub_key_file>     Public key file
  -P, --port <port>               SSH port for target node SSH server
                                  Defaults to 22
  -a, --args <args>               Args to exec in ssh session
  -n, --namespace <ns>            Namespace for jump pod
  --context <context>             Kubernetes context
  --pod-template <file>           Path to custom sshjump pod definition
  -l, --labels <key>=<val>[,...]  Find a pre-existing sshjump pod using labels
  --skip-agent                    Skip automatically starting SSH agent and adding
                                  SSH Identity key into the agent before SSH login
                                  (=> You need to manage SSH agent by yourself)
  --cleanup-agent                 Clearning up SSH agent at the end
                                  The agent is NOT cleaned up in case that
                                  --skip-agent option is given
  --cleanup-jump                  Clearning up sshjump pod at the end
                                  Defaults to skip cleaning up sshjump pod
  -v, --verbose                   Run ssh in verbose mode (=ssh -vvv)
  -h, --help                      Show this message

Example:

Scenario1 - You have private & public SSH key on your side

$ kubectl ssh-jump -u myuser -i ~/.ssh/id_rsa -p ~/.ssh/id_rsa.pub hostname

Scenario2 - You have .pem file but you don't have public key on your side

$ kubectl ssh-jump -u ec2-user -i ~/.ssh/mykey.pem hostname

The username, identity, pubkey, and port options are cached, so you can omit them on subsequent runs. The options are stored in a file named $HOME/.kube/kubectlssh/options

$ cat $HOME/.kube/kubectlssh/options

sshuser=myuser

identity=/Users/yoichi.kawasaki/.ssh/id_rsa_k8s

pubkey=/Users/yoichi.kawasaki/.ssh/id_rsa_k8s.pub

port=22

The plugin automatically checks whether an ssh-agent started by the plugin is already running. If it finds none, it starts ssh-agent and adds the SSH identity key to the agent before SSH login. If it finds that ssh-agent is already running, it reuses that agent instead of starting a new one.
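The cached options file shown above is a plain list of key=value lines, so it is easy to inspect from a script. The following is a minimal sketch for reading one value from it; the helper name cached_option and the OPTIONS_FILE variable are assumptions made for this example, and the plugin's own parsing may differ.

```shell
# Location of the cache file described above; override OPTIONS_FILE to test.
OPTIONS_FILE="${OPTIONS_FILE:-$HOME/.kube/kubectlssh/options}"

# Hypothetical helper: print the value stored for one key, or nothing
# if the file or the key does not exist.
cached_option() {
  key="$1"
  [ -f "$OPTIONS_FILE" ] || return 0
  # Keep only lines that start with "key=" and strip that prefix.
  sed -n "s/^${key}=//p" "$OPTIONS_FILE" | head -n 1
}

echo "user: $(cached_option sshuser)"
echo "port: $(cached_option port)"
```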

Add the --cleanup-agent option if you want to kill the created agent at the end of the command.

In addition, add the --skip-agent option if you want to skip automatically starting ssh-agent. This is useful when you already manage an ssh-agent yourself or want to start the agent manually.
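If you manage the agent yourself, the reuse-or-start behavior described above can be approximated as follows. This is not the plugin's implementation: the plugin tracks agents it started itself, whereas this sketch simply checks the SSH_AUTH_SOCK variable of the current shell. The function names agent_socket_available and ensure_agent are hypothetical.

```shell
# Returns 0 if the current shell already points at an ssh-agent socket.
agent_socket_available() {
  [ -n "${SSH_AUTH_SOCK:-}" ] && [ -S "$SSH_AUTH_SOCK" ]
}

# Reuse the agent if one is reachable, otherwise start a new one,
# then add the given identity key to it.
ensure_agent() {
  identity="$1"
  if agent_socket_available; then
    echo "reusing existing ssh-agent"
  else
    echo "starting a new ssh-agent"
    eval "$(ssh-agent -s)" >/dev/null
  fi
  ssh-add "$identity"
}

# Usage (then run the plugin with --skip-agent):
#   ensure_agent ~/.ssh/id_rsa_k8s
```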

You can customize the sshjump pod created by kubectl ssh-jump by setting the --pod-template flag to the path to a pod template on disk.

However, customized sshjump pods must be named sshjump and run in the current namespace or kubectl ssh-jump won't be able to find them without the required flags.

If you change the pod name, you must give the pod a unique set of labels and provide them on the command line by setting the --labels flag.

You can also specify the namespace and context used by kubectl ssh-jump by setting the --namespace and --context flags respectively.

List all nodes. Simply executing kubectl ssh-jump gives you the list of destination nodes as well as the command usage:

$ kubectl ssh-jump

Usage:

kubectl ssh-jump <dest_node> [options]

...snip...

List of destination node...

Hostname Internal-IP

aks-nodepool1-18558189-0 10.240.0.4

...snip...

Suppose you have private and public SSH keys on your side and you want to SSH to a node named aks-nodepool1-18558189-0. Execute the plugin with options like this:

- username: azureuser

- identity: ~/.ssh/id_rsa_k8s

- pubkey: ~/.ssh/id_rsa_k8s.pub

$ kubectl ssh-jump aks-nodepool1-18558189-0 \
  -u azureuser -i ~/.ssh/id_rsa_k8s -p ~/.ssh/id_rsa_k8s.pub

[NOTE] You can also SSH into a node using its IP address (Internal-IP) instead of its Hostname.

As explained in the usage section, the username, identity, and pubkey options are cached, so you can omit them afterward.

$ kubectl ssh-jump aks-nodepool1-18558189-0

You can pass commands to run on the destination node like this (assuming the username, identity, and pubkey options are cached):

echo "uname -a" | kubectl ssh-jump aks-nodepool1-18558189-0

(Output)

Linux aks-nodepool1-18558189-0 4.15.0-1035-azure #36~16.04.1-Ubuntu SMP Fri Nov 30 15:25:49 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

You can also pass commands with the --args or -a option:

kubectl ssh-jump aks-nodepool1-18558189-0 --args "uname -a"

(Output)

Linux aks-nodepool1-18558189-0 4.15.0-1035-azure #36~16.04.1-Ubuntu SMP Fri Nov 30 15:25:49 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

You can clean up the sshjump pod at the end of the command with the --cleanup-jump option; otherwise, the sshjump pod stays running by default.

$ kubectl ssh-jump aks-nodepool1-18558189-0 \
  -u azureuser -i ~/.ssh/id_rsa_k8s -p ~/.ssh/id_rsa_k8s.pub \
  --cleanup-jump

You can clean up ssh-agent at the end of the command with the --cleanup-agent option; otherwise, the ssh-agent process stays running once it's started.

$ kubectl ssh-jump aks-nodepool1-18558189-0 \
  -u azureuser -i ~/.ssh/id_rsa_k8s -p ~/.ssh/id_rsa_k8s.pub \
  --cleanup-agent

You can skip starting ssh-agent by giving --skip-agent. This is for the case where you already manage an ssh-agent yourself. Alternatively, you can start a new ssh-agent manually and add an identity key to it like this:

# Start ssh-agent manually

$ eval `ssh-agent`

# Add an arbitrary private key, give the path of the key file as an argument to ssh-add

$ ssh-add ~/.ssh/id_rsa_k8s

# Then, run the plugin with --skip-agent

$ kubectl ssh-jump aks-nodepool1-18558189-0 \
  -u azureuser -i ~/.ssh/id_rsa_k8s -p ~/.ssh/id_rsa_k8s.pub \
  --skip-agent

# At the end, run this if you want to kill the current agent

$ ssh-agent -k

From v0.4.0, the plugin supports the PEM (Privacy Enhanced Mail) scenario, where you created a key pair but only have the .pem private key (downloaded from AWS, for example) and do not have the public key on your side.

Suppose you've already downloaded a pem file and you want to ssh to your EKS worker node (EC2) named ip-10-173-62-96.ap-northeast-1.compute.internal using the pem, execute the plugin with options like this:

- username: ec2-user

- identity: ~/.ssh/mykey.pem

$ kubectl ssh-jump -u ec2-user -i ~/.ssh/mykey.pem ip-10-173-62-96.ap-northeast-1.compute.internal

SSH local port forwarding forwards traffic from the local machine to the SSH jump Pod, which then forwards it to the remote services (host:port).

Suppose you have private and public SSH keys on your side and you want to access a remote server (IP: 10.100.10.8) on port 3389/TCP, which is not accessible directly but is accessible via the SSH jump Pod. Execute the plugin with options like this:

- identity: ~/.ssh/id_rsa_k8s

- pubkey: ~/.ssh/id_rsa_k8s.pub

The command below forwards traffic from the local machine (localhost:13200) to the SSH jump Pod, which then forwards it to the remote server (10.100.10.8:3389).

$ kubectl ssh-jump sshjump \
  -i ~/.ssh/id_rsa_k8s -p ~/.ssh/id_rsa_k8s.pub \
  -a "-L 13200:10.100.10.8:3389"

- sshjump is the hostname for the SSH jump Pod

- The value for --args or -a should be in this format: "-L local_port:remote_address:remote_port"
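To avoid typos in the forwarding argument, it can be assembled from its three parts. The helper below is a hypothetical convenience for this README, not a feature of the plugin; it only checks that both ports are integers between 1 and 65535 and that the address is not empty.

```shell
# Hypothetical helper: build the value for -a/--args in the form
# "-L local_port:remote_address:remote_port".
local_forward_arg() {
  local_port="$1"; remote_addr="$2"; remote_port="$3"
  for port in "$local_port" "$remote_port"; do
    case "$port" in
      ""|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
      echo "port out of range: $port" >&2
      return 1
    fi
  done
  [ -n "$remote_addr" ] || { echo "remote address is empty" >&2; return 1; }
  printf '%s\n' "-L ${local_port}:${remote_addr}:${remote_port}"
}

local_forward_arg 13200 10.100.10.8 3389   # prints: -L 13200:10.100.10.8:3389

# Usage with the plugin:
#   kubectl ssh-jump sshjump -a "$(local_forward_arg 13200 10.100.10.8 3389)"
```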

Now you can access the remote server through port 13200 on your local machine.

Bug reports and pull requests are welcome on GitHub at https://github.com/yokawasa/kubectl-plugin-ssh-jump