Text2Image takes a human-written description of an object and generates a realistic image based on that description. It uses a type of generative adversarial network (GAN-CLS), implemented from scratch in TensorFlow.

You can also see a progression of GAN training

Our project is primarily based on Generative Adversarial Text to Image Synthesis [Reed et al., 2016]. Training is done in three steps:

- Encoding images into a high-dimensional embedding space with a pretrained GoogLeNet

- Training a text encoder to encode text into the same high-dimensional embedding space as step 1

- Generative adversarial training of both the generator network and the discriminator network, feeding in text encoded with the encoder from step 2

- Step one: `pre-encode.py` encodes images into a 1024-dimensional embedding space with GoogLeNet [Reference here]

- Step two: `train_text_encoder.py` trains the text encoder into the 1024-dimensional embedding space, using the encoded images from step 1

- Step three: `trainer_gan.py` trains the GAN with the already trained text encoder from step 2. In `conf.py` you can configure multi-GPU support
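For readers unfamiliar with GAN-CLS, the objective used in step three can be sketched as follows. This is a minimal illustration of the matching-aware loss described in Reed et al., 2016, not the code in `trainer_gan.py`. The function name `gan_cls_losses` and its arguments are hypothetical, and the discriminator scores are assumed to be probabilities in (0, 1).

```python
import math

def gan_cls_losses(s_real, s_wrong, s_fake, eps=1e-8):
    """Batch-averaged GAN-CLS losses (to be minimized).

    s_real:  D(real image, matching text)
    s_wrong: D(real image, mismatched text)
    s_fake:  D(generated image, matching text)
    All arguments are hypothetical lists of probabilities in (0, 1).
    """
    n = len(s_real)
    d_loss = 0.0
    g_loss = 0.0
    for sr, sw, sf in zip(s_real, s_wrong, s_fake):
        # Discriminator: accept real/matching pairs, reject the two
        # kinds of bad pair (wrong text, fake image), averaged.
        d_loss -= math.log(sr + eps) + 0.5 * (
            math.log(1.0 - sw + eps) + math.log(1.0 - sf + eps)
        )
        # Generator: make the discriminator accept fake/matching pairs.
        g_loss -= math.log(sf + eps)
    return d_loss / n, g_loss / n

# Example with an undecided discriminator (all scores 0.5).
d_loss, g_loss = gan_cls_losses([0.5], [0.5], [0.5])
```

The mismatched-text term is what distinguishes GAN-CLS from a plain conditional GAN: the discriminator is penalized for accepting a realistic image paired with the wrong description.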

The text encoder was trained on a single Nvidia K80 GPU for 12 hours. The GAN was trained on GCP with 4 Nvidia K80 GPUs for about 8 hours.

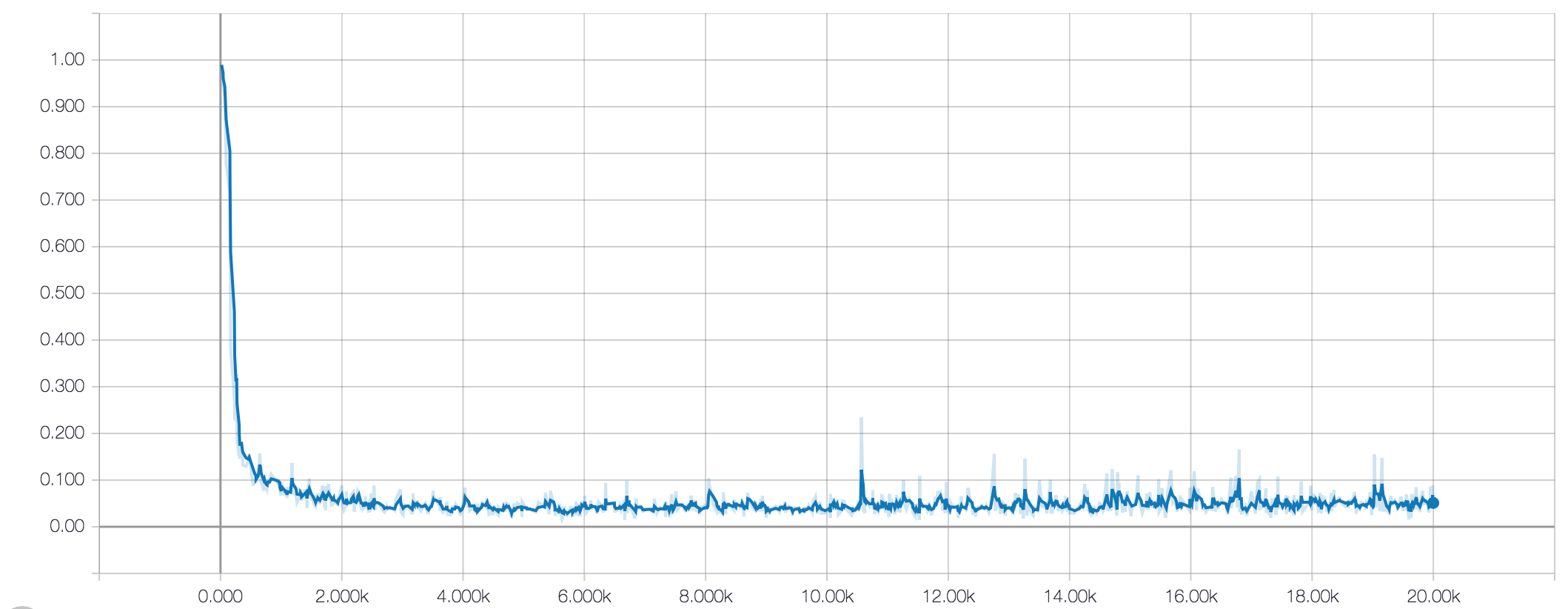

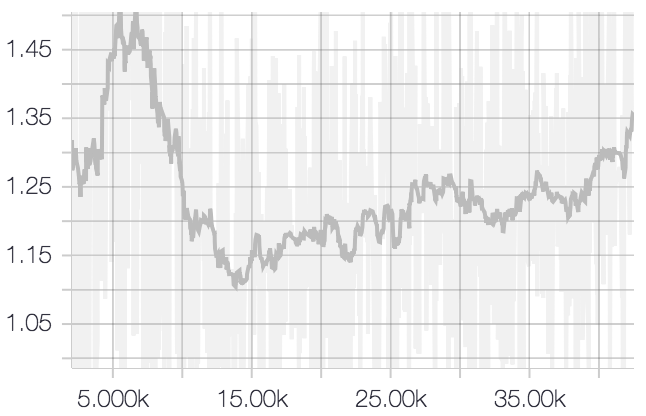

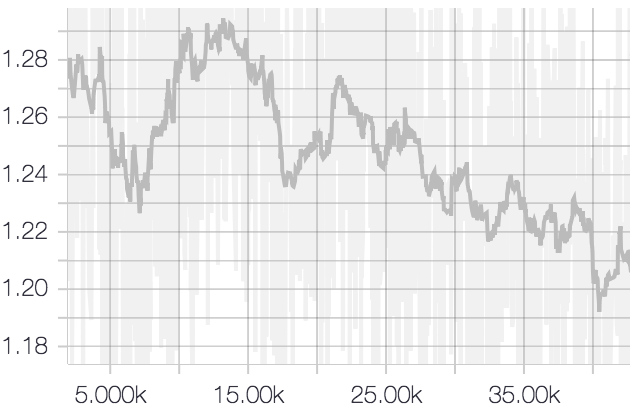

This is what the training loss looks like:

Generator (step 3) loss:

Discriminator (also step 3) loss:

- Images: Oxford-102 http://www.robots.ox.ac.uk/~vgg/data/flowers/102/

- Image description: Oxford-102 description https://github.com/reedscot/cvpr2016 (see link in README)

Please PM me

- The text encoder embedding space might not have been normalized properly, because the GAN diverges on certain text descriptions. However, a randomly initialized text encoder almost always gives stable GAN learning.
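
One common way to normalize an embedding is to scale each vector to unit L2 norm before feeding it to the GAN. The sketch below shows that idea in plain Python. It is an assumption about what "normalized properly" could mean here, it has not been verified to fix the divergence, and the helper name `l2_normalize` is hypothetical.

```python
import math

def l2_normalize(vec, eps=1e-12):
    """Scale a vector to unit L2 norm (hypothetical helper).

    eps guards against division by zero for an all-zero vector,
    which is returned unchanged.
    """
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / max(norm, eps) for v in vec]

# Example: a 2-d stand-in for a 1024-d text embedding.
unit = l2_normalize([3.0, 4.0])
```

With every text embedding on the unit sphere, the generator and discriminator see inputs of a consistent scale regardless of which description was encoded.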