Official PyTorch Implementation

Yossi Gandelsman, Alexei A. Efros, and Jacob Steinhardt

🔥 Check out our previous paper on interpreting attention heads in CLIP with text.

We provide an environment.yml file that can be used to create a Conda environment:

conda env create -f environment.yml

conda activate prsclip

To pre-compute the representations and the labels for the subsampled ImageNet data, execute:

datapath='...'

outputdir='...'

python compute_representations.py --model ViT-B-32 --pretrained openai --data_path $datapath --output_dir $outputdir # for representations and classes

python compute_classifier_projection.py --model ViT-B-32 --pretrained openai --output_dir $outputdir # for classifier weights

# Get second order for neurons in layer 9:

python compute_second_order_neuron_prs.py --model ViT-B-32 --pretrained openai --mlp_layer 9 --output_dir $outputdir --data_path $datapath # second order effect

To obtain the first PC that approximates most of the behavior of a single neuron, execute:

outputdir='...'

inputdir='...' # Set it to be the output of the previous stage

python compute_pcas.py --model ViT-B-32 --pretrained openai --mlp_layer 9 --output_dir $outputdir --input_dir $inputdir

To repeat our empirical analysis of the second-order effects, execute:

python compute_ablations.py --model ViT-B-32 --pretrained openai --mlp_layer 9 --output_dir $outputdir --input_dir $inputdir --data_path $datapath

To decompose the neuron second-order effects into text, execute:

python compute_text_set_projection.py --model ViT-B-32 --pretrained openai --output_dir $outputdir --data_path text_descriptions/30k.txt # get the text representations

# run the decomposition:

python compute_sparse_decomposition.py --model ViT-B-32 --pretrained openai --output_dir $outputdir --mlp_layer 9 --components 128 --text_descriptions 30k --device cuda:0

To verify the reconstruction quality, add the --evaluate flag.
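To make the decomposition step more concrete, here is a minimal sketch of one way to express a vector as a sparse combination of text representations, using greedy orthogonal matching pursuit in NumPy. It is an illustration under assumptions, not the code in compute_sparse_decomposition.py: the function name sparse_text_decomposition, the array shapes, and the random stand-in data are hypothetical, and the solver used in the repository may differ. The relative error printed at the end is the kind of quantity one would inspect when checking reconstruction quality.

```python
import numpy as np


def sparse_text_decomposition(target, text_embeddings, n_components):
    """Greedy orthogonal matching pursuit (hypothetical helper).

    target:          (d,) vector to explain, e.g. a neuron direction (assumed input).
    text_embeddings: (n_texts, d) text representations (assumed input).
    Returns (chosen text indices, their coefficients, reconstruction of target).
    Coefficients refer to the unit-normalized text embeddings.
    """
    target = np.asarray(target, dtype=np.float64)
    texts = np.asarray(text_embeddings, dtype=np.float64)
    # Normalize each text embedding so that correlations are comparable.
    norms = np.linalg.norm(texts, axis=1, keepdims=True)
    dictionary = texts / np.clip(norms, 1e-12, None)

    residual = target.copy()
    chosen = []
    coefs = np.zeros(0)
    for _ in range(n_components):
        # Pick the text most correlated with what is still unexplained.
        scores = np.abs(dictionary @ residual)
        if chosen:
            scores[chosen] = -np.inf  # never pick the same text twice
        chosen.append(int(np.argmax(scores)))
        # Refit all chosen coefficients jointly, then update the residual.
        basis = dictionary[chosen].T  # shape (d, k)
        coefs, *_ = np.linalg.lstsq(basis, target, rcond=None)
        residual = target - basis @ coefs
    reconstruction = target - residual
    return chosen, coefs, reconstruction


# Stand-in data; real inputs would come from the earlier pipeline stages.
rng = np.random.default_rng(0)
demo_texts = rng.standard_normal((1000, 512))
demo_direction = rng.standard_normal(512)
idx, weights, approx = sparse_text_decomposition(demo_direction, demo_texts, 16)
rel_err = np.linalg.norm(demo_direction - approx) / np.linalg.norm(demo_direction)
print(f"picked {len(idx)} texts, relative reconstruction error {rel_err:.3f}")
```

Because the coefficients are refit by least squares after every pick, the reconstruction error cannot increase as more components are added; on real data, a larger component count trades sparsity for fidelity.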

Please see a demo for visualizing the images with the largest second-order effects per neuron in visualize_neurons.ipynb.

To get adversarial images, please run:

CUDA_VISIBLE_DEVICES=0 python generate_adversarial_images.py --device cuda:0 --class_0 "vacuum cleaner" --class_1 "cat" --model ViT-B-32 --pretrained openai --dataset_path $outputdir --text_descriptions 30k --mlp_layers 9 --neurons_num 100 --overall_words 50 --results_per_generation 1

Note that we used other hyperparameters in the paper, including --mlp_layers 8 9 10.

Please download the dataset with the following commands:

mkdir imagenet_seg

cd imagenet_seg

wget http://calvin-vision.net/bigstuff/proj-imagenet/data/gtsegs_ijcv.mat

To get the evaluation results, please run:

python compute_segmentation.py --device cuda:0 --model ViT-B-32 --pretrained openai --mlp_layers 9 --data_path imagenet_seg/gtsegs_ijcv.mat --save_img --output_dir $outputdir

Note that we used other hyperparameters in the paper, including --mlp_layers 8 9 10.
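For readers who want to sanity-check saved segmentation outputs by hand, the sketch below scores a single relevance heatmap against a ground-truth mask using pixel accuracy and mean IoU. Which metrics compute_segmentation.py actually reports is not stated here, so treat this as an assumption: the function name segmentation_scores, the mean-value threshold, and the toy arrays are all hypothetical and not taken from the repository.

```python
import numpy as np


def segmentation_scores(heatmap, gt_mask, threshold=None):
    """Pixel accuracy and mean IoU for one image (hypothetical helper).

    heatmap: (H, W) float relevance map (assumed input).
    gt_mask: (H, W) array, nonzero where the object is.
    threshold: cut-off for foreground; defaults to the heatmap mean (an assumption).
    """
    heatmap = np.asarray(heatmap, dtype=np.float64)
    gt = np.asarray(gt_mask).astype(bool)
    if threshold is None:
        threshold = heatmap.mean()
    pred = heatmap > threshold

    pixel_acc = float((pred == gt).mean())

    # IoU is computed for background and foreground, then averaged.
    ious = []
    for cls in (False, True):
        inter = np.logical_and(pred == cls, gt == cls).sum()
        union = np.logical_or(pred == cls, gt == cls).sum()
        if union > 0:  # skip a class that appears in neither mask
            ious.append(inter / union)
    return pixel_acc, float(np.mean(ious))


# Toy 2x2 example with made-up values.
toy_heatmap = np.array([[0.9, 0.8], [0.1, 0.2]])
toy_mask = np.array([[1, 0], [0, 0]])
acc, miou = segmentation_scores(toy_heatmap, toy_mask)
print(f"pixel accuracy {acc:.3f}, mean IoU {miou:.3f}")
```

Dataset-level numbers are usually obtained by accumulating the intersection and union counts over all images before dividing, rather than averaging per-image scores, so results from this per-image helper may not match aggregated results exactly.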

Please see a demo for image concept discovery in concept_discovery.ipynb.

@misc{gandelsman2024neurons,

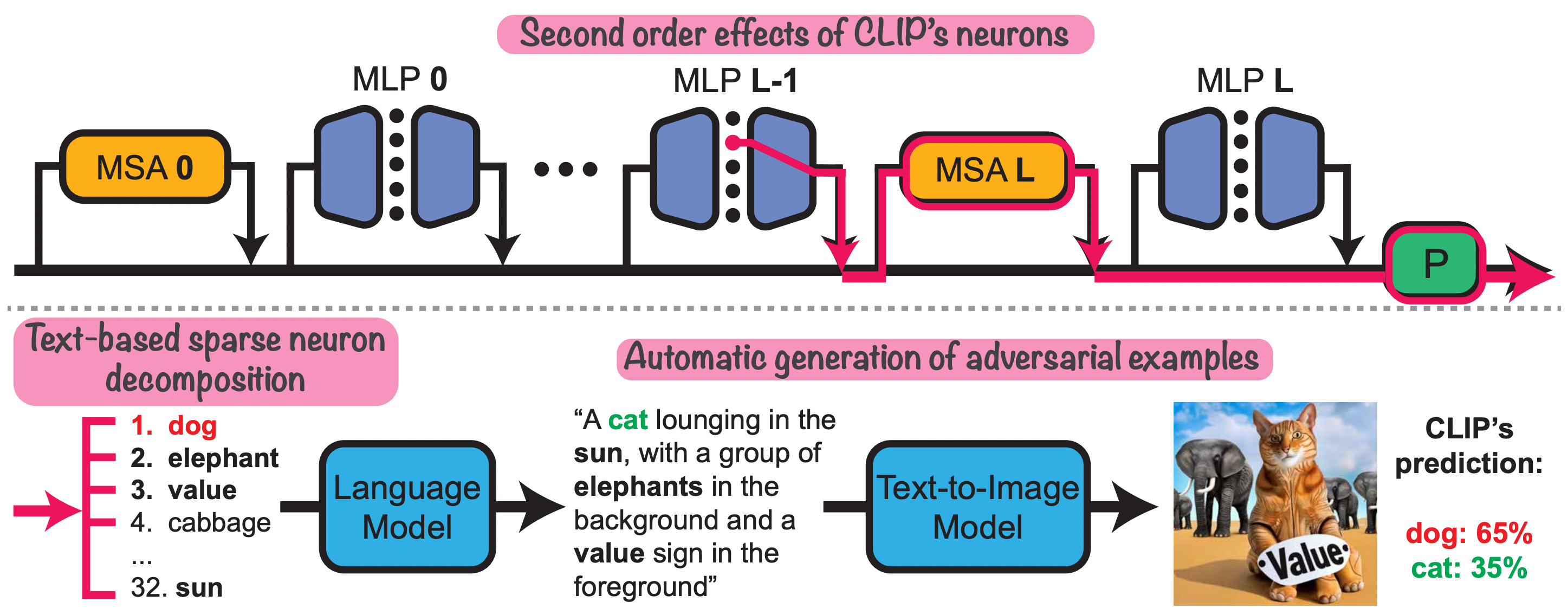

title={Interpreting the Second-Order Effects of Neurons in CLIP},

author={Yossi Gandelsman and Alexei A. Efros and Jacob Steinhardt},

year={2024},

eprint={2406.04341},

archivePrefix={arXiv},

primaryClass={cs.CV}

}