Our code and data are still being maintained and will be released soon.

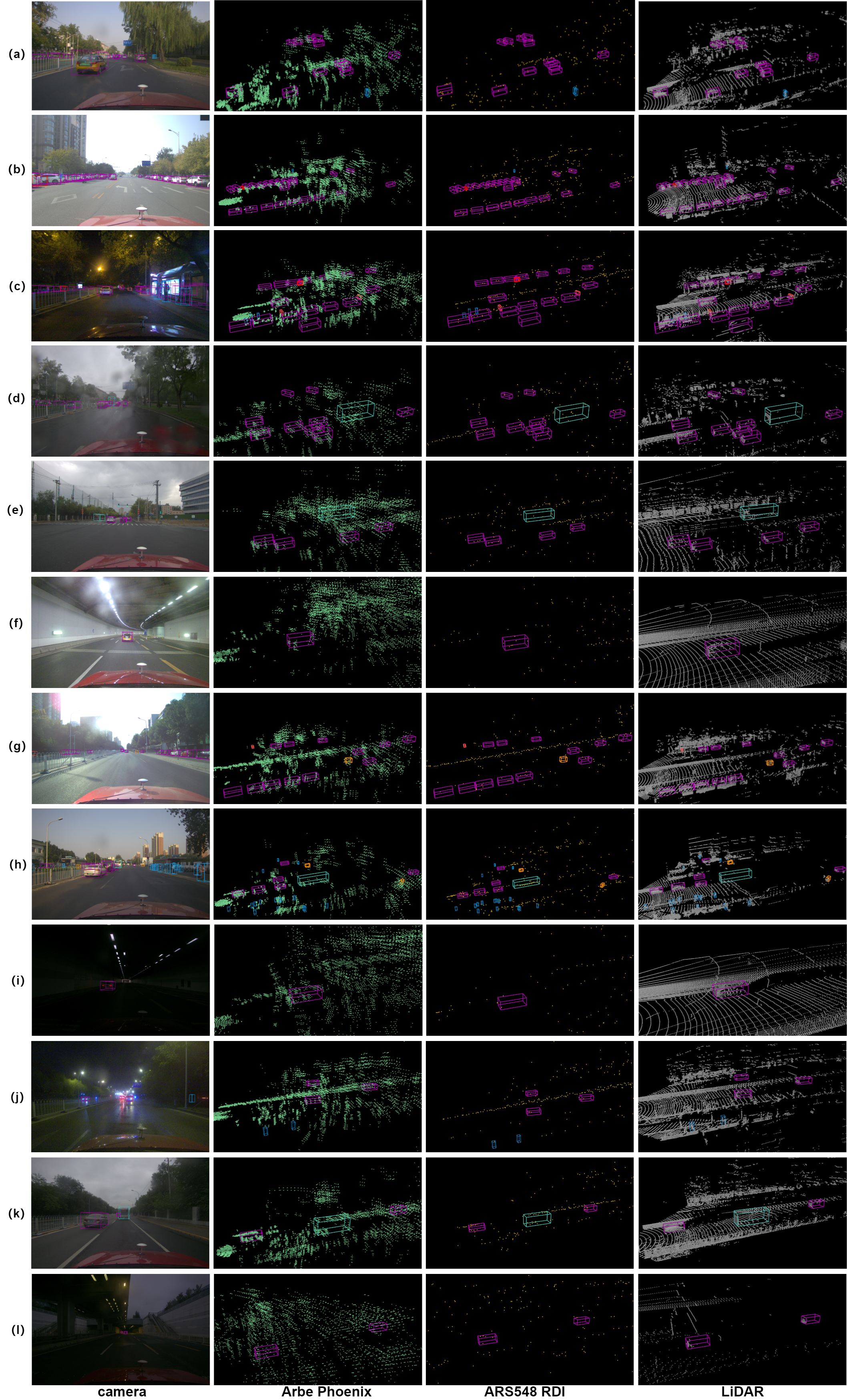

Dual-Radar (provided by ADEPTLab) is a new 4D-radar dataset for research on deep-learning-based object detection and tracking in autonomous driving. The ego vehicle is equipped with a high-resolution camera, an 80-line LiDAR, and two recent 4D radars of different models that operate in different modes (Arbe and ARS548). The dataset consists of raw data collected by the ego vehicle in scenarios such as tunnels and urban roads, under rainy, cloudy, and sunny weather. It also covers different times of day, including daytime, dusk, and nighttime. The collected raw data totals 12.5 hours and more than 600 kilometers of driving, over a route of approximately 50 kilometers. The dataset consists of 151 continuous sequences, most of them 20 seconds long, for a total of 10,007 carefully time-synchronized frames.
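The description above does not say how the sensor streams were time-synchronized. As an illustration only, one common way to pair streams recorded at different rates (10 Hz for the camera and LiDAR, 20 Hz for the radars, per Table 1) is nearest-timestamp matching with a tolerance. The function name, the 50 ms `max_gap`, and the timestamps below are hypothetical; this is a sketch, not the procedure used to build Dual-Radar.

```python
from bisect import bisect_left
from typing import List, Optional


def match_nearest(ref_times: List[float], other_times: List[float],
                  max_gap: float = 0.05) -> List[Optional[int]]:
    """For each reference timestamp (seconds), return the index of the nearest
    timestamp in `other_times` (sorted ascending), or None if the closest one
    is more than `max_gap` seconds away."""
    matches: List[Optional[int]] = []
    for t in ref_times:
        i = bisect_left(other_times, t)
        # Only the neighbors just before and just after the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_times)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(other_times[j] - t))
        matches.append(best if abs(other_times[best] - t) <= max_gap else None)
    return matches


# Hypothetical timestamps: a 10 Hz LiDAR stream and a 20 Hz radar stream with a small offset.
lidar_t = [0.00, 0.10, 0.20]
radar_t = [0.01, 0.06, 0.11, 0.16, 0.21]
print(match_nearest(lidar_t, radar_t))  # → [0, 2, 4]
```

Frames whose nearest partner lies outside the tolerance come back as `None`, so they can be dropped rather than paired with a stale measurement.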

a) First-person perspective observation

b) Third-person perspective observation

Figure 1. Up to a visual range of 80 meters in urban scenes

- Note: The left side shows the RGB camera image. On the right side, cyan points are from the Arbe radar, white points from the LiDAR, and yellow points from the ARS548 radar.

a) Sunny, daytime, up to a distance of 80 meters

b) Sunny, nighttime, up to a distance of 80 meters

c) Rainy, daytime, up to a distance of 80 meters

d) Cloudy, daytime, up to a distance of 80 meters


Figure 2. Data Visualization

The URLs listed below are useful for using the Dual-Radar dataset and benchmark:

Figure 3. Sensor Configuration and Coordinate Systems

- The specification of the autonomous vehicle system platform

Table 1. The specification of the autonomous vehicle system platform

| Sensors | Type | Resolution (Range) | Resolution (Azimuth) | Resolution (Elevation) | FoV (Range) | FoV (Azimuth) | FoV (Elevation) | FPS |
|---|---|---|---|---|---|---|---|---|
| camera | acA1920-40uc | - | 1920 px | 1200 px | - | - | - | 10 |
| LiDAR | RS-Ruby Lite | 0.05 m | 0.2° | 0.2° | 230 m | 360° | 40° | 10 |
| 4D radar | ARS 548RDI | 0.22 m | 1.2° @ 0...±15°; 1.68° @ ±45° | 2.3° | 300 m | ±60° | ±4° @ 300 m; ±14° @ <140 m | 20 |
| 4D radar | Arbe Phoenix | 0.3 m | 1.25° | 2° | 153.6 m | 100° | 30° | 20 |
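The angular resolutions in Table 1 correspond to a lateral (cross-range) cell size that grows with distance, approximately r·Δθ with Δθ in radians. The sketch below evaluates this small-angle arc-length approximation for the azimuth values listed above, using the ARS548's near-boresight 1.2°. It ignores beam shape and signal processing, so treat the numbers as rough geometric estimates rather than sensor specifications.

```python
import math

# Azimuth resolutions from Table 1 (degrees). For the ARS548 the near-boresight
# value (0...±15°) is used; the table lists 1.68° at ±45°.
AZIMUTH_RES_DEG = {
    "LiDAR (RS-Ruby Lite)": 0.2,
    "ARS548 RDI": 1.2,
    "Arbe Phoenix": 1.25,
}


def cross_range_cell(range_m: float, angular_res_deg: float) -> float:
    """Approximate lateral extent (m) of one angular bin at the given range,
    computed as the arc length r * dtheta with dtheta in radians."""
    return range_m * math.radians(angular_res_deg)


for name, res in AZIMUTH_RES_DEG.items():
    cells = ", ".join(f"{r} m: {cross_range_cell(r, res):.2f} m" for r in (20, 50, 80))
    print(f"{name:22s} {cells}")
```

For example, at 80 m a 1.2° azimuth bin spans roughly 1.7 m, while the LiDAR's 0.2° spans under 0.3 m.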

- Statistics of the number of points per frame

Table 2. Statistics of the number of points per frame

| Sensor | Minimum | Average | Maximum |
|---|---|---|---|
| LiDAR | 74386 | 116096 | 133538 |
| Arbe Phoenix | 898 | 11172 | 93721 |
| ARS548 RDI | 243 | 523 | 800 |
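Statistics like those in Table 2 could be recomputed from the point cloud files by counting the points in each frame. The sketch below assumes each frame is a KITTI-style flat float32 `.bin` file with a fixed number of values per point; the folder names and the `num_features` value for each sensor are assumptions to verify against the released data, and `point_count_stats` is a hypothetical helper.

```python
import numpy as np
from pathlib import Path
from typing import Dict


def point_count_stats(frame_dir: str, num_features: int) -> Dict[str, float]:
    """Return the min / mean / max number of points over all .bin frames in a folder.

    Assumes each file stores float32 values, `num_features` per point
    (hypothetical layout; verify against the actual dataset files)."""
    counts = []
    for path in sorted(Path(frame_dir).glob("*.bin")):
        values = np.fromfile(path, dtype=np.float32)
        if values.size % num_features != 0:
            raise ValueError(f"{path.name}: {values.size} values is not a multiple of {num_features}")
        counts.append(values.size // num_features)
    if not counts:
        raise FileNotFoundError(f"no .bin files found in {frame_dir}")
    arr = np.asarray(counts)
    return {"min": int(arr.min()), "mean": float(arr.mean()), "max": int(arr.max())}
```

A call would look like `point_count_stats("Dataset/training/velodyne", num_features=4)`; the per-point feature count of 4 is a guess, and the radar folders may use a different count.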

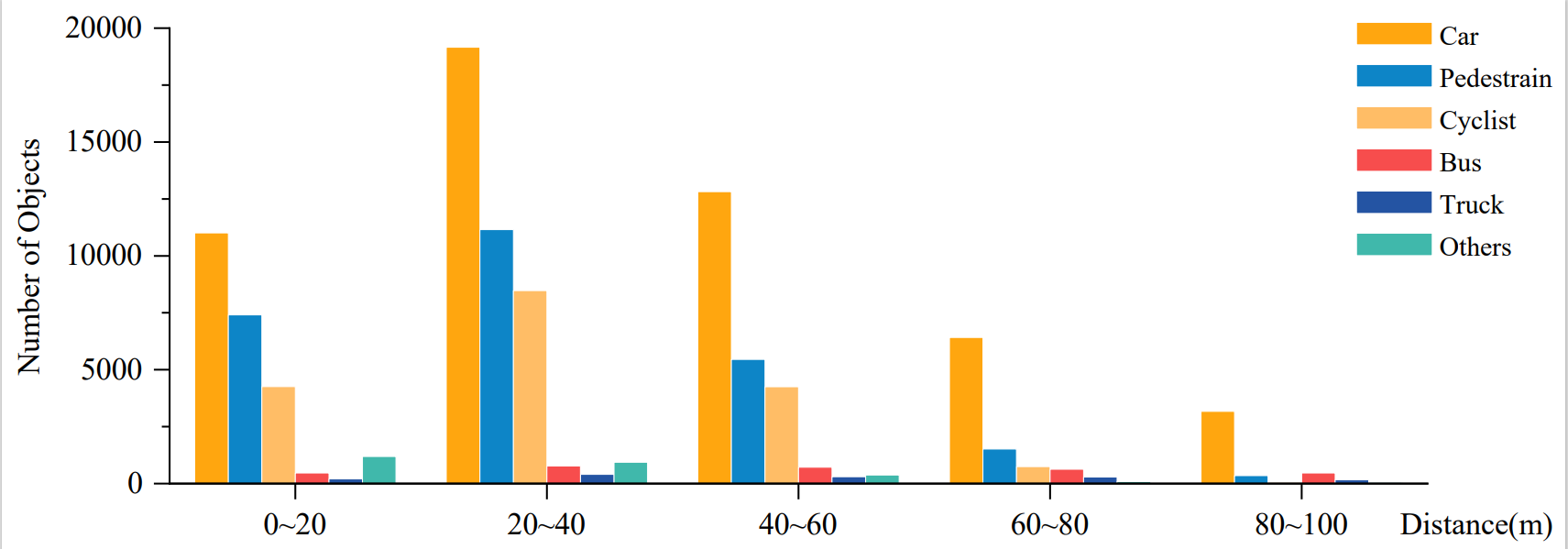

We separately counted the number of instances in each category of the Dual-Radar dataset and the distribution of weather conditions.

Figure 4. Distribution of weather conditions.

About two-thirds of our data were collected under normal weather conditions and about one-third under rainy and cloudy conditions. We collected 577 frames in rainy weather, about 5.8% of the 10,007 frames in the dataset. The rainy-weather data can be used to test the performance of different 4D radars in adverse weather.

Figure 5. Distribution of instance conditions.

We also analyzed the number of objects with each label at different distance ranges from our vehicle, as shown in the figure above. Most objects are within 60 meters of the ego vehicle.

The following documents how to use our detection frameworks with the Dual-Radar dataset. We tested the Dual-Radar detection frameworks in the following environment:

- Python 3.8.16 (the open3d version we used does not support Python 3.10+)

- Ubuntu 18.04/20.04

- Torch 1.10.1+cu113

- CUDA 11.3

- opencv 4.2.0.32

[2022-09-30] A link for accessing the dataset will be provided for further research as soon as possible.

- After all files are downloaded, please arrange the workspace directory with the following structure:

Organize your code structure as follows:

    Frameworks
    ├── checkpoints
    ├── data
    ├── docs
    ├── Dual-Radardet
    └── output

Organize the dataset according to the following file structure:

    Dataset
    ├── ImageSets
    │   ├── training.txt
    │   ├── trainingval.txt
    │   ├── val.txt
    │   └── testing.txt
    ├── training
    │   ├── arbe
    │   ├── ars548
    │   ├── calib
    │   ├── image_2
    │   ├── label_2
    │   └── velodyne
    └── testing
        ├── arbe
        ├── ars548
        ├── calib
        ├── image_2
        └── velodyne
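Before generating the data infos, it can help to confirm that the dataset matches the layout above. The following is a minimal sketch: the `EXPECTED` table is transcribed from the tree, and `missing_entries` is a hypothetical helper, not part of the Dual-Radar code.

```python
from pathlib import Path
from typing import Dict, List

# Expected entries, transcribed from the dataset tree above.
EXPECTED: Dict[str, List[str]] = {
    "ImageSets": ["training.txt", "trainingval.txt", "val.txt", "testing.txt"],
    "training": ["arbe", "ars548", "calib", "image_2", "label_2", "velodyne"],
    "testing": ["arbe", "ars548", "calib", "image_2", "velodyne"],
}


def missing_entries(root: str) -> List[str]:
    """Return the relative paths listed in EXPECTED that do not exist under `root`."""
    base = Path(root)
    missing: List[str] = []
    for parent, children in EXPECTED.items():
        for child in children:
            rel = Path(parent) / child
            if not (base / rel).exists():
                missing.append(rel.as_posix())
    return missing
```

`missing_entries("Dataset")` returns an empty list when every expected file and folder is present; otherwise it lists what is missing, for example `["testing/velodyne"]`.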

- Clone the repository

git clone https://github.com/adept-thu/Dual-Radar.git

cd Dual-Radar

- Create a conda environment

conda create -n Dual-Radardet python=3.8.16

conda activate Dual-Radardet

- Install PyTorch (we recommend PyTorch 1.10.1)

- Install the dependencies

pip install -r requirements.txt

- Install spconv (our CUDA version is 11.3)

pip install spconv-cu113

- Build packages for Dual-Radardet

python setup.py develop

- Generate the data infos by running the following command:

python -m pcdet.datasets.mine.kitti_dataset create_mine_infos tools/cfgs/dataset_configs/mine_dataset.yaml

# or, to use the Arbe data

python -m pcdet.datasets.mine.kitti_dataset_arbe create_mine_infos tools/cfgs/dataset_configs/mine_dataset_arbe.yaml

# or, to use the ARS548 data

python -m pcdet.datasets.mine.kitti_dataset_ars548 create_mine_infos tools/cfgs/dataset_configs/mine_dataset_ars548.yaml

- To train a model on a single GPU, prepare the full dataset and run

python train.py --cfg_file ${CONFIG_FILE}

- To train a model on multiple GPUs, prepare the full dataset and run

sh scripts/dist_train.sh ${NUM_GPUS} --cfg_file ${CONFIG_FILE}

- To evaluate a model on a single GPU, set the paths and run

python test.py --cfg_file ${CONFIG_FILE} --batch_size ${BATCH_SIZE} --ckpt ${CKPT}

- To evaluate a model on multiple GPUs, set the paths and run

sh scripts/dist_test.sh ${NUM_GPUS} \

--cfg_file ${CONFIG_FILE} --batch_size ${BATCH_SIZE}

Here we provide a quick demo to test a pretrained model on custom point cloud data and visualize the predicted results:

- Download a pretrained model from the links in Tables 3 to 8.

- Make sure you have installed the Open3D and Mayavi visualization tools. If not, you can install them as follows:

pip install open3d

pip install mayavi

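- Prepare your point cloud data (the input file name below is hypothetical):

import numpy as np

points = np.fromfile("my_cloud.bin", dtype=np.float32).reshape(-1, 4)  # hypothetical input: (N, 4) x, y, z, intensity

points[:, 3] = 0  # zero the intensity channel, e.g. when intensity is unavailable

np.save("my_data.npy", points)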

- Run the demo with a pretrained model and point cloud data as follows

python demo.py --cfg_file ${CONFIG_FILE} \

--ckpt ${CKPT} \

--data_path ${POINT_CLOUD_DATA}
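The preparation step above operates on a `points` array whose column 3 is zeroed. If you start from separate coordinates and intensities, the hypothetical helper `to_demo_points` below builds such an (N, 4) array, assuming the column order [x, y, z, intensity]. Zero-filling when no intensity is available mirrors the step above; min-max scaling a provided intensity to [0, 1] is an assumption, so check what the model was trained with.

```python
import numpy as np
from typing import Optional


def to_demo_points(xyz: np.ndarray, intensity: Optional[np.ndarray] = None) -> np.ndarray:
    """Build an (N, 4) float32 array of [x, y, z, intensity] for the demo.

    Assumptions (not confirmed by the demo script): intensity is the 4th column;
    a missing intensity is filled with zeros; a given intensity is min-max
    scaled to [0, 1]."""
    xyz = np.asarray(xyz, dtype=np.float32)
    if xyz.ndim != 2 or xyz.shape[1] != 3:
        raise ValueError(f"expected xyz with shape (N, 3), got {xyz.shape}")
    if intensity is None:
        inten = np.zeros(len(xyz), dtype=np.float32)
    else:
        inten = np.asarray(intensity, dtype=np.float32)
        if inten.shape != (len(xyz),):
            raise ValueError(f"expected intensity with shape ({len(xyz)},), got {inten.shape}")
        span = float(inten.max() - inten.min()) if inten.size else 0.0
        # Min-max scaling; a constant (or empty) intensity channel becomes all zeros.
        inten = (inten - inten.min()) / span if span > 0 else np.zeros_like(inten)
    return np.column_stack([xyz, inten]).astype(np.float32)
```

For example, `np.save("my_data.npy", to_demo_points(xyz))` writes a file that can then be passed as `${POINT_CLOUD_DATA}`.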

Table 3. Multimodal experimental results (3D@0.5 for Car, 3D@0.25 for Pedestrian and Cyclist)

| Baseline | Data | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VFF | camera+LiDAR | 94.60 | 84.14 | 78.77 | 39.79 | 35.99 | 36.54 | 55.87 | 51.55 | 51.00 | model |
| VFF | camera+Arbe | 31.83 | 14.43 | 11.30 | 0.01 | 0.01 | 0.01 | 0.20 | 0.07 | 0.08 | model |
| VFF | camera+ARS548 | 12.60 | 6.53 | 4.51 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | model |
| M2Fusion | LiDAR+Arbe | 89.71 | 79.70 | 64.32 | 27.79 | 20.41 | 19.58 | 41.85 | 36.20 | 35.14 | model |
| M2Fusion | LiDAR+ARS548 | 89.91 | 78.17 | 62.37 | 34.28 | 29.89 | 29.17 | 42.42 | 40.92 | 39.98 | model |
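In the captions, `3D@0.5` and `3D@0.25` (and the BEV equivalents) name the IoU threshold a detection must reach against a ground-truth box to count as correct: 0.5 for Car and 0.25 for Pedestrian and Cyclist. The sketch below illustrates that criterion with axis-aligned boxes in a hypothetical (x, y, z, l, w, h) center-and-size format. This is a simplification: KITTI-style evaluation compares yaw-rotated boxes, which requires polygon intersection in the ground plane, so these values are not what the benchmark computes for rotated objects.

```python
from typing import Sequence

# Box format (hypothetical): (x, y, z, l, w, h) = center coordinates and
# extents along the x, y and z axes. Rotation (yaw) is ignored.
Box = Sequence[float]

# IoU thresholds from the table captions.
IOU_THRESHOLDS = {"Car": 0.5, "Pedestrian": 0.25, "Cyclist": 0.25}


def iou_3d_axis_aligned(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned 3D boxes."""
    overlap = 1.0
    for axis in range(3):
        lo = max(a[axis] - a[axis + 3] / 2, b[axis] - b[axis + 3] / 2)
        hi = min(a[axis] + a[axis + 3] / 2, b[axis] + b[axis + 3] / 2)
        if hi <= lo:
            return 0.0  # the boxes are separated along this axis
        overlap *= hi - lo
    vol_a = a[3] * a[4] * a[5]
    vol_b = b[3] * b[4] * b[5]
    return overlap / (vol_a + vol_b - overlap)


def is_match(cls: str, pred: Box, gt: Box) -> bool:
    """True if the prediction's IoU with the ground truth exceeds the class threshold."""
    return iou_3d_axis_aligned(pred, gt) > IOU_THRESHOLDS[cls]
```

A pedestrian-sized box shifted by half its length has an IoU of about 0.33: it passes the 0.25 Pedestrian threshold but would fail the 0.5 Car threshold. An analogous 2D computation over the horizontal axes gives the bird's-eye-view overlap.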

Table 4. Multimodal experimental results (BEV@0.5 for Car, BEV@0.25 for Pedestrian and Cyclist)

| Baseline | Data | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VFF | camera+LiDAR | 94.60 | 84.28 | 80.55 | 40.32 | 36.59 | 37.28 | 55.87 | 51.55 | 51.00 | model |
| VFF | camera+Arbe | 36.09 | 17.20 | 13.23 | 0.01 | 0.01 | 0.01 | 0.20 | 0.08 | 0.08 | model |
| VFF | camera+ARS548 | 16.34 | 9.58 | 6.61 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | model |
| M2Fusion | LiDAR+Arbe | 90.91 | 85.73 | 70.16 | 28.05 | 20.68 | 20.47 | 53.06 | 47.83 | 46.32 | model |
| M2Fusion | LiDAR+ARS548 | 91.14 | 82.57 | 66.65 | 34.98 | 30.28 | 29.92 | 43.12 | 41.57 | 40.29 | model |
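Each entry in these tables is reported per class and difficulty level, but the section does not say which average-precision variant is used. As one common KITTI-style option, AP with 40 recall positions (R40) averages the interpolated precision at recall 1/40, 2/40, and so on up to 1. The sketch below assumes detections have already been matched to ground truth (for example by an IoU threshold test) and ignores KITTI's difficulty filtering and ignored regions. It is an approximation for illustration, not the benchmark's evaluation code, and `average_precision_r40` is a hypothetical name.

```python
import numpy as np
from typing import Sequence


def average_precision_r40(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """AP (in percent) averaged over 40 recall positions, in the spirit of KITTI R40.

    scores: confidence of each detection; is_tp: whether it matched a GT box;
    num_gt: number of ground-truth objects."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections from most to least confident.
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / np.maximum(tp_cum + fp_cum, 1e-12)
    ap = 0.0
    for r in np.arange(1, 41) / 40:
        # Interpolated precision: best precision at any recall >= r (0 if never reached).
        mask = recall >= r - 1e-12
        ap += precision[mask].max() if mask.any() else 0.0
    return 100.0 * ap / 40
```

With two ground-truth objects and detections ranked `[True, False]`, recall never exceeds 0.5, so only the first 20 of the 40 recall positions contribute and the result is 50.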

Table 5. Single-modality experimental results (3D@0.5 for Car, 3D@0.25 for Pedestrian and Cyclist)

| Baseline | Data | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars | LiDAR | 81.78 | 55.40 | 44.53 | 43.22 | 38.87 | 38.45 | 25.60 | 24.35 | 23.97 | model |
| PointPillars | Arbe | 49.06 | 27.64 | 18.63 | 0.00 | 0.00 | 0.00 | 0.19 | 0.12 | 0.12 | model |
| PointPillars | ARS548 | 11.94 | 6.12 | 3.76 | 0.00 | 0.00 | 0.00 | 0.99 | 0.63 | 0.58 | model |
| RDIoU | LiDAR | 63.43 | 40.80 | 32.92 | 33.71 | 29.35 | 28.96 | 38.26 | 35.62 | 35.02 | model |
| RDIoU | Arbe | 51.49 | 26.74 | 17.83 | 0.00 | 0.00 | 0.00 | 0.51 | 0.37 | 0.35 | model |
| RDIoU | ARS548 | 5.96 | 3.77 | 2.29 | 0.00 | 0.00 | 0.00 | 0.21 | 0.15 | 0.15 | model |
| VoxelRCNN | LiDAR | 86.41 | 56.91 | 42.38 | 52.65 | 46.33 | 45.80 | 38.89 | 35.13 | 34.52 | model |
| VoxelRCNN | Arbe | 55.47 | 30.17 | 19.82 | 0.03 | 0.02 | 0.02 | 0.15 | 0.06 | 0.06 | model |
| VoxelRCNN | ARS548 | 18.37 | 8.24 | 4.97 | 0.00 | 0.00 | 0.00 | 0.24 | 0.21 | 0.21 | model |
| Cas-V | LiDAR | 80.60 | 58.98 | 49.83 | 55.43 | 49.11 | 48.47 | 42.84 | 40.32 | 39.09 | model |
| Cas-V | Arbe | 27.96 | 10.27 | 6.21 | 0.02 | 0.01 | 0.01 | 0.05 | 0.04 | 0.04 | model |
| Cas-V | ARS548 | 7.71 | 3.05 | 1.86 | 0.00 | 0.00 | 0.00 | 0.08 | 0.06 | 0.06 | model |
| Cas-T | LiDAR | 73.41 | 45.74 | 35.09 | 58.84 | 52.08 | 51.45 | 35.42 | 33.78 | 33.36 | model |
| Cas-T | Arbe | 14.15 | 6.38 | 4.27 | 0.00 | 0.00 | 0.00 | 0.09 | 0.06 | 0.05 | model |
| Cas-T | ARS548 | 3.16 | 1.60 | 1.00 | 0.00 | 0.00 | 0.00 | 0.36 | 0.20 | 0.20 | model |

Table 6. Single-modality experimental results (BEV@0.5 for Car, BEV@0.25 for Pedestrian and Cyclist)

| Baseline | Data | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars | LiDAR | 81.81 | 55.49 | 45.69 | 43.60 | 39.59 | 38.92 | 38.78 | 38.74 | 38.42 | model |
| PointPillars | Arbe | 54.63 | 35.09 | 25.19 | 0.00 | 0.00 | 0.00 | 0.41 | 0.24 | 0.23 | model |
| PointPillars | ARS548 | 14.40 | 8.14 | 5.26 | 0.00 | 0.00 | 0.00 | 2.27 | 1.64 | 1.53 | model |
| RDIoU | LiDAR | 63.44 | 41.25 | 33.74 | 33.97 | 29.62 | 29.22 | 49.33 | 47.48 | 46.85 | model |
| RDIoU | Arbe | 55.27 | 31.48 | 21.80 | 0.01 | 0.01 | 0.01 | 0.84 | 0.66 | 0.65 | model |
| RDIoU | ARS548 | 7.13 | 5.00 | 3.21 | 0.00 | 0.00 | 0.00 | 0.61 | 0.46 | 0.44 | model |
| VoxelRCNN | LiDAR | 86.41 | 56.95 | 42.43 | 41.21 | 53.50 | 45.93 | 47.47 | 45.43 | 43.85 | model |
| VoxelRCNN | Arbe | 59.32 | 34.86 | 23.77 | 0.02 | 0.02 | 0.02 | 0.21 | 0.15 | 0.15 | model |
| VoxelRCNN | ARS548 | 21.34 | 9.81 | 6.11 | 0.00 | 0.00 | 0.00 | 0.33 | 0.30 | 0.30 | model |
| Cas-V | LiDAR | 80.60 | 59.12 | 51.17 | 55.66 | 49.35 | 48.72 | 51.51 | 50.03 | 49.35 | model |
| Cas-V | Arbe | 30.52 | 12.28 | 7.82 | 0.02 | 0.02 | 0.02 | 0.13 | 0.05 | 0.05 | model |
| Cas-V | ARS548 | 8.81 | 3.74 | 2.38 | 0.00 | 0.00 | 0.00 | 0.25 | 0.21 | 0.19 | model |
| Cas-T | LiDAR | 73.42 | 45.79 | 35.31 | 59.06 | 52.36 | 51.74 | 44.35 | 44.41 | 42.88 | model |
| Cas-T | Arbe | 22.85 | 13.06 | 9.18 | 0.00 | 0.00 | 0.00 | 0.17 | 0.08 | 0.08 | model |
| Cas-T | ARS548 | 4.21 | 2.21 | 1.49 | 0.00 | 0.00 | 0.00 | 0.68 | 0.43 | 0.42 | model |

Table 7. Single-modality experimental results in the rainy scenario (3D@0.5 for Car, 3D@0.25 for Pedestrian and Cyclist)

| Baseline | Data | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars | LiDAR | 60.57 | 44.31 | 41.91 | 32.74 | 28.82 | 28.67 | 29.12 | 25.75 | 24.24 | model |
| PointPillars | Arbe | 68.24 | 48.98 | 42.80 | 0.00 | 0.00 | 0.00 | 0.19 | 0.10 | 0.09 | model |
| PointPillars | ARS548 | 11.87 | 8.41 | 7.32 | 0.11 | 0.09 | 0.08 | 0.93 | 0.36 | 0.30 | model |
| RDIoU | LiDAR | 44.93 | 39.32 | 39.09 | 24.28 | 21.63 | 21.43 | 52.64 | 43.92 | 42.04 | model |
| RDIoU | Arbe | 67.81 | 49.59 | 43.24 | 0.00 | 0.00 | 0.00 | 0.38 | 0.30 | 0.28 | model |
| RDIoU | ARS548 | 5.87 | 5.48 | 4.68 | 0.00 | 0.00 | 0.00 | 0.09 | 0.01 | 0.01 | model |
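Table 7 can be read against Table 5 by subtracting corresponding entries. The sketch below does this for the PointPillars Car columns; the numbers are copied from the two tables, and the dictionary layout and function name are only for illustration.

```python
from typing import Dict

# PointPillars Car 3D@0.5 results (Easy, Mod., Hard), copied from Table 5 and Table 7.
OVERALL = {"LiDAR": (81.78, 55.40, 44.53), "Arbe": (49.06, 27.64, 18.63), "ARS548": (11.94, 6.12, 3.76)}
RAINY = {"LiDAR": (60.57, 44.31, 41.91), "Arbe": (68.24, 48.98, 42.80), "ARS548": (11.87, 8.41, 7.32)}

LEVELS = ("Easy", "Mod.", "Hard")


def rainy_minus_overall(sensor: str) -> Dict[str, float]:
    """Per-difficulty difference (rainy - overall), in the tables' units."""
    return {lvl: round(r - o, 2) for lvl, r, o in zip(LEVELS, RAINY[sensor], OVERALL[sensor])}


for sensor in OVERALL:
    print(sensor, rainy_minus_overall(sensor))
```

In these rows, the LiDAR model scores lower in the rainy scenario (for example, -21.21 at Easy) while the Arbe model scores higher (+19.18 at Easy). Because the rainy results come from a smaller and different set of frames, this subtraction alone does not isolate the effect of rain.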

Table 8. Single-modality experimental results in the rainy scenario (BEV@0.5 for Car, BEV@0.25 for Pedestrian and Cyclist)

| Baseline | Data | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars | LiDAR | 60.57 | 44.56 | 42.49 | 32.74 | 28.82 | 28.67 | 44.39 | 40.36 | 38.64 | model |
| PointPillars | Arbe | 74.50 | 59.68 | 54.34 | 0.00 | 0.00 | 0.00 | 0.32 | 0.16 | 0.15 | model |
| PointPillars | ARS548 | 14.16 | 11.32 | 9.82 | 0.11 | 0.09 | 0.08 | 2.26 | 1.43 | 1.20 | model |
| RDIoU | LiDAR | 44.93 | 39.39 | 39.86 | 24.28 | 21.63 | 21.43 | 10.80 | 52.44 | 50.28 | model |
| RDIoU | Arbe | 70.09 | 54.17 | 47.64 | 0.00 | 0.00 | 0.00 | 0.63 | 0.45 | 0.45 | model |
| RDIoU | ARS548 | 6.36 | 6.51 | 5.46 | 0.00 | 0.00 | 0.00 | 0.13 | 0.08 | 0.08 | model |

The Dual-Radar dataset is published under the CC BY-NC-ND License, and all code is published under the Apache License 2.0.