💡 Other projects from our team on discrete-tokenizer-based multimodal GenAI may interest you ✨.

[NeurIPS 2024] Stabilize the Latent Space for Image Autoregressive Modeling: A Unified Perspective

Yongxin Zhu, Bocheng Li, Hang Zhang, Xin Li, Linli Xu, Lidong Bing

[ACL 2024] Generative Pre-Trained Speech Language Model with Efficient Hierarchical Transformer

Yongxin Zhu, Dan Su, Liqiang He, Linli Xu, Dong Yu

(Adopted by Moshi)

[EMNLP 2023] DiffS2UT: A Semantic Preserving Diffusion Model for Textless Direct Speech-to-Speech Translation

Yongxin Zhu, Zhujin Gao, Xinyuan Zhou, Zhongyi Ye, Linli Xu

Algorithm for SimVQ

You can find the core code here https://github.com/youngsheen/SimVQ/blob/main/taming/modules/vqvae/quantize.py#L28-L33
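The linked lines are the authoritative implementation. As a rough illustration only, the sketch below assumes the core idea is to keep the codebook frozen and train a single linear layer that projects it before the nearest-neighbour lookup. The class name `SimVQSketch`, its arguments, and the loss weighting are hypothetical and are not taken from the repository.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SimVQSketch(nn.Module):
    """Illustrative quantizer: frozen codebook + one learnable linear layer (assumed design)."""

    def __init__(self, num_codes: int, dim: int, beta: float = 0.25):
        super().__init__()
        self.beta = beta  # commitment weight (hypothetical default)
        self.codebook = nn.Embedding(num_codes, dim)
        nn.init.normal_(self.codebook.weight, mean=0.0, std=dim ** -0.5)
        self.codebook.weight.requires_grad_(False)  # the raw codebook is never updated
        self.proj = nn.Linear(dim, dim, bias=False)  # the only trainable quantizer weights

    def forward(self, z: torch.Tensor):
        # z: (batch, dim) encoder outputs
        codes = self.proj(self.codebook.weight)  # (num_codes, dim) projected codebook
        dists = torch.cdist(z, codes)            # (batch, num_codes) Euclidean distances
        indices = dists.argmin(dim=1)            # nearest projected code per input
        z_q = codes[indices]                     # (batch, dim)
        # Codebook term pulls the projected codes toward the encoder outputs;
        # commitment term pulls the encoder outputs toward the chosen codes.
        loss = F.mse_loss(z_q, z.detach()) + self.beta * F.mse_loss(z, z_q.detach())
        # Straight-through estimator: forward uses z_q, backward passes gradients to z.
        z_q = z + (z_q - z).detach()
        return z_q, indices, loss
```

In this sketch, each update to `proj` moves every projected code at once, not only the codes selected in the current batch.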

Table 1. Reconstruction performance of different tokenizers on

| Method | Codebook Size | Codebook Utilization | rFID | LPIPS | PSNR | SSIM | Checkpoint |
|---|---|---|---|---|---|---|---|
| VQGAN | 65,536 | 1.4% | 3.74 | 0.17 | 22.20 | 70.6 | - |
| VQGAN | 65,536 | 4.5% | 3.23 | 0.15 | 22.89 | 72.3 | - |
| VQGAN-FC | 65,536 | 100.0% | 2.63 | 0.13 | 23.79 | 77.5 | - |
| FSQ | 64,000 | 100.0% | 2.80 | 0.13 | 23.63 | 75.8 | - |
| LFQ | 65,536 | 100.0% | 2.88 | 0.13 | 23.60 | 77.2 | - |
| VQGAN-LC | 65,536 | 100.0% | 2.40 | 0.13 | 23.98 | 77.3 | - |
| SimVQ (ours) | 65,536 | 100.0% | 2.24 | 0.12 | 24.15 | 78.4 | - |
| SimVQ (ours) | 262,144 | 100.0% | 1.99 | 0.11 | 24.68 | 80.3 | - |
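The Codebook Utilization column is read here as the share of codebook entries selected at least once over the evaluation set. The helper below is a hypothetical sketch of that definition, not the repository's evaluation code.

```python
from typing import Iterable


def codebook_utilization(indices: Iterable[int], codebook_size: int) -> float:
    """Return the percentage of codebook entries that appear at least once in `indices`."""
    if codebook_size <= 0:
        raise ValueError("codebook_size must be positive")
    # Collect distinct, in-range code indices.
    used = {i for i in indices if 0 <= i < codebook_size}
    return 100.0 * len(used) / codebook_size


# Toy example: 3 distinct codes used out of a codebook of 8.
print(codebook_utilization([0, 5, 5, 7, 0], codebook_size=8))  # 37.5
```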

Table 2. Reconstruction performance of different tokenizers on the LibriTTS test-clean/test-other sets (values are reported as clean/other).

| Method | Bandwidth | Codebook Utilization | UTMOS | PESQ | STOI | V/UV F1 | Checkpoint |
|---|---|---|---|---|---|---|---|
| Encodec | 3.0 kbps | -/-% | 2.31/2.09 | 2.05/2.05 | 0.90/0.88 | 0.92/0.89 | - |
| Vocos | 3.0 kbps | -/-% | 3.53/3.06 | 2.40/2.19 | 0.92/0.90 | 0.94/0.91 | - |
| SpeechTokenizer | 3.0 kbps | -/-% | 3.56/3.02 | 1.93/1.74 | 0.88/0.84 | 0.93/0.89 | - |
| WavTokenizer | 0.9 kbps | 100/100% | 3.74/3.43 | 2.01/2.26 | 0.89/0.89 | 0.92/0.92 | - |
| WavTokenizer | 1.05 kbps | 27/-% | 4.00/- | 2.36/- | 0.81/- | 0.94/- | - |
| SimVQ (ours) | 0.9 kbps | 100.0/100.0% | 4.00/3.51 | 2.33/2.08 | 0.91/0.88 | 0.94/0.91 | - |
| SimVQ (ours) | 1.35 kbps | 95.6/94.7% | 4.03/3.53 | 2.61/2.31 | 0.93/0.90 | 0.95/0.93 | - |
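For a tokenizer that emits one index per frame, bandwidth follows from the token rate and the codebook size: bits per second = tokens per second × log2(codebook size). The token rate and codebook sizes in the sketch below are illustrative assumptions chosen to show the arithmetic; they are not stated in the table, and the function name is hypothetical.

```python
import math


def bandwidth_kbps(tokens_per_second: float, codebook_size: int, num_codebooks: int = 1) -> float:
    """Bitrate in kbps for a tokenizer that emits `num_codebooks` indices per frame."""
    bits_per_token = math.log2(codebook_size)
    return tokens_per_second * num_codebooks * bits_per_token / 1000.0


# Assumed values for illustration only: 75 tokens/s with 4,096 or 262,144 codes.
print(bandwidth_kbps(75, 4096))    # 0.9
print(bandwidth_kbps(75, 262144))  # 1.35
```

Under these assumed settings, growing the codebook from 2^12 to 2^18 entries adds 6 bits per token, which is why a larger codebook raises the bandwidth at a fixed token rate.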

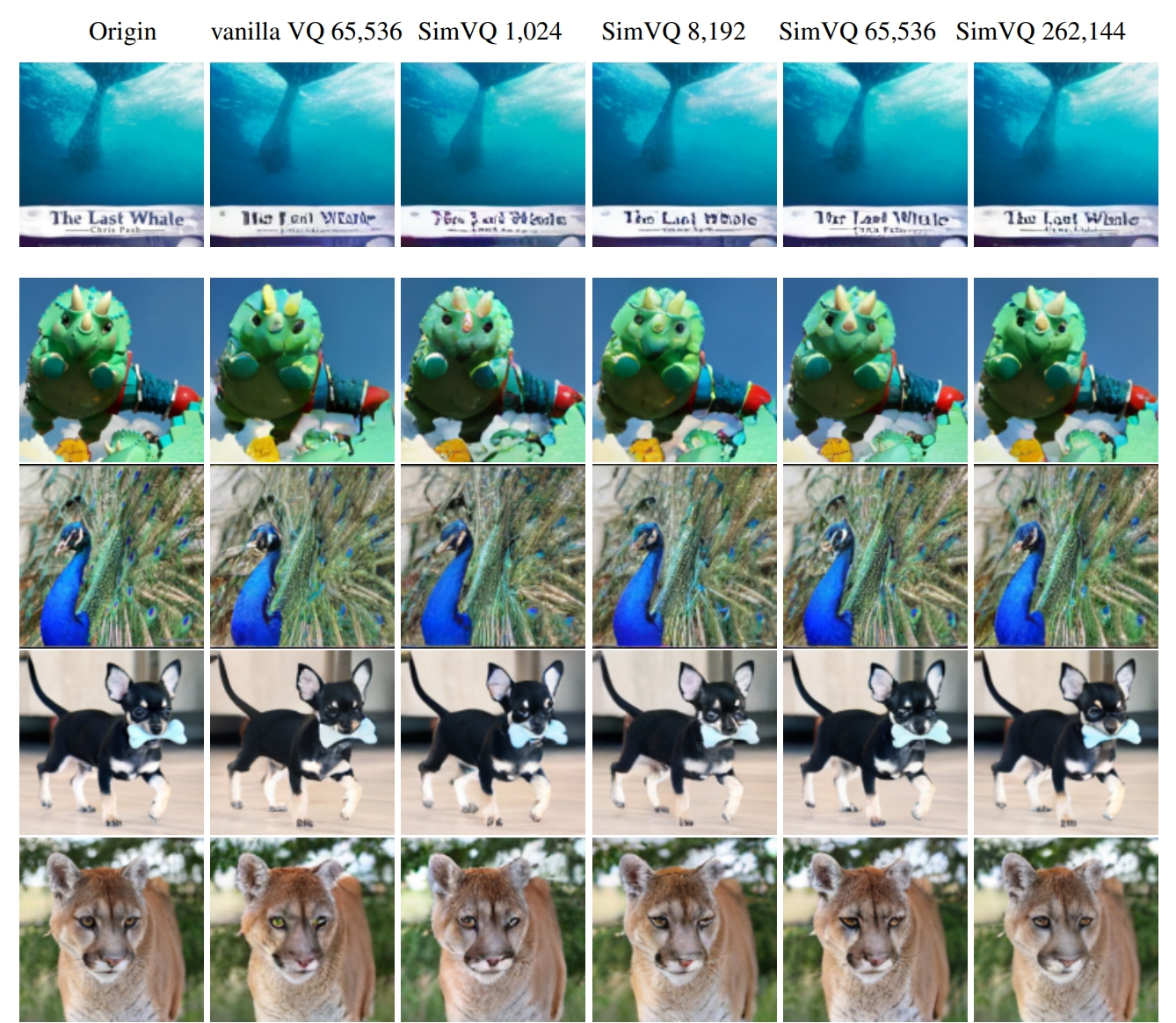

Figure 2. Visualization of the Open-MAGVIT2 tokenizer trained on ImageNet (imagenet_simvq_128_Base version). (a) shows the original images and (b) shows the reconstructed images.

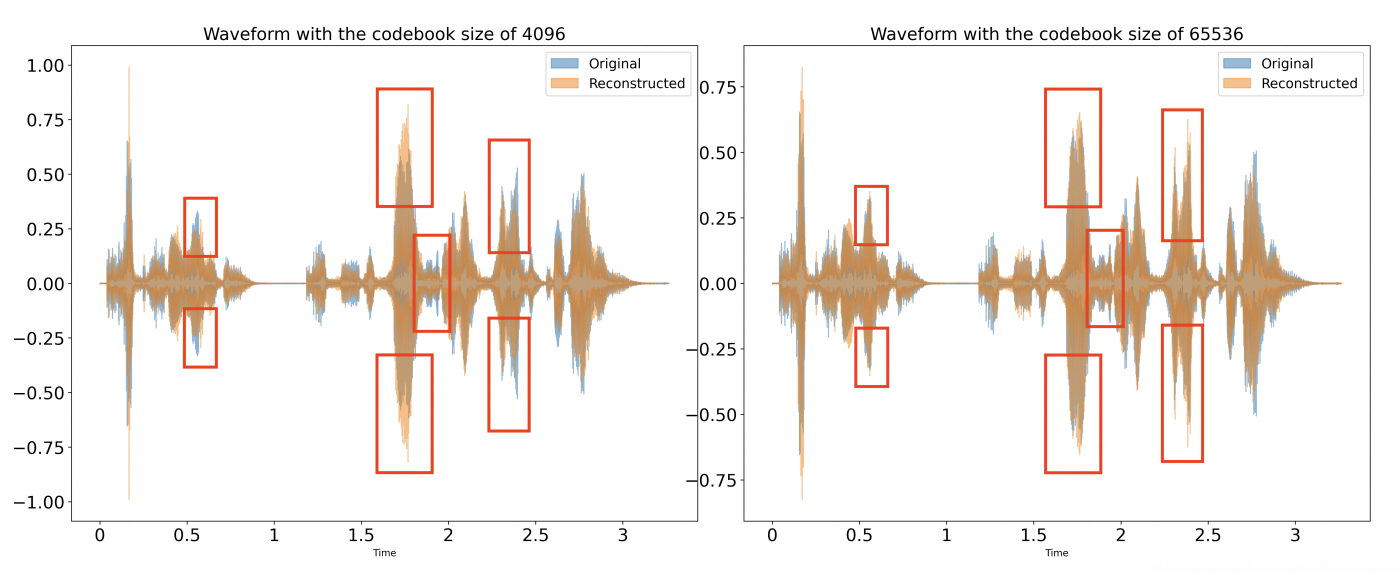

Figure 3. Visualization of the Open-MAGVIT2 tokenizer trained on LibriTTS (libritts_24khz version). (a) shows the original images and (b) shows the reconstructed images.

- Dependencies:

pip install -r requirements.txt

- Extra dependencies for audio evaluation:

pip install -r requirements_audio.txt

- Datasets

    imagenet
    └── train/
        ├── n01440764
            ├── n01440764_10026.JPEG
            ├── n01440764_10027.JPEG
            ├── ...
        ├── n01443537
            ├── ...
    └── val/
        ├── ...

    LibriTTS
    └── train-clean-100/
        ├── 103/
            ├── 1241/
                ├── 103_1241_000000_000001.wav
                ├── ...
        ├── 1034
            ├── ...
    └── train-clean-360/
        ├── ...
    └── train-other-500/
        ├── ...
    └── dev-other/
        ├── ...
    └── dev-clean/
        ├── ...
    └── test-other/
        ├── ...
    └── test-clean/
        ├── ...
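Before launching training, it can help to confirm that a dataset root matches the layout above. The following is a hypothetical helper, not part of the repository; it only checks that the expected split directories exist and counts the files with a given extension under each one.

```python
from pathlib import Path
from typing import Dict, Iterable


def count_split_files(root: str, splits: Iterable[str], suffix: str) -> Dict[str, int]:
    """Count files ending in `suffix` under each split directory of `root`."""
    counts = {}
    for split in splits:
        split_dir = Path(root) / split
        if not split_dir.is_dir():
            raise FileNotFoundError(f"missing split directory: {split_dir}")
        # rglob descends into class, speaker, and chapter sub-directories.
        counts[split] = sum(1 for p in split_dir.rglob(f"*{suffix}") if p.is_file())
    return counts
```

For example, `count_split_files("path/to/imagenet", ["train", "val"], ".JPEG")` returns a per-split file count, where `path/to/imagenet` is a placeholder for wherever the dataset is stored.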

- Image Tokenizer Training

XDG_CACHE_HOME="dataset/ILSVRC2012" python main.py fit --config configs/imagenet_simvq_128_B.yaml

- Audio Tokenizer Training

DATA_ROOT="dataset/libritts" python main.py fit --config configs/libritts_24khz.yaml

- Image Tokenizer Evaluation

XDG_CACHE_HOME="dataset/ILSVRC2012" python evaluation.py --config_file vq_log/simvq_1k/size128/config.yaml --ckpt_path vq_log/simvq_1k/epoch=49-step=250250.ckpt

- Audio Tokenizer Evaluation

DATA_ROOT="dataset/libritts" python evaluation_speech.py --config_file vq_audio_log/simvq_1k/1second/config.yaml --ckpt_path vq_audio_log/simvq_1k/epoch=49-step=443500.ckpt

The codebase of SimVQ is adapted from Open-MAGVIT2 and WavTokenizer. Thanks for their wonderful work.