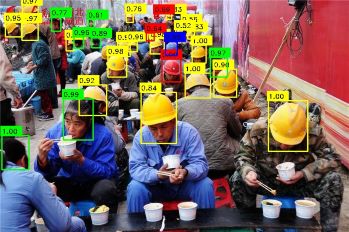

Detecting hardhat-use and identifying the corresponding colors of a hardhat on construction sites based on SSD framework.

This work tries to automate the monitoring of whether people are wearing hardhat on the construction sites and identify the corresponding colors. Currently, on the proposed dataset of GDUT-Hardhat Wearing Detection (GDUT-HWD), the SSD model combined with our reverse progressive attention (SSD-RPA) achieves 83.89% mAP with the input size 512×512. In addition, we have released models that are suitable for mobile devices.

-

Install dependencies the Caffe framework needs. You can visit the caffe official website and follow the instructions there to install the dependent libraries and drivers correctly.

-

Clone the SSD-RPA repository and compile the code.

git clone https://github.com/wujixiu/helmet-detection/tree/master/hardhat-wearing-detection/SSD-RPA.git cd SSD-RPA make all -j8 -

following the instructions in SSD (https://github.com/weiliu89/caffe/tree/ssd) to prepare data.

Suppose the code is running under the SSD-RPA directory and data is prepared.

- Download VGG_ILSVRC_16_layers_fc_reduced or peleenet and put them in models directory.

- Run the training scripts.

sh examples/Hardhat/SSD300/train_SSD300.shAll models are based on Caffe.

-

Environments

- Windows 10

- Visual Studio 2013 (Release x64)

- CPU

- OpenCV

-

C++

It is highly recommended to use mini-caffe to deploy model for its portability, or OpenCV3 with SSD's layer is enough if you do not use our proposed models with RPA module. Code with OpenCV3 deployment is available in old version. For mini-caffe deployment under Windows, we provide the complied files in mini-caffe-Release, or you can compile the mini-caffe yourself.

Notable that different models have different input size and different input scale. Details are shown below.

| Models | Input size | mean | input scale |

|---|---|---|---|

| SqueezeNet-SSD | 300×300 | (104,117,123) | 0.017 |

| MobileNet-SSD | 300×300 | (127.5,127.5,127.5) | 0.007843 |

| Pelee | 304×304 | (103.94,116.78,123.68) | 0.017 |

| Pelee-RPA | 304×304 | (103.94,116.78,123.68) | 0.017 |

| SSD-RPA300 | 300×300 | (104,117,123) | 1 |

| SSD-RPA512 | 512×512 | (104,117,123) | 1 |

- Dataset Statistics

- The number of images of GDUT-HWD is 3,174, which has been split into 1,587 for training (GDUT-HWD trainval) and 1,587 for testing (GDUT-HWD test). It contains 18,893 instances falling into 5 classes and each instance is annotated with a class label andits bounding box.

- Number of instances.

| Label | trainval | test | total |

|---|---|---|---|

| blue | 1251 | 1361 | 2612 |

| white | 1813 | 1968 | 3781 |

| yellow | 1936 | 1962 | 3898 |

| red | 2148 | 2083 | 4231 |

| none | 2171 | 2200 | 4371 |

| small (area ≤ 32×32) | 4237 | 4713 | 8950 |

| medium (32×32<area ≤ 96×96) | 4098 | 3826 | 7924 |

| large (area > 96×96) | 984 | 1035 | 2019 |

-

Data is available on Baidu Yun (646.6MB) (pwd:dstk).

-

The following pretrained models are available.

Data and weights are available in Google Drive