Official implementation of our DHM training mechanism, as described in "Dynamic Hierarchical Mimicking Towards Consistent Optimization Objectives" (CVPR'20) by Duo Li and Qifeng Chen, evaluated on the CIFAR-100 and ILSVRC2012 benchmarks with the PyTorch framework.

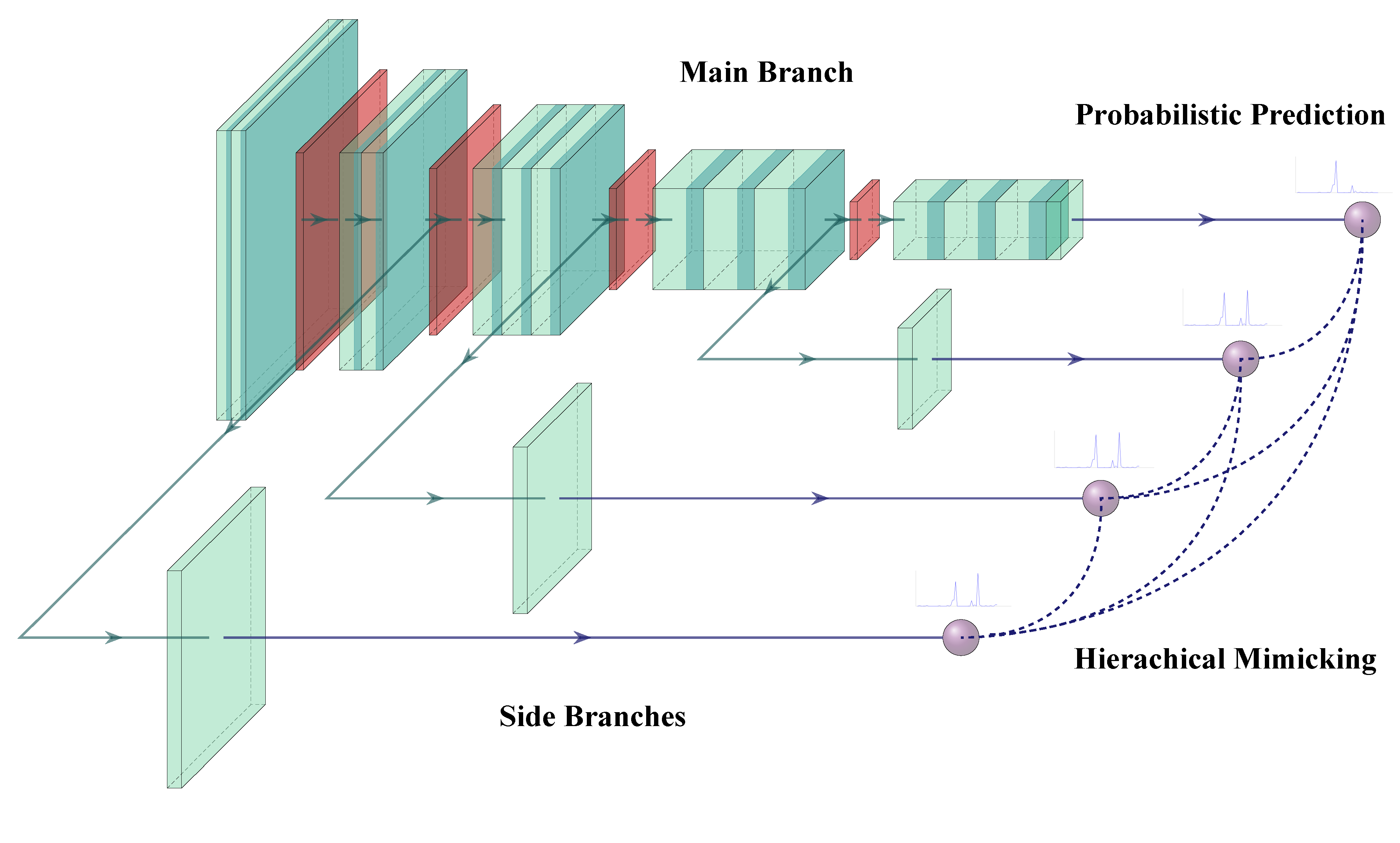

We dissolve the inherent deficiency inside the deeply supervised learning mechanism by introducing mimicking losses between the main branch and auxiliary branches located at different network depths. No computational overhead is introduced at inference, because the auxiliary branches are discarded after training.
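To make the idea of a mimicking loss concrete, here is a minimal sketch rather than the code shipped in this repository. It assumes the mimicking term is a pairwise KL divergence between the softmax outputs of the branches, added to the usual per-branch cross-entropy. The function name `mimicking_loss`, the `temperature` and `mimic_weight` parameters, and the choice to detach the target distribution are all assumptions made for illustration; refer to the paper and the training scripts for the exact formulation.

```python
import torch
import torch.nn.functional as F


def mimicking_loss(logits_list, targets, temperature=1.0, mimic_weight=1.0):
    """Per-branch cross-entropy plus pairwise KL terms between branches.

    logits_list[0] is treated as the main branch and the remaining entries
    as auxiliary branches. `temperature` and `mimic_weight` are hypothetical
    knobs, not options of this repository.
    """
    # Supervised term: every branch is trained against the ground-truth labels.
    ce = sum(F.cross_entropy(logits, targets) for logits in logits_list)

    # Mimicking term: each branch is pulled towards the prediction of every
    # other branch. Detaching the target is an assumption of this sketch.
    log_probs = [F.log_softmax(l / temperature, dim=1) for l in logits_list]
    probs = [lp.detach().exp() for lp in log_probs]
    kl = logits_list[0].new_zeros(())
    for i, lp in enumerate(log_probs):
        for j, p in enumerate(probs):
            if i != j:
                kl = kl + F.kl_div(lp, p, reduction="batchmean")
    return ce + mimic_weight * kl


# Toy usage: one main branch and two auxiliary branches on 100 classes.
torch.manual_seed(0)
main = torch.randn(4, 100, requires_grad=True)
aux1 = torch.randn(4, 100, requires_grad=True)
aux2 = torch.randn(4, 100, requires_grad=True)
labels = torch.randint(0, 100, (4,))
loss = mimicking_loss([main, aux1, aux2], labels)
loss.backward()
```

Only the main branch is needed at inference time, so the extra terms affect training cost but not the deployed model.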

- PyTorch 1.0+

- NVIDIA-DALI (in development, not recommended)

Download the ImageNet dataset and move validation images to labeled subfolders. To do this, you can use the following script: https://raw.githubusercontent.com/soumith/imagenetloader.torch/master/valprep.sh
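If you prefer not to run the shell script, the same reorganization can be sketched in Python. This is an illustrative alternative, not part of the repository: the function `sort_val_images` and its `labels` argument (a mapping from image file name to class folder name) are hypothetical, and you would need to build that mapping from the ground-truth annotations distributed with the dataset.

```python
import shutil
from pathlib import Path


def sort_val_images(val_dir, labels):
    """Move each validation image into a subfolder named after its class.

    `labels` maps an image file name to its class folder name (for ImageNet,
    a WordNet ID). It is a hypothetical input for this sketch.
    Returns the number of files that were moved.
    """
    val_dir = Path(val_dir)
    moved = 0
    for file_name, class_name in labels.items():
        src = val_dir / file_name
        if not src.is_file():
            continue  # already moved in a previous run, or missing
        class_dir = val_dir / class_name
        class_dir.mkdir(exist_ok=True)
        shutil.move(str(src), str(class_dir / file_name))
        moved += 1
    return moved
```

After the move, the validation directory has one subfolder per class, which is the layout expected by folder-based loaders such as `torchvision.datasets.ImageFolder`.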

| Architecture | Method | Top-1 / Top-5 Error (%) |
| --- | --- | --- |
| ResNet-18 | DSL | 29.728 / 10.450 |
| ResNet-18 | DHM | 28.714 / 9.940 |
| ResNet-50 | DSL | 23.874 / 7.074 |
| ResNet-50 | DHM | 23.430 / 6.764 |
| ResNet-101 | DSL | 22.260 / 6.128 |
| ResNet-101 | DHM | 21.348 / 5.684 |
| ResNet-152 | DSL | 21.602 / 5.824 |
| ResNet-152 | DHM | 20.810 / 5.396 |

* DSL denotes Deeply Supervised Learning

* DHM denotes Dynamic Hierarchical Mimicking (ours)
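For a quick read of the table above, the absolute error reduction of DHM over DSL can be computed per architecture. The snippet below is only a convenience sketch: the numbers are copied from the table, and the helper name `error_reduction` is made up for this example.

```python
# (top-1, top-5) error in percent, copied from the results table.
results = {
    "ResNet-18": {"DSL": (29.728, 10.450), "DHM": (28.714, 9.940)},
    "ResNet-50": {"DSL": (23.874, 7.074), "DHM": (23.430, 6.764)},
    "ResNet-101": {"DSL": (22.260, 6.128), "DHM": (21.348, 5.684)},
    "ResNet-152": {"DSL": (21.602, 5.824), "DHM": (20.810, 5.396)},
}


def error_reduction(results):
    """Return the absolute (top-1, top-5) error reduction of DHM over DSL."""
    gains = {}
    for arch, methods in results.items():
        dsl, dhm = methods["DSL"], methods["DHM"]
        # Round to three decimals to match the precision of the table.
        gains[arch] = tuple(round(b - m, 3) for b, m in zip(dsl, dhm))
    return gains


gains = error_reduction(results)
for arch, (top1, top5) in gains.items():
    print(f"{arch}: top-1 error -{top1:.3f}, top-5 error -{top5:.3f}")
```

On these numbers, the top-1 error drops by between 0.444 (ResNet-50) and 1.014 (ResNet-18) percentage points.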

Please refer to the training recipes for illustrative examples.

After downloading the pre-trained models, you can test a model on the ImageNet validation set with the following command:

    python imagenet.py \
        -a <resnet-model-name> \
        -d <path-to-ILSVRC2012-data> \
        --weight <pretrained-pth-file> \
        -e

If you find our work useful in your research, please consider citing:

    @InProceedings{Li_2020_CVPR,
        author = {Li, Duo and Chen, Qifeng},
        title = {Dynamic Hierarchical Mimicking Towards Consistent Optimization Objectives},
        booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
        month = {June},
        year = {2020}
    }