PCLs: Geometry-aware Neural Reconstruction of 3D Pose with Perspective Crop Layers

Frank Yu, Mathieu Salzmann, Pascal Fua, and Helge Rhodin

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

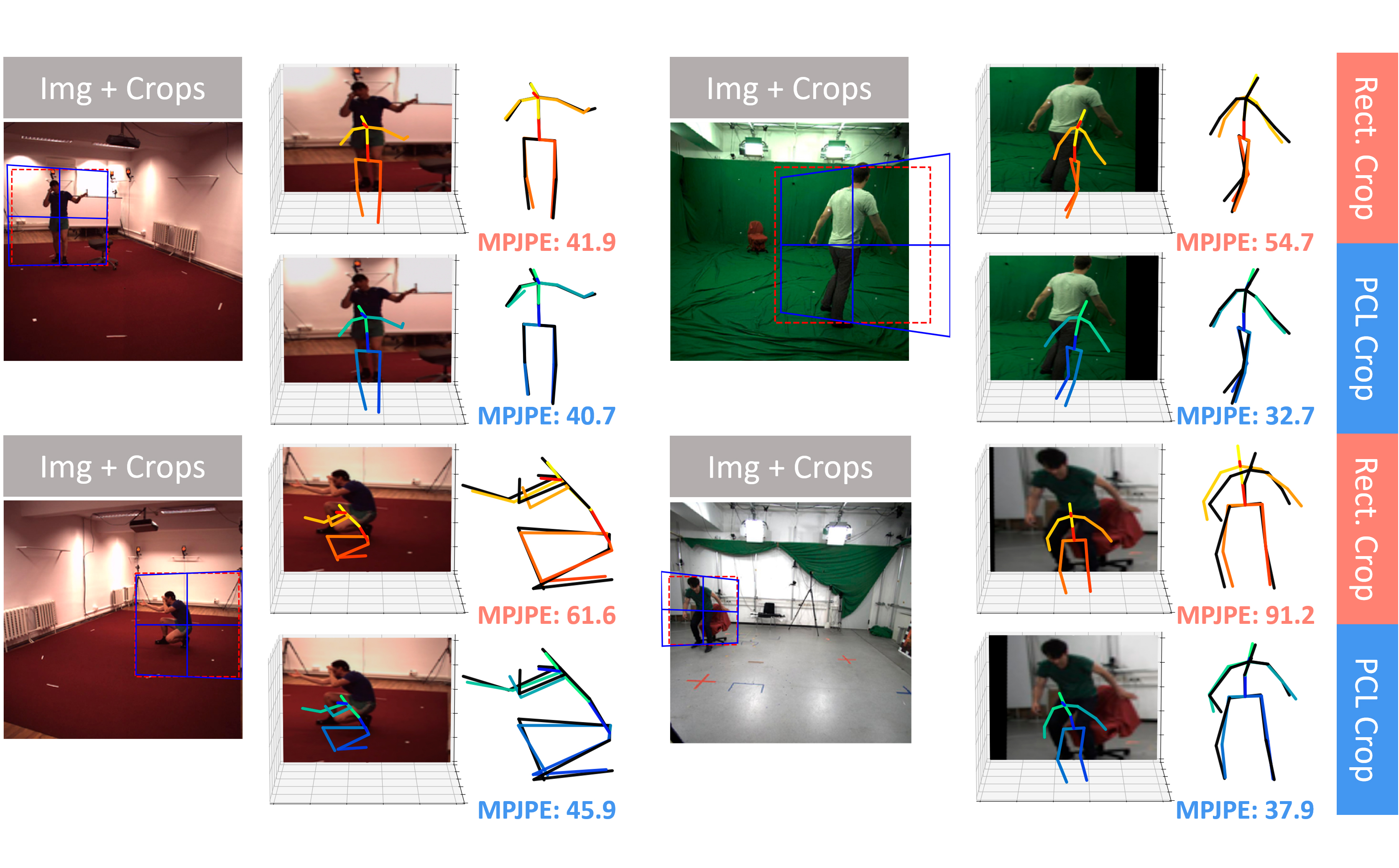

PyTorch implementation for removing perspective distortions from images or 2D poses using Perspective Crop Layers (PCLs) to improve the accuracy of 3D human pose estimation techniques. The examples below, taken from the Human3.6M (left) and MPI-INF-3DHP (right) datasets, show this perspective distortion and its correction using PCL.

- Linux or Windows

- NVIDIA GPU + CUDA CuDNN

- Python 3.6 (Tested on Python 3.6.2)

- PyTorch 1.6.0

- Python dependencies listed in requirements.txt

To get started, please run the following commands:

conda create -n pcl python=3.6.2

conda activate pcl

conda install --file requirements.txt

conda install -c anaconda ipykernel

python -m ipykernel install --user --name=pcl

conda install pytorch=1.6.0 torchvision=0.7.0 cudatoolkit=10.2 -c pytorch

We have included two Jupyter notebook demos for trying out PCLs. pcl_demo.ipynb (RECOMMENDED) covers the general setting: it does not require any pretrained models and walks step by step through how to use PCL. humanPose-demo.ipynb is geared towards human pose estimation on extracted samples from Human3.6M and MPI-INF-3DHP, and requires a pretrained model and additional data.

- To process an image using PCL, use the following lines of code:

import torch.nn.functional as F  # provides grid_sample

P_virt2orig, R_virt2orig, K_virt = pcl.pcl_transforms({Crop Position [px; Nx2]}, {Crop Scale [px; Nx2]}, {Camera Intrinsic Matrix [px; Nx3x3]})

grid_perspective = pcl.perspective_grid(P_virt2orig, {Input IMG Dim.}, {Output IMG Dim}, transform_to_pytorch=True)

PCL_cropped_img = F.grid_sample({Original IMG}, grid_perspective)

NOTE: All input tensors to PCL MUST be in pixel coordinates (including the camera matrix). This means that, for a 512x512 image, the coordinates should lie in the range [0, 512).
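
If your calibration or crop boxes are stored in normalized units, they would need to be rescaled to pixels before being passed to PCL. The sketch below assumes coordinates that were normalized to [0, 1) by dividing by the image width and height. The helper names are hypothetical and not part of the pcl module, and plain Python lists stand in for the batched tensors.

```python
# Hypothetical helpers (not part of the pcl module). Assumes inputs were
# normalized to [0, 1) by dividing x by the image width and y by the height.

def point_to_pixels(point_norm, width, height):
    """Rescale a normalized (x, y) position or scale to pixel units."""
    x, y = point_norm
    return [x * width, y * height]


def intrinsics_to_pixels(K_norm, width, height):
    """Rescale a normalized 3x3 intrinsic matrix to pixel units.

    Row 0 (fx, skew, cx) scales with the width and row 1 (0, fy, cy) with
    the height; the homogeneous row 2 is left unchanged.
    """
    return [
        [v * width for v in K_norm[0]],
        [v * height for v in K_norm[1]],
        list(K_norm[2]),
    ]


# Example for a 512x512 image.
K_norm = [[1.2, 0.0, 0.5],
          [0.0, 1.2, 0.5],
          [0.0, 0.0, 1.0]]
K_px = intrinsics_to_pixels(K_norm, 512, 512)
crop_position_px = point_to_pixels([0.25, 0.75], 512, 512)
```

If your data uses a different normalization (for example [-1, 1]), the rescaling has to be adapted accordingly.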

- Once you pass this to the network, you must convert the predicted pose (which is in virtual camera coordinates) back to world coordinates. Please use the following code:

NOTE: If the output of the network is normalized, you must first denormalize it before running this line.
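
Denormalization here means undoing whatever standardization was applied to the 3D targets during training. A minimal sketch, assuming a per-joint, per-coordinate mean and standard deviation were used (`pose_mean`, `pose_std`, and `denormalize_pose` are hypothetical names, and nested lists stand in for tensors):

```python
# Hypothetical sketch: undo a per-coordinate standardization of the network
# output. pose_mean / pose_std are assumed to be the training-set statistics.

def denormalize_pose(pose_norm, pose_mean, pose_std):
    """Map a normalized pose (list of [x, y, z] joints) back to its original units."""
    return [
        [n * s + m for n, m, s in zip(joint, mean, std)]
        for joint, mean, std in zip(pose_norm, pose_mean, pose_std)
    ]


pose_norm = [[0.0, 1.0, -1.0], [2.0, 0.0, 0.5]]      # two joints
pose_mean = [[10.0, 20.0, 30.0], [0.0, 0.0, 100.0]]
pose_std = [[2.0, 4.0, 5.0], [1.0, 1.0, 10.0]]

# The denormalized pose is what would then be converted out of the
# virtual camera coordinates.
virt_3d_pose = denormalize_pose(pose_norm, pose_mean, pose_std)
```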

# Using the same R_virt2orig from the above command

pose_3d = pcl.virtPose2CameraPose(virt_3d_pose, R_virt2orig, batch_size, num_joints)

- To process an input 2D pose, please follow these steps:

NOTE: The input 2D pose should not be root centered yet (i.e., the hip joint 2D coordinate should NOT be (0, 0)); root centering should be done afterwards (possibly during the normalization step).

virt_2d_pose, R_virt2orig, P_virt2orig = pcl.pcl_transforms_2d(pose_2d, {Crop Position [px; Nx2]}, {Crop Scale [px; Nx2]}, {Camera Intrinsic Matrix [px; Nx3x3]})

- Normalize virt_2d_pose with the mean and standard deviation of virt_2d_pose over the whole dataset. This normalized 2D pose is what should be passed to the network.
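
The normalization step could be sketched as follows. This is only an illustration: it assumes statistics are computed separately for every joint and coordinate, the helper names are hypothetical (not part of the pcl module), and nested lists stand in for the tensors used in practice.

```python
import statistics

# Hypothetical helpers (not part of the pcl module). Assumes statistics are
# kept separately for every joint and coordinate.

def fit_pose_stats(poses):
    """Return per-joint, per-coordinate (mean, std) over a list of 2D poses."""
    num_joints = len(poses[0])
    mean, std = [], []
    for j in range(num_joints):
        xs = [pose[j][0] for pose in poses]
        ys = [pose[j][1] for pose in poses]
        mean.append([statistics.mean(xs), statistics.mean(ys)])
        std.append([statistics.pstdev(xs), statistics.pstdev(ys)])
    return mean, std


def normalize_pose(pose, mean, std, eps=1e-8):
    """Standardize one pose; eps guards against a zero standard deviation."""
    return [
        [(v - m) / (s + eps) for v, m, s in zip(joint, joint_mean, joint_std)]
        for joint, joint_mean, joint_std in zip(pose, mean, std)
    ]


# Toy "dataset" of two poses with two joints each.
dataset = [
    [[0.0, 0.0], [2.0, 4.0]],
    [[2.0, 4.0], [4.0, 8.0]],
]
mean, std = fit_pose_stats(dataset)
network_input = normalize_pose(dataset[0], mean, std)
```

The statistics should be computed once over the training set and reused unchanged at test time, so that training and evaluation inputs share the same scale.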

- Once the network has made its prediction, use the following code to convert the predicted 3D pose in virtual camera coordinates back to world coordinates:

# Using the same R_virt2orig from the above command

pose_3d = pcl.virtPose2CameraPose(virt_3d_pose, R_virt2orig, batch_size, num_joints)

Image coordinates: First coordinate is the horizontal axis, second coordinate is the vertical axis, and the origin is in the top left.

3D coordinates (left-handed coordinate system): First coordinate is the horizontal axis (left to right), second coordinate is the vertical axis (up), and the third is in depth direction (positive values in front of camera).
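
If your 3D data follows a different camera convention, it has to be converted first. As one example, the sketch below assumes source poses in the common OpenCV-style convention (x right, y down, z forward), which is an assumption about your data and not something this repository prescribes. Negating the second coordinate then yields the left-handed convention described above. The function name is hypothetical.

```python
# Assumption: the input follows the OpenCV-style camera convention
# (x right, y down, z forward). Negating y gives x right, y up, z forward.

def opencv_to_y_up(pose_3d):
    """Flip the vertical axis of a pose given as a list of [x, y, z] joints."""
    return [[x, -y, z] for x, y, z in pose_3d]


pose_opencv = [[0.1, 0.5, 3.0], [-0.2, -0.4, 2.5]]
pose_y_up = opencv_to_y_up(pose_opencv)

# Applying the flip twice restores the original pose.
pose_roundtrip = opencv_to_y_up(pose_y_up)
```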

Please follow the instructions from Margipose for downloading, pre-processing, and storing the data.

Included in the GitHub repository are 4 sets of pretrained models that are used in humanPose-demo.ipynb.

We have also included training and evaluation code.

This work is licensed under the MIT License. See LICENSE for details.

If you find our code helpful, please consider citing the following paper:

@article{yu2020pcls,

title={PCLs: Geometry-aware Neural Reconstruction of 3D Pose with Perspective Crop Layers},

author={Yu, Frank and Salzmann, Mathieu and Fua, Pascal and Rhodin, Helge},

journal={arXiv preprint arXiv:2011.13607},

year={2020}

}

- We would like to thank Dushyant Mehta and Jim Little for valuable discussion and feedback. Thank you to Shih-Yang Su for his feedback in the creation of this repository. This work was funded in part by Compute Canada and a Microsoft Swiss JRC project. Frank Yu was supported by NSERC-CGSM.

- We would also like to thank the authors of Nibali et al. 2018, Martinez et al. 2017, and Pavllo et al. 2019 for making their implementations available.

- Our code builds upon 3d-pose-baseline, Margipose, and VideoPose3D.