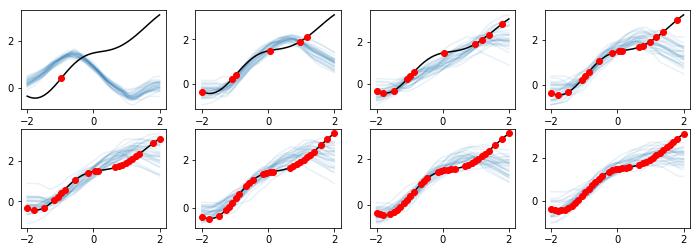

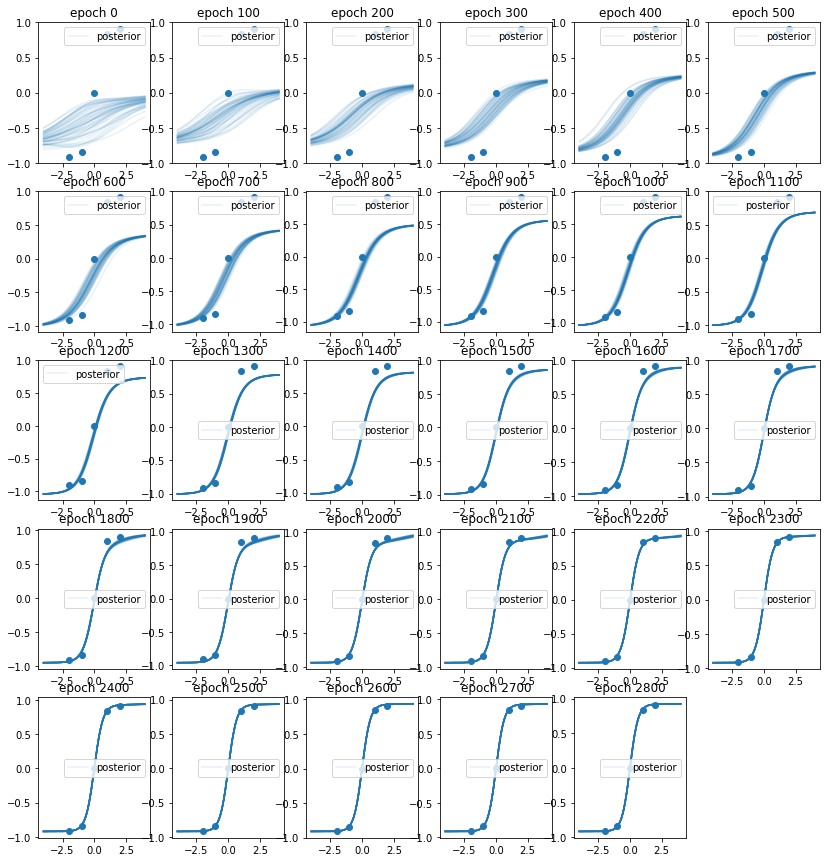

Implementation of Neural Processes (NPs), introduced by Garnelo et al. (DeepMind), in Chainer. NPs can be seen as a generalization of the recently published generative query networks (GQN) from DeepMind (Eslami and Rezende et al., Science 2018). NPs are a class of neural latent variable models that combine properties of Gaussian processes (GPs) and neural networks (NNs). Like GPs, NPs define distributions over functions, are capable of rapid adaptation to new observations, and can estimate the uncertainty in their predictions. Like NNs, NPs are computationally efficient during training and evaluation, and they also learn to adapt their priors to data.
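To make the idea concrete, here is a minimal sketch of an NP forward pass: encode each context pair, average the encodings, map the average to a Gaussian over a latent `z`, and decode target inputs under samples of `z`. It is not the code in this repository. It uses plain NumPy (Python 3) instead of Chainer, the weights are random and untrained, and all names and sizes (`init_mlp`, `latent_params`, `predict`, `R_DIM`, `Z_DIM`) are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Random (untrained) weights for a small MLP; illustration only."""
    return [(rng.normal(0.0, 0.5, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    """tanh hidden layers followed by a linear output layer."""
    for w, b in params[:-1]:
        x = np.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b

def softplus(a):
    return np.logaddexp(0.0, a)

R_DIM, Z_DIM = 8, 4                         # hypothetical sizes
encoder = init_mlp([2, 16, R_DIM])          # h: (x_i, y_i) -> r_i
latent_head = init_mlp([R_DIM, 2 * Z_DIM])  # r -> (mu_z, raw sigma_z)
decoder = init_mlp([1 + Z_DIM, 16, 2])      # g: (x*, z) -> (mu_y, raw sigma_y)

def latent_params(x_ctx, y_ctx):
    """Summarize the context set as a Gaussian over the latent z."""
    r_i = mlp(encoder, np.concatenate([x_ctx, y_ctx], axis=1))
    r = r_i.mean(axis=0)                    # order-invariant aggregation
    out = mlp(latent_head, r)
    mu, raw = out[:Z_DIM], out[Z_DIM:]
    return mu, 0.1 + 0.9 * softplus(raw)    # keep sigma strictly positive

def predict(x_ctx, y_ctx, x_tgt, n_samples=5):
    """Each sample of z yields one function evaluated at x_tgt."""
    mu_z, sigma_z = latent_params(x_ctx, y_ctx)
    means, stds = [], []
    for _ in range(n_samples):
        z = mu_z + sigma_z * rng.standard_normal(Z_DIM)
        z_rep = np.tile(z, (x_tgt.shape[0], 1))
        out = mlp(decoder, np.concatenate([x_tgt, z_rep], axis=1))
        means.append(out[:, 0])
        stds.append(0.1 + 0.9 * softplus(out[:, 1]))
    return np.stack(means), np.stack(stds)

# Three context points from sin(x), ten target inputs.
x_ctx = np.array([[-1.0], [0.0], [1.0]])
y_ctx = np.sin(x_ctx)
x_tgt = np.linspace(-2.0, 2.0, 10).reshape(-1, 1)
means, stds = predict(x_ctx, y_ctx, x_tgt)
print(means.shape, stds.shape)
```

The spread across the sampled functions in `means` is what gives an NP its uncertainty estimate, and averaging the per-point encodings makes the prediction independent of the order of the context points. A trained model would fit the weights by maximizing a variational lower bound, which this sketch omits.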

A blog post about this repository is available (in Japanese only).

The experiments and implementation are inspired by a blog post by Kaspar Märtens.

MIT license. Contributions welcome.

Python 2.x, Chainer 4.3.1, NumPy, Matplotlib

https://github.com/yu-takagi/chainer-neuralprocesses/blob/master/exp_1d_func.ipynb

https://github.com/yu-takagi/chainer-neuralprocesses/blob/master/exp_1d_func_gp_train.ipynb