Dual Augmented Lagrangian (DAL) algorithm for sparse/low-rank reconstruction and learning. For more details, check out our JMLR paper, book chapter, talk, and slides.

m = 1024; n = 4096; k = round(0.04*n); A=randn(m,n);

w0=randsparse(n,k); bb=A*w0+0.01*randn(m,1);

lambda=0.1*max(abs(A'*bb));

[ww,stat]=dalsql1(zeros(n,1), A, bb, lambda); m = 1024; n = 4096; k = round(0.04*n); A=randn(m,n);

w0=randsparse(n,k); yy=sign(A*w0+0.01*randn(m,1));

lambda=0.1*max(abs(A'*yy));

[ww,bias,stat]=dallrl1(zeros(n,1), 0, A, yy, lambda); m = 1024; n = [64 64]; k = round(0.1*n(1)); A=randn(m,prod(n));

w0=randsparse(n,k); yy=sign(A*w0(:)+0.01*randn(m,1));

lambda=0.1*max(sqrt(sum(reshape(A'*yy/2,n).^2)));

[ww,bias,stat]=dallrgl(zeros(n), 0, A, yy, lambda); m = 2048; n = [64 64]; r = round(0.1*n(1)); A=randn(m,prod(n));

w0=randsparse(n,'rank',r); yy=sign(A*w0(:)+0.01*randn(m,1));

lambda=0.2*norm(reshape(A'*yy/2,n));

[ww,bias,stat]=dallrds(zeros(n), 0, A, yy, lambda); n = [640 640]; r = 6; m = 2*r*sum(n);

w0=randsparse(n,'rank',r);

ind=randperm(prod(n)); ind=ind(1:m);

A=sparse(1:m, ind, ones(1,m), m, prod(n));

yy=A*w0(:)+0.01*randn(m,1);

lambda=0.3*norm(reshape(A'*yy,n));

[ww,stat]=dalsqds(zeros(n),A,yy,lambda,'solver','qn'); m = 1024; n = 4096; k = round(0.04*n); A=randn(m,n);

w0=randsparse(n,k); bb=A*w0+0.01*randn(m,1);

pp=0.1*abs(A'*bb);

[ww,stat]=dalsqal1(zeros(n,1), A, bb, pp); m = 1024; n = 4096; k = round(0.04*n); A=randn(m,n);

w0=randsparse(n,k); bb=A*w0+0.01*randn(m,1);

lambda=0.1*max(abs(A'*bb));

weight=(1:m)';

[ww,stat]=dalsqwl1(zeros(n,1), A, bb, lambda, weight);

figure, plot(bb-A*ww); m = 1024; n = 4096; k = round(0.04*n); A=randn(m,n);

w0=randsparse(n,k); bb=A*w0+0.01*randn(m,1);

lambda=0.1*max(abs(A'*bb));

[ww,stat]=dalsqen(zeros(n,1), A, bb, lambda, 0.5);Try

exp_hsglTo get

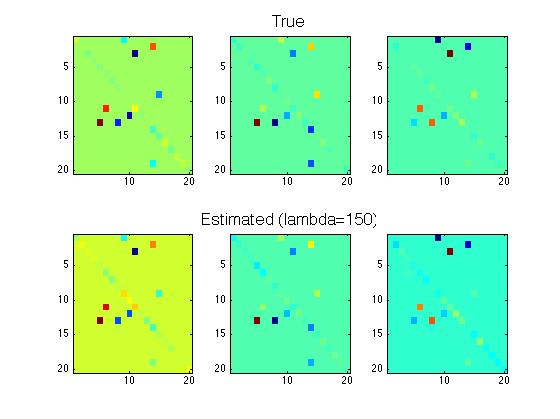

Here the model is a sparsely connected 3rd order multi-variate AR model with 20 variables. The top row shows the true coefficients. The bottom row shows the estimated coefficients.

See exp_hsgl.m and our paper for more details.

- Ryota Tomioka, Taiji Suzuki, and Masashi Sugiyama (2011) Augmented Lagrangian Methods for Learning, Selecting, and Combining Features. In Suvrit Sra, Sebastian Nowozin, and Stephen J. Wright, editors, Optimization for Machine Learning. MIT Press.

- Ryota Tomioka, Taiji Suzuki, Masashi Sugiyama, and Hisashi Kashima (2010)A Fast Augmented Lagrangian Algorithm for Learning Low-Rank Matrices. In Proc. of the 27 th Annual International Conference on Machine Learning (ICML 2010), Haifa, Israel. [Slides] [Support page]

- Ryota Tomioka, Taiji Suzuki, and Masashi Sugiyama (2011) Super-Linear Convergence of Dual Augmented Lagrangian Algorithm for Sparse Learning. JMLR 12: 1537-1586. [Support Page]

- Ryota Tomioka & Masashi Sugiyama (2009) Dual Augmented Lagrangian Method for Efficient Sparse Reconstruction IEEE Signal Proccesing Letters, 16 (12) pp. 1067-1070.

- Yamada, M., Jitkrittum, W., Sigal, L., Xing, E. P., & Sugiyama, M. (2014) High-dimensional feature selection by feature-wise kernelized lasso. Neural Computatio 26(1):185-207.

- Kriti Puniyani, Seyoung Kim and Eric P. Xing (2010) Multi-population GWA mapping via multi-task regularized regression. Bioinformatics, 26 (12): pp. i208-i216.

- S. Haufe, R. Tomioka, T. Dickhaus, C. Sannelli, B. Blankertz, G. Nolte, K.-R. Müller (2010) Large-scale EEG/MEG source localization with spatial flexibility. Neuroimage.

- Stefan Haufe, Ryota Tomioka, Guido Nolte, Klaus-Robert Müller, and Motoaki Kawanabe (2010) Modeling sparse connectivity between underlying brain sources for EEG/MEG. IEEE Trans. Biomed. Eng. 57(8), pp. 1954-1963.

I would like to thank Stefan Haufe, Christian Kothe, Marius Kloft, Artemy Kolchinsky, and Makoto Yamada for testing the software and pointing out some problems. The non-negative lasso extension was contributed by Shigeyuki Oba. The weighted lasso was contributed by Satoshi Hara. I was supported by the Global COE program (Computationism as a Foundation for the Sciences) until March 2009.