🥳 Welcome! Visit our website by clicking here

We provide two datasets, TartanAir and SubT-MRS, that aim to push the robustness of SLAM algorithms in challenging environments and to advance sim-to-real transfer. The datasets cover perceptually degraded environments: darkness, airborne obscurants such as fog, dust, and smoke, and self-similar areas that lack prominent perceptual features. One of our hypotheses is that in such challenging cases a robot must rely on multiple sensors to track and localize itself reliably. We therefore provide a rich set of sensor modalities, including RGB images, LiDAR points, IMU measurements, and thermal images.

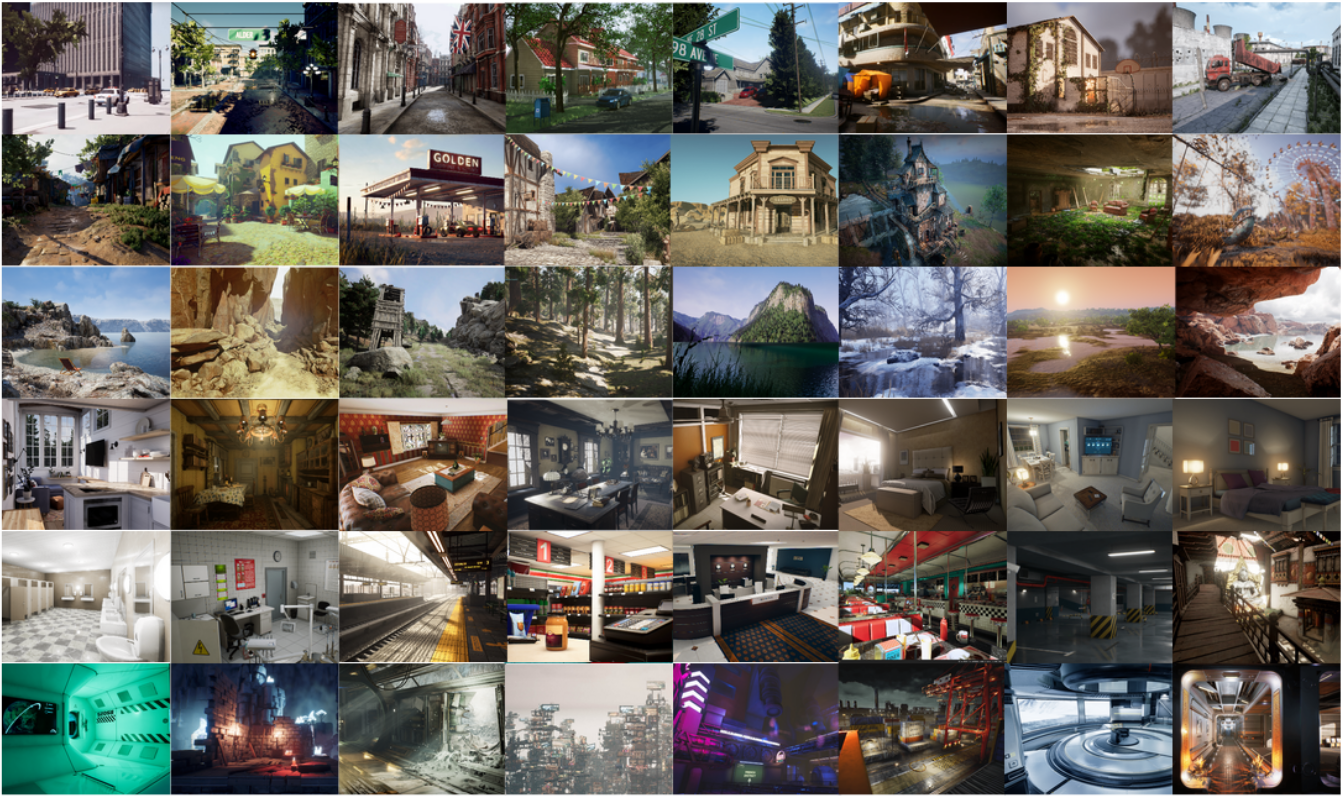

The TartanAir dataset is collected in photo-realistic simulation environments based on the AirSim project. The dataset specifically targets challenging environments with changing lighting conditions, adverse weather, and dynamic objects.

Key features of our dataset:

- Large, diverse, realistic data: We collect the data in diverse environments with different styles, covering indoor and outdoor scenes, different weather conditions, different seasons, and urban and rural settings.

- Multimodal ground truth labels: We provide RGB stereo, depth, optical flow, and semantic segmentation images, which facilitate the training and evaluation of various visual SLAM methods.

- Diversity of motion patterns: Our dataset covers far more diverse motion combinations in 3D space than existing datasets, which makes it significantly more difficult.

- Challenging scenes: We include challenging scenes with difficult lighting conditions, day-night alternation, low illumination, weather effects (rain, snow, wind, and fog), and seasonal changes.

Please refer to the TartanAir Dataset and the paper for more information.

🎈 You can find more information about TartanAir from the links here

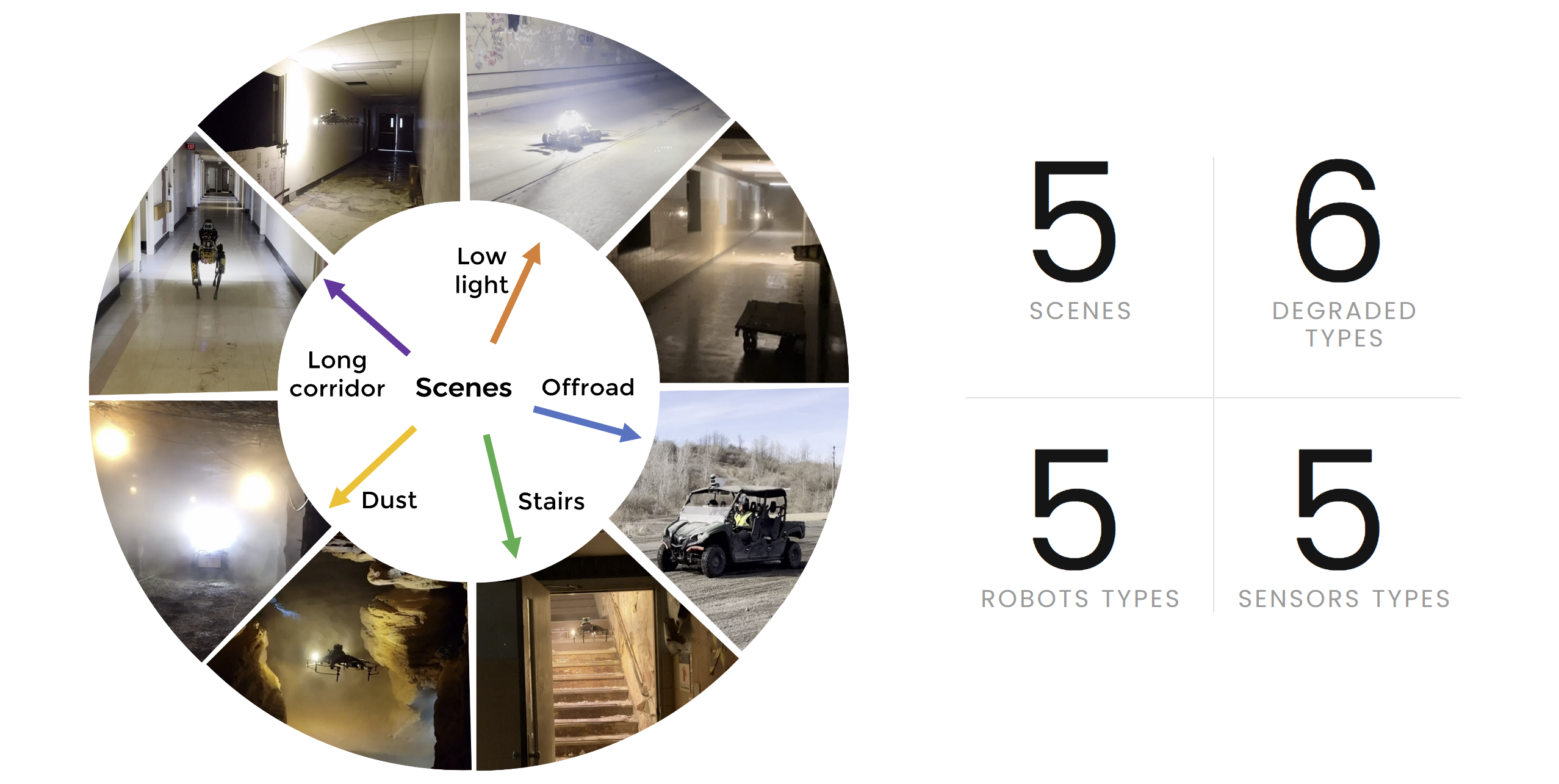

The SubT-MRS Dataset (Subterranean, Multi-Robot, Multi-Spectral-Inertial, Multi-Degraded Dataset for Robust SLAM) is a real-world collection of challenging datasets recorded in subterranean environments, including caves, urban areas, and tunnels. It is designed to test robust SLAM and is a multi-robot dataset, featuring UGV, UAV, and Spot robots, each exhibiting different motions. The data are multi-spectral, combining visual, LiDAR, thermal, and inertial measurements, which enables exploration under demanding conditions such as darkness, smoke, dust, and geometrically degraded environments.

Key features of our dataset:

- Multiple Modalities: Our dataset includes hardware time-synchronized data from 4 RGB cameras, 1 LiDAR, 1 IMU, and 1 thermal camera, providing diverse and precise sensor inputs.

- Diverse Scenarios: Collected from multiple locations, the dataset exhibits varying environmental setups, encompassing indoors, outdoors, mixed indoor-outdoor, underground, off-road, and buildings, among others.

- Multi-Degraded: By incorporating multiple sensor modalities and challenging conditions like fog, snow, smoke, and illumination changes, the dataset introduces various levels of sensor degradation.

- Heterogeneous Kinematic Profiles: The SubT-MRS Dataset uniquely features time-synchronized sensor data from diverse vehicles, including RC cars, legged robots, drones, and handheld devices, each operating within distinct speed ranges.

| Name | Location | Robot | Sensors | Description | Degraded types | Length (duration) | Return to origin | Size |
|---|---|---|---|---|---|---|---|---|
| Subt Canary | Subt | subt_canary | IMU, Lidar | UAV goes in part of the subterranean environment | Geometry | 329 m (591.3 s) | No | 811.2 MB |
| Subt DS3 | Subt | subt_ds3 | IMU, Lidar | UAV goes in part of the subterranean environment | Geometry | 350.6 m (607.42 s) | No | 576.4 MB |
| Subt DS4 | Subt | subt_ds4 | IMU, Lidar | UAV goes in part of the subterranean environment | Geometry | 238 m (484.7 s) | No | 308.3 MB |
| Subt R1 | Subt | subt_r1 | IMU, Lidar | UGV goes in part of the subterranean environment | Geometry | 436.4 m (600 s) | No | 2.11 GB |
| Subt R2 | Subt | subt_r2 | IMU, Lidar | UGV goes in part of the subterranean environment | Geometry | 536 m (1909 s) | No | 1.96 GB |
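The Length column pairs a path length with a duration, so a rough mean speed per sequence follows by division. The sketch below is only an illustration: the helper names `parse_length` and `mean_speed` are hypothetical, and the values are copied from the table rather than read from the dataset files.

```python
import re

# Length cells from the table, in the form "<metres>m(<seconds>s)".
LENGTHS = {
    "Subt Canary": "329m(591.3s)",
    "Subt DS3": "350.6m(607.42s)",
    "Subt DS4": "238m(484.7s)",
    "Subt R1": "436.4m(600s)",
    "Subt R2": "536m(1909s)",
}

# Tolerates optional spaces, e.g. "329 m (591.3 s)".
_PATTERN = re.compile(r"^\s*([\d.]+)\s*m\s*\(\s*([\d.]+)\s*s\s*\)\s*$")


def parse_length(cell):
    """Return (metres, seconds) parsed from one Length cell."""
    match = _PATTERN.match(cell)
    if match is None:
        raise ValueError(f"unrecognised Length cell: {cell!r}")
    return float(match.group(1)), float(match.group(2))


def mean_speed(cell):
    """Mean speed in m/s: path length divided by duration."""
    metres, seconds = parse_length(cell)
    return metres / seconds


if __name__ == "__main__":
    for name, cell in LENGTHS.items():
        print(f"{name}: {mean_speed(cell):.2f} m/s")
```

This is an average over the whole sequence, so it hides pauses and bursts of fast motion.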

In ROS1, velodyne_msgs/VelodyneScan messages can be converted to sensor_msgs/PointCloud2 messages with this driver tool. Follow the instructions below to use the driver.

- Prerequisite

  ROS1, preferably ROS Noetic or ROS Melodic, on which the driver has been tested. The steps below assume that the ROS1 installation is complete.

- Update package index files

      sudo apt-get update

- Install ROS packages

      rosversion -d | xargs bash -c 'sudo apt-get install -y ros-$0-pcl-ros ros-$0-roslint ros-$0-diagnostic-updater ros-$0-angles'

- Install other packages

      sudo apt-get install -y libpcap-dev libyaml-cpp-dev

- Download and build the driver

  Note that the driver code was tested on the master branch in August 2023.

      mkdir -p velodyne_ws/src
      cd velodyne_ws/src
      git clone https://github.com/ros-drivers/velodyne.git
      cd ..
      catkin_make

- Run the driver

      source devel/setup.bash
      roslaunch velodyne_pointcloud VLP16_points.launch

  This will launch several ROS nodes, which subscribe to the topic /velodyne_packets for input velodyne_msgs/VelodyneScan messages and publish sensor_msgs/PointCloud2 messages to the topic /velodyne_points.

For hardware information, visit the documentation here

We provide three exciting challenge tracks: the Visual-Inertial Track, the LiDAR-Inertial Track, and the Sensor Fusion Track!

You can participate in the Visual-Inertial challenge, the LiDAR-Inertial challenge, or the Sensor Fusion challenge.

See the three challenge track web pages for the requirements on estimated trajectories. The three challenge track web pages can be accessed here. In addition, every participating team is required to submit a report describing their algorithm. A template for the report is here.

Is the final scoring based on the aggregate performance across all three tracks?

No. The tracks are scored independently: three separate awards will be given for each track, and your SLAM performance in the Sensor Fusion track will not affect your scores in the other tracks.

May I run my own calibration?

While we appreciate your initiative, running your own calibration is not necessary. We have already provided both intrinsic and extrinsic calibration for each sequence. These calibrated values are carefully computed to ensure accurate results.

How are submissions ranked?

Submissions are ranked on the completeness of the trajectory and on position accuracy, measured by ATE (absolute trajectory error) and RPE (relative pose error).

We will use ATE and RPE directly to evaluate the accuracy of the trajectory.
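To make the ranking criteria concrete, here is a minimal sketch of the three quantities. It is not the challenge's scoring code, and it makes several assumptions:

- Trajectories are lists of `(t, x, y, z)` samples sorted by time.
- The estimate is already expressed in the ground-truth frame, so the rigid alignment usually applied before ATE is skipped.
- RPE is reduced to its translational part, computed from position differences only.
- "Completeness" is taken to be the fraction of ground-truth samples that have an estimate within a hypothetical tolerance `max_dt`.

```python
import math


def associate(gt, est, max_dt=0.01):
    """Pair each ground-truth sample with the nearest estimate in time.

    gt and est are lists of (t, x, y, z) sorted by t. Pairs whose
    timestamps differ by more than max_dt seconds are dropped.
    """
    pairs = []
    j = 0
    for t, *p in gt:
        # Move forward while the next estimate is at least as close in time.
        while j + 1 < len(est) and abs(est[j + 1][0] - t) <= abs(est[j][0] - t):
            j += 1
        if est and abs(est[j][0] - t) <= max_dt:
            pairs.append((tuple(p), tuple(est[j][1:])))
    return pairs


def completeness(gt, pairs):
    """Fraction of ground-truth samples that received a matched estimate."""
    return len(pairs) / len(gt) if gt else 0.0


def ate_rmse(pairs):
    """RMSE of position error over matched pairs (no alignment step)."""
    if not pairs:
        raise ValueError("no matched poses")
    return math.sqrt(sum(math.dist(g, e) ** 2 for g, e in pairs) / len(pairs))


def rpe_rmse(pairs, delta=1):
    """RMSE of the difference between ground-truth and estimated
    displacements over a window of delta matched samples."""
    sq_errors = []
    for (g0, e0), (g1, e1) in zip(pairs, pairs[delta:]):
        d_gt = [b - a for a, b in zip(g0, g1)]
        d_est = [b - a for a, b in zip(e0, e1)]
        sq_errors.append(math.dist(d_gt, d_est) ** 2)
    if not sq_errors:
        raise ValueError("trajectory too short for this delta")
    return math.sqrt(sum(sq_errors) / len(sq_errors))


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0, 0.0), (1.0, 1.0, 0.0, 0.0), (2.0, 2.0, 0.0, 0.0)]
    # Estimate has a constant 0.1 m offset in y and misses the last sample.
    est = [(0.0, 0.0, 0.1, 0.0), (1.0, 1.0, 0.1, 0.0)]
    pairs = associate(gt, est)
    print(completeness(gt, pairs), ate_rmse(pairs), rpe_rmse(pairs))
```

In the toy data, the constant offset shows up in ATE but cancels in RPE, because RPE compares displacements and so measures local drift, not global position error. The missing final sample lowers completeness without affecting either accuracy metric.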