Currently, we provide only the Hugging Face demo code. The CODE dataset and training scripts will be released once the paper is accepted.

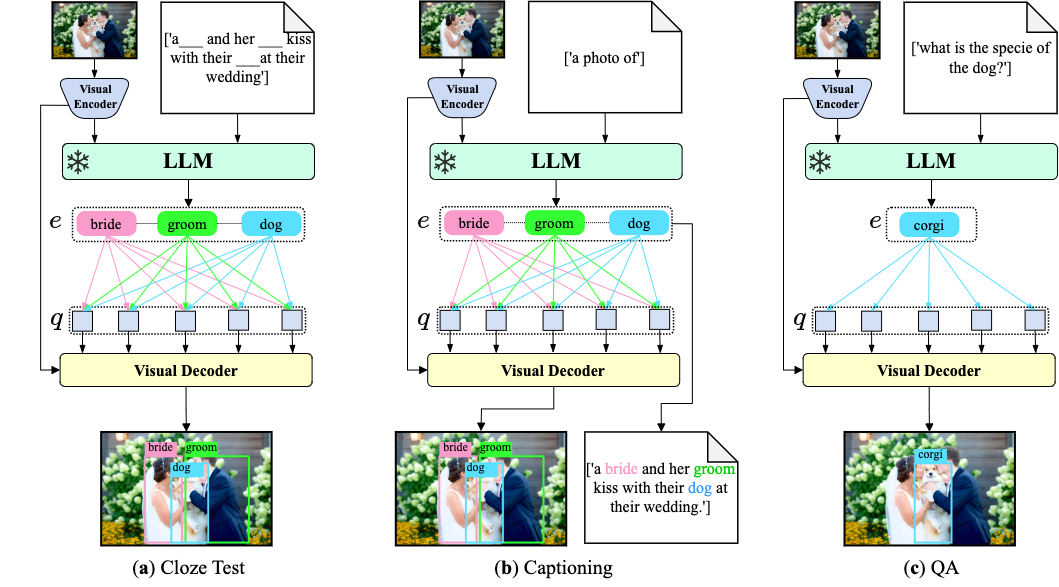

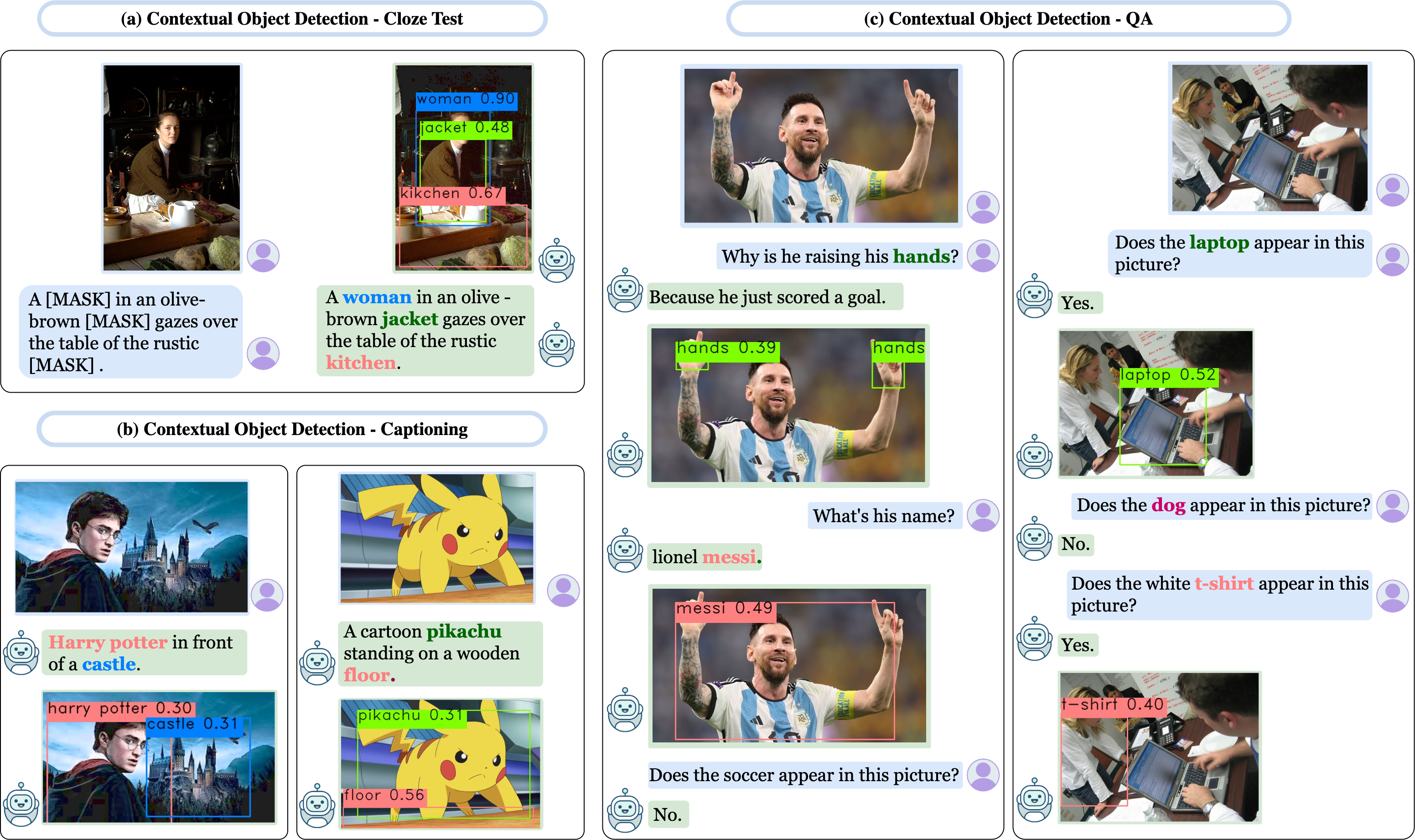

Recent Multimodal Large Language Models (MLLMs) perform remarkably well on vision-language tasks such as image captioning and question answering, but they lack an essential perception ability: object detection. In this work, we address this limitation by introducing a new research problem, contextual object detection, which aims to understand visible objects within different human-AI interactive contexts. We investigate three representative scenarios: the language cloze test, visual captioning, and question answering.

| Task | Language Input | Output(s) | Remark |
|---|---|---|---|
| Object Detection | ✗ | box, class label | pre-defined class labels |
| Open-Vocabulary Object Detection | (optional) class names for CLIP | box, class label | pre-defined class labels |
| Referring Expression Comprehension | complete referring expression | box that the expression refers to | / |
| Contextual Cloze Test (ours) | incomplete expression with object names masked | {box, name} to complete the mask | name can be almost any valid English word |
| Image Captioning | ✗ | language caption | / |
| Contextual Captioning (ours) | ✗ | language caption, box | / |
| Visual Question Answering | language question | language answer | / |
| Contextual QA (ours) | language question | language answer, box | / |
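
To make the contextual cloze test more concrete, the sketch below shows one way such an input could be built from a caption: object names are replaced by a mask token and kept as the targets that the model must recover (each paired with a box). This is a minimal illustration under stated assumptions, not the CODE dataset's actual preprocessing. The `[MASK]` token, the character-span annotation format, and the `make_cloze` helper are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical mask token; the token actually used by the CODE dataset is not specified here.
MASK = "[MASK]"


def make_cloze(caption: str, object_spans: List[Tuple[int, int]]) -> Tuple[str, List[str]]:
    """Mask each (start, end) character span in `caption`.

    Returns the masked text and the hidden object names in left-to-right order.
    The names are the targets a contextual cloze model must predict (together with boxes).
    """
    masked_parts: List[str] = []
    answers: List[str] = []
    cursor = 0
    for start, end in sorted(object_spans):
        if start < cursor:
            raise ValueError("object spans must not overlap")
        masked_parts.append(caption[cursor:start])  # keep the context before the object
        masked_parts.append(MASK)                   # hide the object name
        answers.append(caption[start:end])
        cursor = end
    masked_parts.append(caption[cursor:])  # keep the trailing context
    return "".join(masked_parts), answers


if __name__ == "__main__":
    caption = "A man is riding a horse on the beach."
    # Hypothetical annotation: character spans of the object names in the caption.
    spans = [(caption.index(w), caption.index(w) + len(w)) for w in ("man", "horse", "beach")]
    masked, answers = make_cloze(caption, spans)
    print(masked)   # A [MASK] is riding a [MASK] on the [MASK].
    print(answers)  # ['man', 'horse', 'beach']
```

The masked string plays the role of the "incomplete expression" in the table above; a model then has to fill each mask with a name and ground that name with a box.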

We present ContextDET, a novel generate-then-detect framework specialized for contextual object detection. ContextDET is end-to-end and consists of three key architectural components:

- a visual encoder that extracts high-level image representations and computes visual tokens,

- a pre-trained LLM that decodes multimodal contextual tokens with a task-related multimodal prefix, and

- a visual decoder that predicts matching scores and bounding boxes for conditional queries linked to contextual object words.

The generate-then-detect framework enables us to detect objects named by words from the human vocabulary, rather than from a pre-defined set of class labels.
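
The generate-then-detect data flow of the three components can be sketched as follows. This is a toy illustration, not ContextDET's implementation: the components are passed in as plain callables, and the `Token`/`Detection` types, the `generate_then_detect` function, and the 0.5 `score_threshold` are hypothetical. For simplicity, the visual decoder here receives the object word's text, whereas ContextDET's visual decoder uses conditional queries linked to the contextual object words produced by the LLM.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple

# Normalized (x1, y1, x2, y2) box; the coordinate convention is an assumption of this sketch.
Box = Tuple[float, float, float, float]


@dataclass
class Token:
    text: str
    is_object: bool  # True if the generated word names a visible object


@dataclass
class Detection:
    word: str
    box: Box
    score: float  # matching score predicted by the visual decoder


def generate_then_detect(
    image: Any,
    prefix: str,
    encode: Callable[[Any], Sequence[float]],
    generate: Callable[[Sequence[float], str], List[Token]],
    decode_box: Callable[[Sequence[float], str], Tuple[Box, float]],
    score_threshold: float = 0.5,  # hypothetical cut-off on the matching score
) -> Tuple[str, List[Detection]]:
    # 1. Visual encoder: image -> visual tokens.
    visual_tokens = encode(image)
    # 2. LLM: decode words conditioned on the visual tokens and a task-related prefix.
    tokens = generate(visual_tokens, prefix)
    text = " ".join(t.text for t in tokens)
    # 3. Visual decoder: one query per generated object word -> (box, matching score).
    detections: List[Detection] = []
    for tok in tokens:
        if not tok.is_object:
            continue
        box, score = decode_box(visual_tokens, tok.text)
        if score >= score_threshold:
            detections.append(Detection(tok.text, box, score))
    return text, detections


if __name__ == "__main__":
    # Toy stand-ins for the three learned components.
    def toy_encode(image: Any) -> List[float]:
        return [0.0, 1.0]

    def toy_generate(visual_tokens: Sequence[float], prefix: str) -> List[Token]:
        words = [("a", False), ("dog", True), ("chasing", False), ("a", False), ("ball", True)]
        return [Token(w, obj) for w, obj in words]

    toy_boxes = {"dog": ((0.1, 0.2, 0.5, 0.9), 0.92), "ball": ((0.6, 0.7, 0.7, 0.8), 0.41)}

    def toy_decode(visual_tokens: Sequence[float], word: str) -> Tuple[Box, float]:
        return toy_boxes[word]

    text, dets = generate_then_detect("image.jpg", "Describe the image.", toy_encode, toy_generate, toy_decode)
    print(text)                    # a dog chasing a ball
    print([d.word for d in dets])  # ['dog'] ("ball" falls below the 0.5 threshold)
```

Because detection runs only on words the LLM has already generated, the set of detectable names is not fixed in advance; this is the property that distinguishes the framework from detectors with pre-defined class labels.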

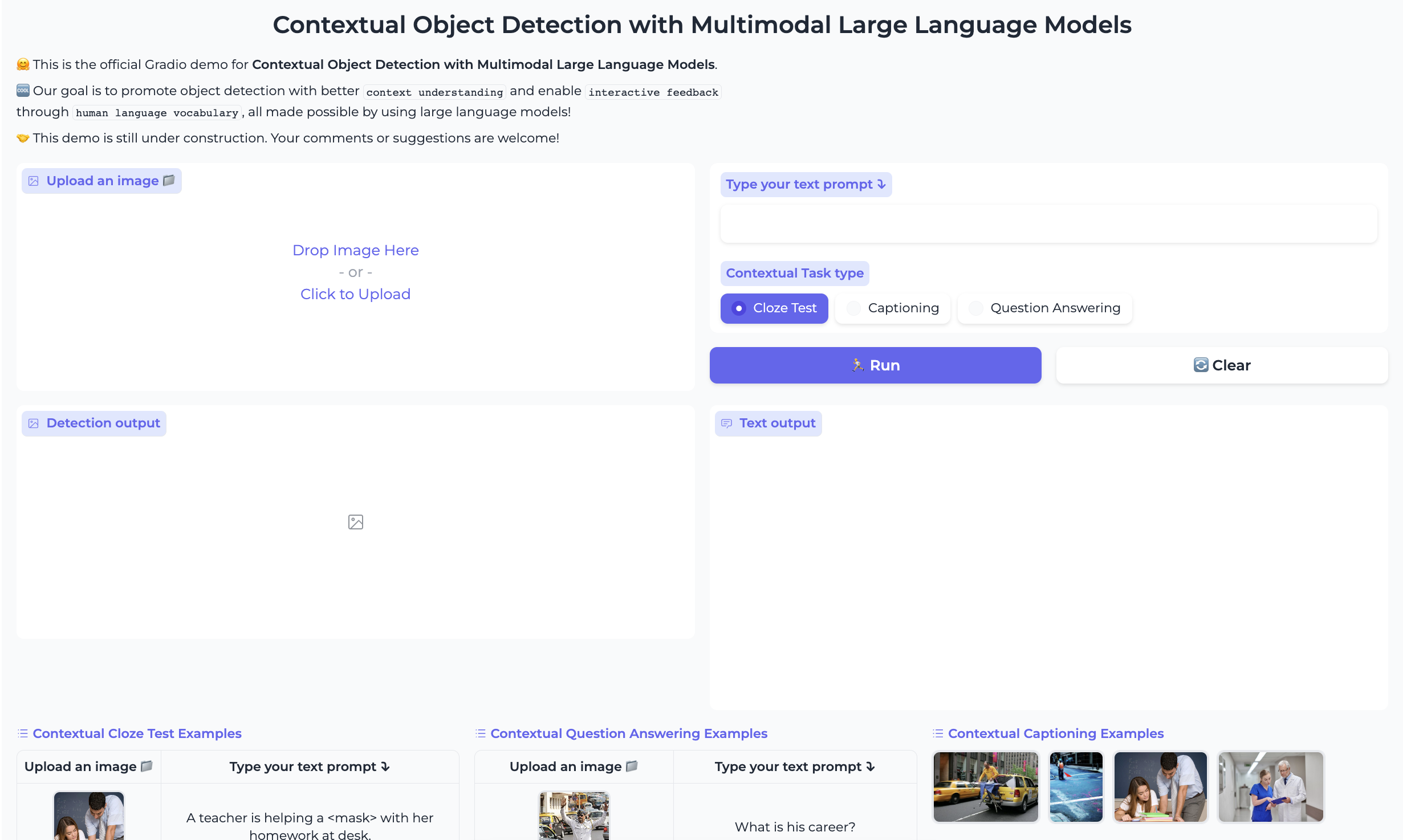

🤗 You can try our demo on Hugging Face Spaces. To avoid waiting in the queue and to speed up inference, consider duplicating the Space and running it on GPU resources.

🤗 If you want to try the demo on your own computer with a GPU, follow these steps:

- Install the required Python packages:

  ```bash
  pip install -r requirements.txt
  ```

- Download the checkpoint file from the following URL and save it in your local directory.

- Now, you're ready to run the demo. Execute the following command:

  ```bash
  python app.py
  ```

You should see the following web page:

If you find our work useful, we would be grateful if you cite it:

```bibtex
@article{zang2023contextual,
  author  = {Zang, Yuhang and Li, Wei and Han, Jun and Zhou, Kaiyang and Loy, Chen Change},
  title   = {Contextual Object Detection with Multimodal Large Language Models},
  journal = {arXiv preprint arXiv:2305.18279},
  year    = {2023}
}
```

This project is licensed under S-Lab License 1.0. Redistribution and use for non-commercial purposes should follow this license.

We acknowledge the use of the following public code in this project: DETR, Deformable DETR, DETA, OV DETR, and BLIP2.

If you have any questions, please feel free to contact Yuhang Zang (zang0012 AT ntu.edu.sg).