Code for our paper:

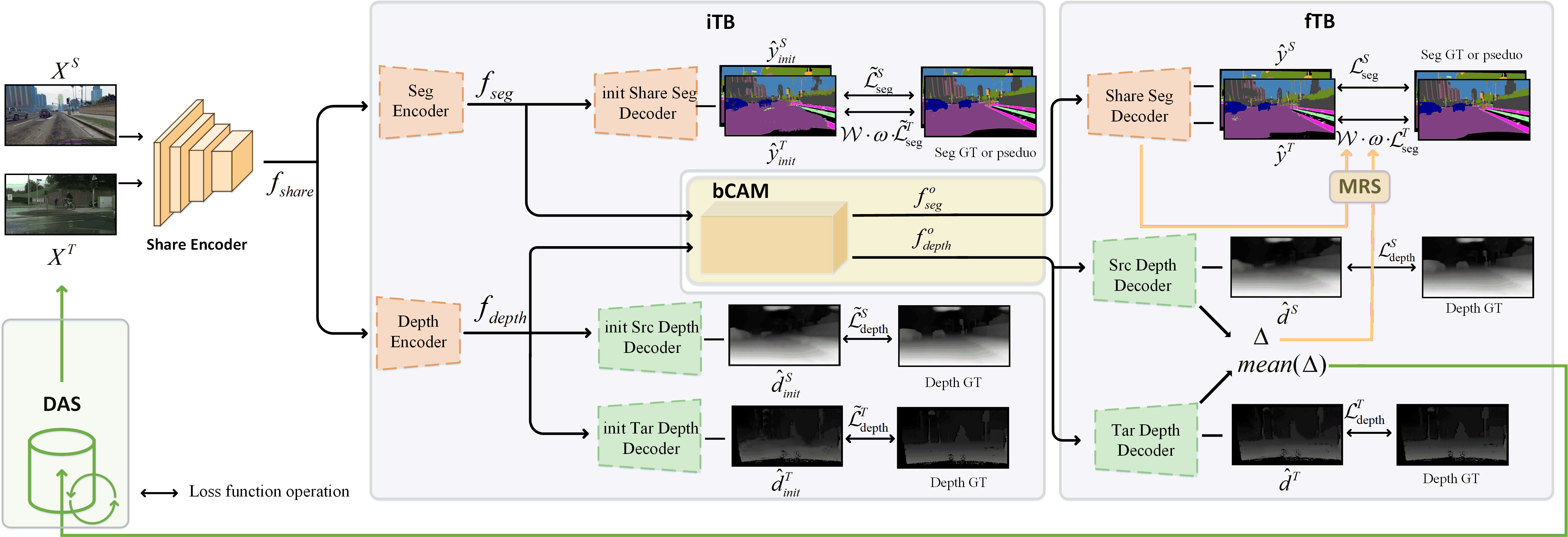

BiCoD: Bidirectional Correlated Depth Guidance for Domain Adaptation Segmentation

Please create and activate the following conda environment. To reproduce our results, please use this environment.

```
# create environment
conda update conda
conda env create -f environment.yml
conda activate BiCoD
```

Code was tested on an NVIDIA RTX 3090 Ti with 24 GB memory.

- CITYSCAPES: Follow the instructions in Cityscapes to download the images and validation ground truths. Please follow this dataset directory structure:

```
<CITYSCAPES_DIR>/                % Cityscapes dataset root
├── leftImg8bit_trainvaltest/    % input images (leftImg8bit_trainvaltest.zip)
├── depth/                       % from https://people.ee.ethz.ch/~csakarid/SFSU_synthetic/, also downloadable at https://www.qin.ee/depth/
├── disparity/                   % stereo depth (disparity_trainvaltest.zip)
└── gtFine_trainvaltest/         % semantic segmentation labels (gtFine_trainvaltest.zip)
```
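Before training or evaluation, it can help to confirm that each dataset root matches the layouts described in this README. The following is a minimal sketch and not part of the BiCoD codebase: `EXPECTED_LAYOUT` and `missing_subdirs` are hypothetical names, and the folder lists are transcribed from the directory trees in this section.

```python
from pathlib import Path

# Hypothetical helper: expected sub-folders for each dataset root,
# transcribed from the directory trees in this README.
EXPECTED_LAYOUT = {
    "cityscapes": ["leftImg8bit_trainvaltest", "depth", "disparity", "gtFine_trainvaltest"],
    "synthia": ["RGB", "GT", "Depth"],
    "gta": ["images", "labels", "disparity"],
}


def missing_subdirs(root, dataset):
    """Return the expected sub-folders that do not exist under `root`.

    Raises KeyError if `dataset` is not one of the keys of EXPECTED_LAYOUT.
    """
    root = Path(root)
    expected = EXPECTED_LAYOUT[dataset]
    # Keep the README order so the report is easy to compare with the tree.
    return [name for name in expected if not (root / name).is_dir()]
```

For example, `missing_subdirs("/path/to/cityscapes", "cityscapes")` returns an empty list when all four folders are present, and otherwise lists the ones still to be downloaded or unpacked.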

- SYNTHIA: Follow the instructions here to download the images from the SYNTHIA-RAND-CITYSCAPES (CVPR16) split. Download the segmentation labels from CTRL-UDA using the link here. Please follow this dataset directory structure:

```
<SYNTHIA_DIR>/    % SYNTHIA dataset root
├── RGB/          % input images
├── GT/           % semantic segmentation labels
└── Depth/        % depth labels
```

- GTA5: Follow the instructions here to download the GTA5 images and segmentation labels. Please follow this dataset directory structure:

```
<GTA_DIR>/        % GTA5 dataset root
├── images/       % input images
├── labels/       % semantic segmentation labels
└── disparity/    % our generated monocular depth as normalized disparity (0-65535), downloadable at https://www.qin.ee/depth/
```
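The GTA5 and Cityscapes disparity maps listed above use different encodings. Below is a minimal NumPy sketch of decoding both. For GTA5 it assumes the values scale linearly from 0 to 65535 onto normalized disparity in [0, 1]; that is our reading of "normalized disparity" and is not confirmed by the codebase. For Cityscapes `disparity_trainvaltest`, it follows the encoding documented in the Cityscapes toolkit (`cityscapesScripts`): disparity = (p - 1) / 256 for p > 0, with p = 0 marking invalid pixels. Both function names are hypothetical.

```python
import numpy as np


def decode_gta_disparity(raw):
    """Map raw GTA5 disparity values (0-65535) to normalized disparity in [0, 1].

    Assumption: a linear scale where 0 -> 0.0 and 65535 -> 1.0.
    """
    raw = np.asarray(raw)
    if raw.min() < 0 or raw.max() > 65535:
        raise ValueError("expected raw disparity values in [0, 65535]")
    return raw.astype(np.float32) / 65535.0


def decode_cityscapes_disparity(raw):
    """Decode Cityscapes disparity: d = (p - 1) / 256 for p > 0.

    Pixels with p == 0 are invalid and are returned as NaN.
    """
    raw = np.asarray(raw).astype(np.float32)
    disp = (raw - 1.0) / 256.0
    disp[raw == 0] = np.nan  # mark invalid pixels
    return disp
```

If the maps are stored as 16-bit PNG files, loading them with `cv2.imread(path, cv2.IMREAD_UNCHANGED)` keeps the full 16-bit range, whereas OpenCV's default color mode converts to 8 bits.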

Coming soon.

Pre-trained models are provided (Google Drive).

```
# Test the model for the SYNTHIA2Cityscapes task
python3 evaluateUDA.py --full-resolution -m deeplabv2_synthia --model-path=<model_path>

# Test the model for the GTA2Cityscapes task
python3 evaluateUDA.py --full-resolution -m deeplabv2_gta --model-path=<model_path>
```

Reported results on SYNTHIA2Cityscapes (the reported results are based on 5 runs rather than the single best run):

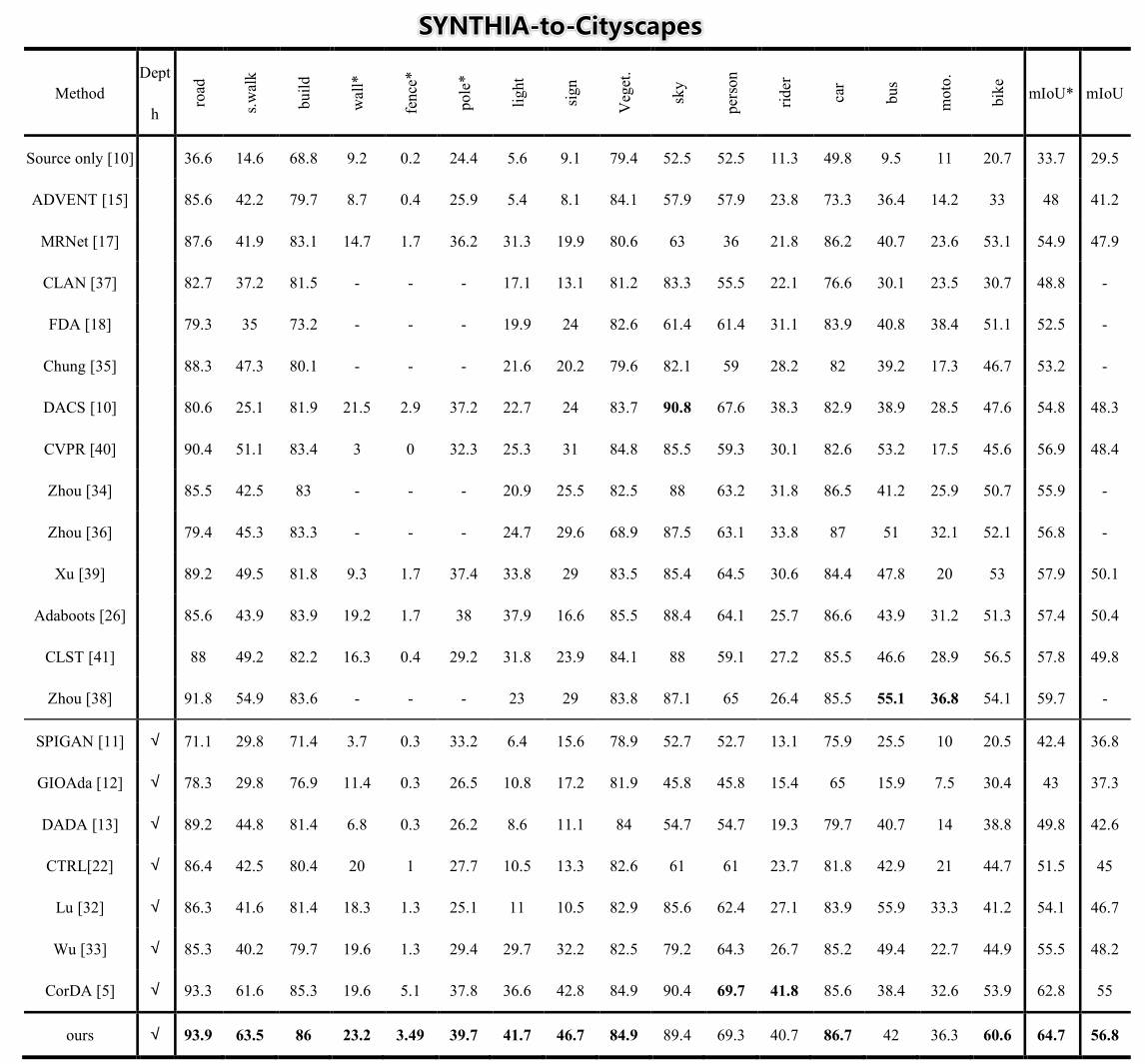

| Method | mIoU* (13 classes) | mIoU (16 classes) |
|---|---|---|
| CBST | 48.9 | 42.6 |
| FDA | 52.5 | - |
| DADA | 49.8 | 42.6 |
| CTRL | 51.5 | 45.0 |
| CorDA | 62.8 | 55.0 |
| BiCoD | 64.4 | 56.8 |
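The table reports mean intersection-over-union (mIoU), where the IoU of each class is TP / (TP + FP + FN). The sketch below is not the evaluation code in `evaluateUDA.py`. It accumulates a confusion matrix and averages IoU either over all classes or over a chosen subset. In the common SYNTHIA2Cityscapes protocol, mIoU* averages 13 of the 16 classes (dropping wall, fence and pole); the indices of that subset depend on the codebase's label mapping, so they are not hard-coded here. The function names and `ignore_index=255` are assumptions.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """Build a num_classes x num_classes matrix (rows: ground truth, cols: prediction)."""
    pred = np.asarray(pred).ravel()
    gt = np.asarray(gt).ravel()
    valid = gt != ignore_index  # skip unlabeled pixels
    idx = gt[valid].astype(np.int64) * num_classes + pred[valid].astype(np.int64)
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(conf, class_ids=None):
    """Average TP / (TP + FP + FN) over all classes or over `class_ids`.

    Classes that never appear in either ground truth or prediction get NaN
    and are skipped by the average.
    """
    conf = np.asarray(conf, dtype=np.float64)
    tp = np.diag(conf)
    fp = conf.sum(axis=0) - tp  # predicted as the class, labeled otherwise
    fn = conf.sum(axis=1) - tp  # labeled as the class, predicted otherwise
    denom = tp + fp + fn
    iou = np.divide(tp, denom, out=np.full_like(tp, np.nan), where=denom > 0)
    if class_ids is not None:
        iou = iou[list(class_ids)]
    return float(np.nanmean(iou))
```

Accumulating the confusion matrix over the whole validation set before computing IoU (rather than averaging per-image scores) matches how mIoU is usually reported for Cityscapes.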

- This codebase depends on CorDA, AdaBoost_seg and DenseMTL. Thank you for your work!

- DACS is used as our codebase (official).

- SFSU is the source of the stereo depth estimation for Cityscapes (official).

These components are provided by CorDA. Thanks!

- Download links
  - Stereo depth estimation for Cityscapes
  - Mono depth estimation for GTA
  - SYNTHIA depth and images: SYNTHIA-RAND-CITYSCAPES (CVPR16)