CVPR2021 paper "Guided Interactive Video Object Segmentation Using Reliability-Based Attention Maps"

ECCV2020 paper "Interactive Video Object Segmentation Using Global and Local Transfer Modules"

Project Pages: CVPR2021 / ECCV2020

Code in this GitHub repository:

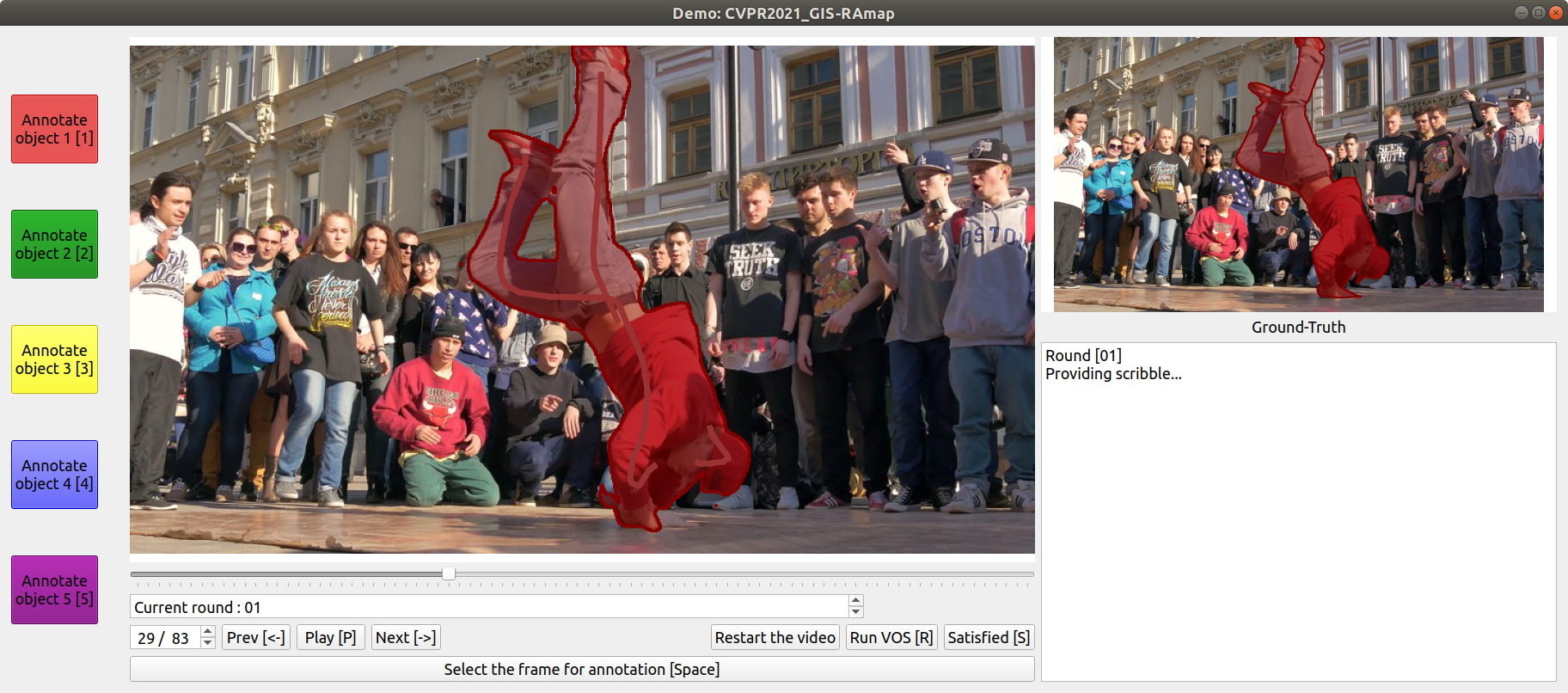

- Real-world GUI evaluation on DAVIS2017 based on the DAVIS framework

- GUI for other videos

Requirements:

- CUDA 11.0
- Python 3.6
- PyTorch 1.6.0
- davisinteractive 1.0.4
- numpy, cv2 (OpenCV), PyQt5, and other common Python 3 libraries
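Before running anything, it can help to confirm that the environment matches the versions above. The script below is a hypothetical helper, not part of the repository: it imports each package by its import name and compares `__version__` against the listed version. Packages that do not expose `__version__` are reported as `None` and should be checked with `pip show` instead.

```python
import importlib
import sys

# Versions listed in this README; None means "any version is fine".
# Keys are import names, which can differ from pip names (cv2 comes from opencv-python).
REQUIRED = {
    "torch": "1.6.0",
    "davisinteractive": "1.0.4",
    "numpy": None,
    "cv2": None,
    "PyQt5": None,
}


def version_ok(installed, required):
    """Compare the release part of a version string against the required one.

    Local build suffixes are ignored, so '1.6.0+cu110' satisfies '1.6.0'.
    """
    if required is None:
        return True
    if installed is None:
        return False
    return installed.split("+")[0] == required


def check_module(name, required):
    """Import `name` and return (status, installed_version).

    Modules without a `__version__` attribute report None; if a version is
    required for them, confirm it manually with `pip show <package>`.
    """
    try:
        module = importlib.import_module(name)
    except ImportError:
        return "missing", None
    installed = getattr(module, "__version__", None)
    status = "ok" if version_ok(installed, required) else "mismatch"
    return status, installed


if __name__ == "__main__":
    print("python", sys.version.split()[0], "(README lists 3.6)")
    for name, required in REQUIRED.items():
        status, installed = check_module(name, required)
        print(f"{name:18s} {status:9s} installed={installed} required={required or 'any'}")
    try:
        import torch
        # CUDA version PyTorch was built against (None for CPU-only builds).
        print("torch CUDA build:", torch.version.cuda, "(README lists 11.0)")
    except ImportError:
        pass
```

Only the release part of the version is compared, because PyTorch wheels often append a local suffix such as `+cu110`.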

Directory structure:

- `root/apps`: QWidget apps.
- `root/checkpoints`: save our checkpoints (`.pth` files) here.
- `root/dataset_torch`: PyTorch datasets.
- `root/libs`: utility libraries.
- `root/model_CVPR2021`: networks and GUI models for CVPR2021. Detailed explanations are on [Github:CVPR2021].
- `root/model_ECCV2020`: networks and GUI models for ECCV2020. Detailed explanations (including how to build the correlation package) are on [Github:ECCV2020].
- `root/eval_GIS_RS1.py`: DAVIS2017 evaluation of GIS (CVPR2021) in the RS1 setting, based on the DAVIS framework.
- `root/eval_GIS_RS4.py`: DAVIS2017 evaluation of GIS (CVPR2021) in the RS4 setting, based on the DAVIS framework.
- `root/eval_IVOS.py`: DAVIS2017 evaluation of IVOS (ECCV2020), based on the DAVIS framework.
- `root/IVOS_demo_customvideo.py`: GUI for custom videos.

Usage:

- Edit `eval_GIS_RS1.py`, `eval_GIS_RS4.py`, `eval_IVOS.py`, and `IVOS_demo_customvideo.py` to set the directory of your DAVIS2017 dataset and other configurations.
- Download our parameters and place the file as `root/checkpoints/GIS-ckpt_standard.pth`.
  - For CVPR2021 evaluation [Google-Drive]
  - For ECCV2020 evaluation [Google-Drive]
- Run `eval_GIS_RS1.py`, `eval_GIS_RS4.py`, or `eval_IVOS.py` for real-world GUI evaluation on DAVIS2017, or run `IVOS_demo_customvideo.py` to apply our method to other videos.
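A quick pre-flight check can catch a wrong dataset path or a misplaced checkpoint before the GUI starts. The sketch below is hypothetical and not part of the repository; it assumes the standard DAVIS 2017 TrainVal (480p) layout (`JPEGImages/480p`, `Annotations/480p`, `ImageSets/2017/val.txt`) and the checkpoint location given above. The function names and the example path are illustrative only.

```python
import os

# Standard layout of the DAVIS 2017 TrainVal (480p) release.
DAVIS_SUBDIRS = (
    ("JPEGImages", "480p"),
    ("Annotations", "480p"),
    ("ImageSets", "2017"),
)


def preflight(davis_root, repo_root=".", ckpt_name="GIS-ckpt_standard.pth"):
    """Return a list of problems; an empty list means the paths look ready."""
    problems = []
    for parts in DAVIS_SUBDIRS:
        path = os.path.join(davis_root, *parts)
        if not os.path.isdir(path):
            problems.append("missing DAVIS folder: " + path)
    val_list = os.path.join(davis_root, "ImageSets", "2017", "val.txt")
    if not os.path.isfile(val_list):
        problems.append("missing sequence list: " + val_list)
    # The README asks for the downloaded parameters at root/checkpoints/<ckpt_name>.
    ckpt = os.path.join(repo_root, "checkpoints", ckpt_name)
    if not os.path.isfile(ckpt):
        problems.append("missing checkpoint: " + ckpt)
    return problems


def val_sequences(davis_root):
    """Read the DAVIS 2017 validation sequence names from ImageSets/2017/val.txt."""
    path = os.path.join(davis_root, "ImageSets", "2017", "val.txt")
    with open(path) as f:
        return [line.strip() for line in f if line.strip()]


if __name__ == "__main__":
    # Hypothetical path; use the same directory you configured in the eval scripts.
    issues = preflight("/path/to/DAVIS")
    print("\n".join(issues) if issues else "dataset and checkpoint found")
```

Run it from the repository root so that `repo_root="."` resolves `checkpoints/` correctly.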

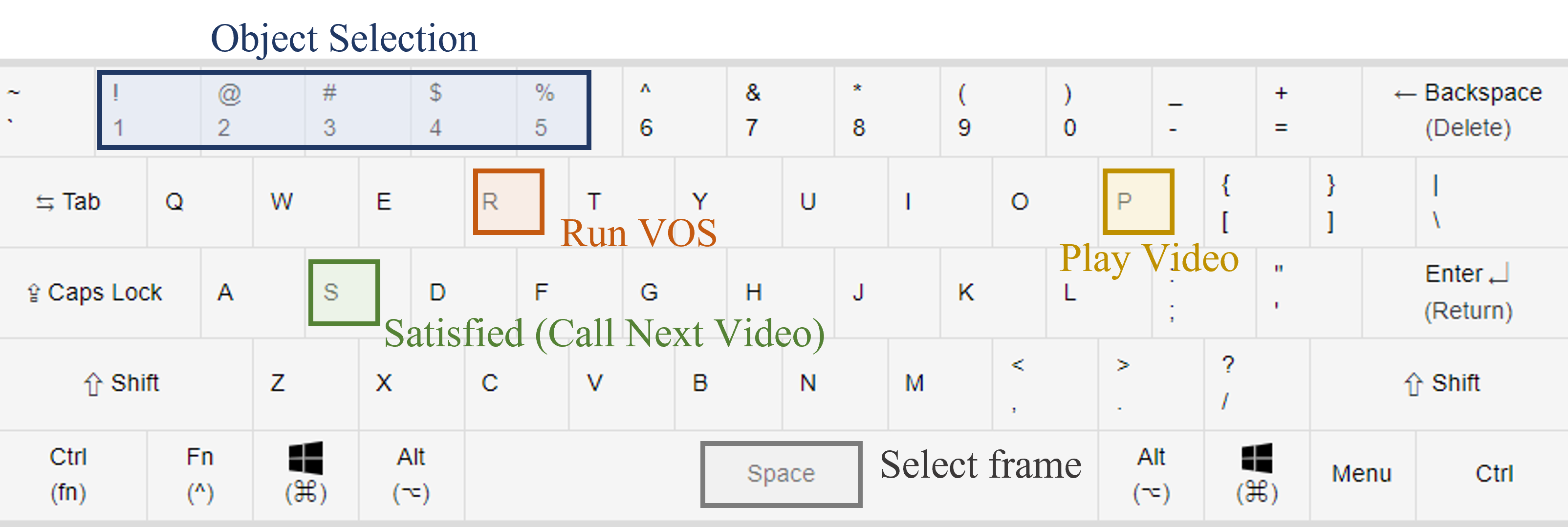

Left-click on the target object and right-click on the background.
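Interactive segmentation networks usually receive user input as separate positive and negative maps. The following is a hypothetical sketch of that encoding, not the repository's GUI code (which may record strokes differently): left-click points become disks on a positive map and right-click points become disks on a negative map. The `(x, y, button)` format and the `radius` parameter are assumptions for illustration.

```python
def clicks_to_maps(clicks, height, width, radius=3):
    """Rasterize (x, y, button) clicks into positive and negative binary maps.

    Left clicks mark the target object (positive map) and right clicks mark the
    background (negative map). Each click paints a filled disk of `radius` pixels.
    Maps are plain nested lists indexed as map[y][x].
    """
    pos = [[0] * width for _ in range(height)]
    neg = [[0] * width for _ in range(height)]
    for x, y, button in clicks:
        if button == "left":
            target = pos
        elif button == "right":
            target = neg
        else:
            raise ValueError("unknown mouse button: %r" % (button,))
        # Visit only the bounding box of the disk, clipped to the image.
        for yy in range(max(0, y - radius), min(height, y + radius + 1)):
            for xx in range(max(0, x - radius), min(width, x + radius + 1)):
                if (xx - x) ** 2 + (yy - y) ** 2 <= radius ** 2:
                    target[yy][xx] = 1
    return pos, neg
```

For example, `clicks_to_maps([(120, 80, "left"), (10, 10, "right")], height=480, width=854)` yields one positive disk and one negative disk on a 480p DAVIS frame.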

- Select any frame to interact with by dragging the slider under the main image.
- Give an interaction.
- Run VOS.
- Find the worst frame and interact again. For GIS, a candidate frame (RS1) or candidate frames (RS4) are suggested.
- Iterate until you are satisfied with the VOS results.
- When you click the satisfied button, your evaluation result (consumed time and frames) is recorded in `root/results`.
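The interaction cycle above can be sketched as a loop. This is a hypothetical simulation, not the GIS recommendation method: it assumes some per-frame quality score is available after each VOS run (GIS derives its suggestions from reliability-based attention maps, which are not reproduced here), suggests the k lowest-scoring frames (k=1 for an RS1-style suggestion, k=4 for RS4-style candidates), and records elapsed time and interacted frames. The function names, callbacks, and the JSON record format are illustrative assumptions; the actual files in `root/results` may differ.

```python
import heapq
import json
import os
import time


def candidate_frames(scores, k=1, exclude=()):
    """Indices of the k lowest-scoring frames, lowest first, skipping `exclude`.

    k=1 imitates a single suggestion (RS1-style) and k=4 a set of candidates
    (RS4-style). Ties are broken by the smaller frame index.
    """
    excluded = set(exclude)
    pool = [(s, i) for i, s in enumerate(scores) if i not in excluded]
    return [i for _, i in heapq.nsmallest(k, pool)]


def run_session(get_scores, interact, is_satisfied, k=1, max_rounds=10):
    """Simulated loop: suggest frames, let the user interact, run VOS, repeat.

    get_scores(): per-frame quality estimates after the latest VOS run.
    interact(candidates): the user annotates a frame and VOS is re-run;
        returns the index of the annotated frame.
    is_satisfied(): True once the user accepts the result.
    """
    start = time.perf_counter()
    interacted = []
    for _ in range(max_rounds):
        candidates = candidate_frames(get_scores(), k, exclude=interacted)
        if not candidates:  # every frame has already been annotated
            break
        interacted.append(interact(candidates))
        if is_satisfied():
            break
    return {"time_sec": time.perf_counter() - start, "frames": interacted}


def save_record(record, results_dir="results"):
    """Write one session record as JSON into results_dir (e.g. root/results)."""
    os.makedirs(results_dir, exist_ok=True)
    path = os.path.join(results_dir, time.strftime("%Y%m%d-%H%M%S") + ".json")
    with open(path, "w") as f:
        json.dump(record, f, indent=2)
    return path
```

Already-annotated frames are excluded from later suggestions in this sketch so that each round proposes a new frame; a real tool might instead allow revisiting a frame.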

Please cite our papers if this implementation is useful in your work:

@inproceedings{Yuk2021GIS,
  title={Guided Interactive Video Object Segmentation Using Reliability-Based Attention Maps},
  author={Yuk Heo and Yeong Jun Koh and Chang-Su Kim},
  booktitle={CVPR},
  year={2021},
  url={https://openaccess.thecvf.com/content/CVPR2021/papers/Heo_Guided_Interactive_Video_Object_Segmentation_Using_Reliability-Based_Attention_Maps_CVPR_2021_paper.pdf}
}

@inproceedings{Yuk2020IVOS,
  title={Interactive Video Object Segmentation Using Global and Local Transfer Modules},
  author={Yuk Heo and Yeong Jun Koh and Chang-Su Kim},
  booktitle={ECCV},
  year={2020},
  url={https://openreview.net/forum?id=bo_lWt_aA}
}

Our real-world evaluation demo is based on the GUI of IPNet:

@inproceedings{Oh2019IVOS,
  title={Fast User-Guided Video Object Segmentation by Interaction-and-Propagation Networks},
  author={Seoung Wug Oh and Joon-Young Lee and Seon Joo Kim},
  booktitle={CVPR},
  year={2019},
  url={https://openaccess.thecvf.com/content_CVPR_2019/papers/Oh_Fast_User-Guided_Video_Object_Segmentation_by_Interaction-And-Propagation_Networks_CVPR_2019_paper.pdf}
}