PyTorch implementation of CartoonGAN [1] (CVPR 2018)

- Parameters without information in the paper were set arbitrarily.

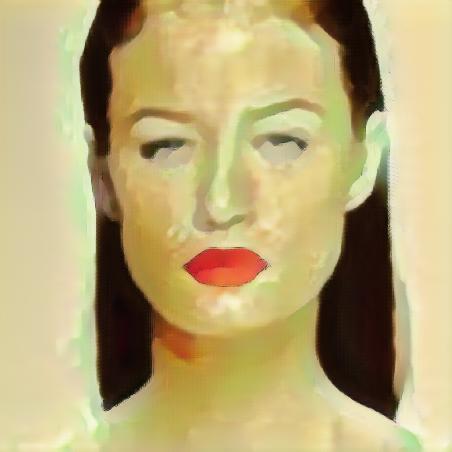

- Because I could not find the author's data, I used face-cropped CelebA images (src) and anime images (tgt) collected from the web.

```
python CartoonGAN.py --name your_project_name --src_data src_data_path --tgt_data tgt_data_path --vgg_model pre_trained_VGG19_model_path
```
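The command takes four flags. The sketch below is a hypothetical re-creation of how they might be parsed with `argparse` and mapped onto the folder layout described in this README. `build_parser` and `expected_dirs` are illustrative names, and the `required=True` settings and the `<name>_results` mapping are assumptions, not the actual code in `CartoonGAN.py`.

```python
import argparse
import os


def build_parser():
    # Hypothetical parser for the four flags shown in the command above;
    # the real CartoonGAN.py may define more flags and different defaults.
    parser = argparse.ArgumentParser(description="CartoonGAN training (sketch)")
    parser.add_argument("--name", required=True, help="project name")
    parser.add_argument("--src_data", required=True, help="source (photo) data path")
    parser.add_argument("--tgt_data", required=True, help="target (cartoon) data path")
    parser.add_argument("--vgg_model", required=True, help="pre-trained VGG19 model path")
    return parser


def expected_dirs(args):
    # Assumed mapping from the flags onto this README's folder layout.
    return {
        "src_train": os.path.join(args.src_data, "train"),
        "src_test": os.path.join(args.src_data, "test"),
        "tgt_train": os.path.join(args.tgt_data, "train"),
        "tgt_pair": os.path.join(args.tgt_data, "pair"),
        "results": args.name + "_results",
    }
```

Inside a training script, `expected_dirs(build_parser().parse_args())` would give the paths to check (for example with `os.path.isdir`) before training starts.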

The following shows the basic folder structure.

```
├── data
│   ├── src_data # src data (not included in this repo)
│   │   ├── train
│   │   └── test
│   └── tgt_data # tgt data (not included in this repo)
│       ├── train
│       └── pair # edge-promoting results to be saved here
│
├── CartoonGAN.py # training code
├── edge_promoting.py
├── utils.py
├── networks.py
└── name_results # results to be saved here
```
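The `pair` folder holds edge-promoting results for the target (cartoon) images. The paper [1] describes edge promotion as detecting edges with a Canny detector, dilating the edge regions, and applying Gaussian smoothing inside the dilated regions. This produces cartoon images without clear edges, which serve as extra negative examples for the discriminator. Below is a minimal pure-Python sketch of that idea on a grayscale image stored as a list of lists. It swaps Canny for a simple neighbour-difference threshold, and the threshold, dilation radius, and 5x5 binomial kernel are assumptions, not the values used in `edge_promoting.py`.

```python
def detect_edges(img, threshold):
    # Stand-in for Canny (an assumption): mark pixels whose right or lower
    # neighbour differs in intensity by more than `threshold`.
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = abs(img[y][min(x + 1, w - 1)] - img[y][x])
            gy = abs(img[min(y + 1, h - 1)][x] - img[y][x])
            edges[y][x] = max(gx, gy) > threshold
    return edges


def dilate(mask, radius):
    # Square dilation: a pixel is set if any pixel within `radius` is set.
    h, w = len(mask), len(mask[0])
    return [
        [any(mask[yy][xx]
             for yy in range(max(0, y - radius), min(h, y + radius + 1))
             for xx in range(max(0, x - radius), min(w, x + radius + 1)))
         for x in range(w)]
        for y in range(h)
    ]


KERNEL_1D = [1, 4, 6, 4, 1]  # binomial approximation of a 5-tap Gaussian


def gaussian_at(img, y, x):
    # 5x5 weighted average at (y, x), clamping coordinates at the border.
    h, w = len(img), len(img[0])
    total = 0.0
    for dy, wy in zip(range(-2, 3), KERNEL_1D):
        for dx, wx in zip(range(-2, 3), KERNEL_1D):
            yy = min(max(y + dy, 0), h - 1)
            xx = min(max(x + dx, 0), w - 1)
            total += wy * wx * img[yy][xx]
    return total / 256.0  # the 5x5 weights sum to 16 * 16 = 256


def edge_promote(img, threshold=30, radius=2):
    # Blur only inside the dilated edge regions; copy all other pixels as-is.
    mask = dilate(detect_edges(img, threshold), radius)
    return [
        [gaussian_at(img, y, x) if mask[y][x] else float(img[y][x])
         for x in range(len(img[0]))]
        for y in range(len(img))
    ]
```

On a sharp black-to-white step, only the columns near the step are softened, while flat regions stay unchanged.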

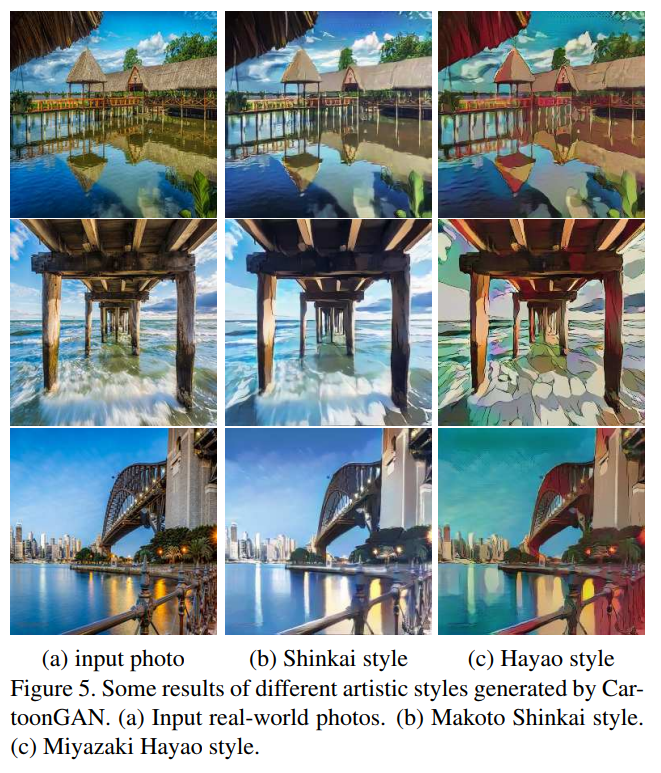

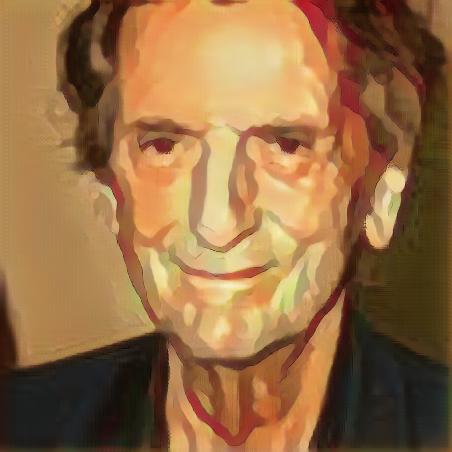

Input - Result (this repo)

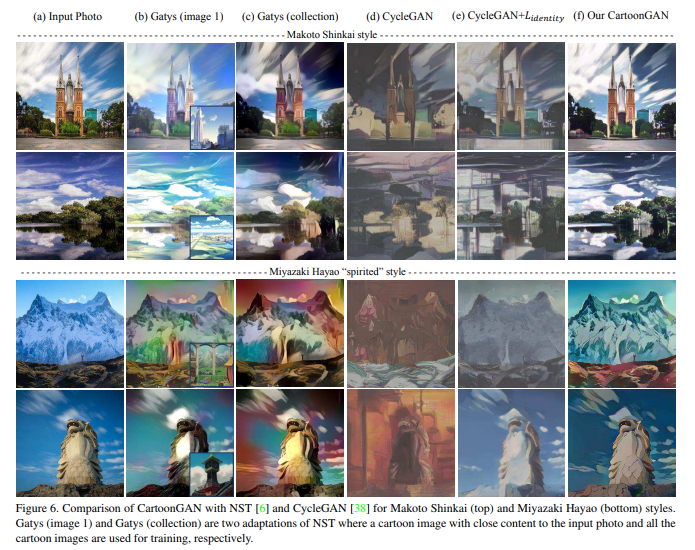

- I obtained the author's results from CartoonGAN-Test-Pytorch-Torch.

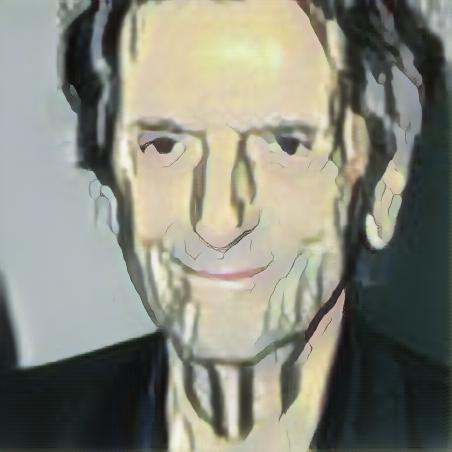

Columns, left to right: Input - Result (this repo); Author's pre-trained model (Hayao); Author's pre-trained model (Hosoda)

Development environment:

- NVIDIA GTX 1080 Ti

- CUDA 8.0

- Python 3.5.3

- PyTorch 0.4.0

- torchvision 0.2.1

- OpenCV 3.2.0

[1] Chen, Yang, Yu-Kun Lai, and Yong-Jin Liu. "CartoonGAN: Generative Adversarial Networks for Photo Cartoonization." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018.

(Full paper: http://openaccess.thecvf.com/content_cvpr_2018/papers/Chen_CartoonGAN_Generative_Adversarial_CVPR_2018_paper.pdf)