This repository contains the Apache Cassandra OpenTelemetry integration demo.

cassandra/ - Dockerfile that builds a container image from https://github.com/k8ssandra/management-api-for-apache-cassandra to include Apache Cassandra with OpenTelemetry integration.

k8s/ - Kubernetes resource definitions for the demo

- AWS EKS cluster deployed in the us-east-1 region

- 6 x t3.xlarge (4 vCPU / 16 GiB memory) nodes across 3 AZs

  - 3 nodes dedicated to Cassandra

  - the other 3 nodes for apps / OpenTelemetry collectors

The demo environment can be created in the ap-northeast-1 region with eksctl:

eksctl create cluster -f eks/cluster.yaml

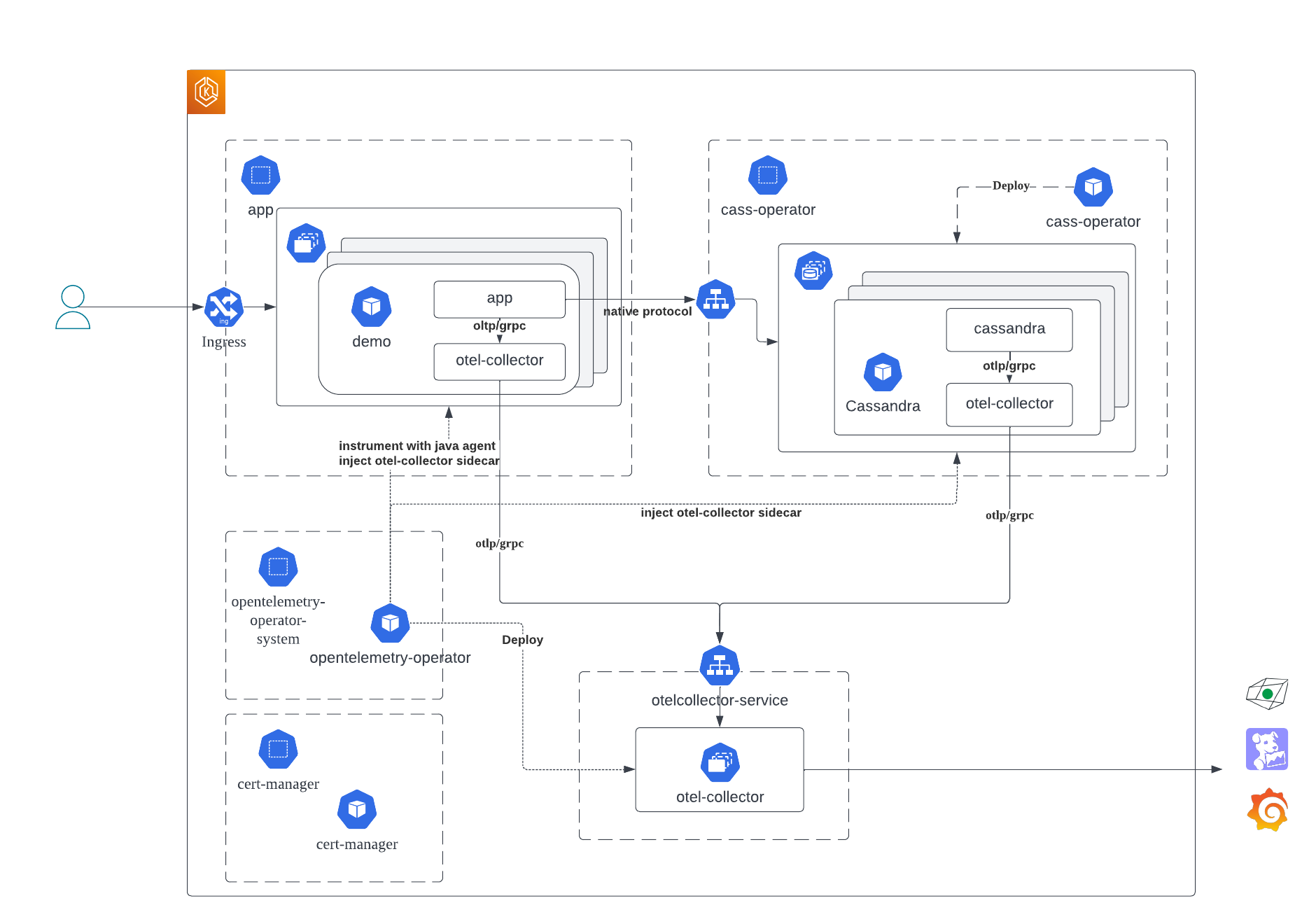

cert-manager is required by the OpenTelemetry operator.

In this demo, we use the default static install:

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.13.2/cert-manager.yaml
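Installing the OpenTelemetry operator immediately after cert-manager can fail if cert-manager's webhook is not serving yet. The following is a minimal sketch that waits first, assuming bash and assuming that every Deployment in the cert-manager namespace reporting Available is a sufficient readiness signal. The function name `wait_for_deployments` is hypothetical.

```shell
# Hypothetical helper: block until every Deployment in a namespace reports
# the Available condition, or fail once the timeout (default 300s) expires.
wait_for_deployments() {
  local namespace="$1"
  local timeout="${2:-300s}"
  kubectl wait --for=condition=Available deployment --all \
    --namespace "$namespace" --timeout="$timeout"
}

# Usage (before installing the OpenTelemetry operator):
#   wait_for_deployments cert-manager 300s
```

The same helper should also work for the opentelemetry-operator-system and k8ssandra-operator namespaces once those operators are installed.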

The OpenTelemetry operator is used to inject instrumentation into the Java app and to deploy the OpenTelemetry collectors.

By default, the operator is installed in the opentelemetry-operator-system namespace.

kubectl apply -f https://github.com/open-telemetry/opentelemetry-operator/releases/download/v0.90.0/opentelemetry-operator.yaml

The Cassandra cluster is created by k8ssandra-operator, installed in its default k8ssandra-operator namespace.

kubectl apply --force-conflicts --server-side -k "github.com/k8ssandra/k8ssandra-operator/config/deployments/control-plane?ref=v1.10.3"
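Before creating collectors or the Cassandra cluster, it can help to confirm that both operators registered their custom resource definitions. A sketch, assuming the CRD names `opentelemetrycollectors.opentelemetry.io` and `instrumentations.opentelemetry.io` (OpenTelemetry operator) and `k8ssandraclusters.k8ssandra.io` (k8ssandra-operator); the helper `check_crds` is hypothetical.

```shell
# Hypothetical helper: report each required CRD and return non-zero
# if any of them is not registered in the cluster.
check_crds() {
  local missing=0
  local crd
  for crd in "$@"; do
    if kubectl get crd "$crd" >/dev/null 2>&1; then
      echo "found:   $crd"
    else
      echo "missing: $crd"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_crds opentelemetrycollectors.opentelemetry.io \
#     instrumentations.opentelemetry.io k8ssandraclusters.k8ssandra.io
```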

In this demo, the OpenTelemetry collectors that export telemetry to external services are deployed in their own namespace, otel.

Create the namespace:

kubectl create namespace otel

Before deploying, create a Secret containing the API keys for the external services.

In this demo, Honeycomb, Datadog, and Grafana Cloud are used.

Create a .env file based on the .env.sample file, and create a Kubernetes Secret from the env file.
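Because the Secret simply copies whatever keys the .env file contains, a forgotten key may only show up later as an export failure. Before creating the Secret, a minimal sketch like the following can list keys that are defined in the sample but missing from your .env. It assumes both files use plain `KEY=value` lines and that .env.sample sits next to .env; the function name `missing_env_keys` is hypothetical.

```shell
# Hypothetical helper: print every KEY defined in the sample file that is not
# defined in the target env file. Comments and blank lines are ignored.
missing_env_keys() {
  local sample="$1"
  local env_file="$2"
  local key
  grep -E '^[A-Za-z_][A-Za-z0-9_]*=' "$sample" | cut -d= -f1 | while read -r key; do
    grep -qE "^${key}=" "$env_file" || echo "$key"
  done
}

# Usage (assumed location of the sample file):
#   missing_env_keys k8s/opentelemetry-collector/.env.sample k8s/opentelemetry-collector/.env
```

Empty output means every key from the sample is present.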

kubectl -n otel create secret generic otel-collector-keys --from-env-file k8s/opentelemetry-collector/.env

kubectl -n otel apply -f k8s/opentelemetry-collector/exporter.yaml

This should deploy 3 OpenTelemetry collector instances as a deployment named exporter, along with the exporter-collector Service in the otel namespace.

The OpenTelemetry operator injects an OpenTelemetry collector sidecar into the Cassandra pods. Before deploying Cassandra, create the sidecar collector configuration in the same namespace:
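To confirm the exporter collectors exist, one option is to query the OpenTelemetryCollector resource and the Service by the names given above. A sketch, assuming the OpenTelemetryCollector resource in exporter.yaml is named exporter; `verify_exporter` is a hypothetical helper.

```shell
# Hypothetical helper: print the exporter OpenTelemetryCollector resource and
# the exporter-collector Service in the otel namespace; fails if either is missing.
verify_exporter() {
  kubectl -n otel get opentelemetrycollector exporter &&
    kubectl -n otel get service exporter-collector
}
```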

kubectl -n k8ssandra-operator apply -f k8s/opentelemetry-collector/otel-sidecar.yaml

Create a storage class named gp3 for the Cassandra data, if one does not exist yet:

kubectl apply -f eks/sc.yaml
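StorageClass parameters cannot be changed in place, so applying the manifest over an existing gp3 class with different settings would be rejected. The "if not yet created" check can be scripted as in this sketch, which assumes eks/sc.yaml defines the gp3 StorageClass; `ensure_storage_class` is a hypothetical helper.

```shell
# Hypothetical helper: create the StorageClass from a manifest only when no
# StorageClass with that name exists; an existing one is left untouched.
ensure_storage_class() {
  local name="$1"
  local manifest="$2"
  if kubectl get storageclass "$name" >/dev/null 2>&1; then
    echo "storageclass/$name already exists, skipping"
  else
    kubectl apply -f "$manifest"
  fi
}

# Usage:
#   ensure_storage_class gp3 eks/sc.yaml
```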

Deploy the Cassandra cluster:

kubectl -n k8ssandra-operator apply -f k8s/k8ssandra/dc1.yaml

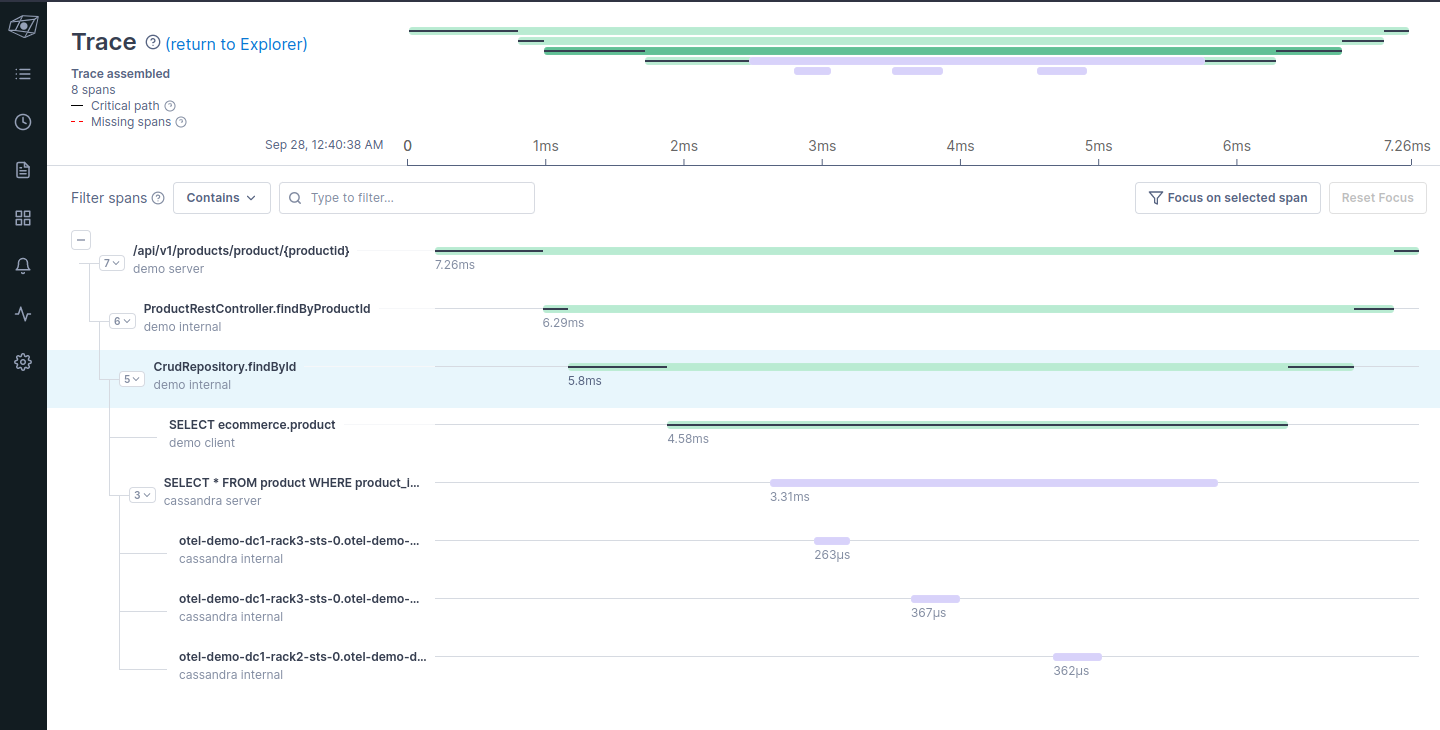
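Once the Cassandra pods are running, it is worth checking that the operator actually injected the collector sidecar. A sketch, assuming the operator's default sidecar container name `otc-container` and the pod name used later in this README (otel-demo-dc1-rack1-sts-0); `has_otel_sidecar` is a hypothetical helper.

```shell
# Hypothetical helper: succeed if the given pod has a container named
# otc-container (assumed default name of the operator-injected sidecar).
has_otel_sidecar() {
  local namespace="$1"
  local pod="$2"
  local containers
  containers="$(kubectl -n "$namespace" get pod "$pod" \
    -o jsonpath='{.spec.containers[*].name}')" || return 1
  case " $containers " in
    *" otc-container "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
#   has_otel_sidecar k8ssandra-operator otel-demo-dc1-rack1-sts-0 && echo "sidecar injected"
```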

In this demo, a modified version of "Building an E-commerce Website" is used.

The app connects to the deployed Cassandra cluster and uses OpenTelemetry context propagation for tracing.

The demo application is deployed to its own namespace, app.

Create the app namespace:

kubectl create namespace app

The OpenTelemetry project develops auto-instrumentation libraries for various programming languages and frameworks.

The Java instrumentation can automatically instrument applications that use the Spring framework or the DataStax Java driver.

The OpenTelemetry operator can inject the Java instrumentation agent into pods through configuration.

Execute the following to configure the instrumentation:

kubectl -n app apply -f k8s/app/demo-instrumentation.yaml

To deploy an OpenTelemetry collector sidecar alongside the demo application as well, create the sidecar configuration in the app namespace:

kubectl -n app apply -f k8s/opentelemetry-collector/otel-sidecar.yaml

First, get the superuser password from the Kubernetes secret:

export PASSWORD="$(kubectl -n k8ssandra-operator get secret otel-demo-superuser --template '{{ .data.password }}' | base64 -d)"

Use that password to log in to the cluster (cqlsh prompts for it):

kubectl -n k8ssandra-operator exec -it otel-demo-dc1-rack1-sts-0 -c cassandra -- cqlsh -u otel-demo-superuser

Execute the following CQL statements to create the ecommerce keyspace and the database user for the app:

otel-demo-superuser@cqlsh> CREATE KEYSPACE IF NOT EXISTS ecommerce WITH replication = {'class': 'NetworkTopologyStrategy', 'dc1': 3};

otel-demo-superuser@cqlsh> CREATE ROLE demo WITH LOGIN = true AND PASSWORD = 'xxxxxx';

otel-demo-superuser@cqlsh> GRANT ALL PERMISSIONS ON KEYSPACE ecommerce TO demo;

While you are in cqlsh, also create the tables and insert the initial data set according to this and this.
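When recreating the demo, the keyspace and role statements can also be run non-interactively through cqlsh's `-e` option. A sketch, assuming the PASSWORD variable exported above and a hypothetical DEMO_PASSWORD variable holding the app role's password; `run_cql` is a hypothetical helper. Passing passwords as command-line arguments may expose them in the process list, so treat this as a convenience for a throwaway demo cluster.

```shell
# Hypothetical helper: run one CQL statement on the first Cassandra pod as the
# superuser, using the PASSWORD variable exported earlier.
run_cql() {
  kubectl -n k8ssandra-operator exec otel-demo-dc1-rack1-sts-0 -c cassandra -- \
    cqlsh -u otel-demo-superuser -p "$PASSWORD" -e "$1"
}

# Usage (IF NOT EXISTS keeps reruns from failing):
#   run_cql "CREATE KEYSPACE IF NOT EXISTS ecommerce WITH replication = {'class': 'NetworkTopologyStrategy', 'dc1': 3};"
#   run_cql "CREATE ROLE IF NOT EXISTS demo WITH LOGIN = true AND PASSWORD = '$DEMO_PASSWORD';"
#   run_cql "GRANT ALL PERMISSIONS ON KEYSPACE ecommerce TO demo;"
```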

The demo application is configured through environment variables.

Configure the following in the k8s/app/.env file:

- Cassandra connection information

- Astra Streaming connection

- Google social login

For the Cassandra connection information, set the keyspace name, user name, and password you created in the previous step. For the latter two, see the original repository for details.

Create a Secret from the env file:

kubectl -n app create secret generic demo-app-env --from-env-file k8s/app/.env

Execute the following to deploy the demo application:

kubectl -n app apply -f k8s/app/deployment.yaml
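The operator only injects the Java agent into pods that opt in through the `instrumentation.opentelemetry.io/inject-java: "true"` annotation (presumably set in k8s/app/deployment.yaml), and it attaches the agent by adding `-javaagent` to the JAVA_TOOL_OPTIONS environment variable. A sketch that checks for this on a running pod, under those assumptions; `has_java_agent` is a hypothetical helper.

```shell
# Hypothetical helper: succeed if any container in the pod sets
# JAVA_TOOL_OPTIONS with a -javaagent flag.
has_java_agent() {
  local namespace="$1"
  local pod="$2"
  kubectl -n "$namespace" get pod "$pod" \
    -o jsonpath='{.spec.containers[*].env[?(@.name=="JAVA_TOOL_OPTIONS")].value}' |
    grep -q -- '-javaagent'
}

# Usage (pick a pod name from `kubectl -n app get pods`):
#   has_java_agent app <pod-name> && echo "Java agent injected"
```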

Since the demo application relies on in-memory sessions, the ingress controller must support sticky sessions.

In this demo, the NGINX ingress controller (ingress-nginx) is used because it supports sticky sessions:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.9.4/deploy/static/provider/aws/deploy.yaml
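Creating the Ingress right after installing the controller may be rejected while the controller's admission webhook is still starting. The ingress-nginx deployment guide suggests waiting for the controller pod first; here that command is wrapped in a function whose name, `wait_for_ingress_nginx`, is hypothetical.

```shell
# Block until the ingress-nginx controller pod is Ready (command adapted from
# the ingress-nginx deployment guide). Optional argument: timeout, default 120s.
wait_for_ingress_nginx() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout="${1:-120s}"
}
```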

Deploy the Service that routes to the application, and then deploy the Ingress:

kubectl -n app apply -f k8s/app/service.yaml

kubectl -n app apply -f k8s/app/ingress.yaml
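With ingress-nginx, cookie-based sticky sessions are enabled by the `nginx.ingress.kubernetes.io/affinity: "cookie"` annotation on the Ingress. Assuming k8s/app/ingress.yaml sets that annotation, this sketch prints it for each Ingress so you can confirm it was applied; `ingress_affinity` is a hypothetical helper.

```shell
# Hypothetical helper: print each Ingress name and its session-affinity
# annotation in the given namespace (default: app). "cookie" means sticky sessions.
ingress_affinity() {
  kubectl -n "${1:-app}" get ingress \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.annotations.nginx\.ingress\.kubernetes\.io/affinity}{"\n"}{end}'
}
```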

Wait for the external load balancer to be created:

kubectl -n app get ingress

Get the URL of the load balancer, update the Google OAuth client settings with it, and then access the demo in the browser.
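On AWS, the load balancer address appears in the Ingress status as a hostname once provisioning finishes. A sketch that polls for it, assuming a single Ingress in the app namespace; `wait_for_lb_host` is a hypothetical helper.

```shell
# Hypothetical helper: poll the first Ingress in the app namespace until its
# load balancer hostname is set, then print it. Gives up after the given
# number of attempts (default 60), sleeping 10 seconds between attempts.
wait_for_lb_host() {
  local tries="${1:-60}"
  local i=0
  local host
  while [ "$i" -lt "$tries" ]; do
    host="$(kubectl -n app get ingress \
      -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}')"
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
    i=$((i + 1))
    sleep 10
  done
  return 1
}

# Usage:
#   LB_HOST="$(wait_for_lb_host)" && echo "$LB_HOST"
```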