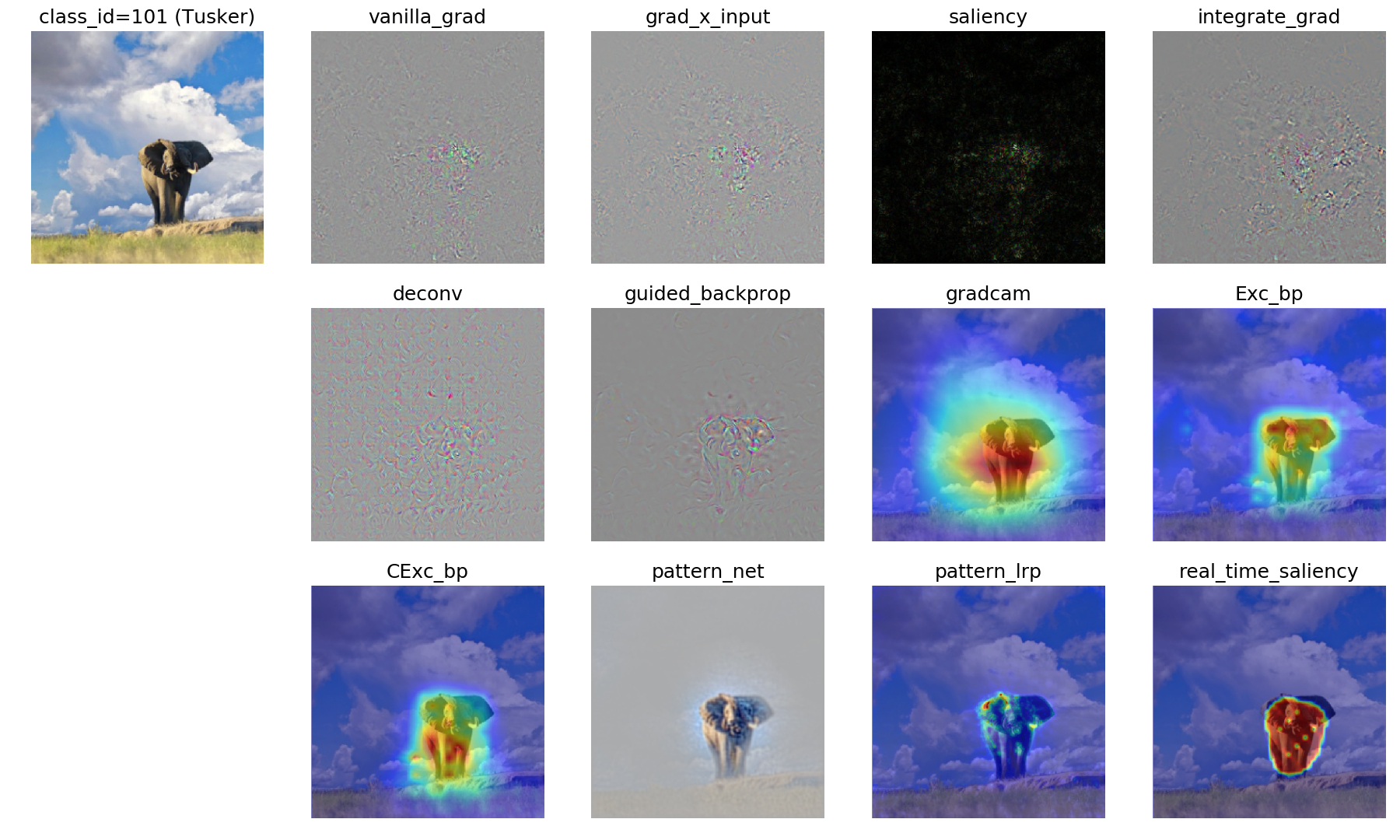

A collection of visual attribution methods for model interpretability, implemented in PyTorch.

Including:

- Vanilla Gradient Saliency

- Gradient × Input

- Integrated Gradients

- SmoothGrad

- Deconv

- Guided Backpropagation

- Excitation Backpropagation, Contrastive Excitation Backpropagation

- GradCAM

- PatternNet, PatternLRP

- Real Time Saliency

- Occlusion

- Feedback

- DeepLIFT

- Meaningful Perturbation
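The gradient-based methods in the list above (Vanilla Gradient, Grad × Input, SmoothGrad, Integrated Gradients) differ mainly in how they post-process the gradient of the class score with respect to the input. Below is a minimal sketch, assuming a hypothetical toy model (a single logistic unit with hand-picked weights `W` and bias `B`) whose gradient is written in closed form so that the example runs with the Python standard library only. It is not this repo's API: the function names and defaults (`sigma`, `n`, `steps`) are illustrative choices, and in the actual codebase the gradient would come from PyTorch autograd on a CNN. Methods that depend on network internals (Deconv, Guided Backpropagation, Excitation Backpropagation, GradCAM, and so on) are not covered here.

```python
import math
import random
from typing import List

Vector = List[float]

# Hypothetical toy "model" (an assumption for illustration, not part of this repo):
# a single logistic unit, score(x) = sigmoid(W . x + B). Its input gradient has a
# closed form, so the sketch runs without PyTorch or any autodiff library.
W: Vector = [0.8, -1.5, 0.3, 2.0]
B: float = 0.1


def score(x: Vector) -> float:
    """Class score of the toy model for input x."""
    z = sum(wi * xi for wi, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def grad(x: Vector) -> Vector:
    """Gradient of score with respect to x: s * (1 - s) * W."""
    s = score(x)
    return [s * (1.0 - s) * wi for wi in W]


def vanilla_gradient(x: Vector) -> Vector:
    """Vanilla gradient saliency: the raw input gradient."""
    return grad(x)


def grad_x_input(x: Vector) -> Vector:
    """Gradient x Input: elementwise product of gradient and input."""
    return [g * xi for g, xi in zip(grad(x), x)]


def smoothgrad(x: Vector, sigma: float = 0.15, n: int = 50, seed: int = 0) -> Vector:
    """SmoothGrad: average gradient over n Gaussian-noised copies of x."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    total = [0.0] * len(x)
    for _ in range(n):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        total = [t + g for t, g in zip(total, grad(noisy))]
    return [t / n for t in total]


def integrated_gradients(x: Vector, baseline: Vector, steps: int = 200) -> Vector:
    """Integrated Gradients via a midpoint Riemann sum along the straight path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        total = [t + g for t, g in zip(total, grad(point))]
    # Scale the averaged path gradient by (x - baseline), per input dimension.
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, total)]


if __name__ == "__main__":
    x = [1.0, -0.5, 0.5, 1.0]
    baseline = [0.0] * len(x)
    print("vanilla gradient:", vanilla_gradient(x))
    print("grad x input:    ", grad_x_input(x))
    print("smoothgrad:      ", smoothgrad(x))
    ig = integrated_gradients(x, baseline)
    # Completeness check: IG attributions should sum to score(x) - score(baseline).
    print("IG sum vs. score gap:", sum(ig), score(x) - score(baseline))
```

Integrated Gradients should satisfy completeness (its attributions sum to approximately `score(x) - score(baseline)`), which makes a convenient sanity check when comparing implementations; the midpoint sum is used here only because it converges quickly for a smooth toy model.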

Prerequisites:

- Linux

- NVIDIA GPU + CUDA (currently only supports running on GPU)

- Python 3.x

- PyTorch == 0.2.0 (sorry, I haven't tested on newer versions)

- torchvision, scikit-image (skimage), matplotlib

Installation:

- Clone this repo:

```bash
git clone git@github.com:yulongwang12/visual-attribution.git
cd visual-attribution
```

- Download pretrained weights:

```bash
cd weights
bash ./download_patterns.sh           # for using PatternNet, PatternLRP
bash ./download_realtime_saliency.sh  # for using Real Time Saliency
```

Note: I converted the Caffe bvlc_googlenet pretrained model to PyTorch format (see googlenet.py and weights/googlenet.pth).

Usage: see the notebook saliency_comparison.ipynb. If everything works, you will get the image above.

TBD

If you use our codebase or models in your research, please cite this project:

```bibtex
@misc{visualattr2018,
  author = {Yulong Wang},
  title = {Pytorch-Visual-Attribution},
  howpublished = {\url{https://github.com/yulongwang12/visual-attribution}},
  year = {2018}
}
```