Introduction

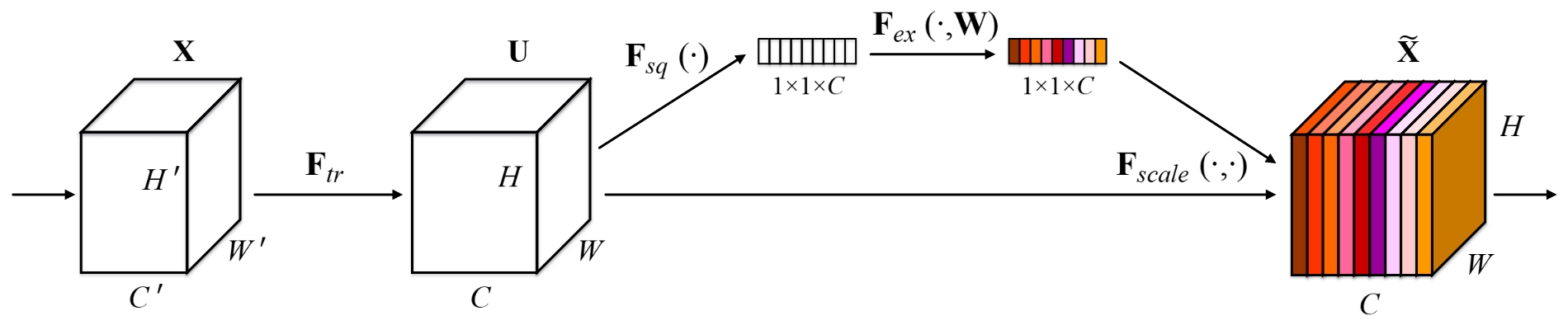

Channel attention was first introduced as the squeeze-and-excitation (SE) block for image classification. It generates a channel attention map by modeling the relationships between channels, and that map is then used to reweight the feature channels.
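To make the squeeze-and-excitation idea concrete, here is a minimal sketch in plain Python. It is not the reference implementation of any paper listed below. The function names (`squeeze`, `excite`, `se_block`), the random weights, and the reduction ratio `r` are illustrative assumptions, and the bias terms of the two fully connected layers are omitted for brevity.

```python
import math
import random

def squeeze(x):
    """Squeeze: global average pooling. x is [C][H][W]; returns C channel means."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]

def linear(w, v):
    """Matrix-vector product; w has shape [out][in]. Biases are omitted (assumption)."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]

def excite(z, w1, w2):
    """Excitation: FC -> ReLU -> FC -> sigmoid, giving one weight in (0, 1) per channel."""
    hidden = [max(0.0, h) for h in linear(w1, z)]
    return [1.0 / (1.0 + math.exp(-s)) for s in linear(w2, hidden)]

def se_block(x, w1, w2):
    """Rescale every channel of x by its attention weight."""
    weights = excite(squeeze(x), w1, w2)
    return [[[v * a for v in row] for row in ch] for ch, a in zip(x, weights)]

# Hypothetical sizes: 4 channels, 2x2 feature maps, reduction ratio r = 2.
random.seed(0)
C, H, W, r = 4, 2, 2, 2
x = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]  # C -> C/r
w2 = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]  # C/r -> C

attention = excite(squeeze(x), w1, w2)  # the channel attention map, length C
y = se_block(x, w1, w2)                 # same shape as x, channels reweighted
```

In a real network the two weight matrices are learned, and the block is usually built from a deep learning framework's pooling and linear layers rather than nested lists. The sketch only shows the data flow: one scalar per channel is computed from global statistics and then multiplied back onto that channel.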

Channel-Attention-family

2017

2018

- Image Super-Resolution Using Very Deep Residual Channel Attention Networks (ECCV). [paper][code]

- CBAM: Convolutional Block Attention Module (ECCV). [paper][keras][code]

- BAM: Bottleneck Attention Module (BMVC). [paper][code]

- Learning a Discriminative Feature Network for Semantic Segmentation (CVPR). [paper][code]

2019

- RCA-U-Net: Residual Channel Attention U-Net for Fast Tissue Quantification in Magnetic Resonance Fingerprinting (MICCAI). [paper]

- Bilinear Attention Networks for Person Retrieval (ICCV). [paper][code]

- DenseNet with Deep Residual Channel-Attention Blocks for Single Image Super Resolution (CVPR Workshop). [paper][code]