Fourier Features Let Networks Learn High Frequency Functions in Low Dimensional Domains

Project Page | Paper

Matthew Tancik\*1, Pratul P. Srinivasan\*1,2, Ben Mildenhall\*1, Sara Fridovich-Keil1, Nithin Raghavan1, Utkarsh Singhal1, Ravi Ramamoorthi3, Jonathan T. Barron2, Ren Ng1

1UC Berkeley, 2Google Research, 3UC San Diego

*denotes equal contribution

Abstract

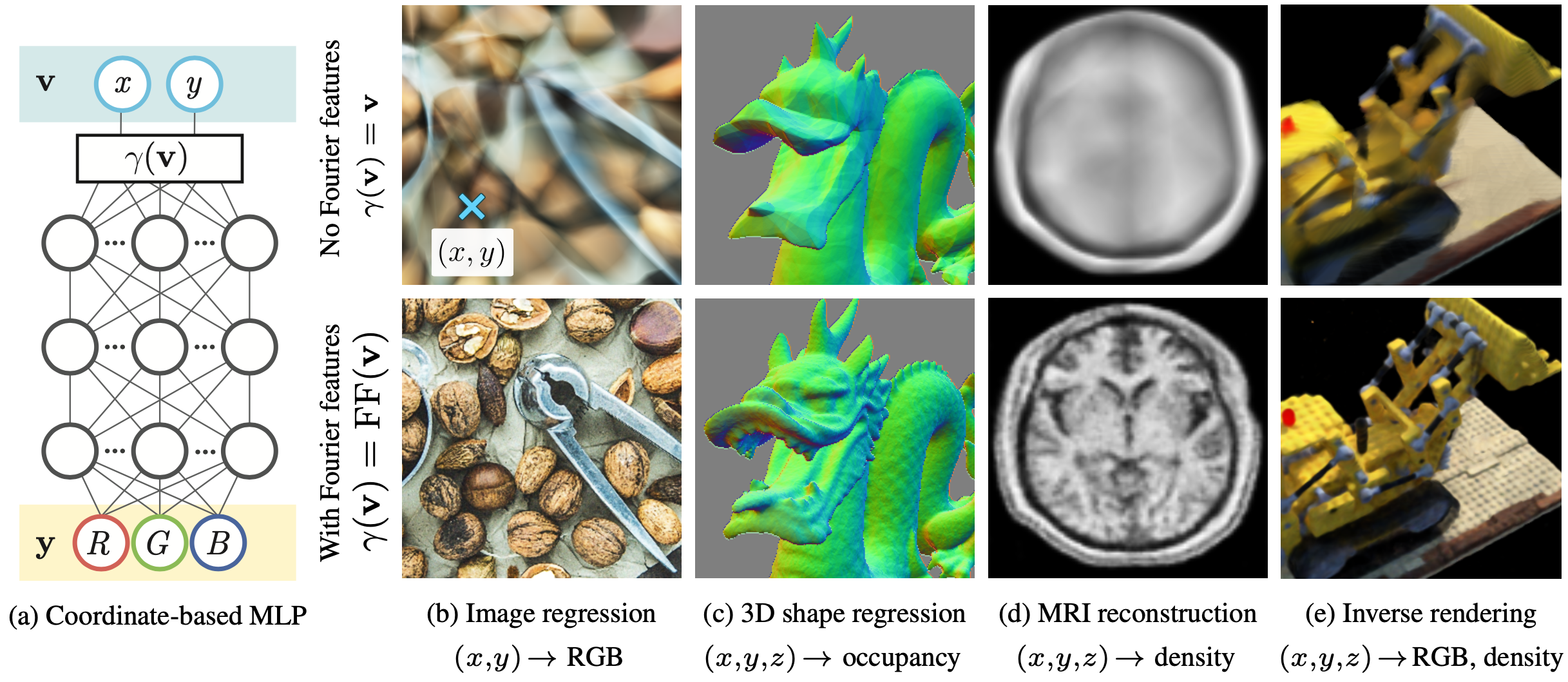

We show that passing input points through a simple Fourier feature mapping enables a multilayer perceptron (MLP) to learn high-frequency functions in low-dimensional problem domains. These results shed light on recent advances in computer vision and graphics that achieve state-of-the-art results by using MLPs to represent complex 3D objects and scenes. Using tools from the neural tangent kernel (NTK) literature, we show that a standard MLP fails to learn high frequencies both in theory and in practice. To overcome this spectral bias, we use a Fourier feature mapping to transform the effective NTK into a stationary kernel with a tunable bandwidth. We suggest an approach for selecting problem-specific Fourier features that greatly improves the performance of MLPs for low-dimensional regression tasks relevant to the computer vision and graphics communities.
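To make the mapping in the abstract concrete, here is a minimal sketch in plain Python. It assumes the Gaussian random form of the mapping, γ(v) = [cos(2πBv), sin(2πBv)], where each entry of B is drawn from N(0, scale²) and `scale` acts as the tunable bandwidth. The function names, the seed, and the values of `num_features` and `scale` are illustrative choices, not taken from the repository.

```python
import math
import random


def sample_frequency_matrix(num_features, input_dim, scale, seed=0):
    """Draw a (num_features x input_dim) matrix B with entries ~ N(0, scale^2).

    `scale` is the assumed bandwidth knob: larger values give higher frequencies.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(input_dim)]
            for _ in range(num_features)]


def fourier_features(v, B):
    """Map a low-dimensional point v to [cos(2*pi*B v), sin(2*pi*B v)]."""
    projections = [2.0 * math.pi * sum(b * x for b, x in zip(row, v))
                   for row in B]
    return [math.cos(p) for p in projections] + [math.sin(p) for p in projections]


# Hypothetical usage: embed a 2D coordinate (e.g. a pixel location in [0, 1]^2).
B = sample_frequency_matrix(num_features=4, input_dim=2, scale=10.0)
features = fourier_features([0.25, 0.75], B)
print(len(features))  # 8 values: 4 cosines followed by 4 sines
```

The resulting feature vector, rather than the raw coordinate, would be fed to the MLP. Because cos(a)cos(b) + sin(a)sin(b) = cos(a - b), the dot product of two such feature vectors depends only on the difference between the two input points, which is what makes the induced kernel stationary.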

Code

We provide a demo IPython notebook as a simple reference for the core idea. The scripts used to generate the paper plots and tables are located in the Experiments directory.