This directory contains the source code for the article: Retrosynthesis Prediction with an Iterative String Editing Model.

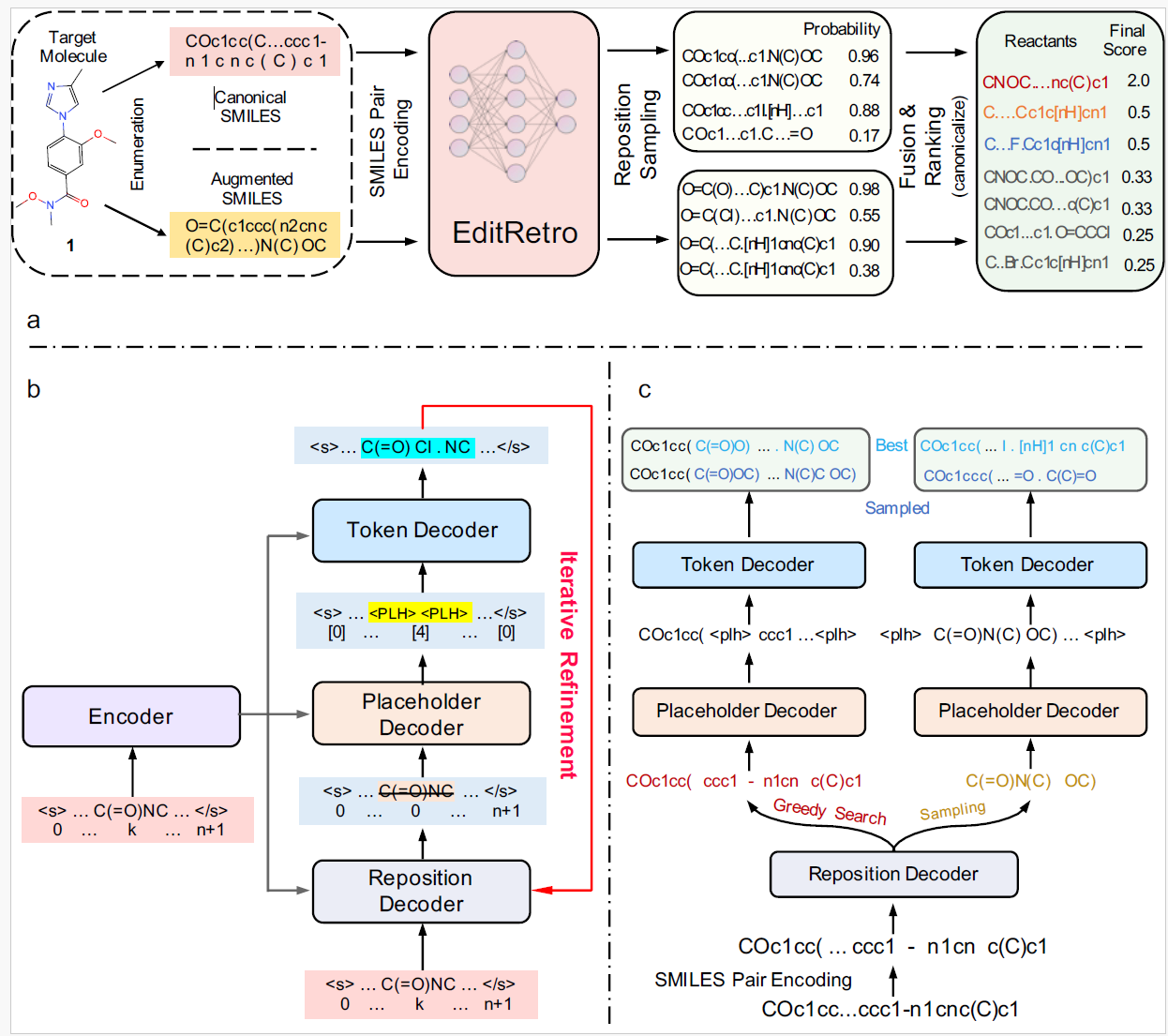

In this work, we propose a sequence edit-based retrosynthesis prediction method, called EditRetro, which formulates single-step retrosynthesis as a molecular string editing task. EditRetro offers an interpretable prediction process by performing explicit Levenshtein sequence editing operations, starting from the target product string.
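To make the idea of string editing concrete, the sketch below computes a classical character-level Levenshtein edit script between a product SMILES and a reactant SMILES. It is only an illustration: the function name `edit_operations` and the example molecules are assumptions, and the operations that EditRetro actually learns on tokenized strings may differ from these textbook insert/delete/replace steps.

```python
def edit_operations(source: str, target: str):
    """Return (distance, ops) for turning `source` into `target`.

    Textbook dynamic-programming Levenshtein distance with a backtrace.
    This is NOT the EditRetro model, only an illustration of edit scripts.
    """
    m, n = len(source), len(target)
    # dp[i][j] = minimum number of edits to turn source[:i] into target[:j]
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m + 1):
        dp[i][0] = i  # delete all i characters
    for j in range(n + 1):
        dp[0][j] = j  # insert all j characters
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            cost = 0 if source[i - 1] == target[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # delete source[i-1]
                dp[i][j - 1] + 1,         # insert target[j-1]
                dp[i - 1][j - 1] + cost,  # keep or replace
            )

    # Walk back from the bottom-right corner to recover one optimal script.
    ops = []
    i, j = m, n
    while i > 0 or j > 0:
        if i > 0 and j > 0 and source[i - 1] == target[j - 1] \
                and dp[i][j] == dp[i - 1][j - 1]:
            ops.append(("keep", source[i - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and j > 0 and dp[i][j] == dp[i - 1][j - 1] + 1:
            ops.append(("replace", source[i - 1], target[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and dp[i][j] == dp[i - 1][j] + 1:
            ops.append(("delete", source[i - 1]))
            i -= 1
        else:
            ops.append(("insert", target[j - 1]))
            j -= 1
    ops.reverse()
    return dp[m][n], ops


# Hypothetical example: methyl acetate -> acetic acid + methanol.
distance, ops = edit_operations("CC(=O)OC", "CC(=O)O.CO")
print(distance)  # 2: insert "." and insert "O"
print([op for op in ops if op[0] != "keep"])
```

Because most characters of the product are kept, the edit script is short, which is the intuition behind treating retrosynthesis as editing rather than generating the reactants from scratch.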

- Create the environment:

conda create -n editretro python=3.10.9

conda activate editretro

pip install -r requirements.txt

You can install PyTorch with the following command:

pip install torch==1.12.0+cu116 torchvision==0.13.0+cu116 torchaudio==0.12.0 --extra-index-url https://download.pytorch.org/whl/cu116
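After installing, it can help to confirm which PyTorch build is active in the environment. The following is a minimal sketch (the helper name `describe_torch` is an assumption, not part of this repository) that reports the installed version and whether CUDA is usable, and degrades gracefully when PyTorch is absent.

```python
import importlib.util


def describe_torch():
    """Report whether PyTorch is importable and, if so, its version and CUDA status."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}
    import torch  # imported lazily so the check also works without PyTorch

    return {
        "installed": True,
        "version": torch.__version__,          # version string of the installed build
        "cuda_build": torch.version.cuda,      # CUDA version the wheel was built for (None on CPU builds)
        "cuda_available": torch.cuda.is_available(),
    }


print(describe_torch())
```

If `cuda_available` is false even though a CUDA wheel was installed, the likely causes are a missing or incompatible GPU driver rather than the Python environment itself.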

- Install fairseq to enable the use of our model:

git clone https://github.com/yuqianghan/editretro.git

cd editretro/fairseq

pip install --editable ./

Remarks:

- Add export CUDA_HOME=/usr/local/cuda to your .bashrc.

- To ensure a successful installation of fairseq, install Ninja first:

sudo apt install re2c

sudo apt-get install ninja-build
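The remarks above can be checked before building fairseq. The sketch below is one way to do so; the function name `check_build_prereqs` and its parameters are assumptions introduced for illustration, and it only looks at the environment variable and the two executables mentioned above.

```python
import os
import shutil


def check_build_prereqs(env=None, which=shutil.which):
    """Check the prerequisites named in the remarks: CUDA_HOME, ninja and re2c.

    `env` and `which` are injectable so the function can be exercised
    without touching the real environment.
    """
    env = os.environ if env is None else env
    cuda_home = env.get("CUDA_HOME")
    return {
        "CUDA_HOME set": bool(cuda_home),
        "CUDA_HOME exists": bool(cuda_home) and os.path.isdir(cuda_home),
        "ninja on PATH": which("ninja") is not None,
        "re2c on PATH": which("re2c") is not None,
    }


for name, ok in check_build_prereqs().items():
    print(f"{name}: {'ok' if ok else 'MISSING'}")
```

Note that a variable exported in .bashrc only takes effect in shells started afterwards, so the check should be run from a fresh terminal.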

The original datasets used in this paper are from:

USPTO-50K: https://github.com/Hanjun-Dai/GLN (schneider50k)

USPTO-FULL: https://github.com/Hanjun-Dai/GLN (1976_Sep2016_USPTOgrants_smiles.rsmi or uspto_multi)

Remark on the USPTO_FULL dataset: the raw version of USPTO is 1976_Sep2016_USPTOgrants_smiles.rsmi. The script for cleaning and de-duplication can be found under gln/data_process/clean_uspto.py in the GLN repository. Running that script on the raw rsmi file should yield the same data split as used in the GLN paper. Alternatively, you can download the cleaned USPTO dataset released by the GLN authors (see the uspto_multi folder in their Dropbox folder).
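For readers unfamiliar with what de-duplication of reaction records involves, here is a rough sketch. It is not the GLN clean_uspto.py script and will not reproduce its split; the function name `dedupe_reactions` and the choice to ignore reagents and component order are assumptions. It relies only on the standard reaction SMILES layout `reactants>agents>products`, taken from the first whitespace-separated field of each line.

```python
def dedupe_reactions(lines):
    """Keep the first occurrence of each (reactants, products) pair.

    Illustrative only: the key ignores the agents field and the order of
    dot-separated components, and no chemical canonicalization is done.
    """
    seen = set()
    kept = []
    for line in lines:
        fields = line.split()
        if not fields:
            continue  # blank line
        parts = fields[0].split(">")
        if len(parts) != 3:
            continue  # header or malformed record, not reactants>agents>products
        reactants, _agents, products = parts
        key = (frozenset(reactants.split(".")), frozenset(products.split(".")))
        if key in seen:
            continue
        seen.add(key)
        kept.append(fields[0])
    return kept


# Hypothetical records: the second repeats the first with reordered reactants.
records = [
    "CC(=O)O.CO>>CC(=O)OC",
    "CO.CC(=O)O>[H+]>CC(=O)OC",
    "CCO.CC(=O)O>>CC(=O)OCC",
]
print(dedupe_reactions(records))
```

A production cleaner would also canonicalize each molecule (for example with RDKit) so that different spellings of the same structure are treated as equal.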

Download the raw datasets and place them in the editretro/datasets/XXX/raw folder (where XXX is the dataset name, e.g., USPTO_50K), then run the following commands to obtain the preprocessed datasets, which will be stored in editretro/datasets/XXX/aug:

- cd editretro (the root directory of the project)

- python preprocess_data.py -dataset USPTO_50K -augmentation 20 -processes 8 -spe -dropout 0

- python preprocess_data.py -dataset USPTO_FULL -augmentation 5 -processes 8 -spe -dropout 0

Then binarize the data using:

sh binarize.sh ../datasets/USPTO_50K/aug20 dict.txt

Pretrain on the prepared dataset:

sh ./scripts/0-pretrain.sh

Finetune on a specific dataset, for example, USPTO-50K:

sh ./scripts/1-finetune.sh

To generate and score the predictions on the test set with binarized data:

sh ./scripts/2-generate.sh

Our method achieves state-of-the-art performance on the USPTO-50K dataset.
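Retrosynthesis predictions are commonly scored by top-k exact-match accuracy: a test example counts as a hit if the ground-truth reactants appear among the first k ranked candidates. The sketch below shows that calculation under simplifying assumptions; the function name `top_k_accuracy` and its inputs are hypothetical, strings are compared as given, and the scoring performed by the scripts in this repository may differ (for instance by canonicalizing SMILES first).

```python
def top_k_accuracy(predictions, targets, ks=(1, 3, 5, 10)):
    """Fraction of examples whose target appears in the top-k candidates.

    predictions: list of ranked candidate lists, best candidate first.
    targets:     list of ground-truth strings, one per example.
    """
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    hits = {k: 0 for k in ks}
    for ranked, truth in zip(predictions, targets):
        for k in ks:
            if truth in ranked[:k]:
                hits[k] += 1
    total = len(targets)
    return {k: (hits[k] / total if total else 0.0) for k in ks}


# Hypothetical ranked outputs for three test products.
preds = [
    ["CC(=O)O.CO", "CC(=O)Cl.CO"],
    ["CCBr.N", "CCO.N"],
    ["c1ccccc1"],
]
truth = ["CC(=O)O.CO", "CCO.N", "c1ccccc1Br"]
print(top_k_accuracy(preds, truth, ks=(1, 2)))
```

Comparing raw strings undercounts hits whenever the same molecule is written with a different but equivalent SMILES, which is why canonicalization is usually applied before matching.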

After downloading the checkpoints for USPTO-50K and USPTO-FULL from https://drive.google.com/drive/folders/1em_I-PN-OvLXuCPfzWzRAUH-KZvSFL-U?usp=sharing, you can edit your own molecule by following ./interactive/README:

sh ./interactive/interactive_gen.sh

@article{han2024editretro,

title={Retrosynthesis Prediction with an Iterative String Editing Model},

author={Han, Yuqiang et al.},

journal={Nature Communications},

year={2024}

}

Our code is based on Facebook's fairseq (version 0.9.0), as modified in https://github.com/weijia-xu/fairseq-editor and https://github.com/nedashokraneh/fairseq-editor.

Should you have any questions, please contact Yuqiang Han at hyq2015@zju.edu.cn.