The Stock SEC Data Dashboard project builds a dashboard from SEC data and doubles as a learning exercise: it introduces users to a range of tools, helps them develop new skills, and may assist others working on similar data projects. The same pipeline can be adapted to other kinds of data, such as social network interactions, financial trading data, or Common Crawl data.

The project workflow is divided into several key steps:

- AWS Infrastructure Setup: This step involves configuring Amazon Web Services (AWS) resources, including Amazon S3 for object storage and Amazon Redshift for data warehousing. We use Terraform for infrastructure-as-code to quickly set up these resources.

-

Configuration: A configuration file (

configuration.conf) is created to store project-specific details like AWS credentials, Redshift connection information, and other configurations required for data extraction and loading. -

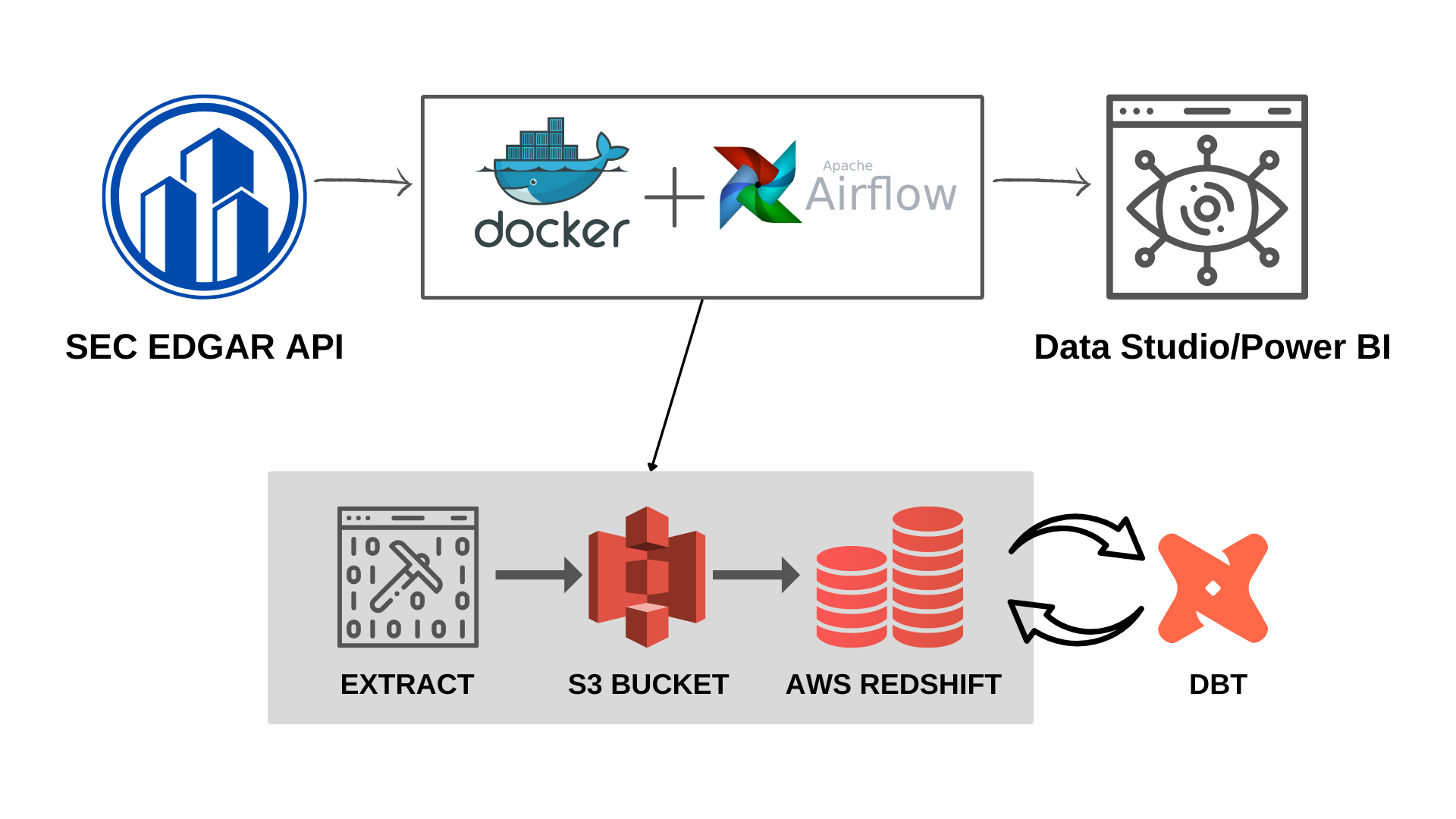

Docker & Airflow Setup: Apache Airflow is used to orchestrate the pipeline. Docker is employed to create and manage containers, simplifying the setup process. This step ensures that the necessary containers and services are running to facilitate data extraction, transformation, and loading.

- Data Extraction and Loading: Within the Airflow environment, a DAG (Directed Acyclic Graph) named `sec_data_pipeline` is defined. This DAG automates extracting data from the SEC, uploading it to an S3 bucket, and loading it into Amazon Redshift. Tasks in this DAG use the `BashOperator` to run Python scripts for data processing.

- Data Transformation with dbt (Optional): This optional step uses dbt to connect to the data warehouse and perform data transformations. While not a core part of the project, it offers an opportunity to explore dbt and build skills in data transformation.

- Visualization with BI Tools (Optional): The project also allows for connecting Business Intelligence (BI) tools to the data warehouse to create visualizations. Google Data Studio (since renamed Looker Studio) is recommended, but users are free to choose other BI tools based on their preferences and requirements.
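
The ordering that the `sec_data_pipeline` DAG enforces can be sketched in plain Python. This is not Airflow code and not the project's DAG file: the task ids and script names below are hypothetical, and the standard library's `graphlib` stands in for Airflow's scheduler only to show how the extract, upload, and load steps depend on one another.

```python
from graphlib import TopologicalSorter

# Hypothetical task ids and script names, for illustration only.
# Each value is the shell command a task would run.
COMMANDS = {
    "extract_sec_data": "python extract_sec_data.py",
    "upload_to_s3": "python upload_to_s3.py",
    "load_to_redshift": "python load_to_redshift.py",
}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "extract_sec_data": set(),
    "upload_to_s3": {"extract_sec_data"},
    "load_to_redshift": {"upload_to_s3"},
}


def run_order(dependencies):
    """Return task ids in an order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for task_id in run_order(DEPENDENCIES):
        print(f"{task_id}: {COMMANDS[task_id]}")
```

In an Airflow DAG file, each entry would typically become a `BashOperator` whose `bash_command` is the string shown, and the same ordering would be declared with the `>>` operator.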

To begin using the Stock SEC Data Dashboard project, follow the steps below. For more detailed instructions, visit the project starter.

- AWS Setup: Create a personal AWS account if you don't already have one. Secure your account by enabling multi-factor authentication (MFA), and set up an IAM user with admin permissions. Configure the AWS CLI with your credentials.

- Infrastructure Setup: Use Terraform to set up AWS resources for S3, Redshift, and related components. Customize the configuration in `variables.tf` to match your project requirements.

- Configuration: Create a `configuration.conf` file in the `airflow/pipeline` directory to store project-specific details such as AWS configurations, Redshift credentials, and bucket names.

- Docker & Airflow Setup: Install Docker and Docker Compose on your machine. Initialize the Airflow environment using Docker Compose, and ensure that the necessary containers are up and running. Access the Airflow web interface at http://localhost:8080 and monitor the `sec_data_pipeline` DAG.

- Data Extraction and Loading: The `sec_data_pipeline` DAG runs automatically to extract data from the SEC, upload it to S3, and load it into Redshift. Monitor the DAG's status in the Airflow UI.

- Optional Steps: Explore optional steps such as data transformation with dbt and visualization with BI tools to enhance your project further.
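
As a rough illustration of the Configuration step, the pipeline scripts could read `configuration.conf` with Python's built-in `configparser`. This sketch assumes the file is INI-style; the section names, key names, and values below are hypothetical placeholders, not the project's actual schema.

```python
import configparser

# Hypothetical contents: section names, keys, and values are assumptions.
SAMPLE_CONF = """
[aws_config]
bucket_name = example-sec-data-bucket
aws_region = us-east-1

[redshift_config]
hostname = example-cluster.redshift.amazonaws.com
port = 5439
database = dev
username = example_user
"""


def load_settings(conf_text):
    """Parse INI-style text and return the values a pipeline script needs."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    return {
        "bucket_name": parser.get("aws_config", "bucket_name"),
        "aws_region": parser.get("aws_config", "aws_region"),
        "redshift_host": parser.get("redshift_config", "hostname"),
        # getint converts the stored string to an integer.
        "redshift_port": parser.getint("redshift_config", "port"),
        "redshift_database": parser.get("redshift_config", "database"),
        "redshift_username": parser.get("redshift_config", "username"),
    }


if __name__ == "__main__":
    settings = load_settings(SAMPLE_CONF)
    print(settings["bucket_name"], settings["redshift_port"])
```

To load the real file instead of the inline sample, `parser.read()` accepts a path such as `airflow/pipeline/configuration.conf`.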

- Project Structure: The project's structure includes directories for infrastructure (Terraform), configuration (AWS and Airflow), data extraction (Python scripts), and optional steps like dbt and BI tools integration.

- Customization: Feel free to customize the project by modifying configurations, adding new data sources, or integrating additional tools as needed.

- Harshit Sharma

This project is licensed under the MIT License.