This repository holds the PyTorch implementation of Construct Dynamic Graphs for Hand Gesture Recognition via Spatial-Temporal Attention by Yuxiao Chen, Long Zhao, Xi Peng, Jianbo Yuan, and Dimitris N. Metaxas.

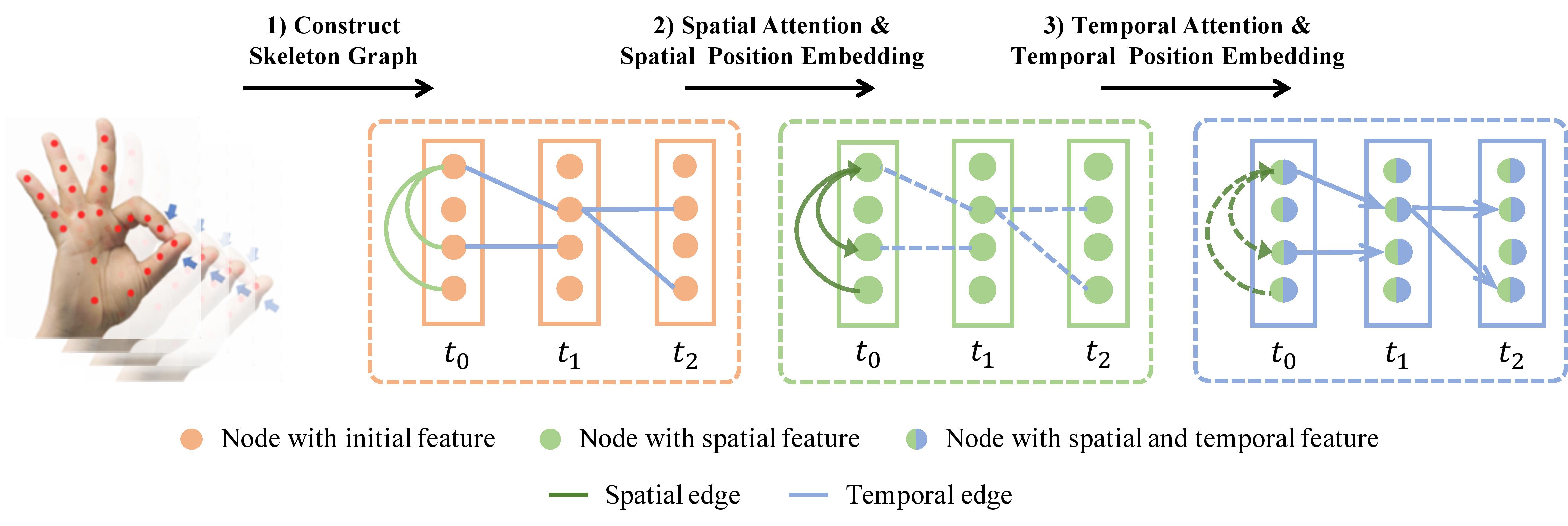

We propose a Dynamic Graph-Based Spatial-Temporal Attention (DG-STA) method for hand gesture recognition. The key idea is to first construct a fully-connected graph from a hand skeleton, where the node features and edges are then automatically learned via a self-attention mechanism that operates in both the spatial and temporal domains. This repository provides the code for training our approach for skeleton-based hand gesture recognition on the DHG-14/28 Dataset and the SHREC’17 Track Dataset.
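To make the idea of attention over separate spatial and temporal domains more concrete, here is a minimal pure-Python sketch. It is not taken from this repository's code. It assumes that every (frame, joint) pair is a node of the fully-connected graph, that spatial attention is restricted to nodes in the same frame, that temporal attention is restricted to nodes in different frames, and that both keep self-links. The names `domain_masks` and `masked_softmax` are hypothetical and exist only for this illustration.

```python
import math


def domain_masks(num_frames, num_joints):
    """Build boolean spatial and temporal masks over all (frame, joint) nodes.

    Assumption of this sketch: node index = frame * num_joints + joint.
    Spatial links join nodes of the same frame, temporal links join nodes
    of different frames, and both masks keep the self-link.
    """
    n = num_frames * num_joints
    spatial = [[False] * n for _ in range(n)]
    temporal = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            same_frame = (i // num_joints) == (j // num_joints)
            spatial[i][j] = same_frame
            temporal[i][j] = (not same_frame) or i == j
    return spatial, temporal


def masked_softmax(scores, mask_row):
    """Softmax over one row of attention scores, ignoring masked-out nodes."""
    kept = [s for s, m in zip(scores, mask_row) if m]
    peak = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) if m else 0.0 for s, m in zip(scores, mask_row)]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example: 2 frames with 3 joints each gives 6 nodes.
spatial, temporal = domain_masks(num_frames=2, num_joints=3)

# Attention weights of node 0 (frame 0, joint 0) in the spatial domain:
# only the three nodes of frame 0 receive non-zero weight.
weights = masked_softmax([0.5, 1.0, -0.2, 0.3, 0.0, 2.0], spatial[0])
```

In a full model the scores would come from learned query and key projections of the node features, and the resulting weights would play the role of the learned edges of the graph.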

This package has the following requirements:

- Python 3.6
- PyTorch v1.0.1

- Download the DHG-14/28 Dataset or the SHREC’17 Track Dataset.

- Set the path to your downloaded dataset folder in `/util/DHG_parse_data.py` (line 2) or `/util/SHREC_parse_data.py` (line 5).

- Set the path for saving your trained models in `train_on_DHG.py` (line 117) or `train_on_SHREC.py` (line 109).

- Run one of the following commands.

python train_on_SHREC.py # on SHREC’17 Track Dataset

python train_on_DHG.py # on DHG-14/28 Dataset

If you find this code useful in your research, please consider citing:

@inproceedings{chenBMVC19dynamic,

author = {Chen, Yuxiao and Zhao, Long and Peng, Xi and Yuan, Jianbo and Metaxas, Dimitris N.},

title = {Construct Dynamic Graphs for Hand Gesture Recognition via Spatial-Temporal Attention},

booktitle = {BMVC},

year = {2019}

}

Part of our code is borrowed from the PyTorch implementation of the Transformer. We thank the authors for releasing their code.