🔥🔥🔥 CCMB: A Large-scale Chinese Cross-modal Benchmark (ACM MM 2023)

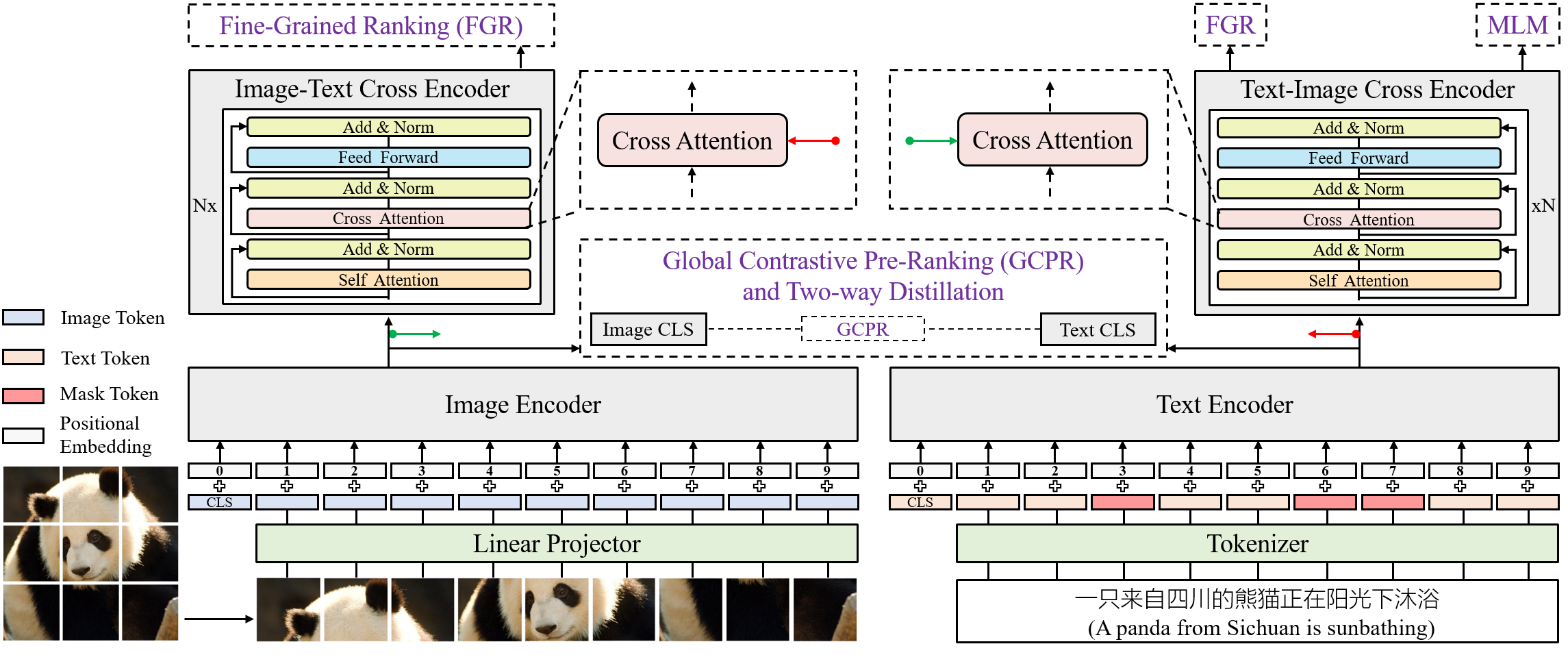

This repo is the official implementation of CCMB and R2D2.

CCMB is available. It includes a pre-training dataset (Zero) and 5 downstream datasets. A detailed introduction and the download links are at http://zero.so.com. The 250M data is at https://pan.baidu.com/s/1gnNbjOdCQdqZ4bRNN1S-Vw?pwd=iau8.

R2D2 is a vision-language framework. We release the following code and models:

✅Pre-trained checkpoints.

✅Inference demo.

✅Fine-tuning code and checkpoints for Image-Text Retrieval and Image-Text Matching tasks.

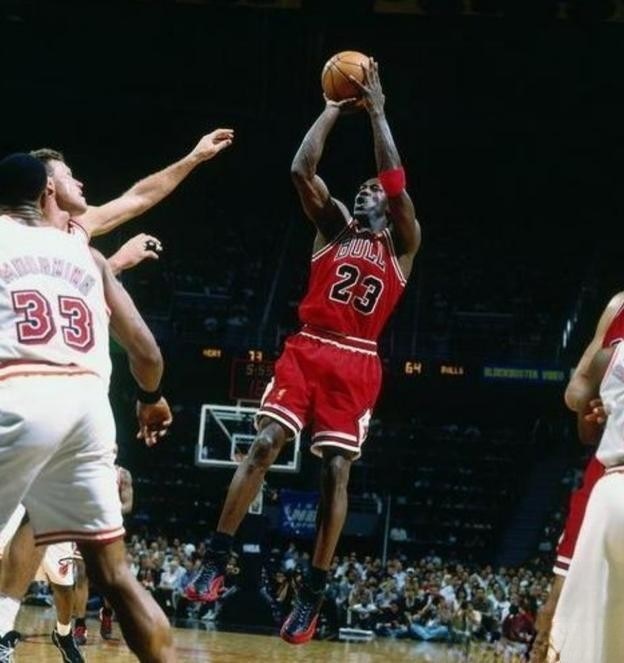

We show the performance of R2D2ViT-L fine-tuned on the Flickr30k-CNA dataset. For each image-text pair, R2D2 outputs a similarity score between 0 and 1.

| 中文 (English) | 乔丹投篮 (Jordan shot) | 乔丹运球 (Jordan dribble) | 詹姆斯投篮 (James shot) |
|---|---|---|---|
| Similarity score | 0.99033021 | 0.91078649 | 0.61231128 |
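As a minimal sketch of how such scores might be used downstream, the snippet below ranks the three candidate captions from the table by similarity and flags those above a cut-off. The `rank_captions` helper and the 0.9 threshold are illustrative assumptions and are not part of the R2D2 code; the scores are copied from the table.

```python
from typing import Dict, List, Tuple

# Similarity scores copied from the table (caption -> score in [0, 1]).
SCORES: Dict[str, float] = {
    "乔丹投篮": 0.99033021,    # Jordan shot
    "乔丹运球": 0.91078649,    # Jordan dribble
    "詹姆斯投篮": 0.61231128,  # James shot
}


def rank_captions(
    scores: Dict[str, float], threshold: float = 0.9
) -> List[Tuple[str, float, bool]]:
    """Sort captions by descending score and flag those at or above the threshold.

    The threshold is a hypothetical cut-off chosen for illustration only.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(caption, score, score >= threshold) for caption, score in ranked]


if __name__ == "__main__":
    for caption, score, is_match in rank_captions(SCORES):
        label = "match" if is_match else "no match"
        print(f"{caption}\t{score:.4f}\t{label}")
```

A suitable threshold depends on the task and should be tuned on held-out data rather than taken from this example.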

Install the dependencies:

pip install -r requirements.txt

| Pre-trained image-text pairs | R2D2ViT-L | PRD2ViT-L |
|---|---|---|
| 250M | Download | Download |
| 23M | Download | - |

| Dataset | R2D2ViT-B(23M) |
|---|---|
| Flickr-CNA | Download |
| IQR | Download |
| ICR | Download |
| IQM | Download |
| ICM | Download |

- To evaluate the pretrained R2D2 model on image-text pairs, run:

python r2d2_inference_demo.py

- To evaluate the pretrained PRD2 model on image-text pairs, run:

python prd2_inference_demo.py

- Download datasets and pretrained models.

For the ICR, IQR, ICM, and IQM tasks, after downloading you should see the following folder structure:

    ├── Flickr30k-images
    ├── IQR_IQM_ICR_ICM_images
    │   ├── IQR
    │   │   ├── train
    │   │   └── val
    │   ├── ICR
    │   │   ├── train
    │   │   └── val
    │   ├── IQM
    │   │   ├── train
    │   │   └── val
    │   └── ICM
    │       ├── train
    │       └── val

For Flickr30k-CNA, after downloading you should see the following folder structure:

    ├── train
    ├── val
    └── test

- In config/retrieval_*.yaml, set the paths to the dataset and the pre-trained model.

- Run fine-tuning for the Image-Text Retrieval task.

sh train_r2d2_retrieval.sh

- Run fine-tuning for the Image-Text Matching task.

sh train_r2d2_matching.sh
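Before launching the fine-tuning scripts, it can help to confirm that the downloaded data matches the folder layout described in the steps above. The following is a minimal sketch, not part of this repo: `missing_dirs` is a hypothetical helper, and it assumes that `Flickr30k-images` and `IQR_IQM_ICR_ICM_images` sit side by side under a single data root (the `./data` default is also an assumption).

```python
import sys
from pathlib import Path
from typing import List

# Task and split folders expected under IQR_IQM_ICR_ICM_images.
TASKS = ("IQR", "ICR", "IQM", "ICM")
SPLITS = ("train", "val")


def missing_dirs(data_root: str) -> List[str]:
    """Return the expected sub-folders that do not exist under data_root.

    Assumes both top-level image folders live directly under data_root.
    """
    root = Path(data_root)
    expected = [root / "Flickr30k-images"]
    expected += [
        root / "IQR_IQM_ICR_ICM_images" / task / split
        for task in TASKS
        for split in SPLITS
    ]
    return [p.relative_to(root).as_posix() for p in expected if not p.is_dir()]


if __name__ == "__main__":
    # "./data" is a placeholder; pass your own data root as the first argument.
    data_root = sys.argv[1] if len(sys.argv) > 1 else "./data"
    missing = missing_dirs(data_root)
    if missing:
        print("Missing folders:")
        for name in missing:
            print(f"  {name}")
    else:
        print("Folder layout looks complete.")
```

The check only verifies that the folders exist; it does not validate their contents or the paths set in config/retrieval_*.yaml.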

If you find this dataset and code useful for your research, please consider citing.

    @inproceedings{xie2023ccmb,
      title={CCMB: A Large-scale Chinese Cross-modal Benchmark},
      author={Xie, Chunyu and Cai, Heng and Li, Jincheng and Kong, Fanjing and Wu, Xiaoyu and Song, Jianfei and Morimitsu, Henrique and Yao, Lin and Wang, Dexin and Zhang, Xiangzheng and others},
      booktitle={Proceedings of the 31st ACM International Conference on Multimedia},
      pages={4219--4227},
      year={2023}
    }