W-HMR: Human Mesh Recovery in World Space with Weak-supervised Camera Calibration and Orientation Correction

W-HMR is a method for estimating human body pose and shape in world space. From a monocular image, it predicts the SMPL body model in both camera and world coordinates. Camera Calibration predicts the focal length for better mesh-image alignment, and Orientation Correction makes the recovered body physically reasonable in world space.
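To illustrate why a predicted focal length matters for mesh-image alignment, and what "camera coordinates" versus "world coordinates" means for the body's root orientation, here is a minimal sketch using a generic pinhole camera. It is not W-HMR's implementation. It assumes camera-frame points with +z pointing forward, square pixels, and a principal point at the image centre; the function names `rodrigues`, `project_full_perspective` and `camera_to_world_orient`, as well as the camera-to-world rotation `R_cam2world`, are hypothetical.

```python
import numpy as np


def rodrigues(axis_angle):
    """Convert an axis-angle vector (3,) to a 3x3 rotation matrix."""
    axis_angle = np.asarray(axis_angle, dtype=float)
    theta = np.linalg.norm(axis_angle)
    if theta < 1e-8:
        return np.eye(3)
    k = axis_angle / theta
    # Skew-symmetric cross-product matrix of the unit rotation axis.
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def project_full_perspective(points_cam, focal, img_w, img_h):
    """Pinhole projection of camera-frame points (N, 3) to pixel coordinates (N, 2).

    Assumes square pixels and a principal point at the image centre.
    """
    points_cam = np.asarray(points_cam, dtype=float)
    x, y, z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    u = focal * x / z + img_w / 2.0
    v = focal * y / z + img_h / 2.0
    return np.stack([u, v], axis=1)


def camera_to_world_orient(global_orient_cam, R_cam2world):
    """Rotate the body's root orientation (axis-angle, camera frame) into the world frame."""
    return np.asarray(R_cam2world, dtype=float) @ rodrigues(global_orient_cam)
```

With a wrong focal length, the same 3D joints project to different pixels. This is the misalignment that focal-length prediction aims to reduce. The world-frame orientation depends only on the root rotation and the camera's pose relative to the world.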

This implementation:

- includes the demo code for W-HMR, implemented in PyTorch.

[March 21, 2024] Released code and pretrained weights for the demo.

[March 26, 2024] Pre-processed labels are now available.

[April 24, 2024] Fixed some import bugs and a loading error.

[April 24, 2024] Released more required files and pre-processed labels.

- Release demo code.
- Release pre-processed labels.
- Release evaluation code.
- Release training code.

W-HMR has been implemented and tested on Ubuntu 18.04 with Python 3.8.

Install the requirements listed in environment.yml.
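After creating the environment (for example with `conda env create -f environment.yml`), a quick sanity check can confirm that the interpreter matches the tested Python 3.8 and that key packages are importable. This is a minimal sketch. The helper name `check_environment` and the default package list are illustrative assumptions, and environment.yml remains the authoritative list of requirements.

```python
import importlib.util
import platform
import sys


def check_environment(required_python=(3, 8), packages=("torch", "numpy")):
    """Report whether the interpreter and selected packages match the tested setup."""
    report = {
        "python": platform.python_version(),
        "python_ok": sys.version_info[:2] == tuple(required_python),
    }
    for name in packages:
        # find_spec returns None (without importing the package) when it is missing.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```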

💃 If you have any difficulty configuring your environment or run into any bugs, please let me know. I'd be happy to help!

First, download the required data (i.e., our trained model and the SMPL model parameters) from Google Drive or Baidu Netdisk. It is approximately 700 MB. Unzip it into the repository root. Then, running the demo is as simple as:

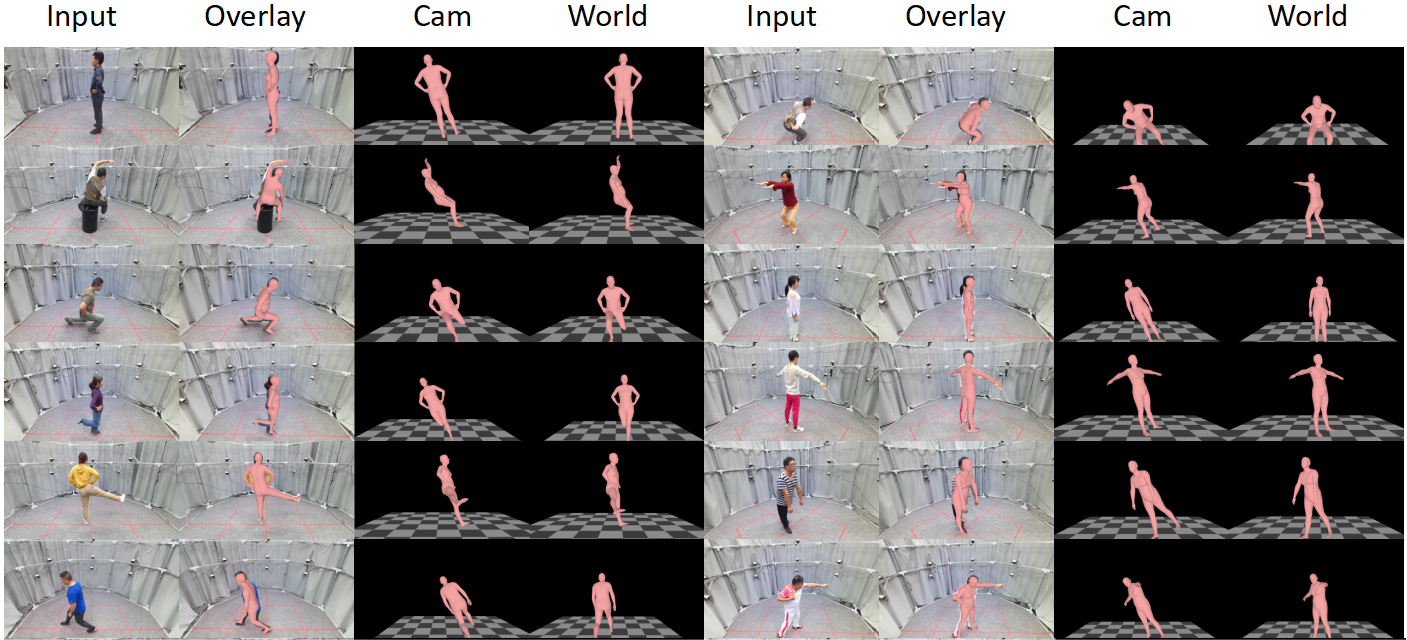

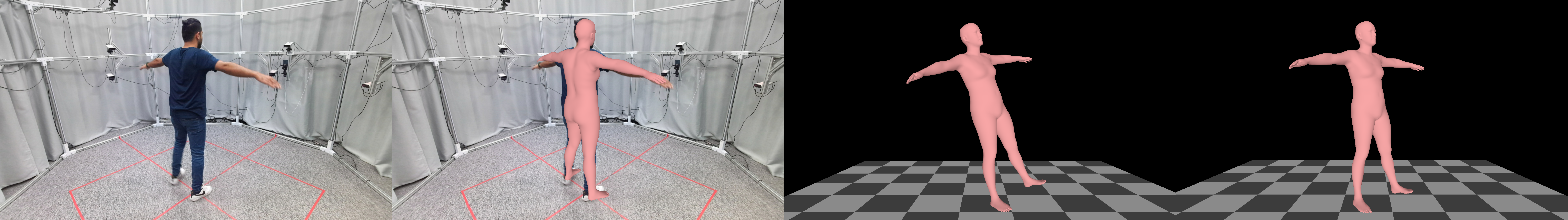

python demo/whmr_demo.py --image_folder data/sample_images --output_folder output/sample_imagesSample demo output:

On the right are the outputs in camera and world coordinates. We place them in world space and add ground planes to show how accurate the recovered orientation is.
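One simple way to produce such a ground plane is to place a square quad at the lowest point of the world-frame mesh. With a correct global orientation, the body then stands upright on it, and an orientation error shows up as a visible lean relative to the plane. The following is a minimal sketch of that idea, not the authors' visualization code. The function `ground_plane_from_mesh` and its parameters `up_axis`, `up_sign` and `size` are hypothetical, and the default assumes a y-up world frame.

```python
import numpy as np


def ground_plane_from_mesh(vertices_world, up_axis=1, size=2.0, up_sign=1.0):
    """Build a square ground quad touching the lowest point of a world-frame mesh.

    vertices_world: (N, 3) mesh vertices in world coordinates.
    up_axis: index of the world "up" axis (1 = y, assumed by default).
    up_sign: +1 if larger values point up along that axis, -1 if they point down.
    Returns a (4, 3) array of quad corners, centred under the mesh.
    """
    vertices_world = np.asarray(vertices_world, dtype=float)
    heights = up_sign * vertices_world[:, up_axis]
    floor = up_sign * heights.min()  # coordinate of the lowest vertex along the up axis
    center = vertices_world.mean(axis=0)
    a, b = [i for i in range(3) if i != up_axis]  # the two in-plane axes
    half = size / 2.0
    corners = np.zeros((4, 3))
    for k, (da, db) in enumerate([(-1, -1), (1, -1), (1, 1), (-1, 1)]):
        corners[k, a] = center[a] + da * half
        corners[k, b] = center[b] + db * half
        corners[k, up_axis] = floor
    return corners
```

The resulting quad can be passed, as two triangles, to whichever mesh renderer is already used for the body.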

Training instructions will follow after publication.

All the data used in our paper is publicly available. You can download each dataset from its official website, following the dataset descriptions in our paper.

For your convenience, I also provide some download links for pre-processed labels here.

The most important source is PyMAF. From it you can download the pre-processed labels of 3DPW, COCO, LSP, MPII and MPI-INF-3DHP, which include pseudo 3D joint labels fitted by EFT.
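Before training, it can help to check what each downloaded label file contains. The sketch below assumes the labels are NumPy `.npz` archives, the convention used by PyMAF-style `dataset_extras` folders. The exact keys vary by dataset and are not listed here, and the helper name `summarize_label_file` is hypothetical.

```python
from pathlib import Path

import numpy as np


def summarize_label_file(path):
    """Return {key: (shape, dtype)} for every array stored in a .npz label file."""
    # allow_pickle=True because some label files may store object arrays.
    with np.load(path, allow_pickle=True) as data:
        return {key: (data[key].shape, str(data[key].dtype)) for key in data.files}


if __name__ == "__main__":
    for npz_path in sorted(Path("data/dataset_extras").glob("*.npz")):
        print(npz_path.name)
        for key, (shape, dtype) in summarize_label_file(npz_path).items():
            print(f"  {key}: shape={shape}, dtype={dtype}")
```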

We also use some augmented data from CLIFF and HMR 2.0.

I also processed labels for some other datasets (e.g., AGORA and HuMMan); you can download them from Google Drive or Baidu Netdisk.

Unzip dataset_extras.zip and put the files in the ./data/dataset_extras/ folder.

For training W-HMR for global mesh recovery, I added pseudo-labels of global pose to some datasets. You can download them from Google Drive or Baidu Netdisk, then unzip the archive and put the files in the ./data/dataset_extras/ folder as well.
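If you prefer to script this step, a minimal sketch with Python's standard `zipfile` module could look like the following. The helper name `extract_to_extras` is hypothetical. If an archive wraps its contents in a top-level folder, the files will land in a subfolder of ./data/dataset_extras/ and may need to be moved up one level.

```python
import zipfile
from pathlib import Path


def extract_to_extras(zip_path, dest="data/dataset_extras"):
    """Unzip an archive into the dataset_extras folder and return the extracted file paths."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)
        # Skip directory entries; keep only regular files.
        names = [n for n in zf.namelist() if not n.endswith("/")]
    return [dest / n for n in names]


if __name__ == "__main__":
    for path in extract_to_extras("dataset_extras.zip"):
        print(path)
```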

Part of the code is borrowed from the following projects: PyMAF, AGORA, PyMAF-X, PARE, SPEC, MeshGraphormer, 4D-Humans and ViTPose. Many thanks for their contributions.

If you find this repository useful, please consider citing our paper and starring the repository:

@article{yao2023w,
  title={W-HMR: Human Mesh Recovery in World Space with Weak-supervised Camera Calibration and Orientation Correction},
  author={Yao, Wei and Zhang, Hongwen and Sun, Yunlian and Tang, Jinhui},
  journal={arXiv preprint arXiv:2311.17460},
  year={2023}
}