Bending Reality: Distortion-aware Transformers for Adapting to Panoramic Semantic Segmentation, CVPR 2022, [PDF].

Behind Every Domain There is a Shift: Adapting Distortion-aware Vision Transformers for Panoramic Semantic Segmentation, arXiv preprint, [PDF].

- [08/2022] A new panoramic semantic segmentation benchmark, SynPASS, is released.
- [08/2022] Trans4PASS+ is released.

```bash
conda create -n trans4pass python=3.8
conda activate trans4pass
cd ~/path/to/trans4pass
conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=11.1 -c pytorch -c conda-forge
pip install mmcv-full==1.3.9 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.8.0/index.html
pip install -r requirements.txt
python setup.py develop --user
```
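Because the environment is pinned to specific versions, a small standard-library check can confirm what was actually installed. This is a hypothetical convenience script, not part of the repository; it reads distribution metadata via importlib.metadata (Python 3.8+) and assumes the distribution names torch, torchvision, torchaudio and mmcv-full.

```python
"""Hypothetical check: compare installed package versions with the pinned ones."""
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List

# Versions pinned in the installation commands (assumed distribution names).
PINS = {
    "torch": "1.8.0",
    "torchvision": "0.9.0",
    "torchaudio": "0.8.0",
    "mmcv-full": "1.3.9",
}


def version_mismatches(pins: Dict[str, str]) -> List[str]:
    """Return one human-readable line per missing or mismatching package."""
    problems = []
    for dist, wanted in pins.items():
        try:
            found = version(dist)
        except PackageNotFoundError:
            problems.append(f"{dist}: not installed (expected {wanted})")
            continue
        # Wheels may carry a local suffix such as "+cu111"; compare the public part only.
        if found.split("+")[0] != wanted:
            problems.append(f"{dist}: found {found}, expected {wanted}")
    return problems


if __name__ == "__main__":
    issues = version_mismatches(PINS)
    print("environment matches the pins" if not issues else "\n".join(issues))
```

Run it inside the activated trans4pass environment; apex is left out because it is optional.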

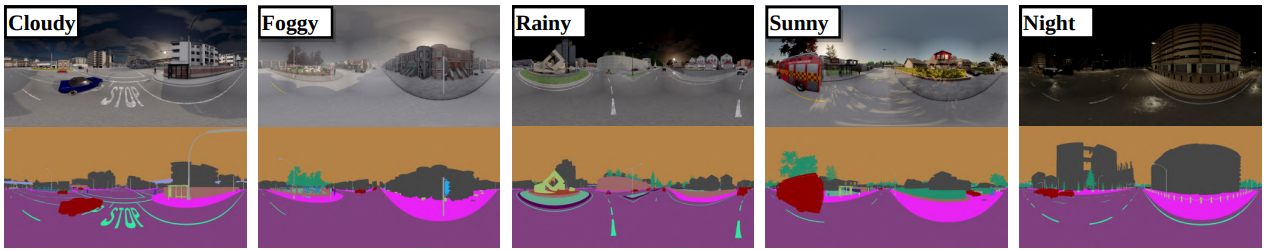

# Optional: install apex follow: https://github.com/NVIDIA/apexSynPASS dataset contains 9080 panoramic images (1024x2048) and 22 categories.

The scenes include cloudy, foggy, rainy, sunny, and day-/night-time conditions.

The SynPASS dataset is now available on Google Drive.

SynPASS statistics:

| | Cloudy | Foggy | Rainy | Sunny | ALL |
|---|---|---|---|---|---|
| Split | train/val/test | train/val/test | train/val/test | train/val/test | train/val/test |
| #Frames | 1420/420/430 | 1420/430/420 | 1420/430/420 | 1440/410/420 | 5700/1690/1690 |
| Split | day/night | day/night | day/night | day/night | day/night |
| #Frames | 1980/290 | 1710/560 | 2040/230 | 1970/300 | 7700/1380 |
| Total | 2270 | 2270 | 2270 | 2270 | 9080 |
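As a quick consistency check, the per-condition split sizes in the statistics table can be summed to reproduce the ALL column and the Total row. The snippet only re-encodes the published numbers; it does not read the dataset itself.

```python
# Split sizes copied from the SynPASS statistics table:
# (train, val, test) and (day, night) frame counts per weather condition.
SPLITS = {
    "Cloudy": ((1420, 420, 430), (1980, 290)),
    "Foggy": ((1420, 430, 420), (1710, 560)),
    "Rainy": ((1420, 430, 420), (2040, 230)),
    "Sunny": ((1440, 410, 420), (1970, 300)),
}
FRAMES_PER_CONDITION = 2270


def column_totals(splits):
    """Check every condition sums to FRAMES_PER_CONDITION and return the ALL column."""
    for name, (tvt, day_night) in splits.items():
        assert sum(tvt) == FRAMES_PER_CONDITION, f"{name}: train/val/test mismatch"
        assert sum(day_night) == FRAMES_PER_CONDITION, f"{name}: day/night mismatch"
    tvt_all = tuple(sum(col) for col in zip(*(tvt for tvt, _ in splits.values())))
    dn_all = tuple(sum(col) for col in zip(*(dn for _, dn in splits.values())))
    return tvt_all, dn_all


tvt_all, dn_all = column_totals(SPLITS)
print(tvt_all, dn_all, sum(tvt_all))  # (5700, 1690, 1690) (7700, 1380) 9080
```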

Prepare datasets:

```text
datasets/
├── cityscapes
│   ├── gtFine
│   └── leftImg8bit
├── Stanford2D3D
│   ├── area_1
│   ├── area_2
│   ├── area_3
│   ├── area_4
│   ├── area_5a
│   ├── area_5b
│   └── area_6
├── Structured3D
│   ├── scene_00000
│   ├── ...
│   └── scene_00199
├── SynPASS
│   ├── img
│   │   ├── cloud
│   │   ├── fog
│   │   ├── rain
│   │   └── sun
│   └── semantic
│       ├── cloud
│       ├── fog
│       ├── rain
│       └── sun
└── DensePASS
    ├── gtFine
    └── leftImg8bit
```
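Before training, it can help to verify that the folders match the layout above. The following is a hypothetical helper (not shipped with the repository) that assumes the datasets live under ./datasets; Structured3D is only checked at the top level because the tree elides the individual scene folders.

```python
from pathlib import Path
from typing import List

# Folders taken from the dataset tree; Structured3D scenes are elided there,
# so only the top-level folder is checked.
EXPECTED_DIRS = (
    ["cityscapes/gtFine", "cityscapes/leftImg8bit"]
    + [f"Stanford2D3D/{area}" for area in
       ("area_1", "area_2", "area_3", "area_4", "area_5a", "area_5b", "area_6")]
    + ["Structured3D"]
    + [f"SynPASS/{kind}/{weather}" for kind in ("img", "semantic")
       for weather in ("cloud", "fog", "rain", "sun")]
    + ["DensePASS/gtFine", "DensePASS/leftImg8bit"]
)


def missing_dirs(root: str = "datasets", expected: List[str] = EXPECTED_DIRS) -> List[str]:
    """Return the expected sub-folders that do not exist under `root`."""
    base = Path(root)
    return [rel for rel in expected if not (base / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    print("dataset layout OK" if not missing else "missing:\n  " + "\n  ".join(missing))
```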

Prepare the pretrained weights, which are available in the public SegFormer repository:

```text
pretrained/
├── mit_b1.pth
└── mit_b2.pth
```

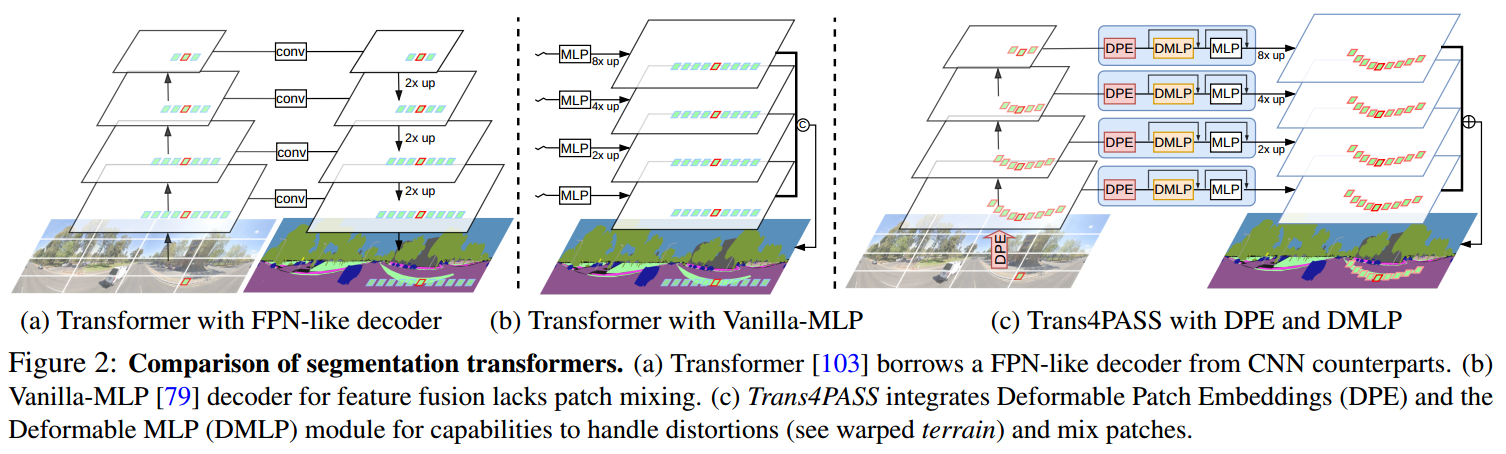

The network pipeline is implemented in segmentron/models/trans4pass.py.

The backbone is implemented in segmentron/models/backbones/trans4pass.py.

The DMLP decoder is implemented in segmentron/modules/dmlp.py.

The DMLPv2 decoder is implemented in segmentron/modules/dmlp2.py.

For example, to run the experiments on four 1080Ti GPUs:

```bash
# Trans4PASS
python -m torch.distributed.launch --nproc_per_node=4 tools/train_cs.py --config-file configs/cityscapes/trans4pass_tiny_512x512.yaml
python -m torch.distributed.launch --nproc_per_node=4 tools/train_s2d3d.py --config-file configs/stanford2d3d/trans4pass_tiny_1080x1080.yaml
# Trans4PASS+ (please modify the version in segmentron/models/trans4pass.py first)
python -m torch.distributed.launch --nproc_per_node=4 tools/train_cs.py --config-file configs/cityscapes/trans4pass_plus_tiny_512x512.yaml
python -m torch.distributed.launch --nproc_per_node=4 tools/train_sp.py --config-file configs/synpass/trans4pass_plus_tiny_512x512.yaml
python -m torch.distributed.launch --nproc_per_node=4 tools/train_s2d3d.py --config-file configs/stanford2d3d/trans4pass_plus_tiny_1080x1080.yaml
```

Download the models from Google Drive and save them in the ./workdirs folder as follows:

```text
./workdirs
├── cityscapes
│   ├── trans4pass_plus_small_512x512
│   ├── trans4pass_plus_tiny_512x512
│   ├── trans4pass_small_512x512
│   └── trans4pass_tiny_512x512
├── cityscapes13
│   ├── trans4pass_plus_small_512x512
│   ├── trans4pass_plus_tiny_512x512
│   ├── trans4pass_small_512x512
│   └── trans4pass_tiny_512x512
├── stanford2d3d
│   ├── trans4pass_plus_small_1080x1080
│   ├── trans4pass_plus_tiny_1080x1080
│   ├── trans4pass_small_1080x1080
│   └── trans4pass_tiny_1080x1080
├── stanford2d3d8
│   ├── trans4pass_plus_small_1080x1080
│   ├── trans4pass_plus_tiny_1080x1080
│   ├── trans4pass_small_1080x1080
│   └── trans4pass_tiny_1080x1080
├── stanford2d3d_pan
│   ├── trans4pass_plus_small_1080x1080
│   ├── trans4pass_small_1080x1080
│   └── trans4pass_tiny_1080x1080
├── structured3d8
│   ├── trans4pass_plus_small_512x512
│   ├── trans4pass_plus_tiny_512x512
│   ├── trans4pass_small_512x512
│   └── trans4pass_tiny_512x512
├── synpass
│   ├── trans4pass_plus_small_512x512
│   ├── trans4pass_plus_tiny_512x512
│   ├── trans4pass_small_512x512
│   └── trans4pass_tiny_512x512
└── synpass13
    ├── trans4pass_plus_small_512x512
    ├── trans4pass_plus_tiny_512x512
    ├── trans4pass_small_512x512
    └── trans4pass_tiny_512x512
```
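The workdirs sub-folders mirror the config paths, so one config name identifies both the training script and the checkpoint folder. The sketch below is a hypothetical helper that encodes this pairing as read off the example commands; the mapping and the configs/&lt;dataset&gt;/&lt;name&gt;.yaml to workdirs/&lt;dataset&gt;/&lt;name&gt; convention are assumptions, and the TEST_MODEL_PATH set in each config remains the authoritative checkpoint location.

```python
from pathlib import PurePosixPath
from typing import List

# Hypothetical mapping (not part of the repo): training script per config folder,
# taken from the example training commands.
TRAIN_SCRIPTS = {
    "cityscapes": "tools/train_cs.py",
    "stanford2d3d": "tools/train_s2d3d.py",
    "synpass": "tools/train_sp.py",
}


def workdir_for(config_file: str, root: str = "workdirs") -> str:
    """Assumed convention: configs/<dataset>/<name>.yaml -> <root>/<dataset>/<name>."""
    cfg = PurePosixPath(config_file)
    return str(PurePosixPath(root) / cfg.parent.name / cfg.stem)


def train_command(config_file: str, nproc_per_node: int = 4) -> List[str]:
    """Build the distributed launch command used in the training examples."""
    dataset = PurePosixPath(config_file).parent.name
    if dataset not in TRAIN_SCRIPTS:
        raise KeyError(f"no training script listed for configs/{dataset}")
    return ["python", "-m", "torch.distributed.launch",
            f"--nproc_per_node={nproc_per_node}", TRAIN_SCRIPTS[dataset],
            "--config-file", config_file]


cfg = "configs/cityscapes/trans4pass_plus_tiny_512x512.yaml"
print(" ".join(train_command(cfg)))
print(workdir_for(cfg))  # workdirs/cityscapes/trans4pass_plus_tiny_512x512
```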

Some test examples:

```bash
# Trans4PASS
python tools/eval.py --config-file configs/cityscapes/trans4pass_tiny_512x512.yaml
python tools/eval_s2d3d.py --config-file configs/stanford2d3d/trans4pass_tiny_1080x1080.yaml
# Trans4PASS+
python tools/eval.py --config-file configs/cityscapes/trans4pass_plus_tiny_512x512.yaml
python tools/eval_sp.py --config-file configs/synpass/trans4pass_plus_tiny_512x512.yaml
python tools/eval_dp13.py --config-file configs/synpass13/trans4pass_plus_tiny_512x512.yaml
python tools/eval_s2d3d.py --config-file configs/stanford2d3d/trans4pass_plus_tiny_1080x1080.yaml
```

From Cityscapes to DensePASS:

```bash
python tools/eval.py --config-file configs/cityscapes/trans4pass_plus_tiny_512x512.yaml
```

| Network | CS | DP | Download |
|---|---|---|---|
| Trans4PASS (T) | 72.49 | 45.89 | model |
| Trans4PASS (S) | 72.84 | 51.38 | model |
| Trans4PASS+ (T) | 72.67 | 50.23 | model |
| Trans4PASS+ (S) | 75.24 | 51.41 | model |

From Stanford2D3D_pinhole to Stanford2D3D_panoramic:

```bash
python tools/eval_s2d3d.py --config-file configs/stanford2d3d/trans4pass_plus_tiny_1080x1080.yaml
```

| Network | SPin | SPan | Download |
|---|---|---|---|
| Trans4PASS (T) | 49.05 | 46.08 | model |
| Trans4PASS (S) | 50.20 | 48.34 | model |
| Trans4PASS+ (T) | 48.99 | 46.75 | model |
| Trans4PASS+ (S) | 53.46 | 50.35 | model |

Supervised training on Stanford2D3D_panoramic:

```bash
# modify the fold id in segmentron/data/dataloader/stanford2d3d_pan.py
# modify TEST_MODEL_PATH in configs/stanford2d3d_pan/trans4pass_plus_small_1080x1080.yaml
python tools/eval_s2d3d.py --config-file configs/stanford2d3d_pan/trans4pass_plus_small_1080x1080.yaml
```

| Network | Fold | SPan | Download |
|---|---|---|---|
| Trans4PASS+ (S) | 1 | 54.05 | model |
| Trans4PASS+ (S) | 2 | 47.70 | model |
| Trans4PASS+ (S) | 3 | 60.25 | model |
| Trans4PASS+ (S) | avg | 54.00 | |
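The avg row is the unweighted mean of the three folds, (54.05 + 47.70 + 60.25) / 3 = 54.00. A short check using the per-fold values from the table:

```python
from statistics import mean

# Per-fold SPan mIoU (%) of Trans4PASS+ (S), copied from the fold table.
fold_miou = {1: 54.05, 2: 47.70, 3: 60.25}

avg = mean(fold_miou.values())
print(f"avg over {len(fold_miou)} folds: {avg:.2f}")  # avg over 3 folds: 54.00
```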

Note: for the Trans4PASS versions (not Trans4PASS+), please switch to the corresponding DMLP version; check here.

Supervised training on SynPASS:

```bash
python tools/eval_sp.py --config-file configs/synpass/trans4pass_plus_tiny_512x512.yaml
```

| Network | Cloudy | Foggy | Rainy | Sunny | Day | Night | ALL (val) | ALL (test) | Download |
|---|---|---|---|---|---|---|---|---|---|
| Trans4PASS (T) | 46.90 | 41.97 | 41.61 | 45.52 | 44.48 | 34.73 | 43.68 | 38.53 | model |
| Trans4PASS (S) | 46.74 | 43.49 | 43.39 | 45.94 | 45.52 | 37.03 | 44.80 | 38.57 | model |
| Trans4PASS+ (T) | 48.33 | 43.41 | 43.11 | 46.99 | 46.52 | 35.27 | 45.21 | 38.85 | model |
| Trans4PASS+ (S) | 48.87 | 44.80 | 45.24 | 47.62 | 47.17 | 37.96 | 46.47 | 39.16 | model |

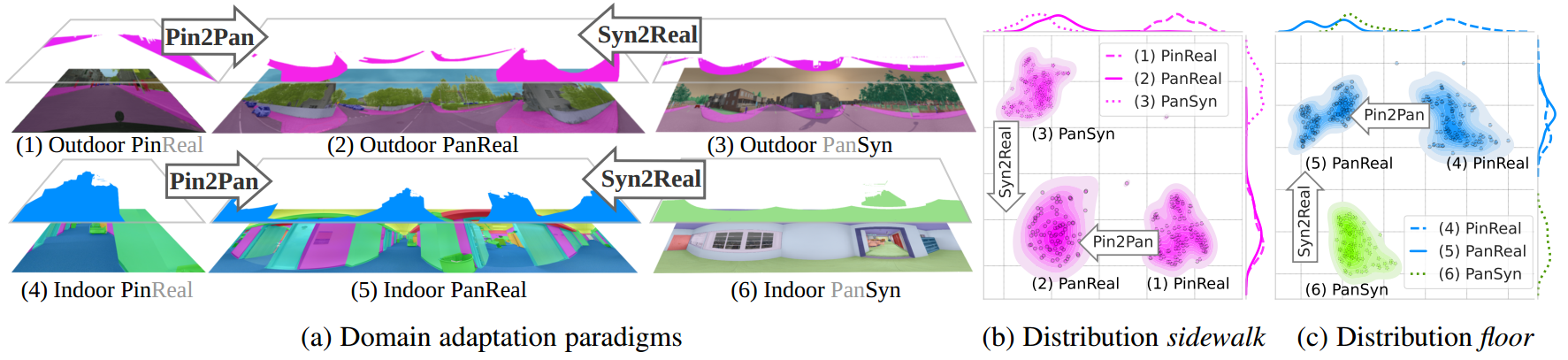

- (1) Outdoor Pin2Pan: CS13 -> DP13
- (2) Outdoor Syn2Real: SP13 -> DP13
- (3) Indoor Pin2Pan: SPin8 -> SPan8
- (4) Indoor Syn2Real: S3D8 -> SPan8

```bash
python tools/eval_dp13.py --config-file configs/synpass13/trans4pass_plus_tiny_512x512.yaml
python tools/eval_dp13.py --config-file configs/cityscapes13/trans4pass_plus_tiny_512x512.yaml
python tools/eval_s2d3d8.py --config-file configs/structured3d8/trans4pass_plus_tiny_512x512.yaml
python tools/eval_s2d3d8.py --config-file configs/stanford2d3d8/trans4pass_plus_tiny_1080x1080.yaml
```

| (1) Outdoor Pin2Pan: | CS13 | DP13 | mIoU Gaps | Download |
|---|---|---|---|---|
| Trans4PASS (Tiny) | 71.63 | 49.21 | -22.42 | model |
| Trans4PASS (Small) | 75.21 | 50.96 | -24.25 | model |
| Trans4PASS+ (Tiny) | 72.92 | 49.16 | -23.76 | model |
| Trans4PASS+ (Small) | 74.52 | 51.40 | -23.12 | model |
| (2) Outdoor Syn2Real: | SP13 | DP13 | mIoU Gaps | |
| Trans4PASS (Tiny) | 61.08 | 39.68 | -21.40 | model |
| Trans4PASS (Small) | 62.76 | 43.18 | -19.58 | model |
| Trans4PASS+ (Tiny) | 60.37 | 39.62 | -20.75 | model |
| Trans4PASS+ (Small) | 61.59 | 43.17 | -18.42 | model |
| (3) Indoor Pin2Pan: | SPin8 | SPan8 | mIoU Gaps | |
| Trans4PASS (Tiny) | 64.25 | 58.93 | -5.32 | model |
| Trans4PASS (Small) | 66.51 | 62.39 | -4.12 | model |
| Trans4PASS+ (Tiny) | 65.09 | 59.55 | -5.54 | model |
| Trans4PASS+ (Small) | 65.29 | 63.08 | -2.21 | model |
| (4) Indoor Syn2Real: | S3D8 | SPan8 | mIoU Gaps | |
| Trans4PASS (Tiny) | 76.84 | 48.63 | -28.21 | model |
| Trans4PASS (Small) | 77.29 | 51.70 | -25.59 | model |
| Trans4PASS+ (Tiny) | 76.91 | 50.60 | -26.31 | model |
| Trans4PASS+ (Small) | 76.88 | 51.93 | -24.95 | model |
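The scores in these tables are mIoU (%), and each mIoU gap is the target-domain score minus the source-domain score, e.g. 49.21 - 71.63 = -22.42 for Trans4PASS (Tiny) on CS13 -> DP13. The sketch below uses the standard IoU = TP / (TP + FP + FN) definition on a confusion matrix; it is a generic NumPy illustration, not the repository's evaluation code, which may differ in details such as ignore-label handling.

```python
import numpy as np


def miou_from_confusion(conf: np.ndarray) -> float:
    """Mean IoU (%) from a KxK confusion matrix (rows = ground truth, cols = prediction).

    IoU_c = TP_c / (TP_c + FP_c + FN_c); classes absent from both ground truth and
    prediction give 0/0 = NaN and are skipped by nanmean.
    """
    conf = conf.astype(np.float64)
    tp = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / union
    return float(np.nanmean(iou) * 100)


def miou_gap(source_miou: float, target_miou: float) -> float:
    """Gap as reported in the tables: target-domain mIoU minus source-domain mIoU."""
    return round(target_miou - source_miou, 2)


print(f"{miou_from_confusion(np.array([[3, 1], [0, 4]])):.2f}")  # 77.50
print(miou_gap(71.63, 49.21))  # -22.42 (Trans4PASS (Tiny), CS13 -> DP13)
```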

After training on the source domain, the model can be further trained with an adversarial method as a warm-up on the target domain:

```bash
cd adaptations
python train_warm.py
```

Then, use the warm-up model to generate pseudo labels for the target domain:

```bash
python gen_pseudo_label.py
```
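A common way to build pseudo labels is to keep only confident predictions and mark the rest as "ignore". The sketch below illustrates that generic confidence-threshold scheme in NumPy; gen_pseudo_label.py may use a different rule (for example class-wise thresholds), and the threshold of 0.9 and the ignore label 255 are assumptions.

```python
import numpy as np

IGNORE_INDEX = 255  # assumed "ignore" label id; check the value the dataloaders use


def confident_pseudo_label(logits: np.ndarray, threshold: float = 0.9,
                           ignore_index: int = IGNORE_INDEX) -> np.ndarray:
    """Turn (K, H, W) class logits into an (H, W) pseudo label.

    Pixels whose max softmax probability is below `threshold` get `ignore_index`,
    so they do not contribute to the training loss on the target domain.
    """
    z = logits - logits.max(axis=0, keepdims=True)  # numerically stable softmax
    probs = np.exp(z) / np.exp(z).sum(axis=0, keepdims=True)
    label = probs.argmax(axis=0).astype(np.uint8)
    label[probs.max(axis=0) < threshold] = ignore_index
    return label


logits = np.array([[[5.0, 0.1]],
                   [[0.0, 0.0]]])  # K=2 classes, H=1, W=2
print(confident_pseudo_label(logits).tolist())  # [[0, 255]]
```

The first pixel is kept (probability about 0.99 for class 0), while the second is ambiguous (about 0.52) and is ignored.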

The proposed MPA method can then be applied to perform domain adaptation:

```bash
# (optional) python train_ssl.py
python train_mpa.py
```

Domain adaptation with Trans4PASS+, e.g., adapting a model trained on pinhole images to panoramic images, i.e., from Cityscapes13 to DensePASS13 (CS13 -> DP13):

```bash
python train_warm_out_p2p.py
python train_mpa_out_p2p.py
```

Download the models from Google Drive and save them in the adaptations/snapshots folder as follows:

```text
adaptations/snapshots/
├── CS2DensePASS_Trans4PASS_v1_MPA
│   └── BestCS2DensePASS_G.pth
├── CS2DensePASS_Trans4PASS_v2_MPA
│   └── BestCS2DensePASS_G.pth
├── CS132CS132DP13_Trans4PASS_plus_v2_MPA
│   └── BestCS132DP13_G.pth
├── CS2DP_Trans4PASS_plus_v1_MPA
│   └── BestCS2DensePASS_G.pth
└── CS2DP_Trans4PASS_plus_v2_MPA
    └── BestCS2DensePASS_G.pth
```

Set RESTORE_FROM in evaluate.py to the checkpoint you want to evaluate.

Optionally, change the scales in evaluate.py for multi-scale evaluation.
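Multi-scale evaluation generally means running the network on several rescaled copies of each image and averaging the predictions at the original resolution. The sketch below illustrates that idea in NumPy, assuming softmax averaging and nearest-neighbour resizing (real pipelines typically use bilinear interpolation); the scale values, the model interface and the toy model are illustrative and not the ones used in evaluate.py.

```python
import numpy as np


def resize_nearest(arr: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of a (C, H, W) array."""
    _, h, w = arr.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return arr[:, rows[:, None], cols[None, :]]


def softmax(x: np.ndarray, axis: int = 0) -> np.ndarray:
    z = np.exp(x - x.max(axis=axis, keepdims=True))
    return z / z.sum(axis=axis, keepdims=True)


def multi_scale_predict(model, image: np.ndarray, scales=(0.75, 1.0, 1.25)) -> np.ndarray:
    """Average class probabilities over rescaled copies of a (C, H, W) image.

    `model` maps a (C, h, w) array to (K, h, w) logits. Each prediction is resized
    back to the input resolution before averaging; returns an (H, W) label map.
    """
    _, h, w = image.shape
    avg = 0.0
    for s in scales:
        scaled = resize_nearest(image, max(1, round(h * s)), max(1, round(w * s)))
        avg = avg + resize_nearest(softmax(model(scaled)), h, w)
    return np.argmax(avg / len(scales), axis=0)


def toy_model(x: np.ndarray) -> np.ndarray:
    """Toy two-class model: class 1 wins wherever input channel 0 exceeds 0.5."""
    return np.stack([np.full(x.shape[1:], 0.5), x[0]])


image = np.zeros((3, 8, 8))
image[0, :, 4:] = 1.0  # right half "bright"
pred = multi_scale_predict(toy_model, image)
print(pred[0].tolist())  # [0, 0, 0, 0, 1, 1, 1, 1]
```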

```bash
# Trans4PASS
cd adaptations
python evaluate.py
# Trans4PASS+, CS132DP13
python evaluate_out13.py
```

We appreciate the previous open-source works.

This repository is under the Apache-2.0 license. For commercial use, please contact the authors.

If you are interested in this work, please cite the following works:

Trans4PASS+ [PDF]

```bibtex
@article{zhang2022behind,
  title={Behind Every Domain There is a Shift: Adapting Distortion-aware Vision Transformers for Panoramic Semantic Segmentation},
  author={Zhang, Jiaming and Yang, Kailun and Shi, Hao and Rei{\ss}, Simon and Peng, Kunyu and Ma, Chaoxiang and Fu, Haodong and Wang, Kaiwei and Stiefelhagen, Rainer},
  journal={arXiv preprint arXiv:2207.11860},
  year={2022}
}
```

Trans4PASS [PDF]

```bibtex
@inproceedings{zhang2022bending,
  title={Bending Reality: Distortion-aware Transformers for Adapting to Panoramic Semantic Segmentation},
  author={Zhang, Jiaming and Yang, Kailun and Ma, Chaoxiang and Rei{\ss}, Simon and Peng, Kunyu and Stiefelhagen, Rainer},
  booktitle={2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages={16917--16927},
  year={2022}
}
```