This repository provides an unofficial but complete reproduction of the paper "Objects that Sound" (ECCV 2018). 😊

We implement this work in PyTorch with Python 3.6, and we strongly recommend using Ubuntu 16.04.

Training the models on 48,000 videos took less than 20 hours on an Intel i7-9700K CPU and an RTX 2080 Ti GPU.

For detailed package requirements, please refer to requirements.txt.

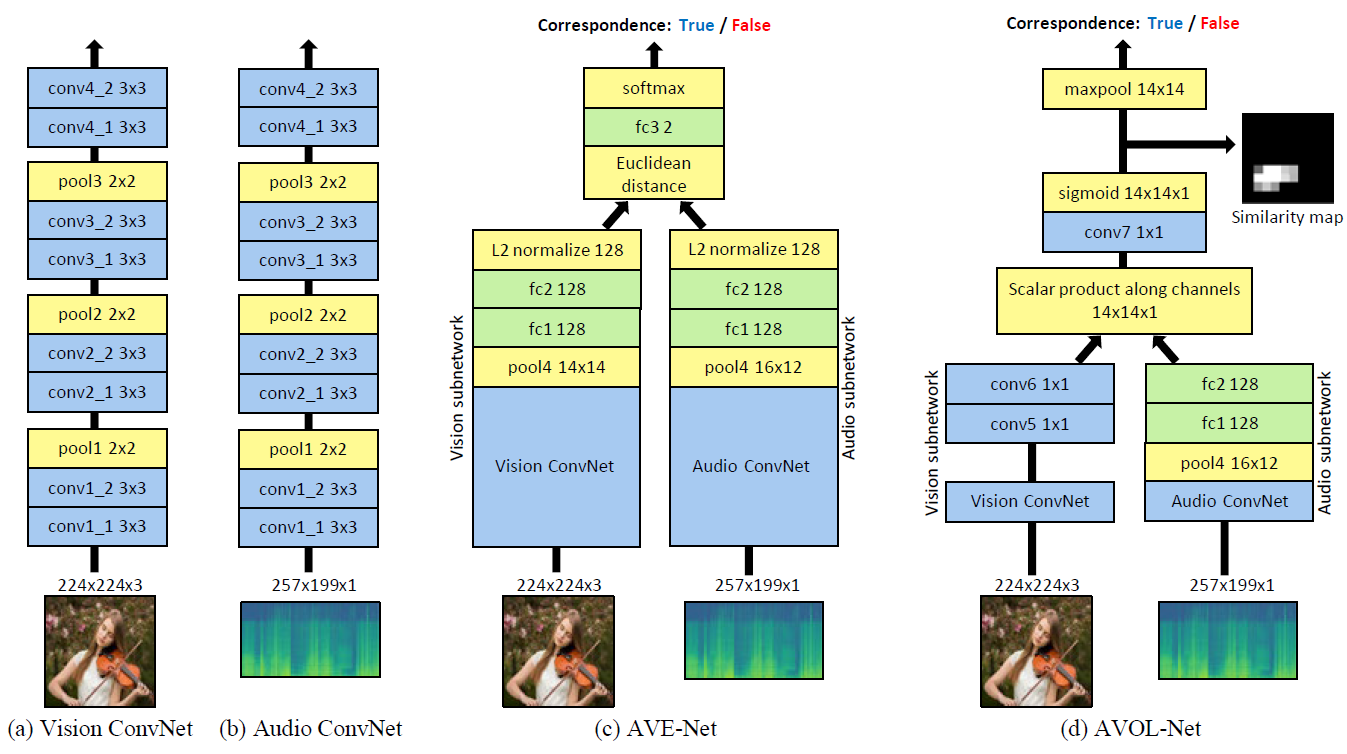

We implement AVE-Net, AVOL-Net, and L3-Net, one of the baseline models.
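The sketch below contrasts how the two proposed architectures turn embeddings into a correspondence score, based on our reading of the paper. It is written in pure Python and is not code from this repository, which uses PyTorch. AVE-Net compares the L2-normalized image and audio embeddings only through their Euclidean distance. AVOL-Net scores every spatial location of an image feature grid against the audio embedding, calibrates and squashes those scores into a heatmap, and max-pools the heatmap into one score.

All function names, toy vectors, and the weight, bias, and scale values are hypothetical. In the real models these values come from learned layers. Normalizing the AVOL-Net grid cells is also an assumption of this sketch. The L3-Net baseline is not covered.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    """Scale a vector to unit L2 norm; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return list(v)
    return [x / norm for x in v]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ave_net_distance(img_emb: Vector, aud_emb: Vector) -> float:
    """AVE-Net-style fusion: Euclidean distance between L2-normalized embeddings."""
    a, b = l2_normalize(img_emb), l2_normalize(aud_emb)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def ave_net_correspond_prob(distance: float, w: float = -4.0, b: float = 2.0) -> float:
    """Probability that image and audio correspond, given only their distance.

    A 1->2 linear layer followed by a 2-way softmax reduces to a sigmoid of an
    affine function of the distance; w and b are HYPOTHETICAL stand-ins for
    learned weights.
    """
    return sigmoid(w * distance + b)


def avol_net_localize(
    image_grid: Sequence[Sequence[Vector]],
    aud_emb: Vector,
    scale: float = 1.0,
    bias: float = 0.0,
) -> Tuple[List[List[float]], float]:
    """AVOL-Net-style localization on an H x W grid of image embeddings.

    Each cell is scored by its dot product with the audio embedding (a cosine
    similarity here, since both sides are L2-normalized). The score is then
    calibrated by a scale and bias (HYPOTHETICAL values) and passed through a
    sigmoid. Max-pooling the resulting heatmap gives the correspondence score.
    """
    a = l2_normalize(aud_emb)
    heatmap = [
        [sigmoid(scale * sum(x * y for x, y in zip(l2_normalize(cell), a)) + bias)
         for cell in row]
        for row in image_grid
    ]
    score = max(max(row) for row in heatmap)
    return heatmap, score


# Usage on a toy 2 x 2 grid of 2-D embeddings (hypothetical values).
grid = [[[0.0, 1.0], [0.0, -1.0]],
        [[1.0, 0.0], [-1.0, 0.0]]]
heatmap, score = avol_net_localize(grid, [2.0, 0.0])
# The cell at row 1, column 0 points in the same direction as the audio
# embedding, so it receives the highest heatmap value and sets the score.
```

Because AVE-Net sees only a single distance, it forces aligned image and audio embeddings to be directly comparable. AVOL-Net keeps the spatial grid, which is what lets it localize the sounding object.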


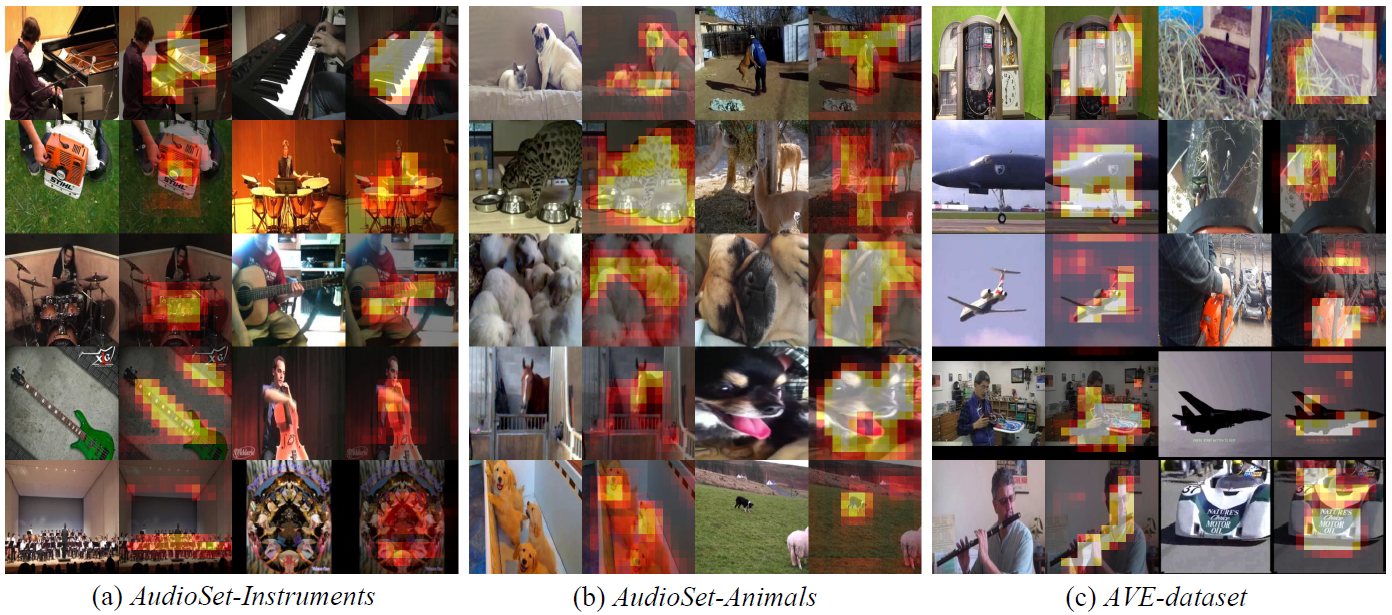

We use or construct three different datasets to train and evaluate the models:

- AudioSet-Instruments

- AudioSet-Animal

- AVE-Dataset

Due to limited resources, we use a 20% subset of the AudioSet-Instruments dataset suggested in the paper.

Please refer to our Final Report for a more detailed explanation of each dataset.

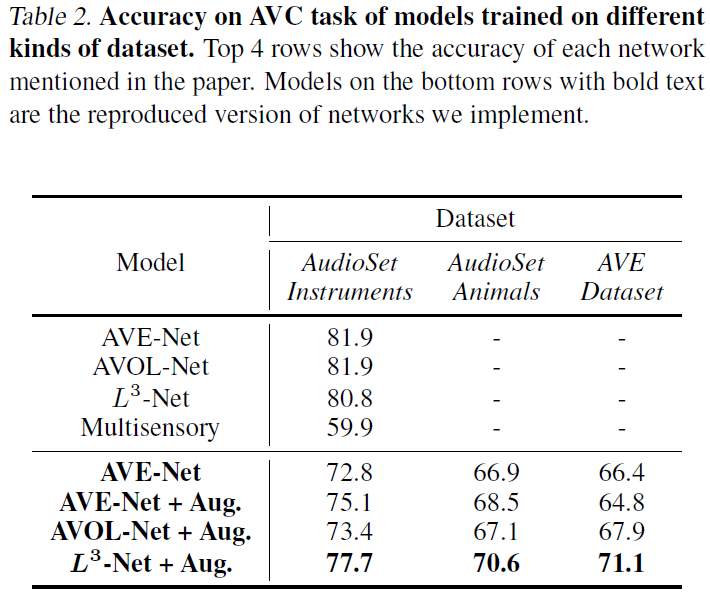

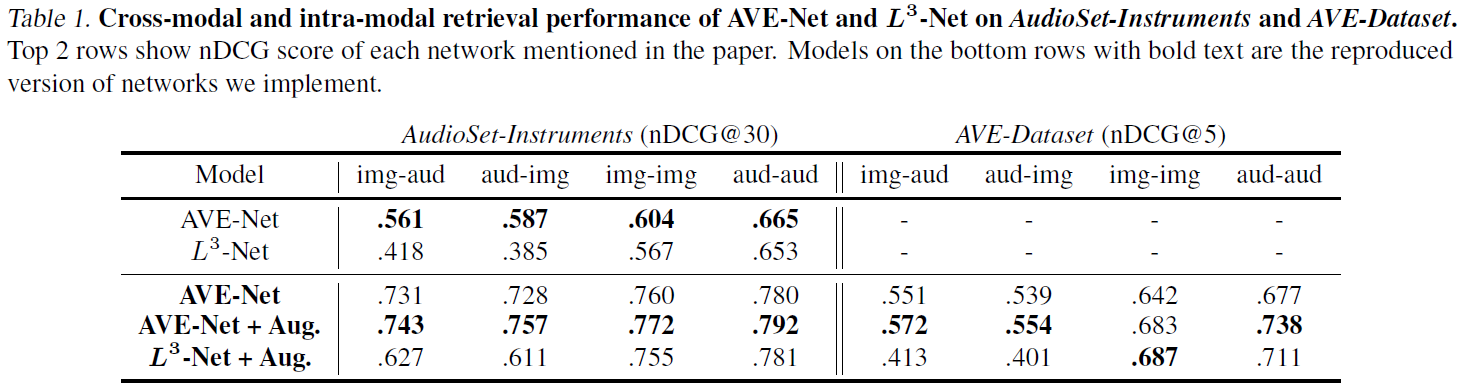

Note that our results differ considerably from those reported in the paper.

We suspect this gap may come from differences in dataset size, batch size, learning rate, or other subtle training configurations.

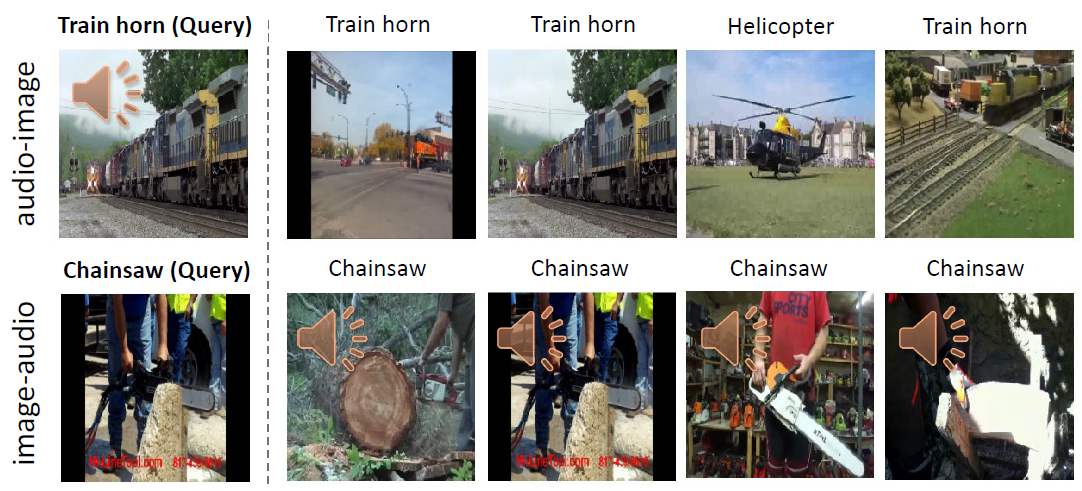

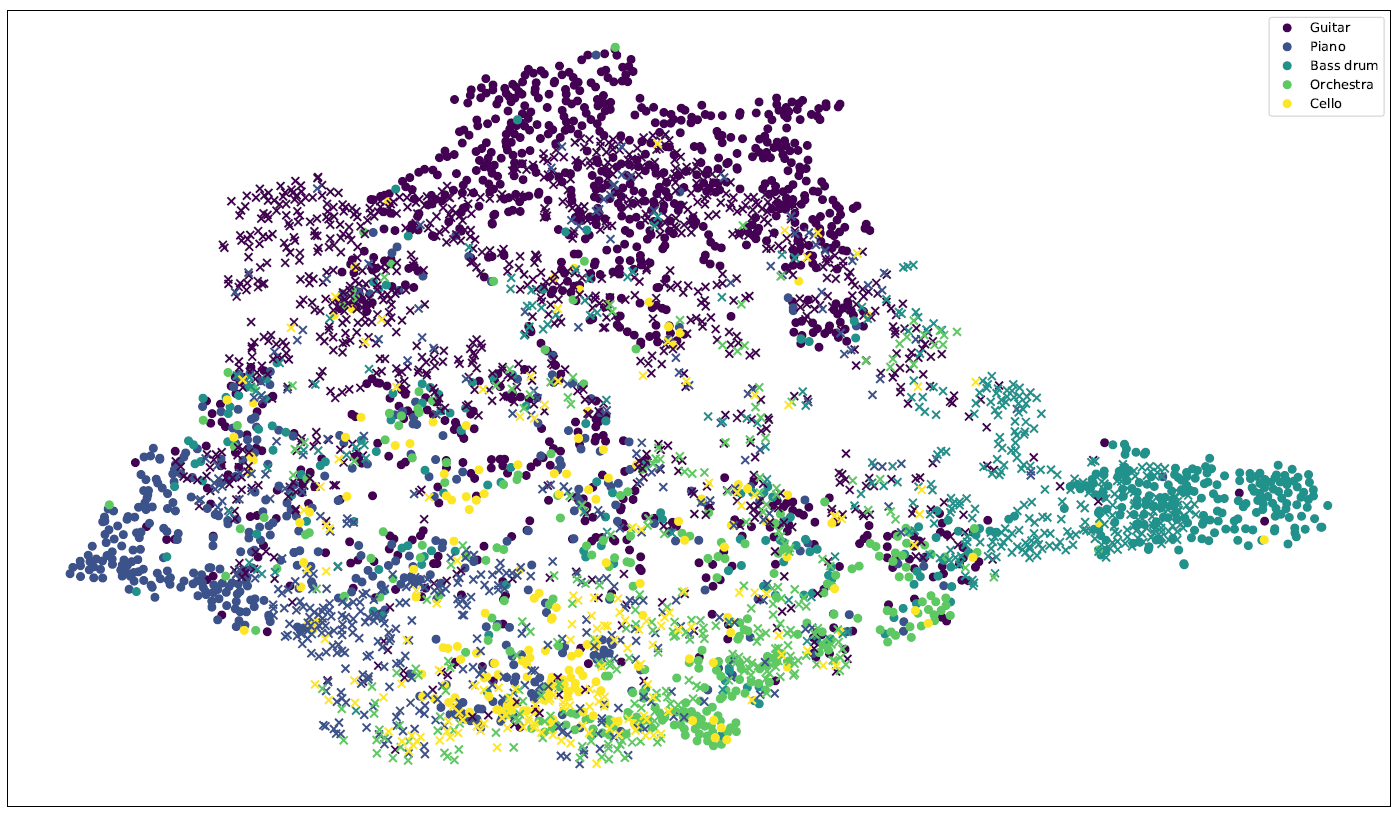

More qualitative results are available in our slides provided below. In the embedding plots, ● marks image embeddings and × marks audio embeddings.

We gained many implementation insights from @rohitrango's repository. Thanks!

Since this was made as our course project, we also prepared PPT slides along with the corresponding presentation videos.

| Name | Slide | Video |
|---|---|---|
| Project Proposal | PPTX | YouTube |
| Progress Update | PPTX | YouTube |
| Final Presentation | PPTX | YouTube |

Please also refer to our Final Report for a detailed explanation of the implementation and training configurations.

We welcome any questions or issues regarding the implementation. If you have any, please contact us by e-mail below or open an issue.

| Contributor | E-mail |
|---|---|
| Kyuyeon Kim | kyuyeonpooh@kaist.ac.kr |
| Hyeongryeol Ryu | hy.ryu@kaist.ac.kr |
| Yeonjae Kim | lion721@kaist.ac.kr |

If you find this repository useful, please Star ⭐ or Fork 🍴 it.