This repository contains the code and resources for the following paper:

https://aclanthology.org/2021.emnlp-main.399/

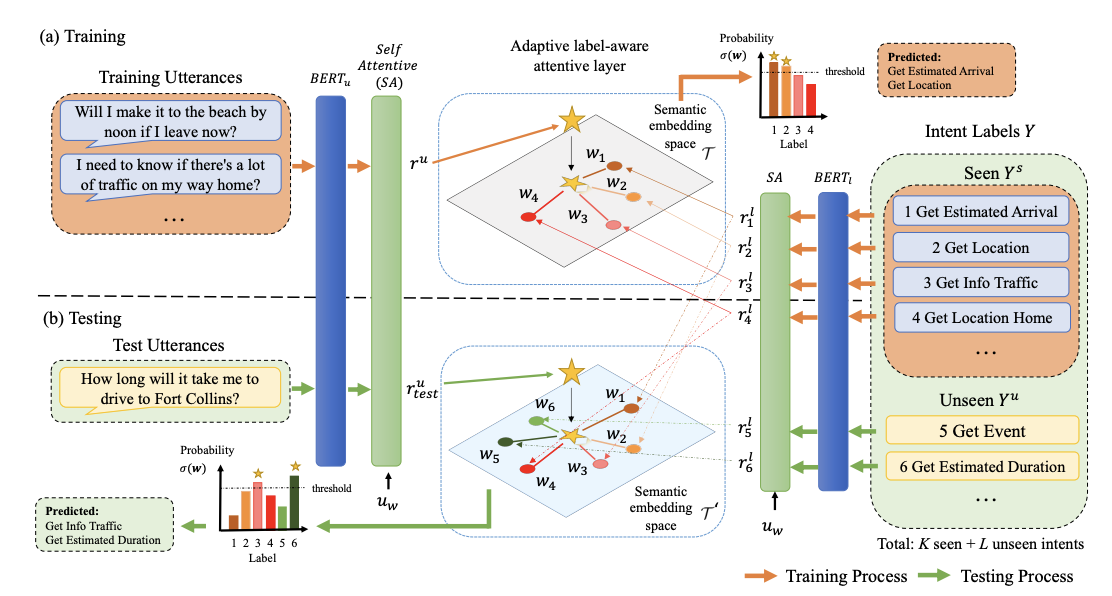

Ting-Wei Wu, Ruolin Su and Biing-Hwang Juang, "A Label-Aware BERT Attention Network for Zero-Shot Multi-Intent Detection in Spoken Language Understanding". In EMNLP 2021 (Main Conference)

It is published in EMNLP 2021.

(Under construction. More to update.)

The work proposes a linear approximation method that projects a spoken utterance onto an intent semantic space to solve the intent detection problem. It handles both normal multi-intent detection and zero-shot multi-intent detection, where some intents are unseen during training.
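The projection idea can be illustrated with a small sketch. It is not the model from the paper, which uses BERT encoders and is trained end to end; it only shows, under simplifying assumptions, how an utterance vector can be approximated as a linear combination of intent vectors and how the resulting weights can act as per-intent scores. The function names, the toy 3-dimensional vectors, and the 0.5 threshold are all hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def solve_linear(M, b):
    """Solve M x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("intent vectors are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]

def projection_weights(utterance, intents):
    """Least-squares weights w minimizing ||sum_k w[k] * intents[k] - utterance||."""
    gram = [[dot(a, b) for b in intents] for a in intents]  # Gram matrix of intents
    rhs = [dot(a, utterance) for a in intents]              # intent/utterance products
    return solve_linear(gram, rhs)                          # normal equations

def detect_intents(utterance, intent_vectors, threshold=0.5):
    """Return every intent whose projection weight exceeds the threshold."""
    names = list(intent_vectors)
    weights = projection_weights(utterance, [intent_vectors[n] for n in names])
    return [n for n, w in zip(names, weights) if w > threshold]

# Toy, hand-made vectors (assumption: real inputs would be sentence/label embeddings).
intent_vectors = {
    "play_music": [1.0, 0.0, 0.0],
    "get_weather": [0.0, 1.0, 0.0],
    "book_flight": [0.0, 0.0, 1.0],
}
utterance = [0.9, 0.8, 0.1]
print(detect_intents(utterance, intent_vectors))
```

In this sketch an unseen intent is handled by adding another entry to `intent_vectors`; no weights are re-estimated, which is the intuition behind the zero-shot setting.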

    LABAN/
    ├── raw_datasets/
    │   ├── dstc8-schema-guided-dialogue/
    │   ├── e2e_dialogue/
    │   ├── MixATIS_clean/
    │   ├── MixSNIPS_clean/
    │   ├── SNIPS
    │   └── top-dataset-semantic-parsing
    ├── data/
    │   ├── train_data.py (For normal detection)
    │   ├── train_data_zero_shot.py (For zero-shot detection)
    │   ├── train_data_baseline.py (For baseline data)
    │   └── dialogue_data.py (For e2e/sgd)
    ├── model/
    │   ├── baseline_multi.py
    │   └── bert_model_zsl.py
    ├── visualization/
    │   └── visualize.ipynb
    ├── all_data.py
    ├── baseline_midsf.py
    ├── bert_laban.py
    ├── bert_zsl.py
    ├── config.py
    ├── README.md
    └── requirements.txt

This repository contains five publicly available datasets in raw_datasets:

- MixATIS (single)
- MixSNIPS (single)
- FSPS (single)
- E2E (multi-turn)
- SGD (multi-turn)

Please also download the SGD dataset through the repo here.

LABAN supports three experimental use cases:

- Normal multi-intent detection

- Generalized zero-shot multi-intent detection

- Few-shot multi-intent detection

- Python 3.6, PyTorch 1.4.0
- If a GPU is available: CUDA 10.0 is supported (please check here for other CUDA versions)

First create a conda environment with Python 3.6 and run the following command to install PyTorch:

conda install pytorch torchvision cudatoolkit=10.0 -c pytorch

Install the dependencies with:

pip install -r requirements.txt

Specify the mode in config.py:

- datatype: data to use (semantic, mixatis, mixsnips, e2e, sgd)
- data_mode:
- is_zero_shot: whether to use zero-shot (True/False)
- real_num: real number of seen intents
- ratio: parameter for splitting train/test labels
- is_few_shot: whether to use few-shot (True/False)
- few_shot_ratio: few-shot ratio of data for training
- retrain: use trained model weights
- test_mode: test mode (validation, data, embedding, user)
  - validation: produces scores
  - data: produces scores & error analysis
  - embedding: produces sentence embeddings
  - user: predicts the tag for a given sentence
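Because several of these options accept only a fixed set of values, a quick sanity check before launching a run can catch typos. The sketch below is hypothetical: it does not read the real config.py, whose internal layout is not described here, and simply checks a plain dictionary against the values listed above. The name `check_settings` and the example dictionary are assumptions.

```python
# Allowed values copied from the option list above.
ALLOWED = {
    "datatype": {"semantic", "mixatis", "mixsnips", "e2e", "sgd"},
    "test_mode": {"validation", "data", "embedding", "user"},
}
BOOLEAN_KEYS = ("is_zero_shot", "is_few_shot", "retrain")

def check_settings(settings):
    """Return a list of problems; an empty list means nothing looked wrong."""
    problems = []
    for key, allowed in ALLOWED.items():
        if key in settings and settings[key] not in allowed:
            problems.append(f"{key}={settings[key]!r} is not one of {sorted(allowed)}")
    for key in BOOLEAN_KEYS:
        if key in settings and not isinstance(settings[key], bool):
            problems.append(f"{key} must be True or False")
    return problems

# Hypothetical settings for a zero-shot run on MixATIS.
example = {
    "datatype": "mixatis",
    "is_zero_shot": True,
    "is_few_shot": False,
    "retrain": False,
    "test_mode": "validation",
}
print(check_settings(example) or "settings look consistent")
```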

The data preparation scripts are located in data/. Run them from that directory:

- mixatis/mixsnips/semantic:
  - normal

    python train_data.py -d [data_type]

  - zero-shot (Create the directory data/<dataset_name>/zeroshot/ first)

    python train_data_zero_shot.py -d [data_type] -r [ratio]

- e2e/sgd:

  (We do not provide sgd in data.zip since it exceeds the upload limit; please download the sgd dataset here.)

    python dialogue_data.py -d [data_type]
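The zero-shot step above needs the output directory to exist before the script runs. A minimal sketch of automating that is shown below, assuming the script is invoked from data/ exactly as in the command above. The helper name `zero_shot_command` is hypothetical, and the ratio value `0.5` is only a placeholder, not a recommended setting. The README does not say whether `<dataset_name>` and `[data_type]` are always the same string, so they are kept as separate arguments.

```python
import sys
import tempfile
from pathlib import Path

def zero_shot_command(data_root, dataset_name, data_type, ratio):
    """Create <data_root>/<dataset_name>/zeroshot/ and build the preprocessing command."""
    zs_dir = Path(data_root) / dataset_name / "zeroshot"
    zs_dir.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    cmd = [sys.executable, "train_data_zero_shot.py", "-d", data_type, "-r", str(ratio)]
    return zs_dir, cmd

# Demonstration in a temporary directory so nothing in the repo is touched.
with tempfile.TemporaryDirectory() as tmp:
    zs_dir, cmd = zero_shot_command(tmp, "mixatis", "mixatis", 0.5)
    print(zs_dir.is_dir(), cmd[1:])

# In the repository itself, one would pass data_root="data" and then run, e.g.:
#   subprocess.run(cmd, cwd="data", check=True)
```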

We now support normal multi-intent detection on all five datasets.

Set is_zero_shot: False.

To train:

python bert_laban.py train

To test:

(Set retrain: True)

python bert_laban.py test

We now support zero-shot detection on the semantic, mixatis, and mixsnips datasets.

Set is_zero_shot: True.

Specify real_num and ratio.

To train:

python bert_zsl.py train

To test:

(Set retrain: True)

python bert_zsl.py test

We now support few-shot detection on the semantic, mixatis, and mixsnips datasets.

Set is_zero_shot: True.

Set is_few_shot: True.

Specify few_shot_ratio.

To train:

python bert_zsl.py train

To test:

(Set retrain: True)

python bert_zsl.py test
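The three use cases differ only in which script is run and which flags are set in config.py. The sketch below summarizes the instructions above as a lookup table; the `plan` helper is hypothetical and only returns the command and the flags to set by hand, it does not edit config.py or start training.

```python
# Script and config flags per use case, as described in the sections above.
USE_CASES = {
    "normal": {"script": "bert_laban.py", "config": {"is_zero_shot": False}},
    "zero-shot": {"script": "bert_zsl.py", "config": {"is_zero_shot": True}},
    "few-shot": {"script": "bert_zsl.py",
                 "config": {"is_zero_shot": True, "is_few_shot": True}},
}

def plan(use_case, phase):
    """Return (command, config flags) for a use case and a phase ('train' or 'test')."""
    if phase not in ("train", "test"):
        raise ValueError("phase must be 'train' or 'test'")
    entry = USE_CASES[use_case]
    config = dict(entry["config"])
    if phase == "test":
        config["retrain"] = True  # testing loads the trained weights
    return ["python", entry["script"], phase], config

command, flags = plan("few-shot", "test")
print(" ".join(command), flags)
```

Zero-shot runs additionally need real_num and ratio, and few-shot runs need few_shot_ratio; these are left out of the table because their values depend on the experiment.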

Please cite if you use the above resources for your research:

@inproceedings{wu-etal-2021-label,

title = "A Label-Aware {BERT} Attention Network for Zero-Shot Multi-Intent Detection in Spoken Language Understanding",

author = "Wu, Ting-Wei and

Su, Ruolin and

Juang, Biing",

booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",

month = nov,

year = "2021",

address = "Online and Punta Cana, Dominican Republic",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.emnlp-main.399",

pages = "4884--4896"

}