Two-stage text recognition online system

Introduction

This is a web implementation of text recognition. Its model consists of two parts, detection and recognition. The recognition part is ASTER: An Attentional Scene Text Recognizer with Flexible Rectification, implemented by bgshih. The detection part is based on EAST: An Efficient and Accurate Scene Text Detector, with some improvements added to the model. The web application is built with Flask. When the application server starts up, the network model is restored and then waits for image input until the server is shut down. You can select more than one image and view the results one by one.

Setting

- Run `pip install -r requirements.txt`. The TensorFlow version must be 1.4.0, or it won't work.

- Set parameters according to your work environment:

  - `.flaskenv` - These parameters are loaded as environment variables, and you can read them with `os.environ.get()`.

  - `output.py` - `tf.app.flags` parameters for model restore.
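As a minimal sketch of how such settings can be read, the helper below wraps `os.environ.get()` with a default value and a type cast. The variable names `DEMO_UPLOAD_DIR`, `DEMO_PORT` and `DEMO_NOT_SET` are hypothetical and only illustrate the pattern; the real names are whatever your `.flaskenv` defines.

```python
import os


def read_setting(name, default=None, cast=str):
    """Return the environment variable `name` cast to a type, or `default` if unset."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return cast(raw)


# Hypothetical variable names, set here only so the sketch runs on its own.
os.environ["DEMO_UPLOAD_DIR"] = "model/test_images/images"
os.environ["DEMO_PORT"] = "5000"

upload_dir = read_setting("DEMO_UPLOAD_DIR")
port = read_setting("DEMO_PORT", cast=int)
missing = read_setting("DEMO_NOT_SET", default="fallback")
```

Environment variables are always strings, so casting in one place avoids scattering `int(...)` calls across the code.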

Download

- resnet_v1_50 model, or visit https://github.com/tensorflow/models/tree/master/official/resnet. Place it in `model\data\resnet_v1_50`.

- detection model. Place it in `model\data\detection_ckpt`.

- recognition model. Place it in `model\aster\experiments\demo\log`.

PSW: cj8r
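Before starting the server, it can help to confirm that the three model directories are in place. The following is a rough sketch, not part of the project: the helper `missing_model_dirs` is hypothetical, and it assumes the directories listed above are relative to the project root.

```python
from pathlib import Path

# Relative locations listed in the Download section.
MODEL_DIRS = [
    Path("model", "data", "resnet_v1_50"),
    Path("model", "data", "detection_ckpt"),
    Path("model", "aster", "experiments", "demo", "log"),
]


def missing_model_dirs(project_root):
    """Return the expected model directories that are absent or empty."""
    root = Path(project_root)
    missing = []
    for rel in MODEL_DIRS:
        target = root / rel
        # An empty directory means the checkpoint files were never copied in.
        if not target.is_dir() or not any(target.iterdir()):
            missing.append(rel.as_posix())
    return missing
```

Calling `missing_model_dirs(".")` from the project root returns an empty list when every directory exists and contains at least one file.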

Usage

- Start the server: run `flask run` in your terminal.

- Visit http://127.0.0.1:5000/ in your browser.

- select one or more images containing words as your input.

- upload current image.

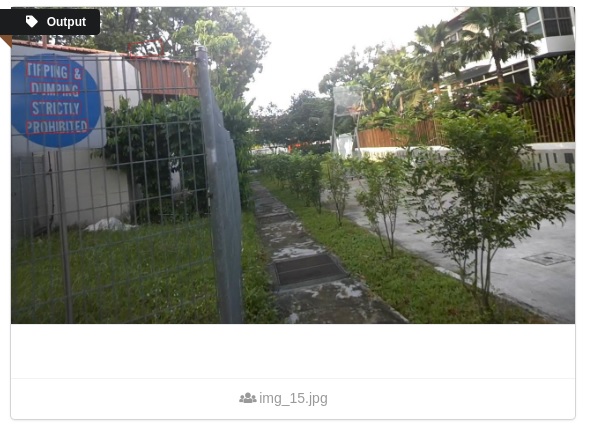

- view output.

- move to the next image, or re-select images and upload again.

Example

- input

- output

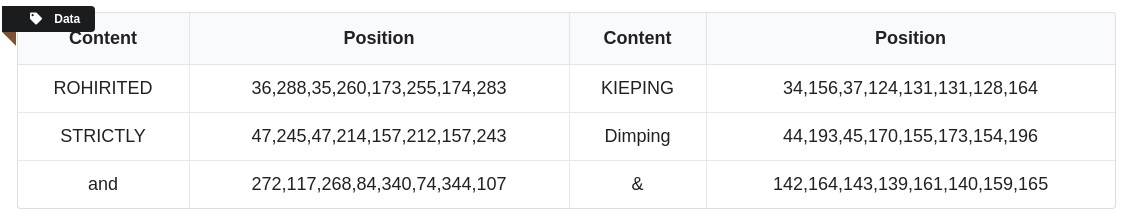

- data

Data_Flow

- flask run :

  (1) `main.py` sets the system environment variables[^1].

  (2) `app/__init__.py` (`import app`) starts up Flask.

  (3) `model/output.py`: the server begins to maintain a model object. It restores the checkpoint file and waits for input.
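The "restore once, then wait for input" idea in step (3) can be sketched as follows. This is an illustration under assumptions, not the project's code: `ModelHolder` and `fake_restore` are hypothetical names, and the stand-in model simply upper-cases a string instead of running a TensorFlow graph.

```python
class ModelHolder:
    """Restore a model once and reuse it for every request (hypothetical helper)."""

    def __init__(self, restore_fn):
        self._restore_fn = restore_fn
        self._model = None
        self.restore_calls = 0

    def load(self):
        """Run the expensive restore step; meant to be called once at start-up."""
        self._model = self._restore_fn()
        self.restore_calls += 1

    def predict(self, image):
        if self._model is None:
            raise RuntimeError("model not loaded; call load() at start-up")
        return self._model(image)


def fake_restore():
    """Stand-in for restoring a checkpoint; returns a callable 'model'."""
    return lambda image: {"text": image.upper()}


holder = ModelHolder(fake_restore)
holder.load()                      # done once, when the server starts
first = holder.predict("hello")    # every upload reuses the same object
second = holder.predict("world")
```

Keeping the restored model in one long-lived object means each upload pays only for inference, not for reloading the checkpoint.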

[^1] Variables set by `.flaskenv` are used in many places in the project, so in `main.py`, `import app` must come after `load_dotenv()`.
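Why the order matters can be shown with a small standard-library sketch. Here `load_env_file` is a simplified stand-in for `load_dotenv()`, `import_time_config` mimics a value that a module reads while it is being imported, and `DEMO_CKPT_DIR` is a hypothetical variable name.

```python
import os
import tempfile


def load_env_file(path):
    """Simplified stand-in for load_dotenv(): copy KEY=VALUE lines into os.environ."""
    with open(path) as fh:
        for line in fh:
            line = line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            # Variables that are already set are left untouched.
            os.environ.setdefault(key.strip(), value.strip())


def import_time_config():
    """Mimic a module-level read that happens while `app` is being imported."""
    return os.environ.get("DEMO_CKPT_DIR")  # hypothetical variable name


# Write a throwaway env file so the sketch is self-contained.
with tempfile.NamedTemporaryFile("w", suffix=".flaskenv", delete=False) as fh:
    fh.write("DEMO_CKPT_DIR=model/data/detection_ckpt\n")
    env_path = fh.name

os.environ.pop("DEMO_CKPT_DIR", None)
before = import_time_config()   # read before loading: nothing is set yet
load_env_file(env_path)
after = import_time_config()    # read after loading: the value is available
os.remove(env_path)
```

If `import app` ran first, any module-level read inside `app` would see the `before` state.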

- upload image :

  (1) `app/routes.py`: `upload_image()` receives the image and saves it into `model\test_images\images`.

  (2) `model/output.py` feeds the image into the model graph, and the output image is saved in `app\static\output`. At the same time, the `json_dict` variable stores the text data, which is forwarded to the page.
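The two upload steps can be sketched without Flask or TensorFlow, as below. Everything here is an assumption made for illustration: `handle_upload` and `fake_model` are hypothetical names, the keys of the returned dictionary are invented, and the stand-in model just copies the image and returns dummy text. In the real project this logic is split between `upload_image()` in `app/routes.py` and `model/output.py`.

```python
import os
import tempfile


def handle_upload(filename, data, run_model, upload_dir, output_dir):
    """Save an uploaded image, run the model on it, and return the text data."""
    os.makedirs(upload_dir, exist_ok=True)
    os.makedirs(output_dir, exist_ok=True)

    # Keep only the base name so a crafted filename cannot escape upload_dir.
    safe_name = os.path.basename(filename)
    input_path = os.path.join(upload_dir, safe_name)
    with open(input_path, "wb") as fh:
        fh.write(data)

    output_path = os.path.join(output_dir, safe_name)
    texts = run_model(input_path, output_path)

    # Dictionary forwarded to the page; the keys are assumptions.
    return {"image": safe_name, "texts": texts}


def fake_model(input_path, output_path):
    """Stand-in for the detection + recognition graph."""
    with open(input_path, "rb") as src, open(output_path, "wb") as dst:
        dst.write(src.read())
    return ["EXAMPLE"]


root = tempfile.mkdtemp()
json_dict = handle_upload(
    "sign.png", b"\x89PNG", fake_model,
    upload_dir=os.path.join(root, "model", "test_images", "images"),
    output_dir=os.path.join(root, "app", "static", "output"),
)
```

Passing the model in as a callable keeps the route logic separate from the graph, so the file handling can be exercised without loading a checkpoint.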