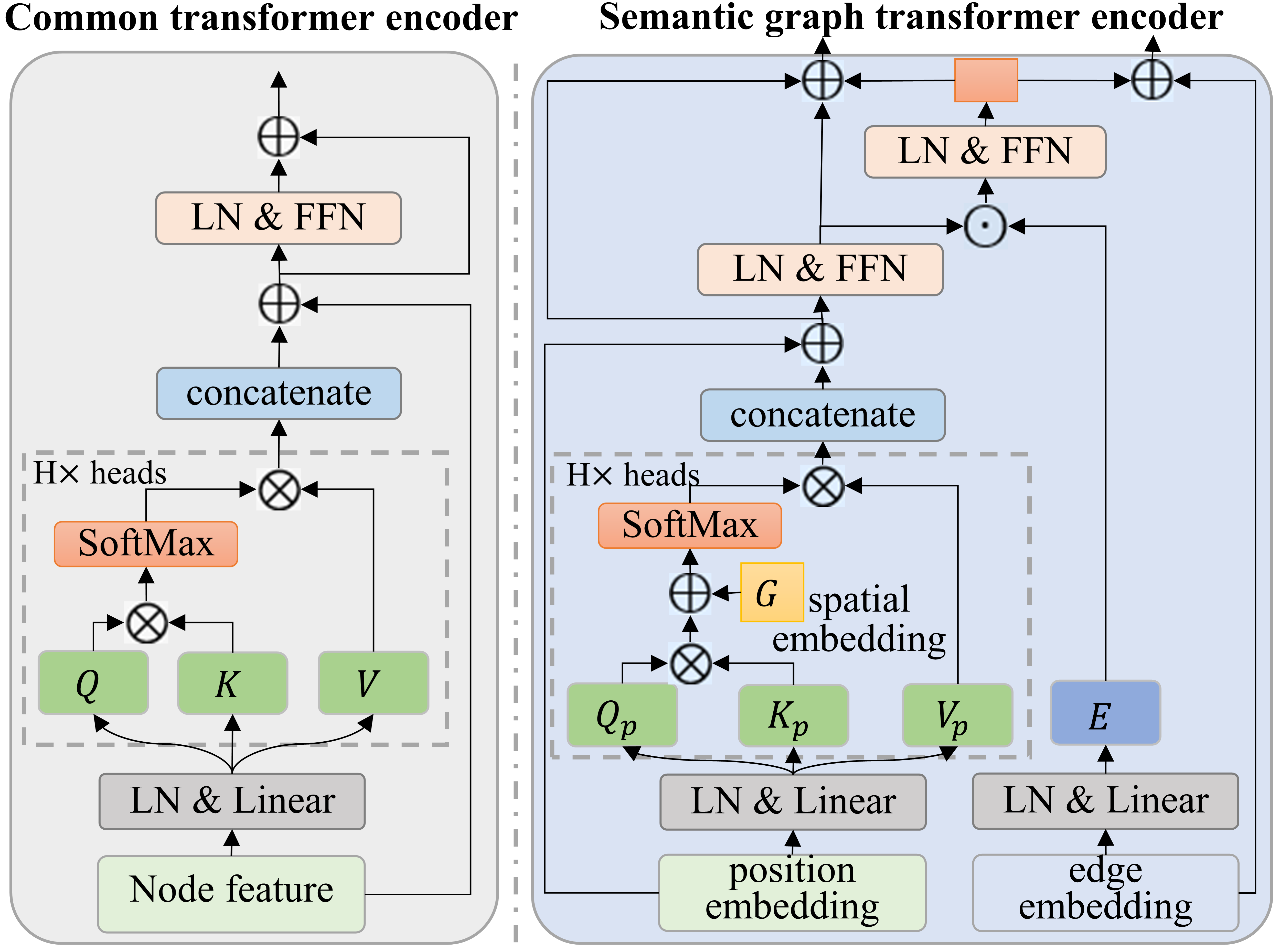

Deep Semantic Graph Transformer for Multi-view 3D Human Pose Estimation,

Lijun Zhang, Kangkang Zhou, Feng Lu, Xiang-Dong Zhou, Yu Shi,

The 38th Annual AAAI Conference on Artificial Intelligence (AAAI), 2024

- The paper will be released soon!

- Test code and model weights will be released soon!

- [14/12/2023] We released the model and training code for SGraFormer.

- Create a conda environment:

      conda create -n SGraFormer python=3.7

- Download cudatoolkit=11.0 from here and install it.

- Install PyTorch and the remaining requirements:

      pip3 install torch==1.7.1+cu110 torchvision==0.8.2+cu110 -f https://download.pytorch.org/whl/torch_stable.html
      pip3 install -r requirements.txt
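After installation, it can help to confirm that the interpreter and PyTorch build match the versions pinned above. The following is a minimal sketch, not part of the SGraFormer repository; the function name `check_environment` is hypothetical, and the script only reports what it finds.

```python
import importlib.util
import sys


def check_environment():
    """Collect basic facts about the active Python environment."""
    info = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "torch_installed": importlib.util.find_spec("torch") is not None,
    }
    if info["torch_installed"]:
        import torch

        info["torch"] = torch.__version__
        # torch.version.cuda is None for CPU-only builds.
        info["cuda_build"] = torch.version.cuda
        info["cuda_available"] = torch.cuda.is_available()
    return info


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

With the setup described above, the report should show Python 3.7 and a PyTorch 1.7.1 build compiled against CUDA 11.0. If `cuda_available` is false, the GPU driver or the CUDA toolkit installation is the first thing to check.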

Please download the dataset from the Human3.6M website and refer to VideoPose3D to set it up in the './dataset' directory. Alternatively, you can download the processed data from here.
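Once the data is in place, a quick check that nothing is missing can save a failed training run. The sketch below is an illustration only and is not part of the repository; the helper `missing_dataset_files` is hypothetical, and the file names are the three `.npz` files named in the directory layout of this section.

```python
from pathlib import Path

# File names as listed in this README's dataset layout.
EXPECTED_FILES = (
    "data_3d_h36m.npz",
    "data_2d_h36m_gt.npz",
    "data_2d_h36m_cpn_ft_h36m_dbb.npz",
)


def missing_dataset_files(dataset_dir="./dataset"):
    """Return the expected .npz files that are absent from dataset_dir."""
    root = Path(dataset_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_dataset_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected dataset files are present.")
```

Run it from the project root so that the default `./dataset` path resolves correctly, or pass a different directory to `missing_dataset_files`. The check only covers file presence, not file contents.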

    ${POSE_ROOT}/
    |-- dataset
    |   |-- data_3d_h36m.npz
    |   |-- data_2d_h36m_gt.npz
    |   |-- data_2d_h36m_cpn_ft_h36m_dbb.npz

To train a model on Human3.6M:

    python main.py --frames 27 --batch_size 1024 --nepoch 50 --lr 0.0002

If you find our work useful in your research, please consider citing:

    @inproceedings{
      booktitle = {The 38th Annual AAAI Conference on Artificial Intelligence (AAAI)},
      author = {Lijun Zhang and Kangkang Zhou and Feng Lu and Xiang-Dong Zhou and Yu Shi},
      title = {Deep Semantic Graph Transformer for Multi-view 3D Human Pose Estimation},
      year = {2024},
    }

Our code is extended from the following repositories. We thank the authors for releasing their code.