[![LinkedIn][linkedin-shield]][linkedin-url]

Developed and deployed a classifier for heart disease based on 45 machine-learning models, achieving accuracy and recall of 99.6% using a tuned stacking classifier.


Table of Contents

- About the project

- Dataset Description

- Libraries

- Data Cleaning & Preprocessing

- Converting features to categorical values

- Checking missing values

- Exploratory Data Analysis

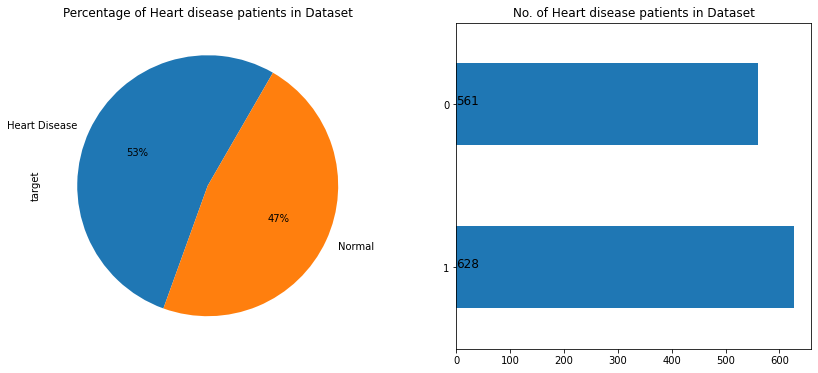

- Distribution of heart disease

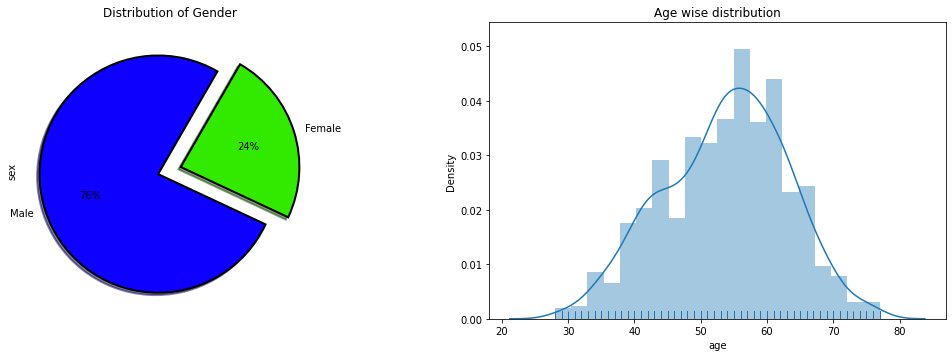

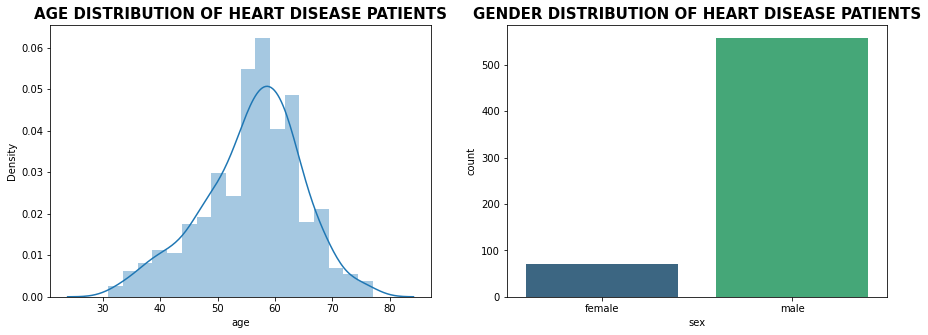

- Gender & age-wise distribution

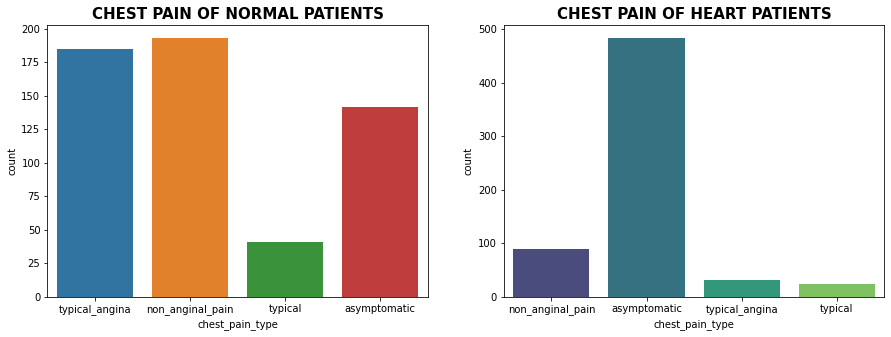

- Chest pain type distribution

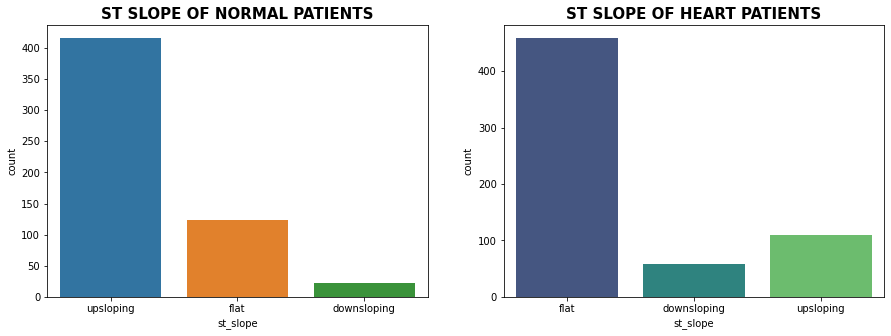

- ST-Slope Distribution

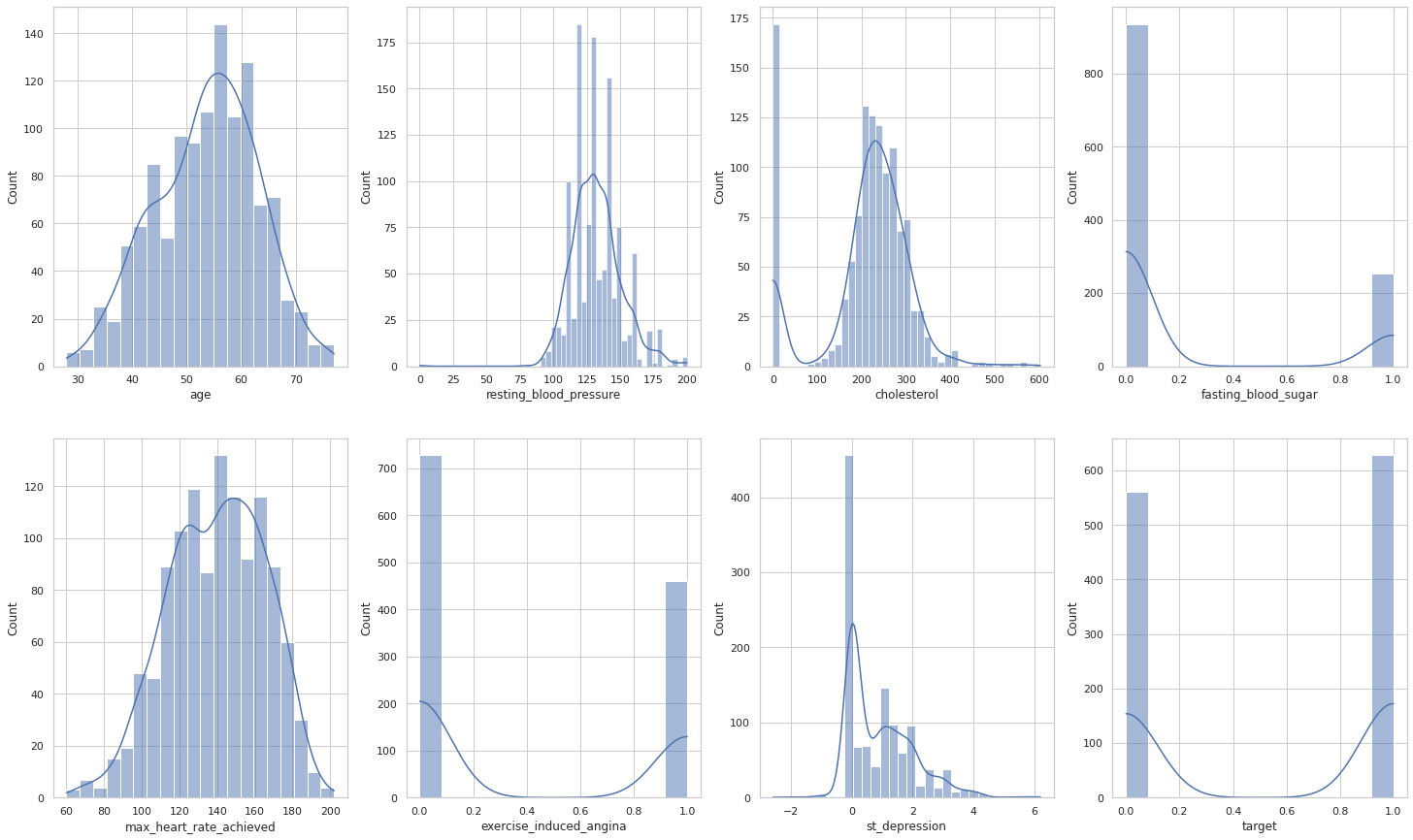

- Numerical features distribution

- Outlier Detection & Removal

- Z-score

- Identify & Remove outliers with threshold = 3

- Convert categorical data into dummy variables

- Segregate the dataset into features X and target variable y

- Check Correlation

- Dataset Split & Feature Normalization

- 80/20 Split

- Min/Max Scaler

- Cross Validation

- Model Building

- Model Evaluation

- Best Model

- ROC AUC Curve

- Precision Recall Curve

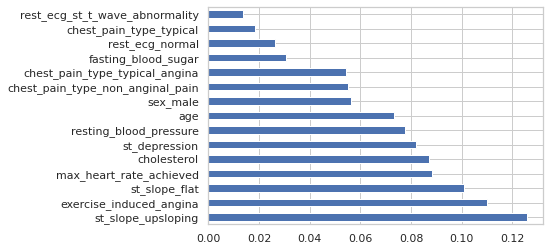

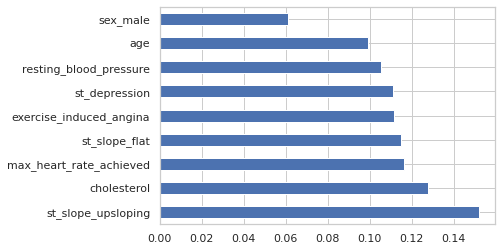

- Feature Importance

- Model Export

- Feature Selection

- Pearson correlation FS method

- Chi-square

- Recursive Feature Elimination

- Embedded Logistic Regression

- Embedded Random Forest

- Embedded LightGBM

- Identify & Remove least important features

- Split & Feature Normalization

- Model Building after feature selection

- Model Evaluation after feature selection

- Soft Voting

- Soft Voting Model Evaluation

- Feature Importance

- Conclusion

In today's world, heart disease is one of the leading causes of mortality. Predicting cardiovascular disease is an important challenge in clinical data analysis. Machine learning (ML) has proven effective for making predictions and decisions from the enormous amount of healthcare data produced each year. Existing studies give only a glimpse into predicting heart disease with ML techniques.

I developed and deployed a classifier for heart disease based on 45 machine-learning models, achieving accuracy and recall of 99.6% using a tuned stacking classifier.


In addition, I used feature selection to reduce the 15 input variables to 9 and built a soft voting classifier; after feature selection, the retrained ExtraTreesClassifier1000 model reached an accuracy of 92.27%.

This project uses Kaggle's Heart Disease Dataset (Comprehensive), which contains 11 features and a target variable. Of the 11 features, 6 are nominal and 5 are numeric.

Feature variables:

- Age: Patient's age in years (Numeric)

- Sex: Gender of the patient (Male = 1, Female = 0) (Nominal)

- Chest Pain Type: Type of chest pain experienced by the patient, categorized as 1: typical, 2: typical angina, 3: non-anginal pain, 4: asymptomatic (Nominal)

- Resting bp s: Resting blood pressure in mm Hg (Numeric)

- Cholesterol: Serum cholesterol in mg/dl (Numeric)

- Fasting blood sugar: Fasting blood sugar > 120 mg/dl (1 = true, 0 = false) (Nominal)

- Resting ecg: Resting electrocardiogram result, with 3 distinct values: 0: Normal, 1: ST-T wave abnormality, 2: Left ventricular hypertrophy (Nominal)

- Max heart rate: Maximum heart rate achieved (Numeric)

- Exercise angina: Exercise-induced angina (0 = No, 1 = Yes) (Nominal)

- Oldpeak: Exercise-induced ST depression relative to the state of rest (Numeric)

- ST slope: Slope of the ST segment during peak exercise: 0: Normal, 1: Upsloping, 2: Flat, 3: Downsloping (Nominal)

Target variable:

- target: The variable to predict; 1 means the patient is at risk of heart disease and 0 means the patient is normal.

This project requires Python 3.8 and the following Python libraries should be installed to get the project started:

- NumPy

- Pandas

- matplotlib

- scikit-learn

- seaborn

- xgboost

- Converting features to categorical values

- Checking missing values

As the figure above shows, the dataset is roughly balanced, with 628 heart disease patients and 561 normal patients.

As the plot above shows, the dataset contains a much higher percentage of males than females, and the average patient age is around 55.

The plot also shows that male patients account for more heart disease cases than female patients, and that the mean age of heart disease patients is around 58 to 60 years.

| chest_pain_type | target = 0 (%) | target = 1 (%) |
|---|---|---|
| asymptomatic | 25.31 | 76.91 |
| non_anginal_pain | 34.40 | 14.17 |
| typical | 7.31 | 3.98 |
| typical_angina | 32.98 | 4.94 |

| st_slope | target = 0 (%) | target = 1 (%) |
|---|---|---|
| downsloping | 3.92 | 9.39 |
| flat | 21.93 | 73.09 |
| upsloping | 74.15 | 17.52 |

The ST-segment/heart-rate slope (ST/HR slope) has been proposed in many research papers as a more accurate ECG criterion for diagnosing significant coronary artery disease (CAD).

The plot above shows that an upsloping ST segment is a positive sign: 74.15% of normal patients have an upsloping ST segment, whereas 73.09% of heart disease patients have a flat slope.
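Each column of the two percentage tables above sums to 100, so they are distributions within each target class. A minimal sketch of how such a table can be produced with `pandas.crosstab` and `normalize="columns"`, using a few hypothetical rows rather than the real dataset:

```python
import pandas as pd

# Hypothetical rows for illustration only (not the real dataset).
df = pd.DataFrame({
    "st_slope": ["upsloping", "flat", "flat", "downsloping", "upsloping", "flat"],
    "target":   [0,           1,      1,      1,             0,           0],
})

# normalize="columns" divides each count by its column total, so each
# target class sums to 1; multiplying by 100 turns that into percentages.
pct = pd.crosstab(df["st_slope"], df["target"], normalize="columns").mul(100).round(2)
print(pct)
```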

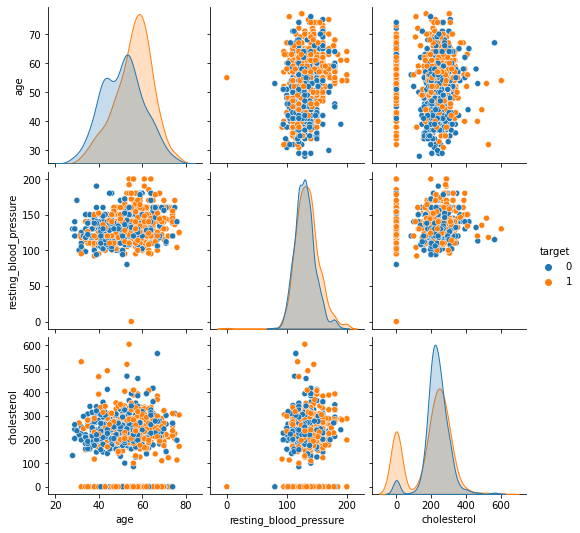

The plot above shows that heart disease risk increases with age.

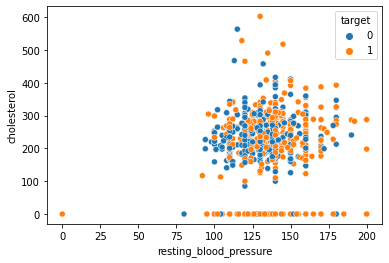

According to the graph above, patients with high cholesterol and high blood pressure are more likely to develop heart disease than those with normal cholesterol and blood pressure.

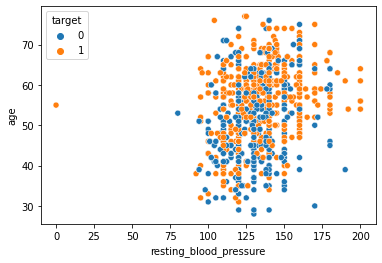

The scatterplot above shows that older patients with resting blood pressure above 150 mm Hg are more likely to develop heart disease than patients younger than 50.

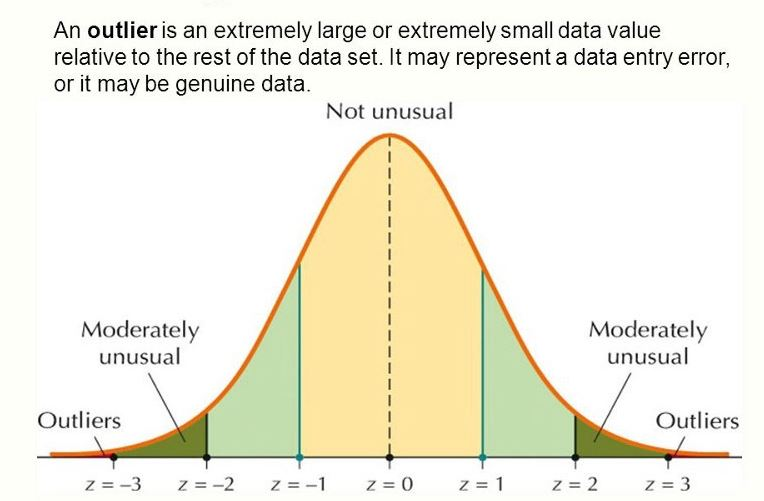

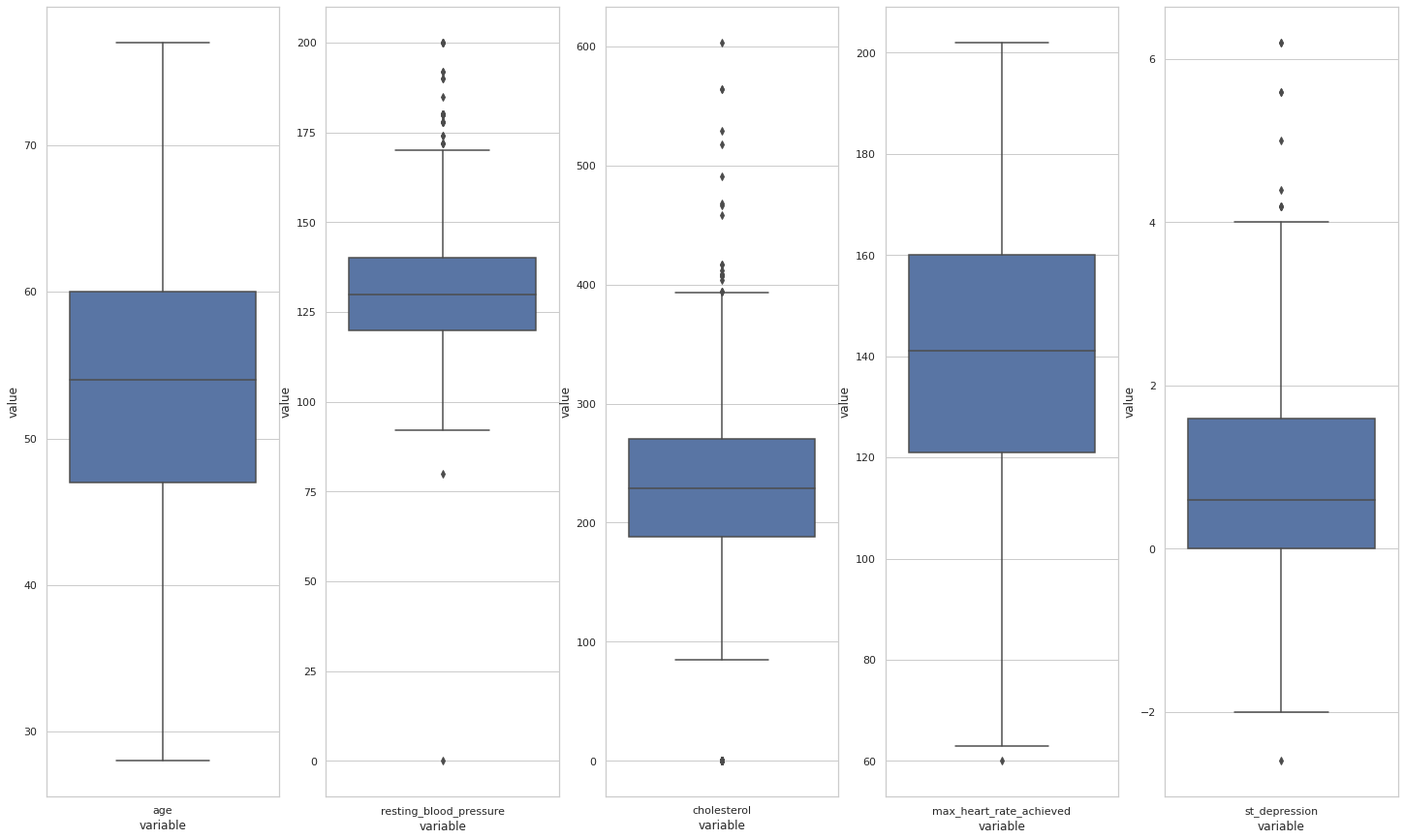

Outliers are values that are disproportionately large or small compared with the rest of the dataset. They may result from human error, a change in system behavior, an instrument error, or genuine natural variation in the population.

The box plots show outliers in the following numeric features: resting blood pressure, cholesterol, max heart rate, and ST depression.

We use a Z-score threshold of 3: any point whose absolute Z-score exceeds 3, i.e., that lies more than 3 standard deviations above or below the mean, is treated as an outlier and removed.
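A minimal pandas sketch of the Z-score rule, z = (x - mean) / std. Assumptions not stated in the README: the helper name `remove_outliers_zscore` is hypothetical, a row is dropped if any listed column exceeds the threshold, and the population standard deviation (`ddof=0`, the same convention as `scipy.stats.zscore`) is used. The data is synthetic, with one injected cholesterol outlier.

```python
import numpy as np
import pandas as pd

def remove_outliers_zscore(df: pd.DataFrame, columns, threshold: float = 3.0) -> pd.DataFrame:
    """Hypothetical helper: drop rows where any of `columns` has |z| > threshold."""
    numeric = df[columns]
    # Population standard deviation (ddof=0), as scipy.stats.zscore uses by default.
    z = (numeric - numeric.mean()) / numeric.std(ddof=0)
    keep = (z.abs() <= threshold).all(axis=1)
    return df[keep]

rng = np.random.default_rng(0)
data = pd.DataFrame({
    # 200 plausible values plus one injected outlier (1200 mg/dl) at the end.
    "cholesterol": np.append(rng.normal(240, 50, 200), 1200.0),
    "max_heart_rate_achieved": np.append(rng.normal(140, 25, 200), 140.0),
})

cleaned = remove_outliers_zscore(data, ["cholesterol", "max_heart_rate_achieved"])
print(len(data), "->", len(cleaned))
```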

Before segregating the feature and target variables, we encode the categorical variables as dummy variables.
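A minimal sketch of the encoding and X/y split with `pandas.get_dummies`, on a few hypothetical rows. The feature names in the tables further down (for example `sex_male`, `st_slope_flat` and `st_slope_upsloping`, with no `sex_female`, `chest_pain_type_asymptomatic` or `st_slope_downsloping` column) are consistent with `drop_first=True`, but that setting is an inference, not something the README states.

```python
import pandas as pd

# Hypothetical rows using column names that appear in this README's tables.
df = pd.DataFrame({
    "age": [54, 61, 45],
    "sex": ["male", "female", "male"],
    "chest_pain_type": ["asymptomatic", "typical_angina", "non_anginal_pain"],
    "st_slope": ["flat", "upsloping", "downsloping"],
    "target": [1, 0, 0],
})

# drop_first=True drops the alphabetically first level of each category
# (sex_female, chest_pain_type_asymptomatic, st_slope_downsloping), which
# avoids a set of dummy columns that always sums to 1.
encoded = pd.get_dummies(df, columns=["sex", "chest_pain_type", "st_slope"], drop_first=True)

# Segregate features X and target y.
X = encoded.drop(columns="target")
y = encoded["target"]
print(list(X.columns))
```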

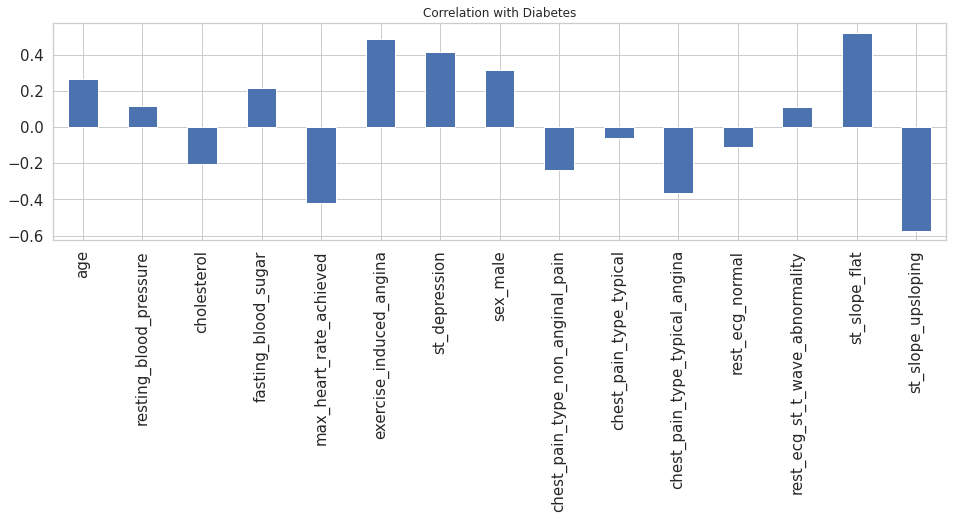

The variables exercise_induced_angina, st_slope_flat, st_depression, and sex_male are all strongly positively correlated with the target, which means that higher values of these variables are associated with a higher chance of heart disease.
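To rank features by their correlation with the target numerically, `DataFrame.corrwith` computes the Pearson correlation of each column with a Series. The data below is synthetic: `exercise_induced_angina` is built to agree with the target about 70% of the time and `cholesterol` is pure noise, so only the first should show a clear positive correlation.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
n = 300
y = pd.Series(rng.integers(0, 2, n), name="target")

X = pd.DataFrame({
    # Flip the target with probability 0.3, so this feature mostly agrees with it.
    "exercise_induced_angina": (y.to_numpy() + (rng.random(n) < 0.3)) % 2,
    # Unrelated noise.
    "cholesterol": rng.normal(240, 50, n),
})

# Pearson correlation of every feature with the target, strongest first.
corr = X.corrwith(y).sort_values(ascending=False)
print(corr)
```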


An 80:20 split has been performed, i.e., 80% of the data will be used to train the machine learning model, and the remaining 20% will be used to test it.

Training set: (928, 15) features and (928,) labels. Test set: (233, 15) features and (233,) labels.

Both the training and test sets have a balanced distribution for the target variable.
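A sketch of the 80/20 split with scikit-learn's `train_test_split`, on random placeholder data with the same number of rows (928 + 233 = 1161) and 15 features. With `test_size=0.2`, scikit-learn rounds the test size up (ceil(0.2 * 1161) = 233), which matches the shapes reported above. `stratify=y` and `random_state=42` are assumptions; the README only says both sets are balanced.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.random((1161, 15))      # placeholder features: 1161 rows, 15 columns
y = rng.integers(0, 2, 1161)    # placeholder binary target

# stratify=y keeps the class ratio the same in both splits (assumed setting).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print("---Training Set---", X_train.shape, y_train.shape)
print("---Test Set---", X_test.shape, y_test.shape)
```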

Many variables in the dataset take only the values 0 and 1, whereas others are continuous and on different scales, which can cause some models to give higher priority to large-scale features. To handle this, we normalize the continuous features to the range [0, 1].

For normalization, we use MinMaxScaler to scale values to the range [0, 1]. The scaler is fit and applied on the training set (X_train), while the test set is only transformed with the already-fitted scaler, so that no information from the test data leaks into training.
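The scaling step, sketched with scikit-learn's `MinMaxScaler` on two hypothetical continuous columns. Each value is mapped to x' = (x - min) / (max - min), where min and max come from the training set only; test values outside the training range can therefore fall outside [0, 1], as the second test column shows. In the project only the continuous columns would be scaled (for example with a `ColumnTransformer`); that wiring is omitted here.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical continuous columns, e.g. age and resting blood pressure.
X_train = np.array([[40.0, 120.0], [55.0, 140.0], [70.0, 180.0]])
X_test = np.array([[50.0, 200.0]])

scaler = MinMaxScaler()                          # default feature_range=(0, 1)
X_train_scaled = scaler.fit_transform(X_train)   # learns min/max from training data
X_test_scaled = scaler.transform(X_test)         # reuses the training min/max

print(X_train_scaled)
print(X_test_scaled)   # 200 is above the training max of 180, so it scales above 1
```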

To find out which machine learning models perform well on the training set, we run 10-fold cross-validation.

For this step, we first define the machine learning models.

For this project, we use more than 20 different machine learning algorithms with varying hyperparameters.

Once the models are defined, each one is evaluated with 10-fold cross-validation.
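A sketch of the 10-fold cross-validation loop with `cross_val_score`, printing each model's mean accuracy and the standard deviation across folds. The synthetic data, the three-model subset, and the use of `StratifiedKFold` with shuffling are assumptions; the README does not say how the folds were built.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic stand-in for the scaled training set (928 rows, 15 features).
X_train, y_train = make_classification(n_samples=928, n_features=15, random_state=0)

# Hypothetical subset of the models; names loosely mirror the README's labels.
models = {
    "LogisticRegression": LogisticRegression(max_iter=1000),
    "RandomForestClassifier_Gini100": RandomForestClassifier(
        n_estimators=100, criterion="gini", random_state=0),
    "ExtraTreesClassifier100": ExtraTreesClassifier(n_estimators=100, random_state=0),
}

cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
results = {}
for name, model in models.items():
    scores = cross_val_score(model, X_train, y_train, cv=cv, scoring="accuracy")
    results[name] = scores
    # Mean accuracy over the 10 folds, with the standard deviation in parentheses.
    print(f"{name}: {scores.mean():f} ({scores.std():f})")
```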

| Model | Mean accuracy (10-fold CV) | Std. dev. |
|---|---|---|
| LogisticRegression12 | 0.850187 | 0.049795 |
| LinearDiscriminantAnalysis | 0.853436 | 0.044442 |
| KNeighborsClassifier7 | 0.846914 | 0.043866 |
| KNeighborsClassifier5 | 0.851251 | 0.030615 |
| KNeighborsClassifier9 | 0.844811 | 0.052060 |
| KNeighborsClassifier11 | 0.844811 | 0.038097 |
| DecisionTreeClassifier | 0.862108 | 0.045041 |
| GaussianNB | 0.848001 | 0.050105 |
| SVC_Linear | 0.849100 | 0.048983 |
| SVC_RBF | 0.857714 | 0.052635 |
| AdaBoostClassifier | 0.851239 | 0.048960 |
| GradientBoostingClassifier | 0.882504 | 0.041317 |
| RandomForestClassifier_Entropy100 | 0.914867 | 0.032195 |
| RandomForestClassifier_Gini100 | 0.920266 | 0.033830 |
| ExtraTreesClassifier100 | 0.909467 | 0.038372 |
| ExtraTreesClassifier500 | 0.915930 | 0.037674 |
| MLPClassifier | 0.868478 | 0.043864 |
| SGDClassifier1000 | 0.832971 | 0.035837 |
| XGBClassifier2000 | 0.911641 | 0.032727 |
| XGBClassifier500 | 0.920278 | 0.030163 |
| XGBClassifier100 | 0.886816 | 0.037999 |
| XGBClassifier1000 | 0.915965 | 0.034352 |
| ExtraTreesClassifier1000 | 0.912705 | 0.037856 |

From the results above, XGBClassifier500 achieved the highest mean cross-validation accuracy (0.920278, about 92.03%), only marginally ahead of RandomForestClassifier_Gini100 (0.920266).

Next, we will train all the machine learning models that were cross-validated in the prior step and evaluate their performance on test data.

This step compares the performance of all trained machine learning models.

To evaluate our model, we must first define which evaluation metrics will be used.

F1 score, ROC AUC, sensitivity, specificity, and precision are among the most important evaluation metrics for classification; accuracy is reported as well.

We also use two additional performance measures, the Matthews correlation coefficient (MCC) and log loss, which are often considered more reliable statistical measures.
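A minimal sketch of how these metrics can be computed with scikit-learn; the function name `evaluate` and the toy arrays are hypothetical. Specificity has no dedicated scikit-learn function, so it is derived from the confusion matrix as TN / (TN + FP). One observation from the results tables: in each row the ROC column equals (Sensitivity + Specificity) / 2, and Log_Loss is proportional to the error rate (1 - Accuracy), which is what `roc_auc_score` and `log_loss` return when given hard 0/1 predictions. The sketch passes predicted probabilities for class 1 to both, which is their usual input.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, confusion_matrix, f1_score, log_loss,
                             matthews_corrcoef, precision_score, recall_score,
                             roc_auc_score)

def evaluate(y_true, y_pred, y_proba):
    """Hypothetical helper; y_proba is the predicted probability of class 1."""
    # Binary confusion matrix is [[TN, FP], [FN, TP]].
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return {
        "Accuracy": accuracy_score(y_true, y_pred),
        "Precision": precision_score(y_true, y_pred),
        "Sensitivity": recall_score(y_true, y_pred),  # TP / (TP + FN)
        "Specificity": tn / (tn + fp),                 # TN / (TN + FP)
        "F1 Score": f1_score(y_true, y_pred),
        "ROC": roc_auc_score(y_true, y_proba),         # uses scores, not hard labels
        "Log_Loss": log_loss(y_true, y_proba),
        "MCC": matthews_corrcoef(y_true, y_pred),
    }

y_true = np.array([1, 1, 1, 0, 0, 0, 1, 0])
y_proba = np.array([0.9, 0.8, 0.4, 0.2, 0.6, 0.1, 0.7, 0.3])
y_pred = (y_proba >= 0.5).astype(int)   # 0.5 decision threshold

metrics = evaluate(y_true, y_pred, y_proba)
print(metrics)
```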

| # | Model | Accuracy | Precision | Sensitivity | Specificity | F1 Score | ROC | Log_Loss | MCC |
|---|---|---|---|---|---|---|---|---|---|
| 15 | ExtraTreesClassifier500 | 0.931330 | 0.906977 | 0.966942 | 0.892857 | 0.936000 | 0.929900 | 2.371803 | 0.864146 |
| 14 | ExtraTreesClassifier100 | 0.927039 | 0.900000 | 0.966942 | 0.883929 | 0.932271 | 0.925435 | 2.520041 | 0.856002 |
| 18 | XGBClassifier2000 | 0.922747 | 0.905512 | 0.950413 | 0.892857 | 0.927419 | 0.921635 | 2.668273 | 0.846085 |
| 22 | ExtraTreesClassifier1000 | 0.922747 | 0.893130 | 0.966942 | 0.875000 | 0.928571 | 0.920971 | 2.668280 | 0.847907 |
| 21 | XGBClassifier1000 | 0.918455 | 0.898438 | 0.950413 | 0.883929 | 0.923695 | 0.917171 | 2.816511 | 0.837811 |
| 12 | RandomForestClassifier_Entropy100 | 0.918455 | 0.880597 | 0.975207 | 0.857143 | 0.925490 | 0.916175 | 2.816522 | 0.841274 |
| 13 | RandomForestClassifier_Gini100 | 0.918455 | 0.880597 | 0.975207 | 0.857143 | 0.925490 | 0.916175 | 2.816522 | 0.841274 |
| 19 | XGBClassifier500 | 0.914163 | 0.897638 | 0.942149 | 0.883929 | 0.919355 | 0.913039 | 2.964746 | 0.828834 |
| 20 | XGBClassifier100 | 0.871245 | 0.876033 | 0.876033 | 0.866071 | 0.876033 | 0.871052 | 4.447104 | 0.742104 |
| 6 | DecisionTreeClassifier | 0.866953 | 0.846154 | 0.909091 | 0.821429 | 0.876494 | 0.865260 | 4.595356 | 0.734925 |
| 11 | GradientBoostingClassifier | 0.862661 | 0.861789 | 0.876033 | 0.848214 | 0.868852 | 0.862124 | 4.743581 | 0.724836 |
| 16 | MLPClassifier | 0.858369 | 0.843750 | 0.892562 | 0.821429 | 0.867470 | 0.856995 | 4.891827 | 0.716959 |
| 10 | AdaBoostClassifier | 0.854077 | 0.853659 | 0.867769 | 0.839286 | 0.860656 | 0.853527 | 5.040055 | 0.707629 |
| 9 | SVC_RBF | 0.828326 | 0.818898 | 0.859504 | 0.794643 | 0.838710 | 0.827073 | 5.929483 | 0.656330 |
| 4 | KNeighborsClassifier9 | 0.828326 | 0.813953 | 0.867769 | 0.785714 | 0.840000 | 0.826741 | 5.929486 | 0.656787 |
| 2 | KNeighborsClassifier5 | 0.824034 | 0.822581 | 0.842975 | 0.803571 | 0.832653 | 0.823273 | 6.077714 | 0.647407 |
| 8 | SVC_Linear | 0.819742 | 0.811024 | 0.851240 | 0.785714 | 0.830645 | 0.818477 | 6.225956 | 0.639080 |
| 1 | LinearDiscriminantAnalysis | 0.815451 | 0.809524 | 0.842975 | 0.785714 | 0.825911 | 0.814345 | 6.374191 | 0.630319 |
| 0 | LogisticRegression12 | 0.815451 | 0.804688 | 0.851240 | 0.776786 | 0.827309 | 0.814013 | 6.374195 | 0.630637 |
| 3 | KNeighborsClassifier7 | 0.811159 | 0.808000 | 0.834711 | 0.785714 | 0.821138 | 0.810213 | 6.522426 | 0.621619 |
| 7 | GaussianNB | 0.811159 | 0.798450 | 0.851240 | 0.767857 | 0.824000 | 0.809548 | 6.522433 | 0.622227 |
| 5 | KNeighborsClassifier11 | 0.811159 | 0.793893 | 0.859504 | 0.758929 | 0.825397 | 0.809216 | 6.522437 | 0.622814 |
| 17 | SGDClassifier1000 | 0.776824 | 0.719745 | 0.933884 | 0.607143 | 0.812950 | 0.770514 | 7.708376 | 0.576586 |

Based on the results above, ExtraTreesClassifier500 is the best performer on the test set, with the highest accuracy (93.13%), F1 score, and MCC.

| # | Model | Accuracy | Precision | Sensitivity | Specificity | F1 Score | ROC | Log_Loss | MCC |
|---|---|---|---|---|---|---|---|---|---|
| 15 | ExtraTreesClassifier500 | 0.931330 | 0.906977 | 0.966942 | 0.892857 | 0.936000 | 0.929900 | 2.371803 | 0.864146 |

Feature selection (FS) is the process of removing irrelevant and redundant features from the dataset to reduce training time, build simpler models, and make the models easier to interpret.

In this project, we have used two filter-based FS techniques:

- Pearson Correlation Coefficient

- Chi-square

One wrapper-based FS technique:

- Recursive Feature Elimination

And three embedded FS methods:

- Embedded logistic regression

- Embedded random forest

- Embedded LightGBM

| # | Feature | Pearson | Chi-2 | RFE | Logistic Regression | Random Forest | LightGBM | Total |
|---|---|---|---|---|---|---|---|---|
| 1 | st_slope_flat | True | True | True | True | True | True | 6 |
| 2 | st_depression | True | True | True | True | True | True | 6 |
| 3 | cholesterol | True | True | True | True | True | True | 6 |
| 4 | resting_blood_pressure | True | True | True | False | True | True | 5 |
| 5 | max_heart_rate_achieved | True | True | True | False | True | True | 5 |
| 6 | exercise_induced_angina | True | True | True | False | True | True | 5 |
| 7 | age | True | True | True | False | True | True | 5 |
| 8 | st_slope_upsloping | True | True | True | False | True | False | 4 |
| 9 | sex_male | True | True | True | True | False | False | 4 |
| 10 | chest_pain_type_typical_angina | True | True | True | True | False | False | 4 |
| 11 | chest_pain_type_typical | True | True | True | True | False | False | 4 |
| 12 | chest_pain_type_non_anginal_pain | True | True | True | True | False | False | 4 |
| 13 | rest_ecg_st_t_wave_abnormality | True | True | True | False | False | False | 3 |
| 14 | rest_ecg_normal | True | True | True | False | False | False | 3 |
| 15 | fasting_blood_sugar | True | True | True | False | False | False | 3 |

As a result, we will now select only the top 9 features. Our machine learning models will be retrained with these 9 selected features and their performance will be compared to see if there is an improvement.
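A compact sketch of the majority-vote idea using scikit-learn only, on synthetic data with 15 features: each method marks the features it keeps, the votes are summed, and the k = 9 features with the most votes are selected. Assumptions: the Pearson and chi-square filters keep the top k features, RFE wraps logistic regression, the embedded methods use `SelectFromModel` with its default mean-importance threshold, and LightGBM is left out so the sketch depends only on scikit-learn. Ties in the vote total are broken by column order (a stable sort), which matters because several features in the table above share a total of 4.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, chi2
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import MinMaxScaler

K = 9  # number of features to keep, as in the README

# Synthetic stand-in for the 15 encoded features, scaled to [0, 1]
# because chi2 requires non-negative inputs.
X_raw, y = make_classification(n_samples=500, n_features=15, n_informative=5,
                               n_redundant=0, random_state=0)
X = pd.DataFrame(MinMaxScaler().fit_transform(X_raw),
                 columns=[f"feature_{i}" for i in range(15)])

def pearson_top_k(X, y, k):
    """Mark the k features with the largest absolute Pearson correlation with y."""
    corr = X.apply(lambda col: abs(np.corrcoef(col, y)[0, 1]))
    return X.columns.isin(corr.nlargest(k).index)

votes = pd.DataFrame(index=X.columns)
votes["Pearson"] = pearson_top_k(X, y, K)                                   # filter
votes["Chi-2"] = SelectKBest(chi2, k=K).fit(X, y).get_support()             # filter
votes["RFE"] = RFE(LogisticRegression(max_iter=1000),
                   n_features_to_select=K).fit(X, y).get_support()          # wrapper
# Embedded: keep features whose importance exceeds the mean importance.
votes["Logistic"] = SelectFromModel(LogisticRegression(max_iter=1000)).fit(X, y).get_support()
votes["Random Forest"] = SelectFromModel(
    RandomForestClassifier(n_estimators=100, random_state=0)).fit(X, y).get_support()
votes["Total"] = votes.sum(axis=1)

# Stable sort so that tied features keep their original column order.
ranking = votes.sort_values("Total", ascending=False, kind="stable")
selected = list(ranking.index[:K])
print(ranking)
print("Selected:", selected)
```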

Top 5 classifiers after feature selection

| # | Model | Accuracy | Precision | Sensitivity | Specificity | F1 Score | ROC | Log_Loss | MCC |
|---|---|---|---|---|---|---|---|---|---|
| 15 | ExtraTreesClassifier500 | 0.918455 | 0.880597 | 0.975207 | 0.857143 | 0.925490 | 0.916175 | 2.816522 | 0.841274 |
| 22 | ExtraTreesClassifier1000 | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |
| 18 | XGBClassifier2000 | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |
| 14 | ExtraTreesClassifier100 | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |
| 12 | RandomForestClassifier_Entropy100 | 0.914163 | 0.874074 | 0.975207 | 0.848214 | 0.921875 | 0.911710 | 2.964760 | 0.833381 |

Soft Voting Classifier

| # | Model | Accuracy | Precision | Sensitivity | Specificity | F1 Score | ROC | Log_Loss | MCC |
|---|---|---|---|---|---|---|---|---|---|
| 0 | Soft Voting | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |
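A sketch of soft voting with scikit-learn's `VotingClassifier`: with `voting="soft"`, the ensemble averages the members' predicted class probabilities and predicts the class with the highest average. The member models below are hypothetical picks from the top performers, and the data is synthetic with 9 features to mirror the selected feature set; the README does not list which models were combined.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier, VotingClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the 9 selected features.
X, y = make_classification(n_samples=600, n_features=9, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Hypothetical ensemble members.
soft_vote = VotingClassifier(
    estimators=[
        ("et500", ExtraTreesClassifier(n_estimators=500, random_state=0)),
        ("rf_entropy100", RandomForestClassifier(
            n_estimators=100, criterion="entropy", random_state=0)),
        ("rf_gini100", RandomForestClassifier(
            n_estimators=100, criterion="gini", random_state=0)),
    ],
    voting="soft",  # average predict_proba across members, then take the argmax
)
soft_vote.fit(X_train, y_train)

proba = soft_vote.predict_proba(X_test)
y_pred = soft_vote.predict(X_test)

# With no weights, soft voting is the plain mean of the fitted members' probabilities.
avg = np.mean([est.predict_proba(X_test) for est in soft_vote.estimators_], axis=0)
print("Test accuracy:", (y_pred == y_test).mean())
```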

Top 5 final classifiers after feature selection

| # | Model | Accuracy | Precision | Sensitivity | Specificity | F1 Score | ROC | Log_Loss | MCC |
|---|---|---|---|---|---|---|---|---|---|
| 22 | ExtraTreesClassifier1000 | 0.922747 | 0.887218 | 0.975207 | 0.866071 | 0.929134 | 0.920639 | 2.668283 | 0.849211 |
| 14 | ExtraTreesClassifier100 | 0.922747 | 0.887218 | 0.975207 | 0.866071 | 0.929134 | 0.920639 | 2.668283 | 0.849211 |
| 18 | XGBClassifier2000 | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |
| 15 | ExtraTreesClassifier500 | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |
| 0 | Soft Voting | 0.914163 | 0.879699 | 0.966942 | 0.857143 | 0.921260 | 0.912043 | 2.964757 | 0.831855 |

- As part of this project, we analyzed the Heart Disease Dataset (Comprehensive) and performed detailed data analysis and data processing.

- More than 20 machine learning models were trained and evaluated; comparing their performance, the ExtraTreesClassifier500 model with the entropy criterion performed best, with an accuracy of 93.13%.

- We have also implemented a majority vote feature selection method that involves two filter-based, one wrapper-based, and three embedded feature selection methods.

- After feature selection, ExtraTreesClassifier1000 achieves the highest accuracy, 92.27%, which is less than 1 percentage point below the best accuracy before feature selection (93.13%, ExtraTreesClassifier500).

- Based on the feature importance plots, ST slope, cholesterol, and maximum heart rate achieved contributed the most to the predictions.