This repository contains the code and data related to the BIOSCAN-5M project. BIOSCAN-5M is a comprehensive multi-modal dataset comprising over 5 million specimens, 98% of which are insects. Every record has both image and DNA data.

If you make use of the BIOSCAN-5M dataset and/or this code repository, please cite the following paper:

    @misc{gharaee2024bioscan5m,
        title={{BIOSCAN-5M}: A Multimodal Dataset for Insect Biodiversity},
        author={Zahra Gharaee and Scott C. Lowe and ZeMing Gong and Pablo Millan Arias
            and Nicholas Pellegrino and Austin T. Wang and Joakim Bruslund Haurum
            and Iuliia Zarubiieva and Lila Kari and Dirk Steinke and Graham W. Taylor
            and Paul Fieguth and Angel X. Chang
        },
        year={2024},
        eprint={2406.12723},
        archivePrefix={arXiv},
        primaryClass={cs.LG},
        doi={10.48550/arxiv.2406.12723},
    }

We present the BIOSCAN-5M dataset to the machine learning community. We hope this dataset will facilitate the development of tools to automate aspects of the monitoring of global insect biodiversity.

Each record of the BIOSCAN-5M dataset contains six primary attributes:

- RGB image
  - Metadata field: processid
- DNA barcode sequence
  - Metadata field: dna_barcode
- Barcode Index Number (BIN)
  - Metadata field: dna_bin
- Biological taxonomic classification
  - Metadata fields: phylum, class, order, family, subfamily, genus, species
- Geographical information
  - Metadata fields: country, province_state, latitude, longitude
- Specimen size
  - Metadata fields: image_measurement_value, area_fraction, scale_factor

All dataset image packages and metadata files can be downloaded from the Google Drive folder. The dataset is also available on Zenodo, Kaggle, and HuggingFace.

The images and metadata in the BIOSCAN-5M dataset available through this repository are subject to the following copyright and licensing restrictions:

- Copyright Holder: CBG Photography Group

- Copyright Institution: Centre for Biodiversity Genomics (email: CBGImaging@gmail.com)

- Photographer: CBG Robotic Imager

- Copyright License: Creative Commons Attribution 3.0 Unported (CC BY 3.0)

- Copyright Contact: collectionsBIO@gmail.com

- Copyright Year: 2021

The dataset metadata file BIOSCAN_5M_Insect_Dataset_metadata contains biological, geographic, and size information about the organisms. We provide this metadata in both CSV and JSON-LD formats.
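As a minimal sketch of how the CSV metadata might be read with Python's standard library, the example below parses a two-row excerpt. The rows and their values are invented for illustration; only the column names come from the field lists in this README, and the real file contains more columns. Treating empty cells as missing values is also an assumption about how the file encodes them.

```python
import csv
import io

# Hypothetical two-row excerpt: values are made up, column names follow this README.
SAMPLE_CSV = """processid,dna_bin,order,country,latitude,longitude,split
SAMPLE001,BOLD:AAA0001,Diptera,Canada,43.5,-80.2,train
SAMPLE002,,Hymenoptera,Costa Rica,10.9,-85.6,pretrain
"""


def read_metadata(handle):
    """Parse metadata rows, mapping empty cells to None and coordinates to float."""
    rows = []
    for row in csv.DictReader(handle):
        # Assumption: an empty string means the value is missing.
        record = {key: (value if value != "" else None) for key, value in row.items()}
        for field in ("latitude", "longitude"):
            if record[field] is not None:
                record[field] = float(record[field])
        rows.append(record)
    return rows


records = read_metadata(io.StringIO(SAMPLE_CSV))
```

To read the real file, pass an open file handle (for example `open(path, newline="")`) instead of the in-memory sample.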

The BIOSCAN-5M dataset comprises resized and cropped images. We have provided various packages of the BIOSCAN-5M dataset, each tailored for specific purposes.

We trained a model on examples from this dataset to build the cropping tool introduced in BIOSCAN-1M, which automatically generates a bounding box around the insect. We used this tool to crop each image down to only the region of interest.

- BIOSCAN_5M_original_full: The raw images of the dataset.

- BIOSCAN_5M_cropped: Images after cropping with our cropping tool.

- BIOSCAN_5M_original_256: Original images resized to 256 on their shorter side.

- BIOSCAN_5M_cropped_256: Cropped images resized to 256 on their shorter side.

Example images (not reproduced here) are shown for each package: BIOSCAN_5M_original_full, BIOSCAN_5M_cropped, BIOSCAN_5M_original_256, and BIOSCAN_5M_cropped_256.
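The "resized to 256 on their shorter side" rule used by the two `_256` packages can be illustrated with a small helper. This is a sketch only: the function name and the rounding convention are assumptions, and the actual resizing code used to build the packages may differ.

```python
def resize_shorter_side(width, height, target=256):
    """Return (new_width, new_height) with the shorter side scaled to `target`.

    The aspect ratio is preserved; rounding to the nearest pixel is an assumption.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = target / min(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, a 1024 x 768 image would become 341 x 256 under this convention.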

The BIOSCAN-5M dataset provides geographic information associated with the collection sites of the organisms.

The following geographic data is presented in the country, province_state, latitude, and longitude fields of the metadata file(s):

- Latitude and Longitude coordinates

- Country

- Province or State

The BIOSCAN-5M dataset provides information about the size of the organisms.

The size data is presented in the image_measurement_value, area_fraction, and scale_factor fields of the metadata file(s):

- Image measurement value: Total number of pixels occupied by the organism.

Furthermore, using our cropping tool, we calculated the following size information:

- Area fraction: Fraction of the original image that the cropped image comprises.

- Scale factor: Ratio of the cropped image to the cropped and resized image.
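The two crop-derived quantities can be sketched as follows. This is one plausible reading of the definitions above, not the project's actual code: the function names are hypothetical, and the scale factor is assumed to be the shorter side of the cropped image divided by the 256-pixel target used for the resized packages.

```python
def area_fraction(original_size, cropped_size):
    """Fraction of the original image area covered by the cropped image."""
    original_width, original_height = original_size
    cropped_width, cropped_height = cropped_size
    return (cropped_width * cropped_height) / (original_width * original_height)


def scale_factor(cropped_size, target=256):
    """Ratio of the cropped image to its resized version.

    Assumption: measured on the shorter side, which is resized to `target` pixels.
    """
    return min(cropped_size) / target
```

Under these assumptions, a 512 x 384 crop of a 1024 x 768 original has an area fraction of 0.25 and a scale factor of 1.5.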

We partitioned the BIOSCAN-5M dataset into splits for both closed-world and open-world machine learning problems.

To use the partitions we propose, see the split field of the metadata file(s).

- The closed-world classification task uses samples labelled with a scientific name for their species (train, val, and test partitions).
  - This task requires the model to correctly classify new images and DNA barcodes across a known set of species labels that were seen during training.
- The open-world classification task uses samples whose species name is a placeholder name, and whose genus name is a scientific name (key_unseen, val_unseen, and test_unseen partitions).
  - This task requires the model to correctly group together new species that were not seen during training.
  - In the retrieval paradigm, this task can be performed using test_unseen records as queries against keys from the key_unseen records.
  - Alternatively, this data can be evaluated at the genus level by classification via the species in the train partition.
- Samples labelled with placeholder species names, and whose genus name is not a scientific name, are placed in the other_heldout partition.
  - This data can be used to train an unseen species novelty detector.
- Samples without species labels are placed in the pretrain partition, which comprises 90% of the data.
  - This data can be used for self-supervised or semi-supervised training.
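A short sketch of selecting records by the split field is given below. The sample records and their identifiers are invented for illustration; only the partition names come from the list above, and the helper name is hypothetical.

```python
from collections import defaultdict

CLOSED_WORLD_SPLITS = ("train", "val", "test")
OPEN_WORLD_SPLITS = ("key_unseen", "val_unseen", "test_unseen")

# Hypothetical records: only the `split` values follow this README.
sample_records = [
    {"processid": "SAMPLE001", "split": "train"},
    {"processid": "SAMPLE002", "split": "pretrain"},
    {"processid": "SAMPLE003", "split": "key_unseen"},
    {"processid": "SAMPLE004", "split": "test_unseen"},
    {"processid": "SAMPLE005", "split": "test_unseen"},
]


def group_by_split(records):
    """Group metadata records by the value of their `split` field."""
    groups = defaultdict(list)
    for record in records:
        groups[record["split"]].append(record)
    return dict(groups)


groups = group_by_split(sample_records)
# Retrieval paradigm: test_unseen records are queries, key_unseen records are keys.
queries = groups.get("test_unseen", [])
keys = groups.get("key_unseen", [])
```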

Figure 8: Treemap diagram showing number of samples per partition. For the pretrain partition (blues), we provide a further breakdown indicating the most fine-grained taxonomic rank that is labelled for the samples. For the remainder of the partitions (all of which are labelled to species level) we show the number of samples in the partition. Samples for seen species are shown in shades of green, and unseen in shades of red.

Two stages of the proposed semi-supervised learning set-up based on BarcodeBERT.

- Pretraining: DNA sequences are tokenized using non-overlapping k-mers and 50% of the tokens are masked for the MLM task. Tokens are encoded and fed into a transformer model. The output embeddings are used for token-level classification.

- Fine-tuning: All DNA sequences in a dataset are tokenized using non-overlapping $k$-mer tokenization and all tokenized sequences, without masking, are passed through the pretrained transformer model. Global mean-pooling is applied over the token-level embeddings and the output is used for taxonomic classification.
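The tokenization, masking, and pooling steps described above can be sketched in plain Python. This is an illustration under stated assumptions, not the BarcodeBERT implementation: the value of `k`, the `[MASK]` token name, the choice to drop a trailing partial k-mer, and the fixed random seed are all assumptions made for the example.

```python
import random


def kmer_tokenize(sequence, k=4):
    """Split a DNA sequence into non-overlapping k-mers.

    Assumption: a trailing fragment shorter than k is dropped.
    """
    usable = len(sequence) - len(sequence) % k
    return [sequence[i:i + k] for i in range(0, usable, k)]


def mask_tokens(tokens, mask_ratio=0.5, mask_token="[MASK]", seed=0):
    """Replace a random share of tokens with `mask_token` for the MLM task.

    Returns the masked token list and the sorted masked positions.
    """
    rng = random.Random(seed)
    n_mask = int(len(tokens) * mask_ratio)
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    masked = list(tokens)
    for position in positions:
        masked[position] = mask_token
    return masked, positions


def mean_pool(token_embeddings):
    """Average token-level embedding vectors into one sequence-level vector."""
    count = len(token_embeddings)
    return [sum(column) / count for column in zip(*token_embeddings)]
```

During pretraining, the model would be asked to predict the original k-mers at the masked positions; during fine-tuning, `mean_pool` stands in for the global mean-pooling applied to the unmasked sequence's embeddings.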

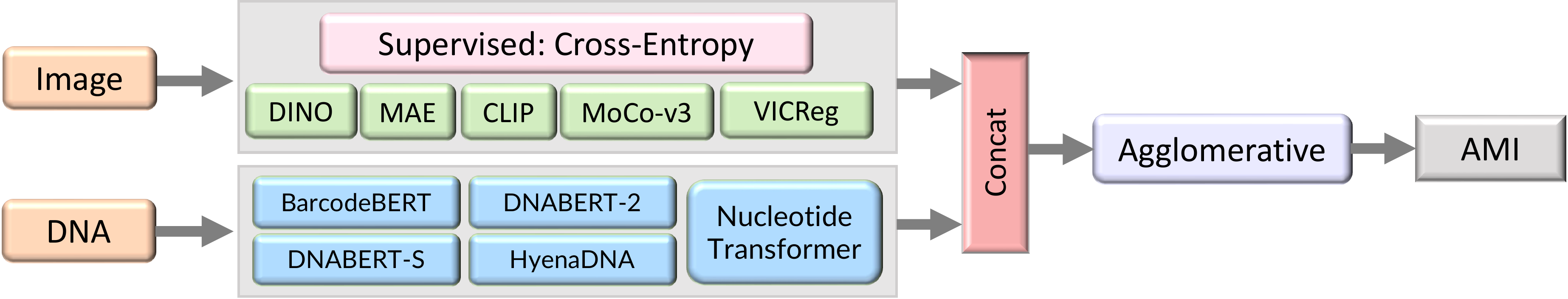

We follow the experimental setup recommended by zero-shot clustering, expanded to operate on multiple modalities.

- Take pretrained encoders.

- Extract feature vectors from the stimuli by passing them through the pretrained encoder.

- Reduce the embeddings with UMAP.

- Cluster the reduced embeddings with Agglomerative Clustering.

- Evaluate against the ground-truth annotations with Adjusted Mutual Information.
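For reference, one common definition of the Adjusted Mutual Information between a predicted clustering $U$ and the ground-truth labelling $V$ is shown below. The arithmetic-mean normalizer in the denominator is an assumption (other normalizers exist, and the one used in these experiments is not stated here). $I$ denotes mutual information, $H$ denotes entropy, and the expectation is taken over random clusterings with the same cluster sizes.

$$
\mathrm{AMI}(U, V) = \frac{I(U; V) - \mathbb{E}[I(U; V)]}{\tfrac{1}{2}\,[H(U) + H(V)] - \mathbb{E}[I(U; V)]}
$$

A score of 1 indicates that the two partitions agree exactly, while a score near 0 indicates agreement no better than chance.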

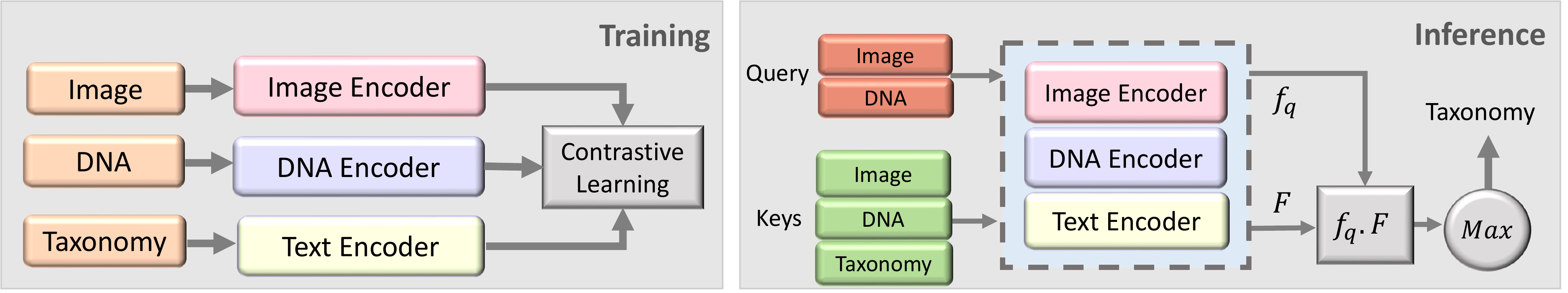

Our experiments using BIOSCAN-CLIP are conducted in two steps.

- Training: Multiple modalities, including RGB images, textual taxonomy, and DNA sequences, are encoded separately, and trained using a contrastive loss function.

- Inference: An image or DNA embedding is used as a query and compared to the embeddings obtained from a database of image, DNA, and text embeddings (keys). Cosine similarity is used to find the closest key embedding, and the corresponding taxonomic label is used to classify the query.
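The inference step can be illustrated with a nearest-key lookup by cosine similarity. This is a minimal sketch with hypothetical function names and toy two-dimensional embeddings; it is not the BIOSCAN-CLIP code, which would operate on encoder outputs and a much larger key database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm


def classify_by_nearest_key(query, keys):
    """Return the label of the key whose embedding is most similar to the query.

    `keys` is a list of (embedding, taxonomic_label) pairs.
    """
    _, best_label = max(keys, key=lambda item: cosine_similarity(query, item[0]))
    return best_label


# Toy key database with made-up embeddings and labels.
toy_keys = [([1.0, 0.0], "label_a"), ([0.0, 1.0], "label_b")]
predicted = classify_by_nearest_key([0.9, 0.1], toy_keys)
```

Because cosine similarity ignores vector length, the query and key embeddings do not need to be normalized beforehand.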