Time-Series Anomaly Detection Comprehensive Benchmark

This repository maintains an up-to-date, comprehensive list of classic and state-of-the-art deep learning methods and datasets for anomaly detection in time series. It is curated by:

- Zahra Z. Darban [Monash University],

- Geoffrey I. Webb [Monash University],

- Shirui Pan [Griffith University],

- Charu C. Aggarwal [IBM], and

- Mahsa Salehi [Monash University]

If you use this repository in your work, please cite the main article:

Zamanzadeh Darban, Z., Webb, G. I., Pan, S., Aggarwal, C. C., & Salehi, M. (2024). Deep Learning for Time Series Anomaly Detection: A Survey. doi:10.1145/3691338

@article{10.1145/3691338,

author = {Zamanzadeh Darban, Zahra and Webb, Geoffrey I. and Pan, Shirui and Aggarwal, Charu and Salehi, Mahsa},

title = {Deep Learning for Time Series Anomaly Detection: A Survey},

year = {2024},

issue_date = {January 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {57},

number = {1},

issn = {0360-0300},

url = {https://doi.org/10.1145/3691338},

doi = {10.1145/3691338}

}

- Revisiting Time Series Outlier Detection: Definitions and Benchmarks, NeurIPS 2021.

- Current Time Series Anomaly Detection Benchmarks are Flawed and are Creating the Illusion of Progress, TKDE 2021.

- Towards a Rigorous Evaluation of Time-Series Anomaly Detection, AAAI 2022.

- Anomaly detection in time series: a comprehensive evaluation, VLDB 2022.

| Dataset/Benchmark | Real/Synth | MTS/UTS | # Samples | # Entities | # Dim | Domain |
|---|---|---|---|---|---|---|
| CalIt2 | Real | MTS | 10,080 | 2 | 2 | Urban events management |
| CAP | Real | MTS | 921,700,000 | 108 | 21 | Medical and health |
| CICIDS2017 | Real | MTS | 2,830,540 | 15 | 83 | Server machines monitoring |
| Credit Card fraud detection | Real | MTS | 284,807 | 1 | 31 | Fraud detection |
| DMDS | Real | MTS | 725,402 | 1 | 32 | Industrial control systems |
| Engine Dataset | Real | MTS | NA | NA | 12 | Industrial control systems |
| Exathlon | Real | MTS | 47,530 | 39 | 45 | Server machines monitoring |
| GECCO IoT | Real | MTS | 139,566 | 1 | 9 | Internet of things (IoT) |
| Genesis | Real | MTS | 16,220 | 1 | 18 | Industrial control systems |
| GHL | Synth | MTS | 200,001 | 48 | 22 | Industrial control systems |
| IOnsphere | Real | MTS | 351 | | 32 | Astronomical studies |
| KDDCUP99 | Real | MTS | 4,898,427 | 5 | 41 | Computer networks |
| Kitsune | Real | MTS | 3,018,973 | 9 | 115 | Computer networks |
| MBD | Real | MTS | 8,640 | 5 | 26 | Server machines monitoring |
| Metro | Real | MTS | 48,204 | 1 | 5 | Urban events management |
| MIT-BIH Arrhythmia (ECG) | Real | MTS | 28,600,000 | 48 | 2 | Medical and health |
| MIT-BIH-SVDB | Real | MTS | 17,971,200 | 78 | 2 | Medical and health |
| MMS | Real | MTS | 4,370 | 50 | 7 | Server machines monitoring |
| MSL | Real | MTS | 132,046 | 27 | 55 | Aerospace |
| NAB-realAdExchange | Real | MTS | 9,616 | 3 | 2 | Business |
| NAB-realAWSCloudwatch | Real | MTS | 67,644 | 1 | 17 | Server machines monitoring |
| NASA Shuttle Valve Data | Real | MTS | 49,097 | 1 | 9 | Aerospace |
| OPPORTUNITY | Real | MTS | 869,376 | 24 | 133 | Computer networks |
| Pooled Server Metrics (PSM) | Real | MTS | 132,480 | 1 | 24 | Server machines monitoring |
| PUMP | Real | MTS | 220,302 | 1 | 44 | Industrial control systems |
| SMAP | Real | MTS | 562,800 | 55 | 25 | Environmental management |
| SMD | Real | MTS | 1,416,825 | 28 | 38 | Server machines monitoring |
| SWAN-SF | Real | MTS | 355,330 | 5 | 51 | Astronomical studies |
| SWaT | Real | MTS | 946,719 | 1 | 51 | Industrial control systems |
| WADI | Real | MTS | 957,372 | 1 | 127 | Industrial control systems |
| NYC Bike | Real | MTS/UTS | +25M | NA | NA | Urban events management |
| NYC Taxi | Real | MTS/UTS | +200M | NA | NA | Urban events management |
| UCR | Real/Synth | MTS/UTS | NA | NA | NA | Multiple domains |
| Dodgers Loop Sensor Dataset | Real | UTS | 50,400 | 1 | 1 | Urban events management |
| IOPS | Real | UTS | 2,918,821 | 29 | 1 | Business |
| KPI AIOPS | Real | UTS | 5,922,913 | 58 | 1 | Business |
| MGAB | Synth | UTS | 100,000 | 10 | 1 | Medical and health |
| MIT-BIH-LTDB | Real | UTS | 67,944,954 | 7 | 1 | Medical and health |
| NAB-artificialNoAnomaly | Synth | UTS | 20,165 | 5 | 1 | - |
| NAB-artificialWithAnomaly | Synth | UTS | 24,192 | 6 | 1 | - |
| NAB-realKnownCause | Real | UTS | 69,568 | 7 | 1 | Multiple domains |
| NAB-realTraffic | Real | UTS | 15,662 | 7 | 1 | Urban events management |
| NAB-realTweets | Real | UTS | 158,511 | 10 | 1 | Business |
| NeurIPS-TS | Synth | UTS | NA | 1 | 1 | - |
| NormA | Real/Synth | UTS | 1,756,524 | 21 | 1 | Multiple domains |
| Power Demand Dataset | Real | UTS | 35,040 | 1 | 1 | Industrial control systems |
| SensoreScope | Real | UTS | 621,874 | 23 | 1 | Internet of things (IoT) |
| Space Shuttle Dataset | Real | UTS | 15,000 | 15 | 1 | Aerospace |
| Yahoo | Real/Synth | UTS | 572,966 | 367 | 1 | Multiple domains |

| A1 | MA2 | Model | Year | Su/Un3 | Input | P/S4 | Code |

|---|---|---|---|---|---|---|---|

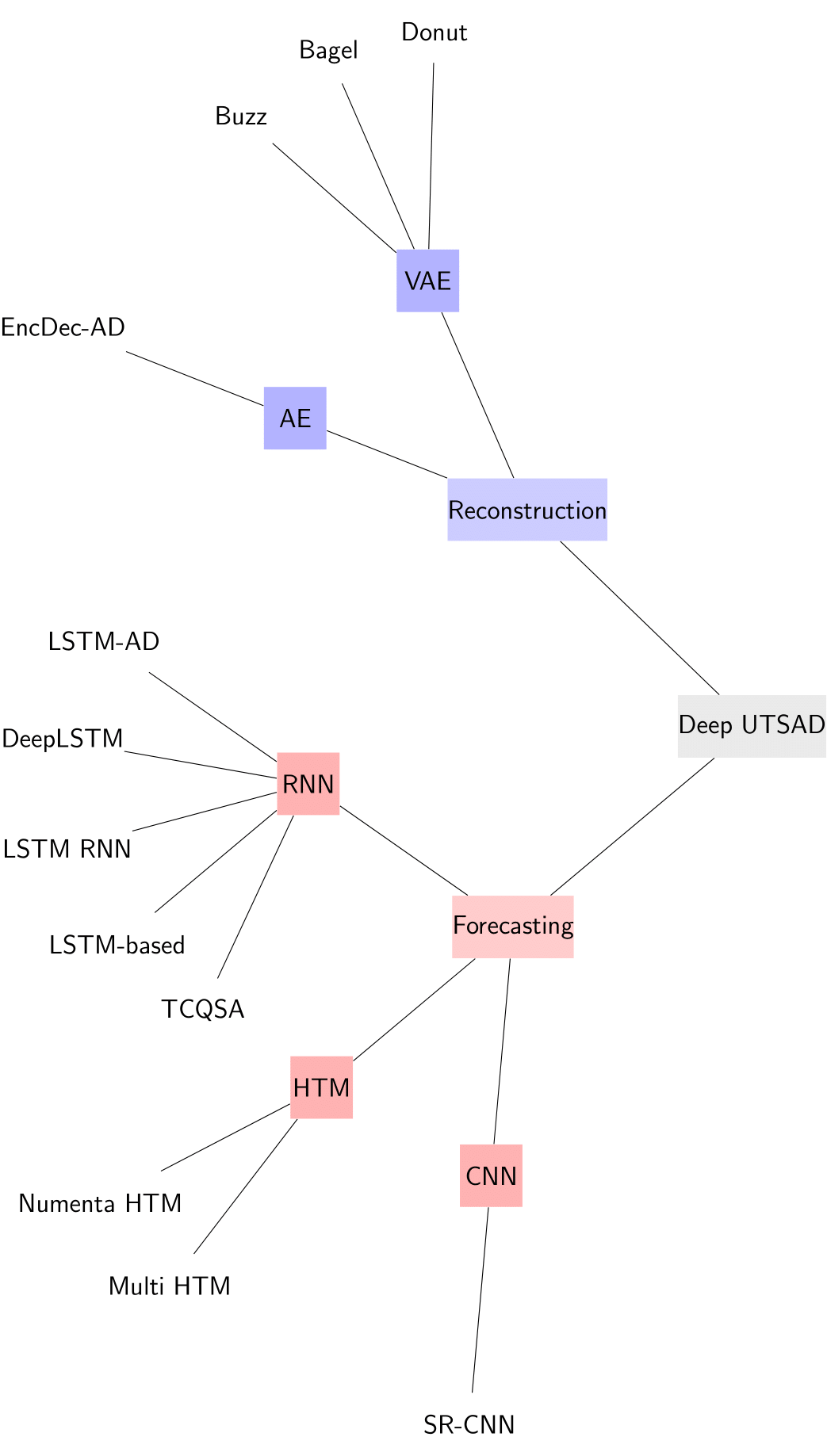

| Forecasting | RNN | LSTM-AD [1] | Year | Un | P | Point | Github |

| Forecasting | RNN | DeepLSTM [13] | 2015 | Semi | P | Point | |

| Forecasting | RNN | LSTM RNN [2] | 2015 | Semi | P | Subseq | |

| Forecasting | RNN | LSTM-based [3] | 2019 | Un | W | - | |

| Forecasting | RNN | TCQSA [4] | 2020 | Su | P | - | |

| Forecasting | HTM | Numenta HTM [5] | 2017 | Un | - | - | |

| Forecasting | HTM | Multi HTM [6] | 2018 | Un | - | - | Github |

| Forecasting | CNN | SR-CNN [7] | 2019 | Un | W | Point + Subseq | Github |

| Reconstruction | VAE | Donut [8] | 2018 | Un | W | Subseq | Github |

| Reconstruction | VAE | Bagel [10] | 2018 | Un | W | Subseq | Github |

| Reconstruction | VAE | Buzz [9] | 2019 | Un | W | Subseq | |

| Reconstruction | AE | EncDec-AD [11] | 2016 | Semi | W | Point | Github |

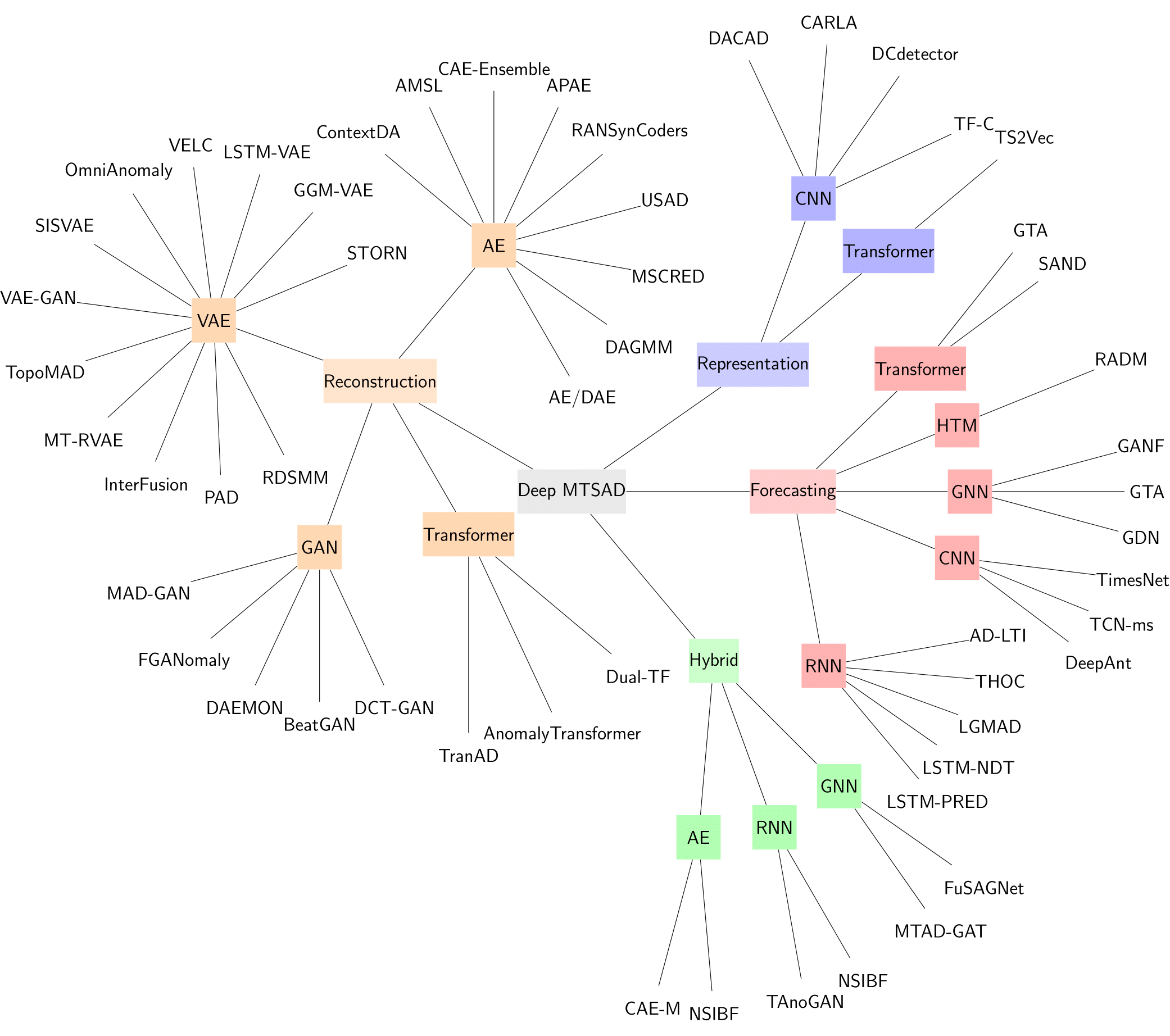

| A¹ | MA² | Model | Year | T/S³ | Su/Un⁴ | Input | Int⁵ | P/S⁶ | Code |
|---|---|---|---|---|---|---|---|---|---|
| Forecasting | RNN | LSTM-PRED [14] | 2017 | T | Un | W | ✓ | - | |
| Forecasting | RNN | LSTM-NDT [12] | 2018 | T | Un | W | ✓ | Subseq | Github |
| Forecasting | RNN | LGMAD [15] | 2019 | T | Semi | P | | Point | |
| Forecasting | RNN | THOC [16] | 2020 | T | Self | W | | Subseq | |
| Forecasting | RNN | AD-LTI [17] | 2020 | T | Un | P | | Point (frame) | |
| Forecasting | CNN | DeepAnt [18] | 2018 | T | Un | W | | Point + Subseq | Github |
| Forecasting | CNN | TCN-ms [19] | 2019 | T | Un | W | | - | |
| Forecasting | CNN | TimesNet [57] | 2023 | T | Semi | W | | Subseq | Github |
| Forecasting | GNN | GDN [20] | 2021 | S | Un | W | ✓ | - | Github |
| Forecasting | GNN | GTA* [21] | 2021 | ST | Semi | - | | - | Github |
| Forecasting | GNN | GANF [22] | 2022 | ST | Un | W | | | Github |
| Forecasting | HTM | RADM [23] | 2018 | T | Un | W | | - | |
| Forecasting | Transformer | SAND [24] | 2018 | T | Semi | W | | - | Github |
| Forecasting | Transformer | GTA* [21] | 2021 | ST | Semi | - | | - | Github |
| Reconstruction | AE | AE/DAE [25] | 2014 | T | Semi | P | | Point | Github |
| Reconstruction | AE | DAGMM [26] | 2018 | S | Un | P | | Point | Github |
| Reconstruction | AE | MSCRED [27] | 2019 | ST | Un | W | ✓ | Subseq | Github |
| Reconstruction | AE | USAD [28] | 2020 | T | Un | W | | Point | Github |
| Reconstruction | AE | APAE [29] | 2020 | T | Un | W | | - | |
| Reconstruction | AE | RANSynCoders [30] | 2021 | ST | Un | P | ✓ | Point | Github |
| Reconstruction | AE | CAE-Ensemble [31] | 2021 | T | Un | W | | Subseq | Github |
| Reconstruction | AE | AMSL [32] | 2022 | T | Self | W | | - | Github |
| Reconstruction | AE | ContextDA [58] | 2023 | T | Un | W | | Point + Subseq | |
| Reconstruction | VAE | STORN [35] | 2016 | ST | Un | P | | Point | |
| Reconstruction | VAE | GGM-VAE [36] | 2018 | T | Un | W | | Subseq | |
| Reconstruction | VAE | LSTM-VAE [33] | 2018 | T | Semi | P | | - | Github |
| Reconstruction | VAE | OmniAnomaly [34] | 2019 | T | Un | W | ✓ | Point + Subseq | Github |
| Reconstruction | VAE | VELC [39] | 2019 | T | Un | - | | - | Github |
| Reconstruction | VAE | SISVAE [37] | 2020 | T | Un | W | | Point | |
| Reconstruction | VAE | VAE-GAN [38] | 2020 | T | Semi | W | | Point | |
| Reconstruction | VAE | TopoMAD [40] | 2020 | ST | Un | W | | Subseq | Github |
| Reconstruction | VAE | PAD [41] | 2021 | T | Un | W | | Subseq | |
| Reconstruction | VAE | InterFusion [42] | 2021 | ST | Un | W | ✓ | Subseq | Github |
| Reconstruction | VAE | MT-RVAE* [43] | 2022 | ST | Un | W | | - | |
| Reconstruction | VAE | RDSMM [44] | 2022 | T | Un | W | | Point + Subseq | |
| Reconstruction | GAN | MAD-GAN [45] | 2019 | ST | Un | W | | Subseq | Github |
| Reconstruction | GAN | BeatGAN [46] | 2019 | T | Un | W | | Subseq | Github |
| Reconstruction | GAN | DAEMON [47] | 2021 | T | Un | W | ✓ | Subseq | |
| Reconstruction | GAN | FGANomaly [48] | 2021 | T | Un | W | | Point + Subseq | |
| Reconstruction | GAN | DCT-GAN* [49] | 2021 | T | Un | W | | - | |
| Reconstruction | Transformer | Anomaly Transformer [50] | 2021 | T | Un | W | | Subseq | Github |
| Reconstruction | Transformer | DCT-GAN* [49] | 2021 | T | Un | W | | - | |
| Reconstruction | Transformer | TranAD [51] | 2022 | T | Un | W | ✓ | Subseq | Github |
| Reconstruction | Transformer | MT-RVAE* [43] | 2022 | ST | Un | W | | - | |
| Reconstruction | Transformer | Dual-TF [59] | 2024 | T | Un | W | | Point + Subseq | |
| Representation | Transformer | TS2Vec [60] | 2022 | T | Self | P | | Point | Github |
| Representation | CNN | TF-C [61] | 2022 | T | Self | W | | - | Github |
| Representation | CNN | DCdetector [62] | 2023 | ST | Self | W | | Point + Subseq | Github |
| Representation | CNN | CARLA [63] | 2024 | ST | Self | W | | Point + Subseq | Github |
| Representation | CNN | DACAD [64] | 2024 | ST | Self | W | | Point + Subseq | Github |
| Hybrid | AE | CAE-M [52] | 2021 | ST | Un | W | | Subseq | |
| Hybrid | AE | NSIBF* [53] | 2021 | T | Un | W | | Subseq | Github |
| Hybrid | RNN | TAnoGAN [54] | 2020 | T | Un | W | | Subseq | Github |
| Hybrid | RNN | NSIBF* [53] | 2021 | T | Un | W | | Subseq | Github |
| Hybrid | GNN | MTAD-GAT [55] | 2020 | ST | Self | W | ✓ | Subseq | Github |
| Hybrid | GNN | FuSAGNet [56] | 2022 | ST | Semi | W | | Subseq | Github |

1: Approach.

2: Main Approach.

3: Temporal/Spatial

4: Supervised/Unsupervised | Values: [Su: Supervised, Un: Unsupervised, Semi: Semi-supervised, Self: Self-supervised].

5: Interpretability

6: Point/Sub-sequence

| Metrics | Value Explanation | When to Use |
|---|---|---|
| Precision | Low precision indicates many false alarms (normal instances classified as anomalies). High precision indicates most detected anomalies are actual anomalies, implying few false alarms. | Use when it is crucial to minimize false alarms and ensure that detected anomalies are truly significant. |
| Recall | Low recall indicates many true anomalies are missed, leading to undetected critical events. High recall indicates most anomalies are detected, ensuring prompt action on critical events. | Use when it is critical to detect all anomalies, even if it means tolerating some false alarms. |
| F1 | Low F1 score indicates poor balance between precision and recall, leading to many missed anomalies, many false alarms, or both. High F1 score indicates a good balance, ensuring reliable anomaly detection with minimal misses and false alarms. | Use when a balance between precision and recall is needed to ensure reliable overall performance. |
| F1PA Score | Low F1PA indicates difficulty in accurately identifying the exact points of anomalies. High F1PA indicates effective handling of slight deviations, ensuring precise anomaly detection. | Use when anomalies may not be precisely aligned, and slight deviations in detection points are acceptable. |
| PA%K | Low PA%K indicates that the model struggles to detect a sufficient portion of the anomalous segment. High PA%K indicates effective detection of segments, ensuring that a significant portion of the segment is identified as anomalous. | Use when evaluating the model's performance in detecting segments of anomalies rather than individual points. |
| AU-PR | Low AU-PR indicates poor model performance, especially with imbalanced datasets. High AU-PR indicates strong performance, maintaining high precision and recall across thresholds. | Use when dealing with imbalanced datasets, where anomalies are rare compared to normal instances. |
| AU-ROC | Low AU-ROC indicates the model struggles to distinguish between normal and anomalous patterns. High AU-ROC indicates effective differentiation, providing reliable anomaly detection. | Use for a general assessment of the model's ability to distinguish between normal and anomalous instances. |
| MTTD | High MTTD indicates significant delays in detecting anomalies. Low MTTD indicates quick detection, allowing prompt responses to critical events. | Use when the speed of anomaly detection is critical, and prompt action is required. |
| Affiliation | High value of the affiliation metric indicates a strong overlap or alignment between the detected anomalies and the true anomalies in a time series. | Use when a comprehensive evaluation is required, or the focus is early detection. |
| VUS | A higher VUS value indicates better performance: the anomaly scores separate anomalous from normal points well across all thresholds and across a range of tolerance buffer lengths around the labeled anomalies. | Use when a holistic and threshold-free evaluation of TSAD methods is required. |
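To make the difference between F1, F1PA, and PA%K concrete, here is a minimal sketch in plain Python. It is not the survey's reference implementation: the function names (`segments`, `point_adjust`, `precision_recall_f1`) are hypothetical, labels and predictions are assumed to be binary lists of equal length, and `k` is assumed to be a percentage threshold on the fraction of a true anomalous segment that must be flagged before the whole segment counts as detected. With `k=0` this reduces to classic point adjustment, where a single hit is enough.

```python
def segments(labels):
    """Return (start, end) pairs, end exclusive, for each run of 1s in labels."""
    runs, start = [], None
    for i, value in enumerate(labels):
        if value and start is None:
            start = i
        elif not value and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(labels)))
    return runs


def point_adjust(y_true, y_pred, k=0.0):
    """Mark a whole true segment as detected when more than k percent of it is flagged.

    k=0 is classic point adjustment (F1PA); k>0 is the stricter PA%K variant.
    """
    adjusted = list(y_pred)
    for start, end in segments(y_true):
        hit_ratio = sum(y_pred[start:end]) / (end - start)
        if hit_ratio > k / 100.0:
            adjusted[start:end] = [1] * (end - start)
    return adjusted


def precision_recall_f1(y_true, y_pred):
    """Point-wise precision, recall, and F1 for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy series: two true anomalous segments, one partially detected, plus one false alarm.
y_true = [0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0]
y_pred = [0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]

print("F1        :", precision_recall_f1(y_true, y_pred)[2])
print("F1PA      :", precision_recall_f1(y_true, point_adjust(y_true, y_pred))[2])
print("F1 @ PA%50:", precision_recall_f1(y_true, point_adjust(y_true, y_pred, k=50))[2])
```

On this toy series the raw F1 is 0.25, point adjustment lifts it to about 0.73 because one hit credits the whole first segment, and PA%K with `k=50` falls back to 0.25 because only a quarter of that segment was flagged. This is why F1PA can look optimistic and why PA%K is often reported alongside it.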

[1] Pankaj Malhotra, Lovekesh Vig, Gautam Shroff, Puneet Agarwal, et al. 2015. Long short term memory networks for anomaly detection in time series. In Proceedings of ESANN, Vol. 89. 89–94.

[2] Loïc Bontemps, Van Loi Cao, James McDermott, and Nhien-An Le-Khac. 2016. Collective anomaly detection based on long short-term memory recurrent neural networks. In International conference on future data and security engineering. Springer, 141–152.

[3] Tolga Ergen and Suleyman Serdar Kozat. 2019. Unsupervised anomaly detection with LSTM neural networks. IEEE Transactions on Neural Networks and Learning Systems 31, 8 (2019), 3127–3141.

[4] Fan Liu, Xingshe Zhou, Jinli Cao, Zhu Wang, Tianben Wang, Hua Wang, and Yanchun Zhang. 2020. Anomaly detection in quasi-periodic time series based on automatic data segmentation and attentional LSTM-CNN. IEEE Transactions on Knowledge and Data Engineering (2020).

[5] Subutai Ahmad, Alexander Lavin, Scott Purdy, and Zuha Agha. 2017. Unsupervised real-time anomaly detection for streaming data. Neurocomputing 262 (2017), 134–147.

[6] Jia Wu, Weiru Zeng, and Fei Yan. 2018. Hierarchical temporal memory method for time-series-based anomaly detection. Neurocomputing 273 (2018), 535–546.

[7] Hansheng Ren, Bixiong Xu, Yujing Wang, Chao Yi, Congrui Huang, Xiaoyu Kou, Tony Xing, Mao Yang, Jie Tong, and Qi Zhang. 2019. Time-series anomaly detection service at microsoft. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining. 3009–3017.

[8] Haowen Xu, Wenxiao Chen, Nengwen Zhao, Zeyan Li, Jiahao Bu, Zhihan Li, Ying Liu, Youjian Zhao, Dan Pei, Yang Feng, et al. 2018. Unsupervised anomaly detection via variational auto-encoder for seasonal kpis in web applications. In World Wide Web Conference. 187–196.

[9] Wenxiao Chen, Haowen Xu, Zeyan Li, Dan Pei, Jie Chen, Honglin Qiao, Yang Feng, and Zhaogang Wang. 2019. Unsupervised anomaly detection for intricate kpis via adversarial training of vae. In IEEE INFOCOM 2019-IEEE Conference on Computer Communications. IEEE, 1891–1899.

[10] Zeyan Li, Wenxiao Chen, and Dan Pei. 2018. Robust and unsupervised kpi anomaly detection based on conditional variational autoencoder. In International Performance Computing and Communications Conference (IPCCC). IEEE, 1–9.

[11] Pankaj Malhotra, Anusha Ramakrishnan, Gaurangi Anand, Lovekesh Vig, Puneet Agarwal, and Gautam Shroff. 2016. LSTM-based encoder-decoder for multi-sensor anomaly detection. arXiv preprint arXiv:1607.00148 (2016).

[12] Kyle Hundman, Valentino Constantinou, Christopher Laporte, Ian Colwell, and Tom Soderstrom. 2018. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining. 387–395.

[13] Sucheta Chauhan and Lovekesh Vig. 2015. Anomaly detection in ECG time signals via deep long short-term memory networks. In 2015 IEEE International Conference on Data Science and Advanced Analytics (DSAA). IEEE, 1–7.

[14] Jonathan Goh, Sridhar Adepu, Marcus Tan, and Zi Shan Lee. 2017. Anomaly detection in cyber physical systems using recurrent neural networks. In 2017 IEEE 18th International Symposium on High Assurance Systems Engineering (HASE). IEEE, 140–145.

[15] Nan Ding, HaoXuan Ma, Huanbo Gao, YanHua Ma, and GuoZhen Tan. 2019. Real-time anomaly detection based on long short-Term memory and Gaussian Mixture Model. Computers & Electrical Engineering 79 (2019), 106458.

[16] Lifeng Shen, Zhuocong Li, and James Kwok. 2020. Timeseries anomaly detection using temporal hierarchical one-class network. Advances in Neural Information Processing Systems 33 (2020), 13016–13026.

[17] Wentai Wu, Ligang He, Weiwei Lin, Yi Su, Yuhua Cui, Carsten Maple, and Stephen A Jarvis. 2020. Developing an unsupervised real-time anomaly detection scheme for time series with multi-seasonality. IEEE Transactions on Knowledge and Data Engineering (2020).

[18] Mohsin Munir, Shoaib Ahmed Siddiqui, Andreas Dengel, and Sheraz Ahmed. 2018. DeepAnT: A deep learning approach for unsupervised anomaly detection in time series. Ieee Access 7 (2018), 1991–2005.

[19] Yangdong He and Jiabao Zhao. 2019. Temporal convolutional networks for anomaly detection in time series. In Journal of Physics: Conference Series, Vol. 1213. IOP Publishing, 042050.

[20] Ailin Deng and Bryan Hooi. 2021. Graph neural network-based anomaly detection in multivariate time series. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35. 4027–4035.

[21] Zekai Chen, Dingshuo Chen, Xiao Zhang, Zixuan Yuan, and Xiuzhen Cheng. 2021. Learning graph structures with transformer for multivariate time series anomaly detection in iot. IEEE Internet of Things Journal (2021).

[22] Enyan Dai and Jie Chen. 2022. Graph-Augmented Normalizing Flows for Anomaly Detection of Multiple Time Series. arXiv preprint arXiv:2202.07857 (2022).

[23] Nan Ding, Huanbo Gao, Hongyu Bu, Haoxuan Ma, and Huaiwei Si. 2018. Multivariate-time-series-driven real-time anomaly detection based on bayesian network. Sensors 18, 10 (2018), 3367.

[24] Huan Song, Deepta Rajan, Jayaraman Thiagarajan, and Andreas Spanias. 2018. Attend and diagnose: Clinical time series analysis using attention models. In Proceedings of the AAAI conference on artificial intelligence, Vol. 32.

[25] Mayu Sakurada and Takehisa Yairi. 2014. Anomaly detection using autoencoders with nonlinear dimensionality reduction. In Workshop on Machine Learning for Sensory Data Analysis. 4–11.

[26] Bo Zong, Qi Song, Martin Renqiang Min, Wei Cheng, Cristian Lumezanu, Daeki Cho, and Haifeng Chen. 2018. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In International conference on learning representations.

[27] Chuxu Zhang, Dongjin Song, Yuncong Chen, Xinyang Feng, Cristian Lumezanu, Wei Cheng, Jingchao Ni, Bo Zong, Haifeng Chen, and Nitesh V Chawla. 2019. A deep neural network for unsupervised anomaly detection and diagnosis in multivariate time series data. In Proceedings of the AAAI conference on artificial intelligence, Vol. 33. 1409–1416.

[28] Julien Audibert, Pietro Michiardi, Frédéric Guyard, Sébastien Marti, and Maria A Zuluaga. 2020. Usad: Unsupervised anomaly detection on multivariate time series. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 3395–3404.

[29] Adam Goodge, Bryan Hooi, See-Kiong Ng, and Wee Siong Ng. 2020. Robustness of Autoencoders for Anomaly Detection Under Adversarial Impact. In IJCAI. 1244–1250.

[30] Ahmed Abdulaal, Zhuanghua Liu, and Tomer Lancewicki. 2021. Practical approach to asynchronous multivariate time series anomaly detection and localization. In ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 2485–2494.

[31] David Campos, Tung Kieu, Chenjuan Guo, Feiteng Huang, Kai Zheng, Bin Yang, and Christian S Jensen. 2021. Unsupervised Time Series Outlier Detection with Diversity-Driven Convolutional Ensembles–Extended Version. arXiv preprint arXiv:2111.11108 (2021).

[32] Yuxin Zhang, Jindong Wang, Yiqiang Chen, Han Yu, and Tao Qin. 2022. Adaptive memory networks with self-supervised learning for unsupervised anomaly detection. IEEE Transactions on Knowledge and Data Engineering (2022).

[33] Daehyung Park, Yuuna Hoshi, and Charles C Kemp. 2018. A multimodal anomaly detector for robot-assisted feeding using an lstm-based variational autoencoder. IEEE Robotics and Automation Letters 3, 3 (2018), 1544–1551.

[34] Ya Su, Youjian Zhao, Chenhao Niu, Rong Liu, Wei Sun, and Dan Pei. 2019. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining. 2828–2837.

[35] Maximilian Sölch, Justin Bayer, Marvin Ludersdorfer, and Patrick van der Smagt. 2016. Variational inference for on-line anomaly detection in high-dimensional time series. arXiv preprint arXiv:1602.07109 (2016).

[36] Yifan Guo, Weixian Liao, Qianlong Wang, Lixing Yu, Tianxi Ji, and Pan Li. 2018. Multidimensional time series anomaly detection: A gru-based gaussian mixture variational autoencoder approach. In Asian Conference on Machine Learning. PMLR, 97–112.

[37] Longyuan Li, Junchi Yan, Haiyang Wang, and Yaohui Jin. 2020. Anomaly detection of time series with smoothness-inducing sequential variational auto-encoder. IEEE transactions on neural networks and learning systems 32, 3 (2020), 1177–1191.

[38] Zijian Niu, Ke Yu, and Xiaofei Wu. 2020. LSTM-based VAE-GAN for time-series anomaly detection. Sensors 20, 13 (2020), 3738.

[39] Chunkai Zhang, Shaocong Li, Hongye Zhang, and Yingyang Chen. 2019. VELC: A new variational autoencoder based model for time series anomaly detection. arXiv preprint arXiv:1907.01702 (2019).

[40] Zilong He, Pengfei Chen, Xiaoyun Li, Yongfeng Wang, Guangba Yu, Cailin Chen, Xinrui Li, and Zibin Zheng. 2020. A spatiotemporal deep learning approach for unsupervised anomaly detection in cloud systems. IEEE Transactions on Neural Networks and Learning Systems (2020).

[41] Run-Qing Chen, Guang-Hui Shi, Wan-Lei Zhao, and Chang-Hui Liang. 2021. A joint model for IT operation series prediction and anomaly detection. Neurocomputing 448 (2021), 130–139.

[42] Zhihan Li, Youjian Zhao, Jiaqi Han, Ya Su, Rui Jiao, Xidao Wen, and Dan Pei. 2021. Multivariate time series anomaly detection and interpretation using hierarchical inter-metric and temporal embedding. In ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 3220–3230.

[43] Xixuan Wang, Dechang Pi, Xiangyan Zhang, Hao Liu, and Chang Guo. 2022. Variational transformer-based anomaly detection approach for multivariate time series. Measurement 191 (2022), 110791.

[44] Longyuan Li, Junchi Yan, Qingsong Wen, Yaohui Jin, and Xiaokang Yang. 2022. Learning Robust Deep State Space for Unsupervised Anomaly Detection in Contaminated Time-Series. IEEE Transactions on Knowledge and Data Engineering (2022).

[45] Dan Li, Dacheng Chen, Baihong Jin, Lei Shi, Jonathan Goh, and See-Kiong Ng. 2019. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In International conference on artificial neural networks. Springer, 703–716.

[46] Bin Zhou, Shenghua Liu, Bryan Hooi, Xueqi Cheng, and Jing Ye. 2019. BeatGAN: Anomalous Rhythm Detection using Adversarially Generated Time Series. In IJCAI. 4433–4439.

[47] Xuanhao Chen, Liwei Deng, Feiteng Huang, Chengwei Zhang, Zongquan Zhang, Yan Zhao, and Kai Zheng. 2021. Daemon: Unsupervised anomaly detection and interpretation for multivariate time series. In 2021 IEEE 37th International Conference on Data Engineering (ICDE). IEEE, 2225–2230.

[48] Bowen Du, Xuanxuan Sun, Junchen Ye, Ke Cheng, Jingyuan Wang, and Leilei Sun. 2021. GAN-Based Anomaly Detection for Multivariate Time Series Using Polluted Training Set. IEEE Transactions on Knowledge and Data Engineering (2021).

[49] Yifan Li, Xiaoyan Peng, Jia Zhang, Zhiyong Li, and Ming Wen. 2021. DCT-GAN: Dilated Convolutional Transformer-based GAN for Time Series Anomaly Detection. IEEE Transactions on Knowledge and Data Engineering (2021).

[50] Jiehui Xu, Haixu Wu, Jianmin Wang, and Mingsheng Long. 2021. Anomaly transformer: Time series anomaly detection with association discrepancy. arXiv preprint arXiv:2110.02642 (2021).

[51] Shreshth Tuli, Giuliano Casale, and Nicholas R Jennings. 2022. TranAD: Deep transformer networks for anomaly detection in multivariate time series data. arXiv preprint arXiv:2201.07284 (2022).

[52] Yuxin Zhang, Yiqiang Chen, Jindong Wang, and Zhiwen Pan. 2021. Unsupervised deep anomaly detection for multi-sensor time-series signals. IEEE Transactions on Knowledge and Data Engineering (2021).

[53] Cheng Feng and Pengwei Tian. 2021. Time series anomaly detection for cyber-physical systems via neural system identification and bayesian filtering. In ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 2858–2867.

[54] Md Abul Bashar and Richi Nayak. 2020. TAnoGAN: Time series anomaly detection with generative adversarial networks. In 2020 IEEE Symposium Series on Computational Intelligence (SSCI). IEEE, 1778–1785.

[55] Hang Zhao, Yujing Wang, Juanyong Duan, Congrui Huang, Defu Cao, Yunhai Tong, Bixiong Xu, Jing Bai, Jie Tong, and Qi Zhang. 2020. Multivariate time-series anomaly detection via graph attention network. In 2020 IEEE International Conference on Data Mining (ICDM). IEEE, 841–850.

[56] Siho Han and Simon S Woo. 2022. Learning Sparse Latent Graph Representations for Anomaly Detection in Multivariate Time Series. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 2977–2986.

[57] Haixu Wu, Tengge Hu, Yong Liu, Hang Zhou, Jianmin Wang, and Mingsheng Long. 2023. TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis. In ICLR.

[58] Kwei-Herng Lai, Lan Wang, Huiyuan Chen, Kaixiong Zhou, Fei Wang, Hao Yang, and Xia Hu. 2023. Context-aware domain adaptation for time series anomaly detection. In SDM. SIAM, 676–684.

[59] Youngeun Nam, Susik Yoon, Yooju Shin, Minyoung Bae, Hwanjun Song, Jae-Gil Lee, and Byung Suk Lee. 2024. Breaking the Time-Frequency Granularity Discrepancy in Time-Series Anomaly Detection. In Proceedings of the ACM on Web Conference 2024. 4204–4215.

[60] Zhihan Yue, Yujing Wang, Juanyong Duan, Tianmeng Yang, Congrui Huang, Yunhai Tong, and Bixiong Xu. 2022. Ts2vec: Towards universal representation of time series. In AAAI, Vol. 36. 8980–8987.

[61] Xiang Zhang, Ziyuan Zhao, Theodoros Tsiligkaridis, and Marinka Zitnik. 2022. Self-supervised contrastive pre-training for time series via time-frequency consistency. NeurIPS 35 (2022), 3988–4003.

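[62] Yiyuan Yang, Chaoli Zhang, Tian Zhou, Qingsong Wen, and Liang Sun. 2023. DCdetector: Dual attention contrastive representation learning for time series anomaly detection. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining.

[63] Zahra Zamanzadeh Darban, Geoffrey I Webb, Shirui Pan, Charu C Aggarwal, and Mahsa Salehi. 2024. CARLA: Self-supervised contrastive representation learning for time series anomaly detection. Pattern Recognition (2024).

[64] Zahra Zamanzadeh Darban, Geoffrey I Webb, and Mahsa Salehi. 2024. DACAD: Domain Adaptation Contrastive Learning for Anomaly Detection in Multivariate Time Series. arXiv preprint arXiv:2404.11269 (2024).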