This project shows how to analyze an HBase Snapshot using Spark.

Why do this?

The main motivation for this code is to reduce the load on the HBase Region Servers while analyzing HBase records. Taking a snapshot of the table lets Spark jobs read the snapshot's files directly from HDFS instead of scanning through the Region Servers. This avoids adding scan load to them and reduces the risk to operational systems.

At a high level, the code does the following:

- Reads an HBase Snapshot into Spark

- Parses the HBase KeyValues into a Spark DataFrame

- Applies arbitrary data processing (in this example, rowkey and timestamp filtering)

- Saves the results to HDFS in HBase's file format (HFiles containing KeyValues), using HFileOutputFormat

- The output keeps the original rowkey, timestamp, column family, qualifier, and value of each cell

- From here, you can bulk load the HFiles into HBase
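The steps above can be sketched without a cluster by modelling each HBase cell as a plain Scala case class. This is a hypothetical stand-in for the DataFrame step, not the project's code: the `HCell` type, the 4-byte big-endian integer rowkeys, and the helper names are assumptions. It shows the two points that matter before writing HFiles: filter the cells, then sort them into the order HFiles expect (rowkey, family, and qualifier ascending as unsigned bytes; newest timestamp first).

```scala
import java.nio.ByteBuffer
import java.nio.charset.StandardCharsets.UTF_8

// Hypothetical model of one HBase cell after the snapshot has been parsed.
final case class HCell(row: Array[Byte], family: String, qualifier: String,
                       timestamp: Long, value: String)

object CellPipelineSketch {
  // Encode an Int rowkey as 4 big-endian bytes (same layout as HBase Bytes.toBytes(int)).
  def rowKey(i: Int): Array[Byte] = ByteBuffer.allocate(4).putInt(i).array()

  // Compare byte arrays lexicographically as unsigned bytes, as HBase does for keys.
  def compareBytes(a: Array[Byte], b: Array[Byte]): Int = {
    val n = math.min(a.length, b.length)
    var i = 0
    while (i < n) {
      val c = (a(i) & 0xff) - (b(i) & 0xff)
      if (c != 0) return c
      i += 1
    }
    a.length - b.length
  }

  // HFile cell order: row, family, qualifier ascending; then timestamp descending.
  val hfileOrdering: Ordering[HCell] = new Ordering[HCell] {
    def compare(x: HCell, y: HCell): Int = {
      val r = compareBytes(x.row, y.row)
      if (r != 0) r
      else {
        val f = compareBytes(x.family.getBytes(UTF_8), y.family.getBytes(UTF_8))
        if (f != 0) f
        else {
          val q = compareBytes(x.qualifier.getBytes(UTF_8), y.qualifier.getBytes(UTF_8))
          if (q != 0) q else java.lang.Long.compare(y.timestamp, x.timestamp)
        }
      }
    }
  }

  // Filter (stand-in for the DataFrame processing), then sort for HFile output.
  def process(cells: Seq[HCell], keep: HCell => Boolean): Seq[HCell] =
    cells.filter(keep).sorted(hfileOrdering)
}
```

The unsigned comparison matters: a rowkey byte such as `0xC8` is negative as a signed JVM `Byte`, so a naive signed comparison would misorder rows. In the real Spark job, an equivalent ordering has to be established across the data before writing, because HFileOutputFormat expects cells to arrive in sorted order.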

Here's more detail on how to run this project:

1. Create an HBase table and populate it with data, or use an existing table. For testing, this repo includes two ways to generate a simulated HBase table: SimulateAndBulkLoadHBaseData.scala (preferred) or write_to_hbase.py (much slower than the Scala code).

2. Take an HBase Snapshot from the HBase shell:

`snapshot 'hbase_simulated_1m', 'hbase_simulated_1m_ss'`

3. (Optional) The snapshot is already stored in HDFS (under /apps/hbase/data), but if you want to copy it to an HDFS location of your choice, run ExportSnapshot:

`hbase org.apache.hadoop.hbase.snapshot.ExportSnapshot -snapshot hbase_simulated_1m_ss -copy-to /tmp/ -mappers 2`

4. Run the included Spark (Scala) code against the HBase Snapshot. The code reads the snapshot, filters records by rowkey range (80001 to 90000) and by a timestamp threshold (set in the props file), then writes the results to HDFS in HBase format (HFiles of KeyValues).

a.) Build the project:

`mvn clean package`

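b.) Run the Spark job (adjust the jar paths to match your environment). The application jar goes after the options, extra jars go in `--jars`, and `/tmp/props` is passed to the job as its argument:

`spark-submit --class com.github.zaratsian.SparkHBase.SparkReadHBaseSnapshot --jars /usr/hdp/current/phoenix-client/phoenix-client.jar /tmp/SparkHBaseExample-0.0.1-SNAPSHOT.jar /tmp/props`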

c.) NOTE: If needed, adjust the properties in the props file to match your configuration.
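The filtering in step 4 can be sketched as a plain predicate. This is a minimal illustration, not the project's implementation: the property name `timestamp.threshold` is hypothetical (check the real props file for the actual key), and treating both rowkey bounds and the threshold as inclusive is an assumption.

```scala
import java.io.StringReader
import java.util.Properties

object SnapshotFilterSketch {
  // Hypothetical key name; the real props file may use a different one.
  val ThresholdKey = "timestamp.threshold"

  // Rowkey bounds from the README, treated as inclusive here (an assumption).
  val RowKeyStart = 80001
  val RowKeyEnd = 90000

  // Parse properties text; the real job would read the file passed to spark-submit.
  def loadProps(text: String): Properties = {
    val props = new Properties()
    props.load(new StringReader(text))
    props
  }

  // True if the cell is inside the rowkey range and not older than the threshold.
  def keep(rowkey: Int, timestamp: Long, threshold: Long): Boolean =
    rowkey >= RowKeyStart && rowkey <= RowKeyEnd && timestamp >= threshold

  // Filter (rowkey, timestamp) pairs using the threshold taken from the props.
  def filterCells(cells: Seq[(Int, Long)], props: Properties): Seq[(Int, Long)] = {
    val raw = Option(props.getProperty(ThresholdKey))
      .getOrElse(sys.error(s"missing property: $ThresholdKey"))
    val threshold = raw.trim.toLong
    cells.filter { case (row, ts) => keep(row, ts, threshold) }
  }
}
```

In the Spark job, the same predicate would be applied to the DataFrame columns instead of a `Seq`, for example with `Column.between` for the rowkey bounds.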

Preliminary Performance Metrics:

| Number of Records | Spark Runtime, without HDFS write (s) | Spark Runtime, with HDFS write (s) | HBase Shell Scan, without HDFS write (s) |
|---|---|---|---|
| 1,000,000 | 27.07 | 36.05 | 6.8600 |
| 50,000,000 | 417.38 | 764.801 | 7.5970 |
| 100,000,000 | 741.829 | 1413.001 | 8.1380 |
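To compare the Spark rows across table sizes, it can help to convert runtimes into throughput (records divided by seconds). The sketch below only does that arithmetic on the table's numbers and is not part of the project. By this measure, the Spark job without the HDFS write goes from roughly 37,000 records/s at 1 million records to roughly 135,000 records/s at 100 million records, which suggests fixed job overhead weighs heavily on the smallest run (an interpretation, not a measured breakdown).

```scala
object ThroughputSketch {
  // (records, Spark seconds without HDFS write, Spark seconds with HDFS write), from the table.
  val runs: Seq[(Long, Double, Double)] = Seq(
    (1000000L, 27.07, 36.05),
    (50000000L, 417.38, 764.801),
    (100000000L, 741.829, 1413.001)
  )

  // Records processed per second for a given runtime in seconds.
  def recordsPerSecond(records: Long, seconds: Double): Double = records / seconds

  def main(args: Array[String]): Unit =
    for ((n, noWrite, withWrite) <- runs)
      println("%,d records: %,.0f rec/s (no write), %,.0f rec/s (with write)"
        .format(n, recordsPerSecond(n, noWrite), recordsPerSecond(n, withWrite)))
}
```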

NOTE: Here is the HBase shell scan that was used:

`scan 'hbase_simulated_100m', {STARTROW => "\x00\x01\x38\x81", ENDROW => "\x00\x01\x5F\x90", TIMERANGE => [1474571655001,9999999999999]}`

This scan filters a 100-million-record HBase table by the rowkey range 80001-90000 (`\x00\x01\x38\x81` to `\x00\x01\x5F\x90`) and by an arbitrary time range, given as Unix timestamps in milliseconds. Note that ENDROW and the upper TIMERANGE bound are exclusive, so row 90000 itself is not returned.
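The hex bounds in the scan are the rowkeys 80001 and 90000 written as 4-byte big-endian integers (0x00013881 and 0x00015F90), which is how HBase's `Bytes.toBytes(int)` encodes an `Int`. Assuming the simulated table uses that rowkey layout, a small hypothetical helper like the one below can generate the escaped strings for other ranges. It uses `java.nio.ByteBuffer`, whose default byte order is big-endian, so no HBase dependency is needed.

```scala
import java.nio.ByteBuffer

object RowKeyEscapeSketch {
  // 4-byte big-endian encoding, matching HBase Bytes.toBytes(int) (assumed rowkey layout).
  def toBytes(i: Int): Array[Byte] = ByteBuffer.allocate(4).putInt(i).array()

  // Render each byte as "\xNN" for use inside a double-quoted HBase shell string.
  def shellEscape(bytes: Array[Byte]): String =
    bytes.map(b => "\\x%02X".format(b & 0xff)).mkString

  // Decode a 4-byte big-endian array back to an Int, like Bytes.toInt.
  def toInt(bytes: Array[Byte]): Int = ByteBuffer.wrap(bytes).getInt
}
```

For example, `RowKeyEscapeSketch.shellEscape(RowKeyEscapeSketch.toBytes(80001))` yields `\x00\x01\x38\x81`. The HBase shell is JRuby, so these `\x` escapes are interpreted only inside double quotes, as in the scan above.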

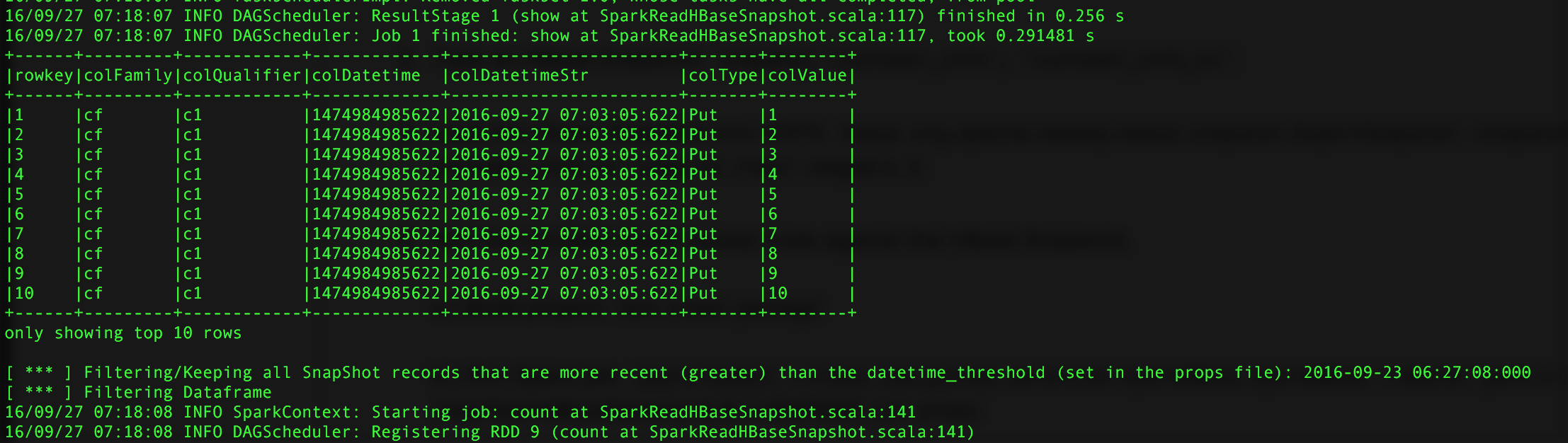

Sample of the simulated HBase data structure (generated with SimulateAndBulkLoadHBaseData.scala):

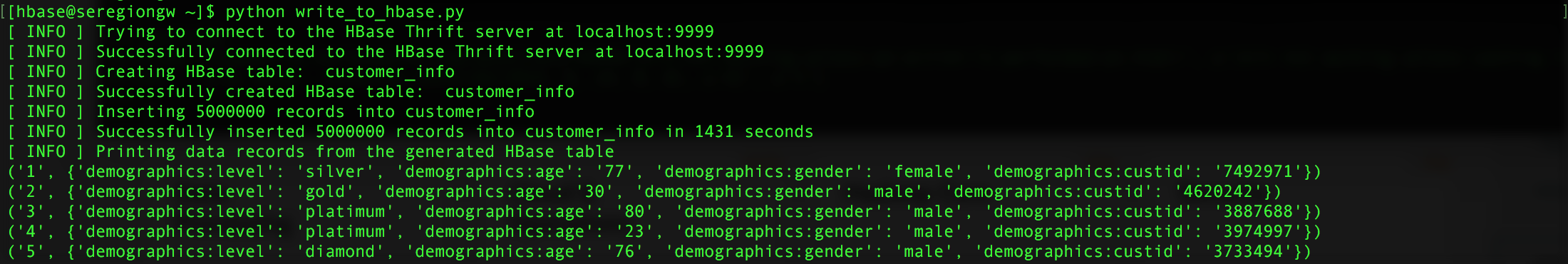

Sample of the simulated HBase data structure (generated with write_to_hbase.py):

Versions:

This code was tested on Hortonworks HDP-2.5.0.0 with:

- HBase 1.1.2

- Spark 1.6.2

- Scala 2.10.5

References:

- HBase `HBaseConfiguration` class

- HBase `TableSnapshotInputFormat` class

- HBase `KeyValue` class

- HBase `Bytes` class

- HBase `CellUtil` class