You can see the final product here: mlb-data-lake.netlify.app

The purpose of this project is to give myself an end-to-end analytics app so I can explore and learn about the different tools in the data ecosystem.

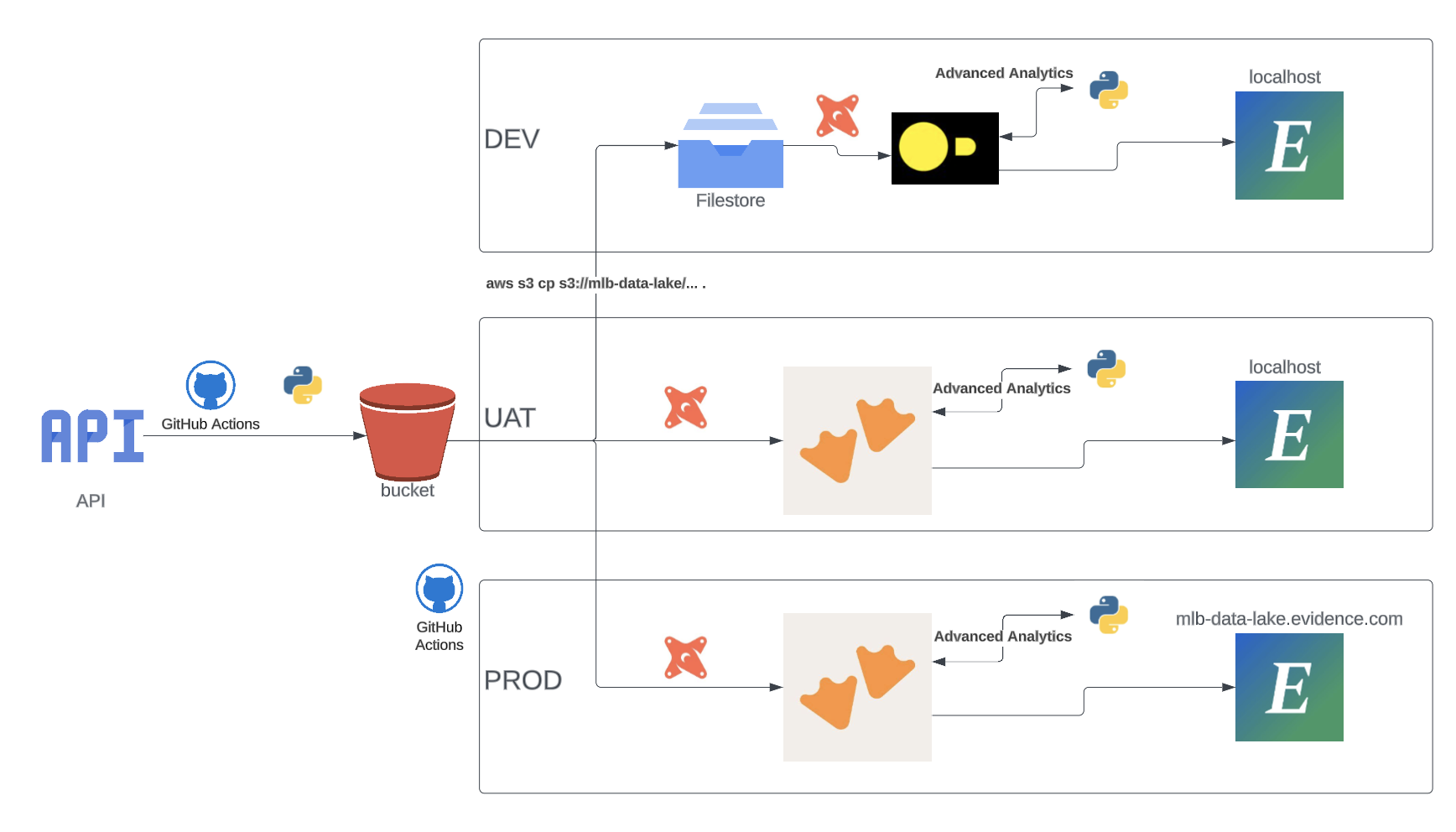

The different layers I have are:

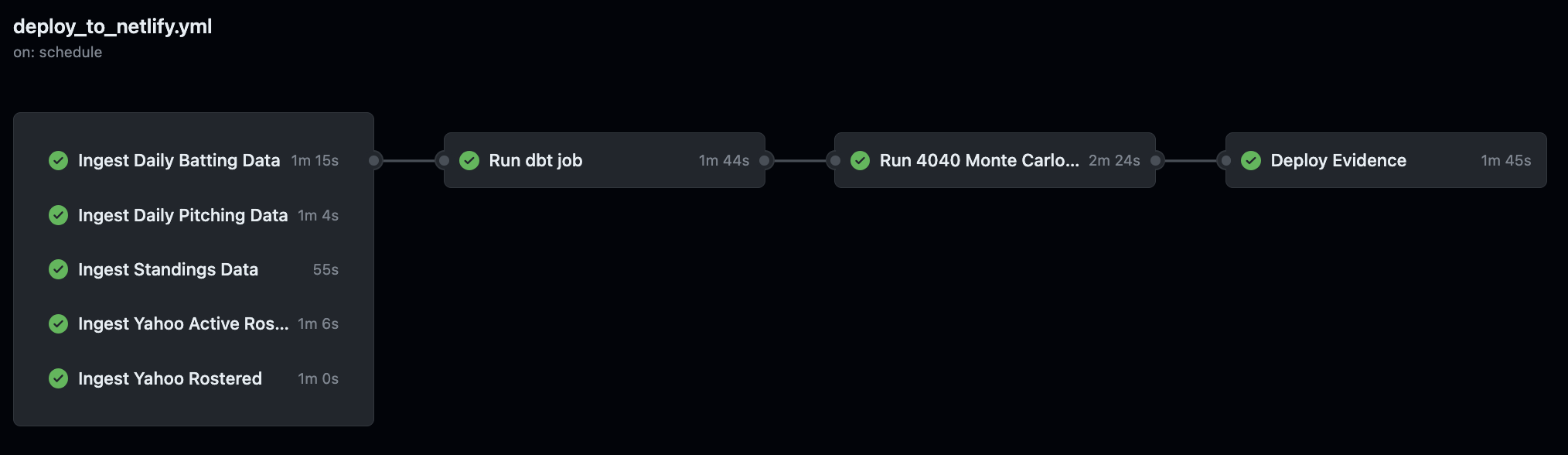

- orchestration (ex. dagster)

- ingestion (ex. read data from API and write to s3)

- extract and load (ex. meltano to read data from s3 into duckdb)

- storage (ex. postgres)

- transform (ex. dbt to curate a data mart)

- advanced analytics (ex. churn prediction)

- visualization (ex. Tableau dashboard)

- dagster

- using different python libraries to read in dataframes and write them to s3 using awswrangler

- libraries - awswrangler, boto3, pybaseball, yahoo_fantasy_api, yahoo_oauth

- could write straight to duckdb/motherduck instead of s3

- sources (python libraries, web scraping, APIs)

- languages (go, rust)

- tools (meltano, fivetran, airbyte)

- None. Using external tables

- meltano

- fivetran

- airbyte

- using duckdb locally and motherduck in UAT and production environments

- postgres

- dbt-duckdb

- sqlmesh

- python script creating a Monte Carlo Simulation

- need to do more research generally here

- evidence.dev

- rill - https://www.rilldata.com/

- Streamlit - https://streamlit.io/
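
To make the Monte Carlo item from the advanced analytics layer a bit more concrete, here is a minimal sketch of what such a script could look like. It is not the script used in this project: the function name `simulate_series_win_probability`, the fixed per-game win probability, and the first-to-four-wins series format are all assumptions picked for illustration. It only uses Python's standard library.

```python
import random


def simulate_series_win_probability(p_game_win, wins_needed=4, n_trials=10_000, seed=None):
    """Estimate the chance of winning a first-to-`wins_needed` series.

    `p_game_win` is a hypothetical, fixed probability of winning any single
    game. Real games are not independent with a constant probability, so
    treat this as a toy model.
    """
    if not 0.0 <= p_game_win <= 1.0:
        raise ValueError("p_game_win must be between 0 and 1")

    rng = random.Random(seed)  # a seeded generator keeps runs reproducible
    series_wins = 0

    for _ in range(n_trials):
        wins = losses = 0
        # Play games until one side reaches the required number of wins.
        while wins < wins_needed and losses < wins_needed:
            if rng.random() < p_game_win:
                wins += 1
            else:
                losses += 1
        if wins == wins_needed:
            series_wins += 1

    # The fraction of simulated series won is the Monte Carlo estimate.
    return series_wins / n_trials


if __name__ == "__main__":
    estimate = simulate_series_win_probability(0.55, seed=42)
    print(f"Estimated series win probability: {estimate:.3f}")
```

In a setup like this one, the per-game probability would presumably come from the transformed data rather than being hard-coded, and the estimate could be written back to storage for the visualization layer to pick up.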