- [2022-3-22]: **v0.2.2** has been released:
  - Fix some bugs.

- [2021-11-3]: **v0.2.0** has been released:
  - New features:
    - Change the format of the `link` configuration.

- [2021-10-27]: **v0.1.4** has been released:
  - New features:
    - Add contrastive representation learning methods (MoCo-V2).

- [2021-10-24]: **v0.1.2** has been released:
  - New features:
    - Add distributed (DDP) support.

- [2021-10-7]: **v0.1.1** has been released:
  - New features:
    - Change the Cars196 loading method.

- [2021-9-15]: **v0.1.0** has been released:
  - New features:
    - `output_wrapper` and the pipeline setting are decomposed for convenience.
    - The pipeline will be stored in the experiment folder as a directed graph.

- [2021-9-13]: **v0.0.1** has been released:
  - New features:
    - `config.yaml` will be created to store the configuration in the experiment folder.

- [2021-9-6]: **v0.0.0** has been released.

GeDML is an easy-to-use generalized deep metric learning library, which contains:

- State-of-the-art DML algorithms: We include 18+ loss functions and 6+ sampling strategies, and divide these algorithms into three categories (i.e., collectors, selectors, and losses).

- Bridge between DML and SSL: We attempt to bridge the gap between deep metric learning and self-supervised learning through specially designed modules, such as collectors.

- Auxiliary modules to assist in building: We also encapsulate the upper-level interface so that users can start programs quickly, and we separate code from configs so that hyper-parameters are easy to manage.
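
To make the collector / selector / loss split concrete, here is a framework-free sketch of how the three stages could compose on a single batch. The functions `default_collector`, `dense_pair_selector`, `contrastive_loss` and `pipeline` are hypothetical stand-ins written for illustration, not GeDML's classes or API; the sketch only assumes that a collector turns a batch into embeddings and labels, a selector decides which pairs reach the loss, and the loss scores those pairs.

```python
import math
from itertools import combinations


def default_collector(embeddings, labels):
    # Hypothetical pass-through collector: forwards the batch unchanged.
    return embeddings, labels


def dense_pair_selector(labels):
    # Hypothetical selector: every unordered pair (i, j), flagged True if the labels match.
    return [(i, j, labels[i] == labels[j]) for i, j in combinations(range(len(labels)), 2)]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(embeddings, pairs, margin=1.0):
    # One common contrastive form: squared distance for positive pairs,
    # squared hinge (margin - distance) for negative pairs, averaged over all pairs.
    total = 0.0
    for i, j, is_pos in pairs:
        d = euclidean(embeddings[i], embeddings[j])
        total += d ** 2 if is_pos else max(0.0, margin - d) ** 2
    return total / len(pairs)


def pipeline(embeddings, labels):
    # collector -> selector -> loss, mirroring the three-category decomposition.
    emb, lab = default_collector(embeddings, labels)
    pairs = dense_pair_selector(lab)
    return contrastive_loss(emb, pairs)


print(pipeline([[0.0, 0.0], [1.0, 0.0], [0.5, 0.0]], [0, 0, 1]))  # prints 0.5
```

Keeping the stages as separate functions means any one of them can be swapped (e.g., a different selector) without touching the other two, which is the motivation for the split described above.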

```shell
pip install gedml
```

Deep metric learning (DML), Proxy-Anchor on CUB-200:

```shell
CUDA_VISIBLE_DEVICES=0 python demo.py \
    --data_path <path_to_data> \
    --save_path <path_to_save> \
    --eval_exclude f1_score NMI AMI \
    --device 0 --batch_size 128 --test_batch_size 128 \
    --setting proxy_anchor --splits_to_eval test --embeddings_dim 128 \
    --lr_trunk 0.0001 --lr_embedder 0.0001 --lr_collector 0.01 \
    --dataset cub200 --delete_old
```

Contrastive representation learning (CRL), MoCo-v2 on ImageNet:

```shell
CUDA_VISIBLE_DEVICES=0 python demo.py \
    --data_path <path_to_data> \
    --save_path <path_to_save> \
    --eval_exclude f1_score NMI AMI \
    --device 0 --batch_size 128 --test_batch_size 128 \
    --setting mocov2 --splits_to_eval test --embeddings_dim 128 \
    --lr_trunk 0.015 --lr_embedder 0.015 \
    --dataset imagenet --delete_old
```

If you want to use our code to conduct DML or CRL experiments, please refer to the up-to-date and most detailed configurations below: 👇

- If you use the command line, you can run `sample_run.sh` to try this project.
- If you debug with VS Code, you can refer to `launch.json` to set up `.vscode`.

Use `ParserWithConvert` to get parameters:

```python
>>> from gedml.launcher.misc import ParserWithConvert
>>> csv_path = ...
>>> parser = ParserWithConvert(csv_path=csv_path, name="...")
>>> opt, convert_dict = parser.render()
```

Use `ConfigHandler` to create all objects:

```python
>>> from gedml.launcher.creators import ConfigHandler
>>> link_path = ...
>>> assert_path = ...
>>> param_path = ...
>>> config_handler = ConfigHandler(
...     convert_dict=convert_dict,
...     link_path=link_path,
...     assert_path=assert_path,
...     params_path=param_path,
...     is_confirm_first=True
... )
>>> config_handler.get_params_dict()
>>> objects_dict = config_handler.create_all()
```

Use the manager to call the trainer and tester automatically:

```python
>>> from gedml.launcher.misc import utils
>>> manager = utils.get_default(objects_dict, "managers")
>>> manager.run()
```

Or use the trainer and tester directly:

```python
>>> from gedml.launcher.misc import utils
>>> trainer = utils.get_default(objects_dict, "trainers")
>>> tester = utils.get_default(objects_dict, "testers")
>>> recorder = utils.get_default(objects_dict, "recorders")
>>> # start to train
>>> utils.func_params_mediator(
...     [objects_dict],
...     trainer.__call__
... )
>>> # start to test
>>> metrics = utils.func_params_mediator(
...     [
...         {"recorders": recorder},
...         objects_dict,
...     ],
...     tester.__call__
... )
```

For more information, please refer to: 👉 Docs 📖

Some specific guidance:

We will continually update the optimal parameters for different configs on TsinghuaCloud.

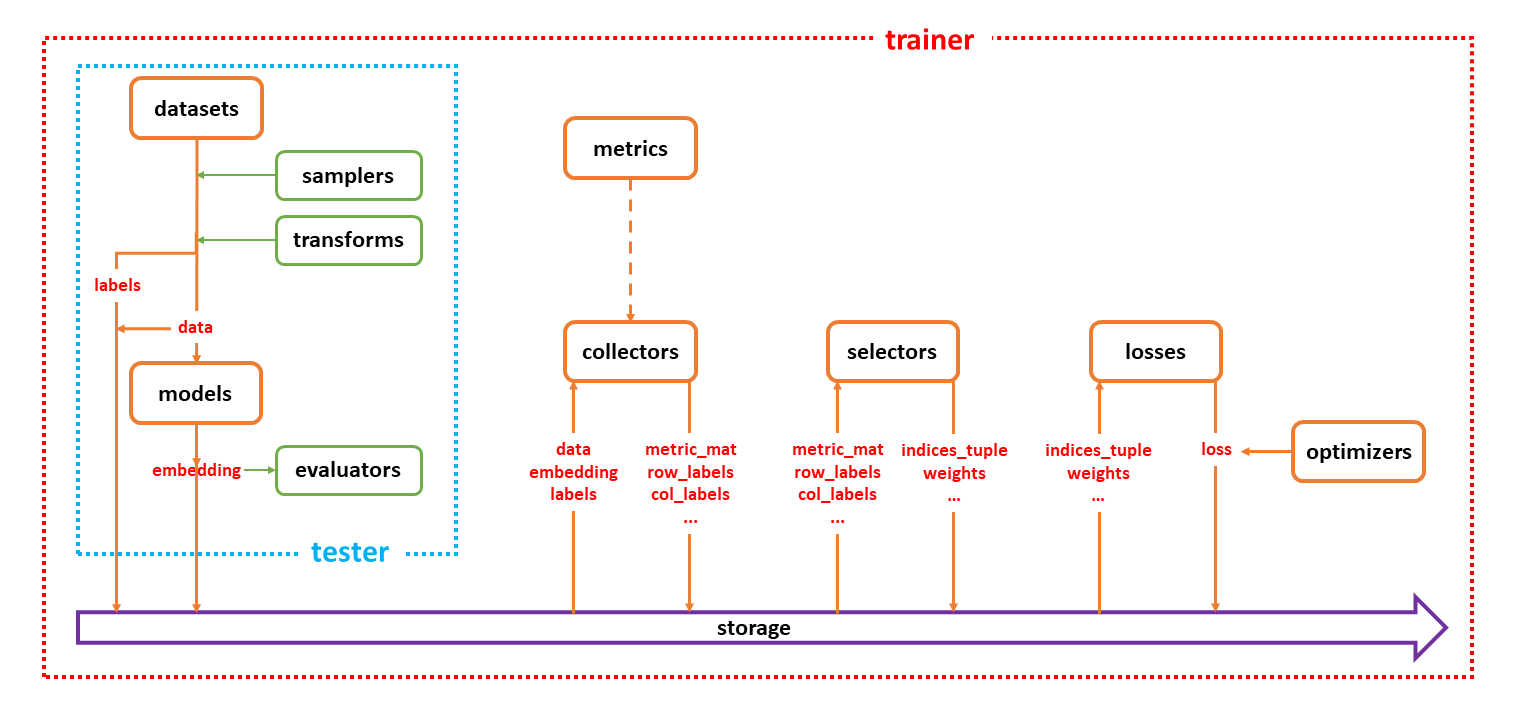

This project is modular in design. The pipeline diagram is as follows:

**Collectors**

| method | description |
|---|---|
| BaseCollector | Base class |
| DefaultCollector | Do nothing |
| ProxyCollector | Maintain a set of proxies |
| MoCoCollector | paper: Momentum Contrast for Unsupervised Visual Representation Learning |
| SimSiamCollector | paper: Exploring Simple Siamese Representation Learning |
| HDMLCollector | paper: Hardness-Aware Deep Metric Learning |
| DAMLCollector | paper: Deep Adversarial Metric Learning |
| DVMLCollector | paper: Deep Variational Metric Learning |
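
A MoCo-style collector rests on two mechanisms: a key encoder updated as a momentum average of the query encoder, and a FIFO queue of past keys that serve as negatives. The plain-Python sketch below shows only those two ideas plus the InfoNCE logits they feed into. The names `momentum_update`, `KeyQueue`, `info_nce_logits` and `cross_entropy` are hypothetical, "parameters" are flat lists of floats rather than network weights, and the default `m` and `temperature` are illustrative values; this is not how `MoCoCollector` is implemented.

```python
import math
from collections import deque


def momentum_update(key_params, query_params, m=0.999):
    # MoCo key-encoder update: theta_k <- m * theta_k + (1 - m) * theta_q.
    # Parameters are flat lists of floats here, not real network weights.
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


class KeyQueue:
    # Hypothetical fixed-size FIFO of past keys; deque(maxlen=...) drops the oldest first.
    def __init__(self, size):
        self.keys = deque(maxlen=size)

    def enqueue(self, batch_keys):
        self.keys.extend(batch_keys)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce_logits(query, pos_key, queue, temperature=0.2):
    # Logit 0 is the positive key; the remaining logits come from queued negatives.
    return [dot(query, pos_key) / temperature] + [dot(query, k) / temperature for k in queue.keys]


def cross_entropy(logits, target=0):
    # Softmax cross-entropy via log-sum-exp; the positive sits at index `target`.
    mx = max(logits)
    log_z = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_z - logits[target]


queue = KeyQueue(size=2)
queue.enqueue([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # only the last two keys are kept
loss = cross_entropy(info_nce_logits([1.0, 0.0], [1.0, 0.0], queue, temperature=1.0))
```

Scoring the positive at index 0 is what lets an ordinary cross-entropy serve as the contrastive objective, which is also why the losses table lists a `CrossEntropyLoss` for unsupervised methods.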

**Losses (classifier-based)**

| method | description |
|---|---|
| CrossEntropyLoss | Cross entropy loss for unsupervised methods |
| LargeMarginSoftmaxLoss | paper: Large-Margin Softmax Loss for Convolutional Neural Networks |
| ArcFaceLoss | paper: ArcFace: Additive Angular Margin Loss for Deep Face Recognition |
| CosFaceLoss | paper: CosFace: Large Margin Cosine Loss for Deep Face Recognition |
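
Classifier-based losses treat each class as a weight vector and train with a softmax over class scores. As one representative, the sketch below follows the CosFace formulation: cosine similarities between the normalized embedding and normalized class weights, a margin `m` subtracted from the target-class cosine only, and a scale `s` before softmax cross-entropy. `cosface_loss` is a hypothetical, framework-free function and the defaults `s=30.0`, `m=0.35` are illustrative assumptions; ArcFace differs by adding its margin to the angle rather than the cosine.

```python
import math


def l2_normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cosface_loss(embedding, class_weights, label, s=30.0, m=0.35):
    # Cosine similarity between the normalized embedding and each normalized class weight.
    e = l2_normalize(embedding)
    cosines = [sum(a * b for a, b in zip(e, l2_normalize(w))) for w in class_weights]
    # Subtract the additive cosine margin from the target class only, then scale by s.
    logits = [s * (c - m) if j == label else s * c for j, c in enumerate(cosines)]
    # Numerically stable softmax cross-entropy via log-sum-exp.
    mx = max(logits)
    log_z = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_z - logits[label]
```

With `m=0` this reduces to a plain scaled-cosine softmax; any positive margin raises the loss for the same inputs, which is what forces a gap between classes.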

**Losses (pair-based)**

| method | description |
|---|---|
| ContrastiveLoss | paper: Learning a Similarity Metric Discriminatively, with Application to Face Verification |
| MarginLoss | paper: Sampling Matters in Deep Embedding Learning |
| TripletLoss | paper: Learning local feature descriptors with triplets and shallow convolutional neural networks |
| AngularLoss | paper: Deep Metric Learning with Angular Loss |
| CircleLoss | paper: Circle Loss: A Unified Perspective of Pair Similarity Optimization |
| FastAPLoss | paper: Deep Metric Learning to Rank |
| LiftedStructureLoss | paper: Deep Metric Learning via Lifted Structured Feature Embedding |
| MultiSimilarityLoss | paper: Multi-Similarity Loss With General Pair Weighting for Deep Metric Learning |
| NPairLoss | paper: Improved Deep Metric Learning with Multi-class N-pair Loss Objective |
| SignalToNoiseRatioLoss | paper: Signal-To-Noise Ratio: A Robust Distance Metric for Deep Metric Learning |
| PosPairLoss | paper: Exploring Simple Siamese Representation Learning |
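
Pair-based losses score distances between the pairs or triplets handed over by a selector. As one example, here is the common hinge form of a triplet loss: each anchor's negative should be at least `margin` farther away than its positive. The function `triplet_loss`, the Euclidean distance and `margin=0.2` are assumptions for illustration; GeDML's `TripletLoss` may differ in its distance, margin or reduction.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(embeddings, triplets, margin=0.2):
    # Hinge on the distance gap: zero once d(a, n) exceeds d(a, p) by at least `margin`.
    losses = [
        max(0.0, euclidean(embeddings[a], embeddings[p]) - euclidean(embeddings[a], embeddings[n]) + margin)
        for a, p, n in triplets
    ]
    # Mean over all given triplets, including the ones that already satisfy the margin.
    return sum(losses) / len(losses)
```

Averaging over every triplet, including already-satisfied ones, dilutes the gradient as training progresses; this is one reason sampling strategies matter for pair-based losses.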

**Losses (proxy-based)**

| method | description |
|---|---|
| ProxyLoss | paper: No Fuss Distance Metric Learning Using Proxies |
| ProxyAnchorLoss | paper: Proxy Anchor Loss for Deep Metric Learning |
| SoftTripleLoss | paper: SoftTriple Loss: Deep Metric Learning Without Triplet Sampling |
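
Proxy-based losses compare embeddings with one learnable proxy per class instead of with other samples. The sketch below writes out the Proxy-Anchor objective in plain Python: each proxy acts as an anchor, pulling in same-class embeddings (averaged over proxies that have positives in the batch) and pushing away the rest (averaged over all proxies), both through a soft log-sum-exp. The function `proxy_anchor_loss`, the cosine similarity helper and the defaults `alpha=32.0`, `delta=0.1` are assumptions for illustration, not GeDML's `ProxyAnchorLoss` implementation.

```python
import math


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def proxy_anchor_loss(embeddings, labels, proxies, alpha=32.0, delta=0.1):
    # proxies[c] is the proxy for class c; every proxy serves as an anchor.
    pos_term, neg_term, num_pos_proxies = 0.0, 0.0, 0
    for c, proxy in enumerate(proxies):
        sims = [cosine(x, proxy) for x in embeddings]
        pos = [s for s, y in zip(sims, labels) if y == c]
        neg = [s for s, y in zip(sims, labels) if y != c]
        if pos:
            # Only proxies with at least one positive in the batch enter the pull term.
            num_pos_proxies += 1
            pos_term += math.log1p(sum(math.exp(-alpha * (s - delta)) for s in pos))
        # Push term: every proxy repels the embeddings of other classes.
        neg_term += math.log1p(sum(math.exp(alpha * (s + delta)) for s in neg))
    pull = pos_term / num_pos_proxies if num_pos_proxies else 0.0
    return pull + neg_term / len(proxies)
```

Because all embeddings in the batch interact with each proxy through one log-sum-exp, no explicit pair or triplet sampling is needed, which is the practical appeal of this family.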

**Selectors**

| method | description |
|---|---|
| BaseSelector | Base class |
| DefaultSelector | Do nothing |
| DenseTripletSelector | Select all triplets |
| DensePairSelector | Select all pairs |
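
Dense selectors simply enumerate every valid tuple in a batch from the labels alone. The sketch below shows what "all pairs" and "all triplets" mean in index form; `dense_pairs` and `dense_triplets` are hypothetical helpers, not GeDML's `DensePairSelector` or `DenseTripletSelector` classes, and they return index tuples rather than tensors or masks.

```python
from itertools import combinations, permutations


def dense_pairs(labels):
    # Every unordered pair of distinct samples, split into positives (same label) and negatives.
    pos, neg = [], []
    for i, j in combinations(range(len(labels)), 2):
        (pos if labels[i] == labels[j] else neg).append((i, j))
    return pos, neg


def dense_triplets(labels):
    # Every (anchor, positive, negative) with a != p, labels[a] == labels[p], labels[n] != labels[a].
    n = len(labels)
    return [
        (a, p, k)
        for a, p in permutations(range(n), 2)
        if labels[a] == labels[p]
        for k in range(n)
        if labels[k] != labels[a]
    ]
```

The number of dense triplets grows roughly cubically with batch size, which is why non-dense selectors (e.g., hard-example mining) exist alongside these.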

**Code reference**

- KevinMusgrave / pytorch-metric-learning

- KevinMusgrave / powerful-benchmarker

- Confusezius / Deep-Metric-Learning-Baselines

- facebookresearch / moco

- PatrickHua / SimSiam

- ujjwaltiwari / Deep_Variational_Metric_Learning

- idstcv / SoftTriple

- wzzheng / HDML

- google-research / simclr

- kunhe / FastAP-metric-learning

- wy1iu / LargeMargin_Softmax_Loss

- tjddus9597 / Proxy-Anchor-CVPR2020

- facebookresearch / deit

**TODO**

- assert parameters.
- write GitHub Actions to automate unit tests, package publishing, and docs building.
- add a cross-validation splits protocol.
- distributed tester for matrix-form input.
- add a metrics module.
- improve running efficiency.
- re-define the pipeline setting!!!
- simplify the distributed setting!!