Disclaimer: This code was developed on Ubuntu 20.04 with Python 3.10, CUDA 12.1 and PyTorch 2.1.

The project requires a single conda environment (hmp). The requirements are listed in requirements.txt. To create the environment and install the necessary packages, run:

source scripts/create_venv.sh

This script installs all packages needed to run the project, including those required by PyMAF-X. If you run into installation problems, refer to the installation pages of PyTorch3D and mmpose.

Note: There is no need to clone the METRO or PyMAF-X repositories. Their code is already included under ./external/MeshTransformer and ./external/PyMAF-X.
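Once the hmp environment is active, a short check can confirm that it matches the versions listed in the disclaimer. This is a hypothetical helper, not a script shipped with the repository. It assumes only that PyTorch exposes torch.__version__ and torch.version.cuda, and it compares major.minor versions only.

```python
import importlib.util
import platform

# Versions the README says the code was developed with (Python 3.10, PyTorch 2.1, CUDA 12.1).
EXPECTED = {"python": "3.10", "torch": "2.1", "cuda": "12.1"}


def environment_report() -> dict:
    """Collect installed Python/PyTorch/CUDA versions; torch fields stay None if torch is absent."""
    report = {"python": platform.python_version(), "torch": None, "cuda": None}
    if importlib.util.find_spec("torch") is not None:
        import torch  # imported lazily so the check also runs outside the hmp environment

        report["torch"] = torch.__version__
        report["cuda"] = torch.version.cuda  # None for CPU-only builds
    return report


def mismatches(report: dict) -> list:
    """Return one message per component whose major.minor version differs from EXPECTED."""
    out = []
    for key, want in EXPECTED.items():
        have = report.get(key)
        # "2.1.0+cu121" -> "2.1", "3.10.12" -> "3.10"; avoids treating 2.10 as 2.1
        major_minor = ".".join(have.split(".")[:2]) if have else None
        if major_minor != want:
            out.append(f"{key}: expected {want}.x, found {have}")
    return out


if __name__ == "__main__":
    for line in mismatches(environment_report()) or ["environment matches the tested versions"]:
        print(line)
```

A mismatch is not necessarily fatal; it only means you are running a combination the authors did not test.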

With the environment set up and the code in place, the next step is to obtain the body models, the HMP model, and the initialization model (PyMAF-X). First register on the HMP webpage. Then, to download all required models, run:

source scripts/download_all.sh

The script asks for the email and password of your account on the website and then downloads all required models. If the script fails, you can download the models manually from our website.
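After the download finishes, you can check that the files named in this README landed where the code expects them. The sketch below is a hypothetical helper that covers only those paths (MANO, SMPL-X, and the pretrained motion prior). It does not know where the PyMAF-X checkpoints go, so an empty result does not guarantee a complete download.

```python
from pathlib import Path

# Paths taken from this README; anything else the download script fetches is not checked.
EXPECTED_MODEL_PATHS = [
    "data/body_models/mano/MANO_LEFT.pkl",
    "data/body_models/mano/MANO_RIGHT.pkl",
    "data/body_models/smplx/SMPLX_NEUTRAL.npz",
    "outputs/generative/results/model",  # pretrained motion prior (file or folder)
]


def missing_models(repo_root: str = ".") -> list:
    """Return the expected model paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in EXPECTED_MODEL_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_models()
    if missing:
        print("Missing (try downloading these manually from the HMP website):")
        for p in missing:
            print("  " + p)
    else:
        print("All expected model files are present.")
```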

Note: If you would like to use METRO instead of PyMAF-X, please follow the file-download instructions on the METRO website. The folder layout is exactly the same as suggested there.

Note: A pretrained motion prior model is already downloaded and placed under ./outputs/generative/results/model. Follow this part only if you want to train the motion prior yourself.

For motion prior training we used the GRAB, TCDHands, and SAMP subsets of AMASS, as well as the ARCTIC dataset, which you also need to download. Place these raw datasets under ./data/amass_raw.

To process the raw AMASS and ARCTIC datasets, run the following commands, respectively:

python src/scripts/process_amass.py

python src/scripts/process_arctic.py

To split the processed dataset into training, test, and validation sets, run:

python src/datasets/amass.py amass.yaml

To train the model on single-motion AMASS sequences, use:

python src/train_basic.py basic.yaml

The code extracts sequences of 32, 64, 128, 256, and 512 frames and reconstructs them at 30, 60, 120, and 240 fps.

To train the generative motion prior, run:

python src/train.py generative.yaml
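If you do train the motion prior, the steps above can be chained so that a failed step stops the run. This is a sketch, not part of the repository: it runs the commands exactly as listed, from the repository root, with the current Python interpreter. The README does not say whether train_basic.py must run before train.py, so remove whichever training step you do not need.

```python
import subprocess
import sys

# Motion prior training steps, in the order this README lists them.
TRAINING_STEPS = [
    ["src/scripts/process_amass.py"],
    ["src/scripts/process_arctic.py"],
    ["src/datasets/amass.py", "amass.yaml"],
    ["src/train_basic.py", "basic.yaml"],
    ["src/train.py", "generative.yaml"],
]


def run_training_pipeline(steps=TRAINING_STEPS, dry_run: bool = True) -> list:
    """Print each command; unless dry_run, also execute it and stop at the first failure."""
    commands = [[sys.executable, *step] for step in steps]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError if a step fails
    return commands


if __name__ == "__main__":
    run_training_pipeline(dry_run="--run" not in sys.argv)
```

The default is a dry run that only prints the commands; pass --run (or call run_training_pipeline(dry_run=False)) to execute them.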

To evaluate the trained model on motion_reconstruction, motion_inbetweening, or latent_interpolation, run the following command with TASKNAME set to one of these tasks:

python src/application.py --config application.yaml --task TASKNAME --save_path SAVEPATH

For interactive visualization of a task's results, run:

python scenepic_viz.py SAVEPATH
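To evaluate all three tasks in one go, the two commands above can be built once per task. The per-task layout results/TASKNAME used for SAVEPATH is an assumption made for this sketch; any writable directory should work.

```python
import subprocess
import sys
from pathlib import Path

# Task names accepted by --task, as listed in this README.
TASKS = ["motion_reconstruction", "motion_inbetweening", "latent_interpolation"]


def evaluation_commands(save_root: str = "results") -> list:
    """One application.py call per task; SAVEPATH is save_root/TASKNAME (assumed layout)."""
    return [
        [sys.executable, "src/application.py",
         "--config", "application.yaml",
         "--task", task,
         "--save_path", str(Path(save_root) / task)]
        for task in TASKS
    ]


def run_all(save_root: str = "results", dry_run: bool = True) -> None:
    """Evaluate each task, then run the visualization script on its SAVEPATH."""
    for eval_cmd in evaluation_commands(save_root):
        viz_cmd = [sys.executable, "scenepic_viz.py", eval_cmd[-1]]
        for cmd in (eval_cmd, viz_cmd):
            print(" ".join(cmd))
            if not dry_run:
                subprocess.run(cmd, check=True)
```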

If you want to run or evaluate HMP on the DexYCB and HO3D-v3 datasets, download them and put them in data/rgb_data/DexYCB and data/rgb_data/HO3D_v3, respectively.

For DexYCB, you first need to run:

./scripts/process_dexycb.sh

We intend to publish quantitative results on ARCTIC.

You can also try HMP on new videos. Download a 30-fps video and place it at data/rgb_data/in_the_wild/FOLDERNAME/rgb_raw.mp4. An example fitting command:

python src/fitting_app.py --config CONFIGNAME.yaml --vid-path data/rgb_data/in_the_wild/FOLDERNAME/rgb_raw.mp4
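Since fitting expects a 30-fps video, it can help to check the frame rate before putting a clip in place. The sketch below is a hypothetical helper, not part of HMP. It reads the frame rate with OpenCV (cv2.VideoCapture and cv2.CAP_PROP_FPS, assuming opencv-python is installed) and, if needed, builds an ffmpeg command whose fps filter converts the clip to 30 fps and writes it to data/rgb_data/in_the_wild/FOLDERNAME/rgb_raw.mp4. The 0.1-fps tolerance, which lets 29.97-fps footage pass, is a judgment call.

```python
from pathlib import Path

TARGET_FPS = 30.0  # the README asks for 30-fps input videos


def video_fps(path: str) -> float:
    """Frame rate reported by the container, read with OpenCV (opencv-python assumed installed)."""
    import cv2  # imported here so the pure helpers below work without OpenCV

    cap = cv2.VideoCapture(path)
    try:
        return float(cap.get(cv2.CAP_PROP_FPS))  # typically 0.0 if the file cannot be opened
    finally:
        cap.release()


def needs_resampling(fps: float, target: float = TARGET_FPS, tol: float = 0.1) -> bool:
    """True if fps differs from target by more than tol."""
    return abs(fps - target) > tol


def resample_command(src: str, folder_name: str, target: float = TARGET_FPS) -> list:
    """ffmpeg call whose fps filter drops/duplicates frames to reach `target` fps and writes
    data/rgb_data/in_the_wild/<folder_name>/rgb_raw.mp4 (the folder must already exist)."""
    dst = Path("data/rgb_data/in_the_wild") / folder_name / "rgb_raw.mp4"
    return ["ffmpeg", "-y", "-i", src, "-filter:v", f"fps={target:g}", str(dst)]
```

If needs_resampling(video_fps(src)) is False, copying the file to that path is enough; otherwise create the target folder and run the returned command with subprocess.run.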

The output is written to ./optim/CONFIGNAME/DATASETNAME/FOLDERNAME/recon_000_30fps_hmp.mp4. Sample configs for the in_the_lab (HO3D, DexYCB, ARCTIC, etc.) and in_the_wild settings, in_the_lab_sample_config.yaml and in_the_wild_sample_config.yaml, are provided under the configs folder. Feel free to tune their parameters.
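When scripting several clips, the fitting command and the location of its result can be derived from the same names. This sketch assumes that CONFIGNAME in the output path is the config file name without its .yaml suffix and that DATASETNAME is in_the_wild for videos placed under data/rgb_data/in_the_wild; neither mapping is stated explicitly in this README.

```python
import sys
from pathlib import Path

RGB_ROOT = Path("data/rgb_data/in_the_wild")


def fitting_command(config: str, folder_name: str) -> list:
    """Fitting call for a video at data/rgb_data/in_the_wild/<folder_name>/rgb_raw.mp4."""
    vid_path = RGB_ROOT / folder_name / "rgb_raw.mp4"
    return [sys.executable, "src/fitting_app.py", "--config", config, "--vid-path", str(vid_path)]


def expected_output(config: str, folder_name: str, dataset: str = "in_the_wild") -> Path:
    """Expected result path; assumes CONFIGNAME = config stem and DATASETNAME = dataset."""
    return Path("optim") / Path(config).stem / dataset / folder_name / "recon_000_30fps_hmp.mp4"
```

After subprocess.run(fitting_command(...), check=True), calling expected_output(...).exists() tells you whether the video appeared where expected.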

To better illustrate the expected layout of the data folder, see the following example:

./data
├── rgb_data
│   ├── DexYCB
│   ├── HO3D_v3
│   └── in_the_wild
├── body_models
├── mmpose_models
├── amass (*)
├── amass_raw (*)
└── amass_processed_hist (*)

(*) Optional folders: amass, amass_raw, and amass_processed_hist are only needed for training the motion prior.

For better results, make sure the hands are neither interacting nor very close to each other. Such cases degrade bounding-box detection and regression performance.
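One way to spot such frames in advance is to flag those where the left- and right-hand bounding boxes overlap. This is a hypothetical pre-check, not something HMP does: the boxes can come from any hand detector, overlap measured as intersection-over-union (IoU) is only a proxy for "interacting or very close", and the 0.1 threshold is arbitrary.

```python
from typing import List, Sequence

Box = Sequence[float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_close_hands(left_boxes: List[Box], right_boxes: List[Box], thresh: float = 0.1) -> List[int]:
    """Indices of frames whose left/right hand boxes overlap by more than `thresh` IoU."""
    return [i for i, (l, r) in enumerate(zip(left_boxes, right_boxes)) if iou(l, r) > thresh]
```

Frames flagged this way are candidates for trimming from the clip before fitting.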

After collecting all the necessary files above, the directory structure should look as follows:

├── data
│   ├── amass (*)
│   │   ├── generative
│   │   └── single
│   ├── amass_raw (*)
│   │   ├── GRAB
│   │   ├── SAMP
│   │   └── TCDHands
│   ├── body_models
│   │   ├── mano
│   │   │   ├── MANO_LEFT.pkl
│   │   │   └── MANO_RIGHT.pkl
│   │   └── smplx
│   │       └── SMPLX_NEUTRAL.npz
│   ├── rgb_data
│   │   ├── DexYCB
│   │   ├── HO3D_v3
│   │   └── in_the_wild
│   └── mmpose_models
│
├── external
│   ├── mmpose
│   ├── PyMAF-X
│   ├── v2a_code
│   └── MeshTransformer
│
└── optim
    └── CONFIGNAME
        └── DATASETNAME
            └── FOLDERNAME

(*) Optional folders for motion prior training.
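A quick way to compare your checkout with this tree is to test for the folders it lists. This hypothetical helper takes its directory names from the tree (spelling the mmpose folder mmpose_models, as in the earlier ./data tree), treats the (*) folders as needed only for training, and checks that folders exist, not what they contain.

```python
from pathlib import Path

# Folders from the tree above that every run needs.
REQUIRED_DIRS = [
    "data/rgb_data",
    "data/body_models",
    "data/mmpose_models",
    "external/mmpose",
    "external/PyMAF-X",
    "external/v2a_code",
    "external/MeshTransformer",
]

# (*) folders, only needed for motion prior training.
TRAINING_DIRS = [
    "data/amass/generative",
    "data/amass/single",
    "data/amass_raw/GRAB",
    "data/amass_raw/SAMP",
    "data/amass_raw/TCDHands",
]


def check_layout(root: str = ".", training: bool = False) -> tuple:
    """Return (missing required dirs, missing training dirs); the second list is empty unless training."""
    base = Path(root)
    missing = [p for p in REQUIRED_DIRS if not (base / p).is_dir()]
    missing_training = [p for p in TRAINING_DIRS if not (base / p).is_dir()] if training else []
    return missing, missing_training


if __name__ == "__main__":
    required, optional = check_layout(training=True)
    print("missing required:", required or "none")
    print("missing for training:", optional or "none")
```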

- The HMP codebase is adapted from NEMF.

- Part of the code in src/utils.py and src/scripts/process_amass.py is taken from HuMoR.

- We use METRO and PyMAF-X as initialization methods and baselines.

- We use MediaPipe and MMPose as 2D keypoint sources.

- We use TempCLR for quantitative evaluation.

- We use the MANO hand model.

- Shout-out to manopth and transformers!

Huge thanks to these great open-source projects! This project would be impossible without them.

If you find this code or paper useful, please consider citing:

@InProceedings{Duran_2024_WACV,
  author = {Duran, Enes and Kocabas, Muhammed and Choutas, Vasileios and Fan, Zicong and Black, Michael J.},
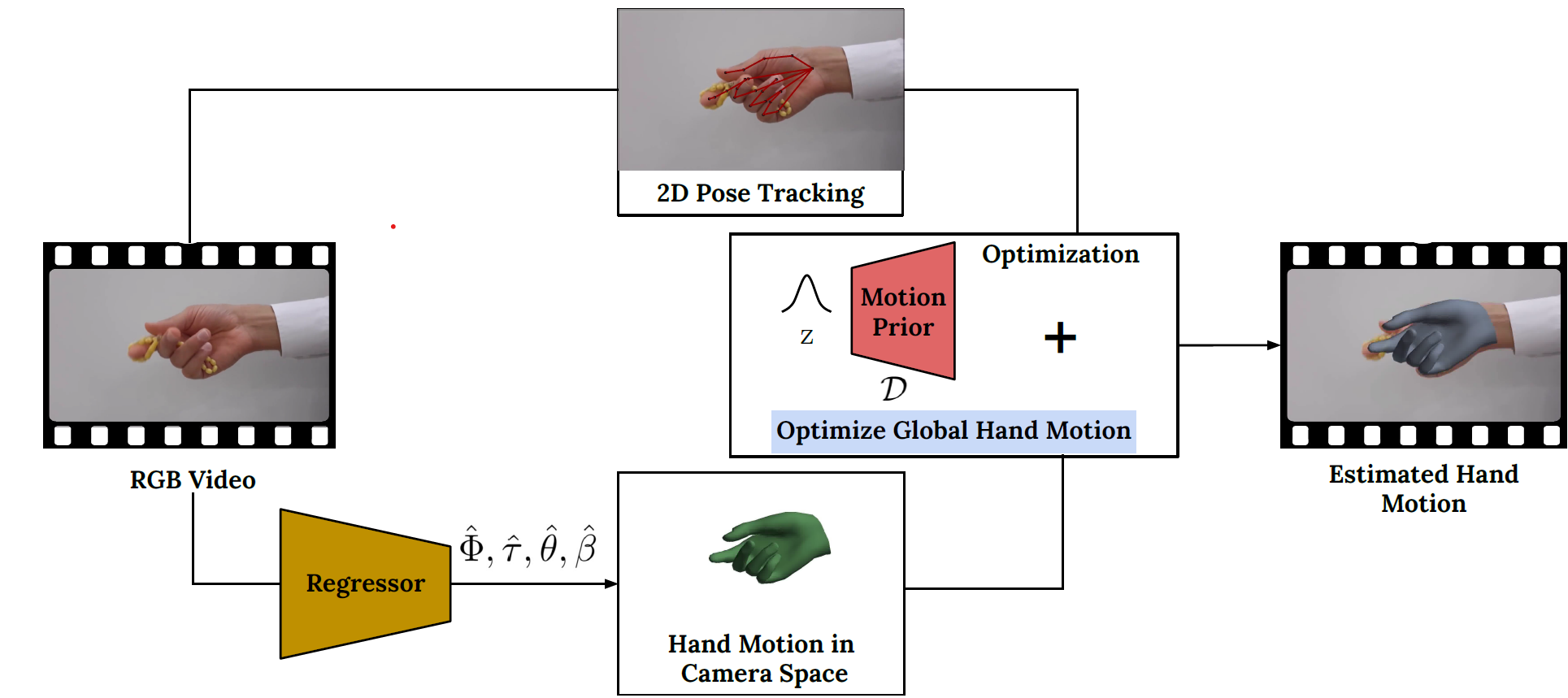

  title = {HMP: Hand Motion Priors for Pose and Shape Estimation From Video},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  month = {January},
  year = {2024},
  pages = {6353-6363}
}

Should you run into any problems or have questions, please create an issue or contact enes.duran@tuebingen.mpg.de.