A conversational AI chatbot for enhancing user queries and assisting in generating improved questions.


Welcome to the OptiPrompt project, your gateway to a world of intelligent conversation and assistance! 🤖✨

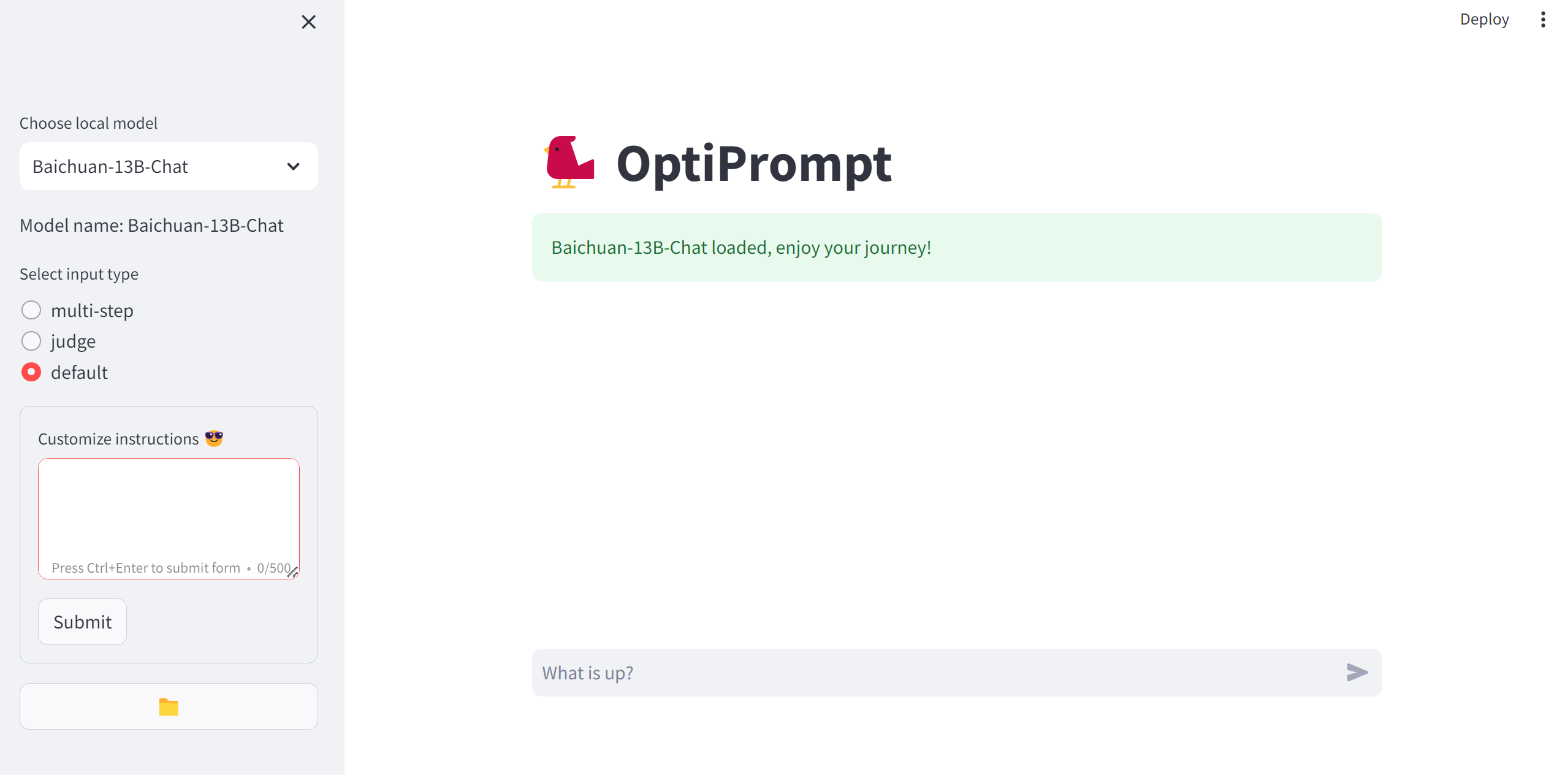

The OptiPrompt is an innovative chatbot powered by state-of-the-art natural language processing models. Whether you're seeking answers, code optimization suggestions, or even help with refining your questions, our chatbot is here to assist you effectively. Designed for a variety of use cases, from code optimization to language model guidance, it's your go-to AI assistant.

Our project leverages the latest advancements in language modeling technology and provides you with a seamless conversational experience. Engage in productive discussions, refine your queries, and harness the power of AI to enhance your workflow.

Get ready to embark on an exciting journey with the OptiPrompt. Explore its capabilities, improve your communication, and boost your productivity. It's more than just a chatbot; it's your AI companion in the world of natural language understanding and generation.

Start your conversation with the OptiPrompt today and elevate your interactions to the next level! 🚀🤖

- Streamlit

- Langchain

- Baichuan-13b

Before getting started with the project, ensure that you have the following prerequisites installed:

- Python 3.9: You'll need Python 3.9 to run this project. We recommend using Anaconda to manage your Python environments. You can create a Python 3.9 environment with the following command:

conda create -n infinite-fdu python=3.9

Activate the environment using:

conda activate infinite-fdu

- Clone the repository to your local machine using the following command:

git clone https://github.com/Infinite-FDU/OptiPrompt

- Navigate to the project directory

- Install the project dependencies from the requirements.txt file:

pip install --pre --upgrade bigdl-llm[all]

pip install -r requirements.txt

Before running the project, it's essential to check whether your computer satisfies the requirements for CUDA support. CUDA is a parallel computing platform and application programming interface (API) model created by NVIDIA.

Some of the deep learning libraries used in this project, such as PyTorch, can leverage CUDA for GPU acceleration. If you have an NVIDIA GPU and wish to enable GPU support, you should ensure that your GPU is compatible with CUDA and that you have the appropriate NVIDIA drivers installed.

If your GPU is not CUDA-compatible or you encounter issues with CUDA, remove the torch-based packages from requirements.txt and install a PyTorch build that suits your system yourself.
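A quick way to see whether GPU acceleration is usable is to ask PyTorch directly. The sketch below assumes PyTorch may or may not be installed yet and falls back to the CPU when it is missing or no CUDA device is visible. The helper name `pick_device` is illustrative and not part of this project.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch is installed and sees a CUDA GPU, else "cpu"."""
    try:
        import torch  # imported lazily so the check also works without PyTorch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Selected device: {pick_device()}")
```

If this reports `cpu` on a machine with an NVIDIA GPU, the driver or the installed PyTorch build is the likely place to look.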

Welcome to the OptiPrompt, your intelligent assistant for prompt engineering. As a prompt engineer, your role is to craft and refine prompts to elicit precise and meaningful responses from language models. This chatbot offers a range of optimization options to assist you in your task.

- User Input (default mode): Enter your original prompt here.

- Optimized Output: The chatbot will refine your prompt to make it more explicit and effective for language models.

- User Input (code mode): Share your code snippet or programming-related query.

- Optimized Output: The chatbot will review and enhance your code for better readability and functionality.

- User Input (judge mode): Submit a prompt word or phrase for evaluation.

- Evaluation Output: The chatbot will assess the quality of your prompt and provide a score along with feedback.

- User Input (multi-step mode): Pose a complex question that can be divided into smaller steps.

- Optimized Output: The chatbot will break down your question into manageable parts, perfect for step-by-step exploration.
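One way to picture the four optimization options is as a lookup from a mode name to an instruction template that wraps the user's input. The sketch below is a hypothetical illustration: the template wording, `MODE_TEMPLATES`, and `build_prompt` are assumptions made for this example, not the project's actual prompts or code.

```python
# Hypothetical instruction templates, one per input type.
MODE_TEMPLATES = {
    "default": "Rewrite the following prompt so it is more explicit and effective:\n{user_input}",
    "code": "Review the following code and improve its readability and functionality:\n{user_input}",
    "judge": "Score the quality of the following prompt and give feedback:\n{user_input}",
    "multi-step": "Break the following question into smaller steps:\n{user_input}",
}


def build_prompt(mode: str, user_input: str) -> str:
    """Fill the template for `mode` with the user's text."""
    if mode not in MODE_TEMPLATES:
        raise ValueError(f"Unknown mode {mode!r}; expected one of {sorted(MODE_TEMPLATES)}")
    return MODE_TEMPLATES[mode].format(user_input=user_input)
```

Keeping the templates in a single dictionary means a new option only needs one new entry, and an unknown mode fails loudly instead of silently using the wrong instructions.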

Note: Prompt optimization in this project has been tested with Claude, an AI model. The tests compare user inputs with their optimized versions, and the project achieves a perfect score in 39 out of 39 tests. For details, please refer to the "test_default.ipynb" and "output.json" files.

The OptiPrompt empowers you with the ability to provide custom instructions, allowing you to whisper specific guidance to the language model. These custom instructions serve as a form of persistent memory, influencing the behavior of the chatbot across different sessions.

- Customize Your Guidance: Craft personalized instructions in plain text to guide the language model in a particular direction. You can provide context, preferences, or specific expectations.

- Save to Local File: Your custom instructions are saved to a local file, ensuring they persist even after closing the chatbot session. This file acts as a repository of your guidance.

- Influence Model Behavior: During subsequent interactions, the chatbot will refer to your saved custom instructions, effectively remembering your preferences and following your guidance.

- Adapt and Refine: You can update and refine your custom instructions over time, enabling the language model to adapt to your evolving needs and preferences.
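The save-and-reload cycle described in these steps can be sketched with a plain text file. This is a minimal illustration under stated assumptions: the file name `custom_instructions.txt` and the helpers `save_instructions` and `load_instructions` are hypothetical, and the project may store its instructions differently.

```python
from pathlib import Path

# Hypothetical location; the project's real file name may differ.
DEFAULT_PATH = Path("custom_instructions.txt")


def save_instructions(text: str, path: Path = DEFAULT_PATH) -> None:
    """Persist the user's custom instructions as UTF-8 plain text."""
    path.write_text(text.strip(), encoding="utf-8")


def load_instructions(path: Path = DEFAULT_PATH) -> str:
    """Return saved instructions, or an empty string if none exist yet."""
    if not path.exists():
        return ""
    return path.read_text(encoding="utf-8")
```

In a later session, the loaded text could be passed to the model as a system message, which is one way saved guidance could carry over between conversations.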

Custom instructions provide a powerful way to tailor your interactions with the OptiPrompt. Whether you want to fine-tune responses, shape the conversation, or achieve specific outcomes, your instructions serve as a valuable resource for persistent memory and guidance.

Harness the potential of custom instructions to create more meaningful and personalized conversations with the chatbot, making it a truly adaptable assistant that understands your unique requirements.

For more examples, please refer to the Documentation.

- Streamlit app setup with customizable settings and styling.

- Integration of Transformers LLM with support for model selection.

- Four distinct input types: multi-step, judge, code, and default.

- User-friendly interface for prompt engineering with real-time previews.

- System message functionality for context-aware interactions.

- Chat history display for tracking conversations.

- Save customized instructions to a local file for future sessions.

- Documentation and GitHub repository setup.

- Continued language support expansion.

- Integration with other local models.

- Collaboration with domain-specific experts for specialized knowledge.

- Exploration of educational and research applications.

- User feedback sessions for continuous improvement.