This repo contains a basic procedure to train and deploy the DNN model proposed in the paper 'Deep Floor Plan Recognition using a Multi-task Network with Room-boundary-Guided Attention'. It ports the original code from zlzeng/DeepFloorplan to newer versions of TensorFlow and Python.

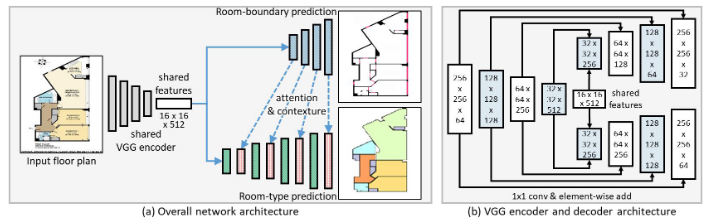

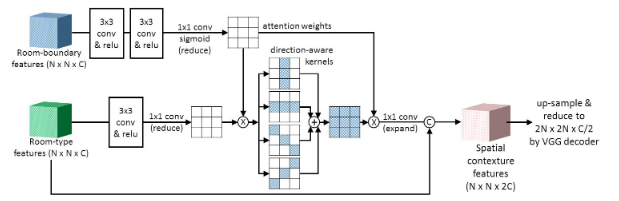

Network architectures from the paper.

Depending on your application, use one of the following installation methods:

| OS | Hardware | Application | Command |
|---|---|---|---|
| Ubuntu | CPU | Model Development | `pip install -e .[tfcpu,dev,testing,linting]` |
| Ubuntu | GPU | Model Development | `pip install -e .[tfgpu,dev,testing,linting]` |
| macOS | M1 Chip | Model Development | `pip install -e .[tfmacm1,dev,testing,linting]` |
| Ubuntu | GPU | Model Deployment API | `pip install -e .[tfgpu,api]` |
| Ubuntu | GPU | Everything | `pip install -e .[tfgpu,api,dev,testing,linting,game]` |
| Agnostic | ... | Docker | (to be updated) |
| Ubuntu | GPU | Notebook | `pip install -e .[tfgpu,jupyter]` |
| Ubuntu | GPU | Game | `pip install -e .[tfgpu,game]` |

- Install packages:

```bash
# Option 1
python -m venv venv
source venv/bin/activate
pip install --upgrade pip setuptools wheel

# Option 2 (Preferred)
conda create -n venv python=3.8 cudatoolkit=10.1 cudnn=7.6.5
conda activate venv

# Common install (either option)
pip install -e .[tfgpu,api,dev,testing,linting]
```

- Following the original repo, download the r3d dataset and convert it to tfrecords (`r3d.tfrecords`). Friendly reminder: the original repo also trained its model on a second dataset, r2v; I did not use it here because access to it is limited. See zlzeng/DeepFloorplan#17.

- Run `train.py` to start training. The model checkpoint is stored in `log/store/G` and the weights in `model/store`:

```
python -m dfp.train [--batchsize 2][--lr 1e-4][--epochs 1000]
[--logdir 'log/store'][--modeldir 'model/store']
[--save-tensor-interval 10][--save-model-interval 20]
[--tfmodel 'subclass'/'func'][--feature-channels 256 128 64 32]
[--backbone 'vgg16'/'mobilenetv1'/'mobilenetv2'/'resnet50']
[--feature-names block1_pool block2_pool block3_pool block4_pool block5_pool]
```

- For example:

```bash
python -m dfp.train --batchsize=4 --lr=5e-4 --epochs=100 \
    --logdir=log/store --modeldir=model/store
```
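To see how these flags fit together, here is a hypothetical `argparse` mirror of the training synopsis and example. It is not the repo's actual parser: the defaults are read off the synopsis (which presumably lists them), the `subclass` and `vgg16` defaults for `--tfmodel` and `--backbone` are assumptions, and `build_train_parser` is an illustrative name.

```python
import argparse


def build_train_parser() -> argparse.ArgumentParser:
    """Hypothetical mirror of the documented dfp.train flags (not the repo's parser)."""
    p = argparse.ArgumentParser(prog="dfp.train")
    p.add_argument("--batchsize", type=int, default=2)
    p.add_argument("--lr", type=float, default=1e-4)
    p.add_argument("--epochs", type=int, default=1000)
    p.add_argument("--logdir", type=str, default="log/store")
    p.add_argument("--modeldir", type=str, default="model/store")
    p.add_argument("--save-tensor-interval", type=int, default=10)
    p.add_argument("--save-model-interval", type=int, default=20)
    # Assumed default: the synopsis only lists the two choices.
    p.add_argument("--tfmodel", choices=["subclass", "func"], default="subclass")
    # Space-separated list of ints, e.g. --feature-channels 32 32 32 32
    p.add_argument("--feature-channels", type=int, nargs="+", default=[256, 128, 64, 32])
    # Assumed default: block*_pool are VGG16 layer names.
    p.add_argument(
        "--backbone",
        choices=["vgg16", "mobilenetv1", "mobilenetv2", "resnet50"],
        default="vgg16",
    )
    p.add_argument(
        "--feature-names",
        nargs="+",
        default=["block1_pool", "block2_pool", "block3_pool", "block4_pool", "block5_pool"],
    )
    return p


# Parse the example invocation from this README.
args = build_train_parser().parse_args(
    ["--batchsize=4", "--lr=5e-4", "--epochs=100", "--logdir=log/store", "--modeldir=model/store"]
)
print(args)
```

Flags that are not given keep their defaults, so the example above still trains with feature channels `[256, 128, 64, 32]`.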

- Run TensorBoard to monitor the losses and output images:

```bash
tensorboard --logdir=log/store
```

- Convert the model to TFLite via `convert2tflite.py`:

```
python -m dfp.convert2tflite [--modeldir model/store]
[--tflitedir model/store/model.tflite]
[--loadmethod 'log'/'none'/'pb']
[--quantize][--tfmodel 'subclass'/'func']
[--feature-channels 256 128 64 32]
[--backbone 'vgg16'/'mobilenetv1'/'mobilenetv2'/'resnet50']
[--feature-names block1_pool block2_pool block3_pool block4_pool block5_pool]
```

- Download and unzip the models from Google Drive:

```bash
gdown "https://drive.google.com/uc?id=1czUSFvk6Z49H-zRikTc67g2HUUz4imON" # log files, 112.5 MB
unzip log.zip
gdown "https://drive.google.com/uc?id=1tuqUPbiZnuubPFHMQqCo1_kFNKq4hU8i" # pb files, 107.3 MB
unzip model.zip
gdown "https://drive.google.com/uc?id=1B-Fw-zgufEqiLm00ec2WCMUo5E6RY2eO" # tflite file, 37.1 MB
unzip tflite.zip
```

- Deploy the model via `deploy.py`. Make sure the `--loadmethod` value matches the `--weight` input: `log` with `log/store/G`, `pb` with `model/store`, and `tflite` with `model/store/model.tflite`.

```
python -m dfp.deploy [--image 'path/to/image']
[--postprocess][--colorize][--save 'path/to/output_image']
[--loadmethod 'log'/'pb'/'tflite']
[--weight 'log/store/G'/'model/store'/'model/store/model.tflite']
[--tfmodel 'subclass'/'func']
[--feature-channels 256 128 64 32]
[--backbone 'vgg16'/'mobilenetv1'/'mobilenetv2'/'resnet50']
[--feature-names block1_pool block2_pool block3_pool block4_pool block5_pool]
```

- For example:

```bash
python -m dfp.deploy --image floorplan.jpg --weight log/store/G \
    --postprocess --colorize --save output.jpg --loadmethod log
```
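The rule that `--loadmethod` must match `--weight` can be checked before loading anything. This is a minimal sketch; `weight_matches_loadmethod` is a hypothetical helper, not part of `dfp.deploy`. It assumes that `log/store/G` is a TensorFlow-format weights prefix (so a `G.index` file sits next to it), that `model/store` is a SavedModel directory (which normally contains `saved_model.pb`), and that the TFLite weight is a single `.tflite` file.

```python
import os


def weight_matches_loadmethod(loadmethod: str, weight: str) -> bool:
    """Return True if `weight` looks like the artifact that `loadmethod` expects.

    Hypothetical helper; the file layouts checked here are assumptions.
    """
    if loadmethod == "tflite":
        # A single flatbuffer file, e.g. model/store/model.tflite.
        return weight.endswith(".tflite") and os.path.isfile(weight)
    if loadmethod == "pb":
        # A SavedModel directory, e.g. model/store, holds saved_model.pb.
        return os.path.isfile(os.path.join(weight, "saved_model.pb"))
    if loadmethod == "log":
        # Assumption: log/store/G is a weights prefix written as G.index + G.data-*.
        return os.path.isfile(weight + ".index")
    raise ValueError(f"unknown --loadmethod: {loadmethod!r}")
```

For example, `weight_matches_loadmethod("log", "log/store/G")` should only return `True` once training has written the checkpoint.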

- Play with pygame:

```bash
python -m dfp.game
```

- Build and run the Docker container. (Please train your own weights; the Google Drive download currently does not work because of a Google Drive update.)

```bash
docker build -t tf_docker -f Dockerfile .

# CPU
docker run -d -p 1111:1111 tf_docker:latest

# GPU
docker run --gpus all -d -p 1111:1111 tf_docker:latest

# Mount the source files for Flask hot reloading
docker run -v ${PWD}/src/dfp/app.py:/src/dfp/app.py -v ${PWD}/src/dfp/deploy.py:/src/dfp/deploy.py -d -p 1111:1111 tf_docker:latest

# Follow the container's logs
docker logs `docker ps | grep "tf_docker:latest" | awk '{ print $1 }'` --follow
```

- Call the API to get the output:

```bash
curl -H "Content-Type: application/json" --request POST \
  -d '{"uri":"https://cdn.cnn.com/cnnnext/dam/assets/200212132008-04-london-rental-market-intl-exlarge-169.jpg","colorize":1,"postprocess":0}' \
  http://0.0.0.0:1111/uri --output /tmp/tmp.jpg

curl --request POST -F "file=@resources/30939153.jpg" \
  -F "postprocess=0" -F "colorize=0" http://0.0.0.0:1111/upload --output out.jpg
```

- If you run `app.py` without Docker, the second `curl` command (file upload) will not work.
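The first `curl` call can also be made from Python using only the standard library. This is a minimal sketch that assumes the container from the Docker step is listening on `http://0.0.0.0:1111`; `build_uri_request` and `save_response` are hypothetical helper names, and the JSON fields simply mirror the `curl` payload (`uri`, `colorize`, `postprocess`).

```python
import json
import urllib.request


def build_uri_request(
    image_uri: str,
    colorize: bool = True,
    postprocess: bool = False,
    host: str = "http://0.0.0.0:1111",
) -> urllib.request.Request:
    """Build a POST /uri request with the same JSON body as the curl example."""
    payload = {"uri": image_uri, "colorize": int(colorize), "postprocess": int(postprocess)}
    return urllib.request.Request(
        url=f"{host}/uri",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def save_response(request: urllib.request.Request, out_path: str) -> None:
    """Send the request and write the returned image bytes to out_path."""
    with urllib.request.urlopen(request) as resp, open(out_path, "wb") as f:
        f.write(resp.read())
```

Usage: `save_response(build_uri_request(image_url), "/tmp/tmp.jpg")`, where `image_url` is any publicly reachable floor-plan image. Building the request separately from sending it keeps the payload easy to inspect without a running server.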

- Click on the Colab link and authorize access.

- Run the first two code cells to install the packages.

- Go to the Runtime tab and click Restart runtime so that the newly installed packages take effect.

- Run the rest of the notebook.

- Git clone this repo.

- Install the required packages and pre-commit hooks:

```bash
pip install -e .[tfgpu,api,dev,testing,linting]
pre-commit install
pre-commit run
pre-commit run --all-files
# To remove: pre-commit uninstall / pip uninstall pre-commit
```

- Create an issue. The maintainer will decide whether it requires a branch. If so:

```bash
git fetch origin
git checkout xx-features
```

- Stage your files, then commit and push to the branch.

- Once the pull request is merged, the issue is resolved and the branch is deleted. You can then clean up locally:

```bash
git checkout main
git pull
git remote prune origin
git branch -d xx-features
```

- From

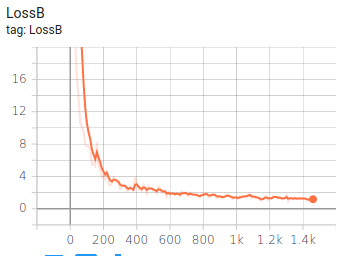

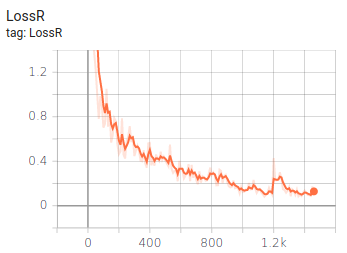

train.pyandtensorboard.

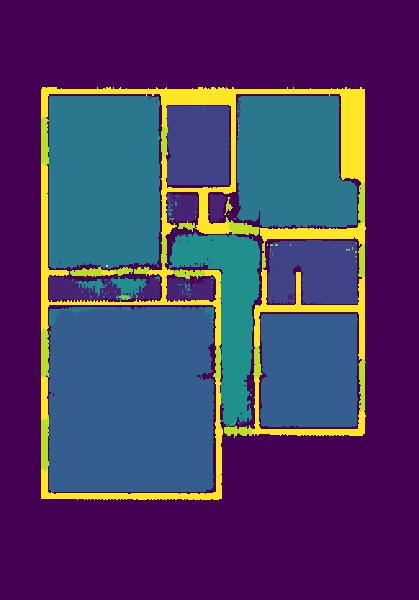

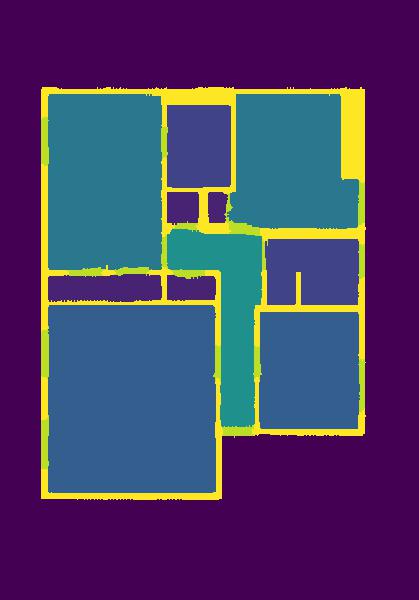

Panels: Compare Ground Truth (top) against Outputs (bottom), Total Loss, Boundary Loss, Room Loss.

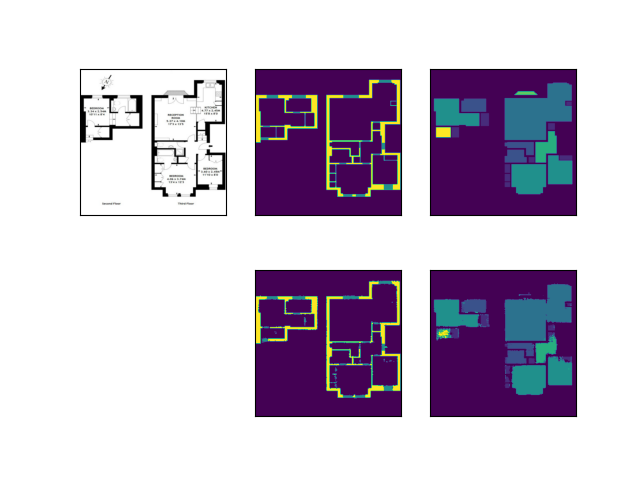

- From `deploy.py` and `utils/legend.py`.

Panels: Input, Legend, Output; outputs shown with `--colorize`, `--postprocess`, and `--colorize --postprocess`.

- Backbone Comparison in Size

| Backbone | log (MB) | pb (MB) | tflite (MB) | toml |
|---|---|---|---|---|
| VGG16 | 130.5 | 119 | 45.3 | link |
| MobileNetV1 | 102.1 | 86.7 | 50.2 | link |
| MobileNetV2 | 129.3 | 94.4 | 57.9 | link |
| ResNet50 | 214 | 216 | 107.2 | link |

- Feature Selection Comparison in Size

| Backbone | Feature Names | log (MB) | pb (MB) | tflite (MB) | toml |
|---|---|---|---|---|---|
| MobileNetV1 | `conv_pw_1_relu`, `conv_pw_3_relu`, `conv_pw_5_relu`, `conv_pw_7_relu`, `conv_pw_13_relu` | 102.1 | 86.7 | 50.2 | link |
| MobileNetV1 | `conv_pw_1_relu`, `conv_pw_3_relu`, `conv_pw_5_relu`, `conv_pw_7_relu`, `conv_pw_12_relu` | 84.5 | 82.3 | 49.2 | link |

- Feature Channels Comparison in Size

| Backbone | Channels | log (MB) | pb (MB) | tflite (MB) | toml |
|---|---|---|---|---|---|
| VGG16 | [256,128,64,32] | 130.5 | 119 | 45.3 | link |
| VGG16 | [128,64,32,16] | 82.4 | 81.6 | 27.3 | |
| VGG16 | [32,32,32,32] | 73.2 | 67.5 | 18.1 | link |
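The feature-channels comparison can be read as relative size savings. This small arithmetic sketch uses only the VGG16 tflite sizes from that table; `reduction` is a hypothetical helper. Relative to `[256,128,64,32]`, the `[128,64,32,16]` model is about 40% smaller and the `[32,32,32,32]` model about 60% smaller.

```python
# VGG16 tflite sizes in MB, copied from the feature-channels table.
TFLITE_MB = {
    (256, 128, 64, 32): 45.3,
    (128, 64, 32, 16): 27.3,
    (32, 32, 32, 32): 18.1,
}


def reduction(before: float, after: float) -> float:
    """Fractional size reduction when going from `before` MB to `after` MB."""
    return (before - after) / before


baseline = TFLITE_MB[(256, 128, 64, 32)]
for channels, size in TFLITE_MB.items():
    print(f"{list(channels)}: {size} MB, {reduction(baseline, size):.0%} smaller than baseline")
```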

- tfmot (TensorFlow Model Optimization Toolkit)
  - Pruning (not working)
  - Clustering (not working)
  - Post-training quantization (works best)
  - Quantization-aware training (not supported by the TensorFlow version used here)
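Post-training quantization, the option that works best here, maps trained float32 values to 8-bit integers without retraining. The sketch below shows a generic asymmetric (affine) int8 scheme in plain Python for illustration only. TFLite's converter uses its own scheme (for example, symmetric per-channel int8 weights), and this README does not say which quantization mode `--quantize` selects; `quantize_int8` and `dequantize_int8` are hypothetical names.

```python
def quantize_int8(values):
    """Asymmetric (affine) int8 quantization: q = round(x / scale) + zero_point."""
    lo = min(min(values), 0.0)  # include 0.0 in the range so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # 255 steps between the 256 int8 levels; guard all-zero input
    zero_point = round(-128 - lo / scale)  # integer that represents 0.0
    q = [max(-128, min(127, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(v - zero_point) * scale for v in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
print(q, scale, zp)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each value is stored in 1 byte instead of 4, at the cost of a rounding error of about half a quantization step per value.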