This repo is the official implementation of "Spatially Adaptive Inference with Stochastic Feature Sampling and Interpolation" on COCO object detection. The code is based on MMDetection v0.6.0.

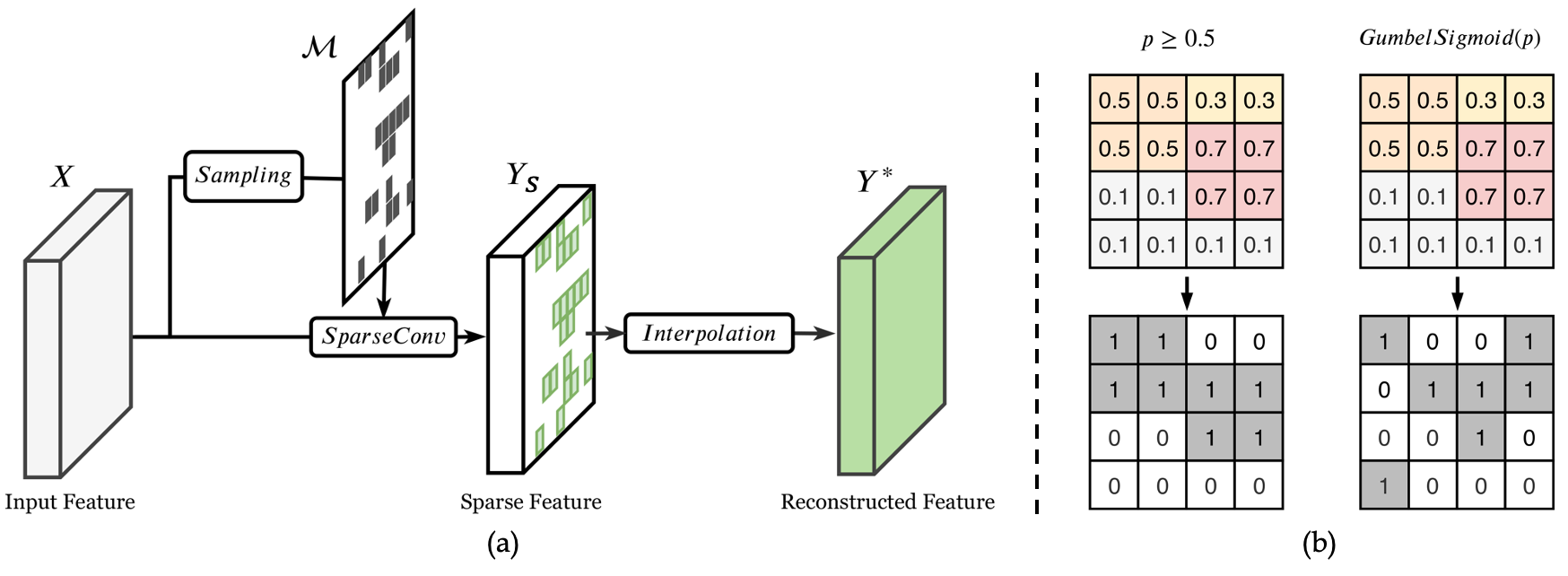

Stochastic sampling-interpolation network (a) and comparison between deterministic sampling (b, left) and stochastic sampling (b, right).

Abstract. In the feature maps of CNNs, there commonly exists considerable spatial redundancy that leads to much repetitive processing. Towards reducing this superfluous computation, we propose to compute features only at sparsely sampled locations, which are probabilistically chosen according to activation responses, and then densely reconstruct the feature map with an efficient interpolation procedure. With this sampling-interpolation scheme, our network avoids expending computation on spatial locations that can be effectively interpolated, while being robust to activation prediction errors through broadly distributed sampling. A technical challenge of this sampling-based approach is that the binary decision variables for representing discrete sampling locations are non-differentiable, making them incompatible with backpropagation. To circumvent this issue, we make use of a reparameterization trick based on the Gumbel-Softmax distribution, with which backpropagation can iterate these variables towards binary values. The presented network is experimentally shown to save substantial computation while maintaining accuracy over a variety of computer vision tasks.
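The two ideas in the abstract, a relaxed binary sampling decision and interpolation from the sampled locations, can be illustrated with a small standard-library sketch. This is not the code in this repo: the function names, the 1D layout, the Gaussian weighting, and the `radius` and `sigma` parameters are all assumptions made for illustration.

```python
import math
import random


def sample_gumbel(rng):
    """Draw one sample from the standard Gumbel(0, 1) distribution."""
    u = rng.random()
    # Clamp away from 0 and 1 so both logarithms stay finite.
    u = min(max(u, 1e-12), 1.0 - 1e-12)
    return -math.log(-math.log(u))


def stable_sigmoid(z):
    """Sigmoid that avoids overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relaxed_binary_mask(logits, tau, rng):
    """Two-class Gumbel-Softmax relaxation of a per-location sample/skip choice.

    The "sample" class has logit `logit`, the "skip" class has logit 0, so the
    softmax over both classes reduces to a sigmoid of the noisy logit difference.
    Lower temperatures `tau` push the outputs towards 0 or 1.
    """
    return [
        stable_sigmoid((logit + sample_gumbel(rng) - sample_gumbel(rng)) / tau)
        for logit in logits
    ]


def interpolate(features, mask, radius=2, sigma=1.0):
    """Fill unsampled positions from sampled neighbours (illustrative 1D version).

    Sampled positions keep their value; others take a Gaussian-weighted average
    of sampled positions within `radius`, or 0.0 if there are none.
    """
    n = len(features)
    out = []
    for i in range(n):
        if mask[i]:
            out.append(features[i])
            continue
        num = den = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            if mask[j]:
                w = math.exp(-((i - j) ** 2) / (2.0 * sigma ** 2))
                num += w * features[j]
                den += w
        out.append(num / den if den > 0 else 0.0)
    return out


# Hypothetical activation logits and features for six locations.
rng = random.Random(0)
soft = relaxed_binary_mask([2.0, -1.0, 0.5, -3.0, 1.5, -0.5], tau=0.5, rng=rng)
hard = [s > 0.5 for s in soft]  # binarised decisions, as used at inference
dense = interpolate([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], hard)
```

In a real network the soft values would stay in the computation graph during training so that gradients can reach the logits; here they are only thresholded to show the sampling-then-interpolation flow.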

All models are based on Faster R-CNN with FPN.

| Backbone | Resolution | Sparse Loss Weight | mAP | GFLOPs | Config | Model URL | Model sha256sum |
|---|---|---|---|---|---|---|---|
| ResNet-101 | 500 | - | 38.5 | 70.0 | config | model | 206b4c0e |
| ResNet-101 | 600 | - | 40.4 | 100.2 | config | model | c4e102de |
| ResNet-101 | 800 | - | 42.3 | 184.1 | config | model | 3fc2af7a |
| ResNet-101 | 1000 | - | 43.4 | 289.5 | config | model | e043c999 |
| SparseResNet-101 | 1000 | 0.02 | 43.3 | 164.8 | config | model | 16a152e0 |
| SparseResNet-101 | 1000 | 0.05 | 42.7 | 120.3 | config | model | f0a467c8 |
| SparseResNet-101 | 1000 | 0.1 | 41.9 | 94.4 | config | model | 1c9bf665 |
| SparseResNet-101 | 1000 | 0.2 | 40.7 | 71.4 | config | model | 46044e4a |
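A downloaded checkpoint can be checked against the Model sha256sum column with a few lines of Python. The sketch below assumes the column holds the first 8 hex characters of the file's SHA-256 digest; the helper names and the file name in the comment are hypothetical.

```python
import hashlib


def sha256_prefix(path, length=8, chunk_size=1 << 20):
    """Return the first `length` hex characters of a file's SHA-256 digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large checkpoints do not need to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()[:length]


def matches_table(path, expected_prefix):
    """Compare a file against a value copied from the sha256sum column."""
    return sha256_prefix(path, len(expected_prefix)) == expected_prefix.lower()


# Example (hypothetical local file name):
# matches_table("sparse_res101_w0.02.pth", "16a152e0")
```

A mismatch most likely means an incomplete download or the wrong table row.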

At present, we have not checked the compatibility of the code with other versions of the packages, so we only recommend the following configuration.

- Python 3.7

- PyTorch == 1.1.0

- Torchvision == 0.3.0

- CUDA 9.0

- Other dependencies
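Because only this configuration is recommended, it can help to confirm the active environment before compiling the operators. The following is a minimal sketch, not part of the repo: `RECOMMENDED`, `version_matches`, and `check_environment` are hypothetical names, and the CUDA check assumes `torch.version.cuda` reports a string beginning with `9.0`.

```python
import sys
from importlib import import_module

# Versions taken from the recommended configuration listed above.
RECOMMENDED = {"torch": "1.1.0", "torchvision": "0.3.0"}


def version_matches(installed, recommended):
    """True if `installed` equals `recommended` once a local suffix like '+cu90' is dropped."""
    return installed.split("+")[0] == recommended


def check_environment():
    """Return a list of human-readable mismatches; an empty list means all checks passed."""
    problems = []
    if sys.version_info[:2] != (3, 7):
        problems.append("Python %d.%d found, 3.7 recommended" % sys.version_info[:2])
    for name, wanted in RECOMMENDED.items():
        try:
            module = import_module(name)
        except ImportError:
            problems.append("%s is not installed (recommended %s)" % (name, wanted))
            continue
        found = str(getattr(module, "__version__", "unknown"))
        if not version_matches(found, wanted):
            problems.append("%s %s found, %s recommended" % (name, found, wanted))
        if name == "torch":
            # torch.version.cuda is None for CPU-only builds.
            cuda = getattr(getattr(module, "version", None), "cuda", None)
            if cuda is None or not str(cuda).startswith("9.0"):
                problems.append("CUDA %s found, 9.0 recommended" % cuda)
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["Environment matches the recommended configuration."]:
        print(line)
```

The script only reports differences; it does not claim that other versions fail to work.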

We recommend using a conda environment to set up the experimental environment.

    # Create environment
    conda create -n SAI_Det python=3.7 -y
    conda activate SAI_Det

    # Install PyTorch & Torchvision
    conda install pytorch=1.1.0 cudatoolkit=9.0 torchvision -c pytorch -y

    # Clone repo
    git clone https://github.com/zdaxie/SpatiallyAdaptiveInference-Detection ./SAI_Det
    cd ./SAI_Det

    # Create soft link for data
    mkdir data
    cd data
    ln -s ${COCO-Path} ./coco
    cd ..

    # Install requirements and compile operators
    ./init.sh

For now, we only support training with 8 GPUs.

    # Test with the given config & model
    ./tools/dist_test.sh ${config-path} ${model-path} ${num-gpus} --out ${output-file.pkl}

    # Train with the given config
    ./tools/dist_train.sh ${config-path} ${num-gpus}

This project is released under the Apache 2.0 license.

If you use our codebase or models in your research, please cite this project.

    @InProceedings{xie2020spatially,
      author = {Xie, Zhenda and Zhang, Zheng and Zhu, Xizhou and Huang, Gao and Lin, Steve},
      title = {Spatially Adaptive Inference with Stochastic Feature Sampling and Interpolation},
      booktitle = {European Conference on Computer Vision (ECCV)},
      year = {2020},
      month = {August},
    }