This repository was created to demonstrate an issue in Hazelcast CPSubsystem. The original code is a copy of this example. (The copied state was this commit).

- build the projects with `./gradlew build`
- build the images with `docker-compose build`
- start a Docker swarm with the `docker swarm init` command (later you can destroy it with `docker swarm leave --force`)
- deploy the cluster with `docker stack deploy --compose-file docker-compose.yml hz-issue` (at the end you can remove the cluster with `docker stack rm hz-issue`)
- once deployed, Eureka is available on port 8761 and the Hazelcast example on port 8080
- you can put entries into a map with the URL `http://localhost:8080/put?key=some-key&value=some-value` and read them with `http://localhost:8080/get?key=some-key`
- you can modify the same map, guarded by Hazelcast CPSubsystem locks, with `http://localhost:8080/lock-put?key=some-key&value=some-value` and `http://localhost:8080/lock-get?key=some-key`
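The endpoints in the list above are plain URLs, so they can also be exercised from code. The sketch below is a hypothetical client (`EndpointClient` and its helpers are illustrative, not part of this repository). It assumes the stack is deployed on `localhost:8080` and that the endpoints accept GET requests, as the browser-style URLs suggest. The response bodies are not documented here, so the sketch only prints them.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class EndpointClient {
    // Base URL of the example service as published by the swarm (port 8080).
    static final String BASE = "http://localhost:8080";

    /** Builds e.g. http://localhost:8080/put?key=some-key&value=some-value with URL-encoded key and value. */
    static String putUrl(String path, String key, String value) {
        return BASE + "/" + path + "?key=" + enc(key) + "&value=" + enc(value);
    }

    /** Builds e.g. http://localhost:8080/get?key=some-key with a URL-encoded key. */
    static String getUrl(String path, String key) {
        return BASE + "/" + path + "?key=" + enc(key);
    }

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    /** Sends a GET request and returns the response body; requires the stack to be running. */
    static String call(HttpClient client, String url) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        // Plain map access.
        System.out.println(call(client, putUrl("put", "some-key", "some-value")));
        System.out.println(call(client, getUrl("get", "some-key")));
        // Same map, guarded by a CPSubsystem lock; may not return normally if the CP Subsystem never formed.
        System.out.println(call(client, putUrl("lock-put", "some-key", "some-value")));
        System.out.println(call(client, getUrl("lock-get", "some-key")));
    }
}
```

Only `main` needs the running stack. The URL helpers work on their own and encode keys and values, so characters such as `&` or spaces do not break the query string.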

In class `Application`, the CPSubsystem is configured with 4 CP members, and each CP group is formed by 3 members. The Eureka URL is also changed from `localhost` to the service name in the swarm, and the network interface is set to `10.0.*.*`, so Hazelcast registers the swarm network interface with Eureka and the nodes can talk to each other.
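For reference, the Hazelcast Java API exposes these settings as `config.getCPSubsystemConfig().setCPMemberCount(4)`, `config.getCPSubsystemConfig().setGroupSize(3)` and `config.getNetworkConfig().getInterfaces().setEnabled(true).addInterface("10.0.*.*")`; presumably `Application` uses calls along these lines, but its exact code is not reproduced here. To show why the `10.0.*.*` pattern selects the swarm network, the following is a simplified, hypothetical matcher (`InterfaceMatcher` is illustrative, not Hazelcast's implementation): each octet must either match exactly or be `*`. Hazelcast's own interface syntax also accepts ranges, which this sketch ignores, and the candidate addresses in `main` are made up.

```java
import java.util.List;
import java.util.Optional;

public class InterfaceMatcher {
    /**
     * Returns true if an IPv4 address matches a pattern such as "10.0.*.*".
     * Each of the four pattern octets must be "*" or equal to the address octet.
     * Simplified: range syntax (e.g. "10.0.0.1-10") is not handled.
     */
    static boolean matches(String pattern, String address) {
        String[] p = pattern.split("\\.");
        String[] a = address.split("\\.");
        if (p.length != 4 || a.length != 4) {
            return false;
        }
        for (int i = 0; i < 4; i++) {
            if (!p[i].equals("*") && !p[i].equals(a[i])) {
                return false;
            }
        }
        return true;
    }

    /** Picks the first candidate address that matches the pattern. */
    static Optional<String> pick(String pattern, List<String> candidates) {
        return candidates.stream().filter(addr -> matches(pattern, addr)).findFirst();
    }

    public static void main(String[] args) {
        // Hypothetical container addresses: loopback, a bridge network and the swarm overlay network.
        List<String> candidates = List.of("127.0.0.1", "172.18.0.3", "10.0.1.7");
        System.out.println(pick("10.0.*.*", candidates).orElse("none")); // prints 10.0.1.7
    }
}
```

Without such a restriction a container with several interfaces could advertise an address the other swarm nodes cannot reach, which is the problem the `10.0.*.*` setting avoids.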

The new lock endpoints are defined in `LockCommandController`, which uses locks from the CPSubsystem.
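The locking pattern behind these endpoints can be sketched without a cluster. In Hazelcast a CP lock is obtained with `hazelcastInstance.getCPSubsystem().getLock(name)`, which returns a `FencedLock`; since `FencedLock` implements `java.util.concurrent.locks.Lock`, the sketch below accepts any `Lock` and uses a local `ReentrantLock` and `ConcurrentHashMap` as stand-ins for the CP lock and the distributed map. `LockGuardedMap`, the single shared lock and the method names are assumptions for illustration, not the actual code of `LockCommandController`.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class LockGuardedMap {
    // Stand-in for the shared Hazelcast map (a distributed map in the real application).
    private final Map<String, String> map = new ConcurrentHashMap<>();
    // Stand-in for a CPSubsystem lock; a FencedLock can be passed here because it implements Lock.
    private final Lock lock;

    LockGuardedMap(Lock lock) {
        this.lock = lock;
    }

    /** What a lock-put endpoint could do: acquire the lock, write, and always release it. */
    String lockPut(String key, String value) {
        lock.lock();
        try {
            map.put(key, value);
            return value;
        } finally {
            lock.unlock();
        }
    }

    /** What a lock-get endpoint could do: read under the same lock. */
    String lockGet(String key) {
        lock.lock();
        try {
            return map.get(key);
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        LockGuardedMap m = new LockGuardedMap(new ReentrantLock());
        m.lockPut("some-key", "some-value");
        System.out.println(m.lockGet("some-key")); // prints some-value
    }
}
```

With a real `FencedLock`, every `lock()` call depends on a working CP group, which is presumably why the lock endpoints stop working when the CPSubsystem cannot form.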

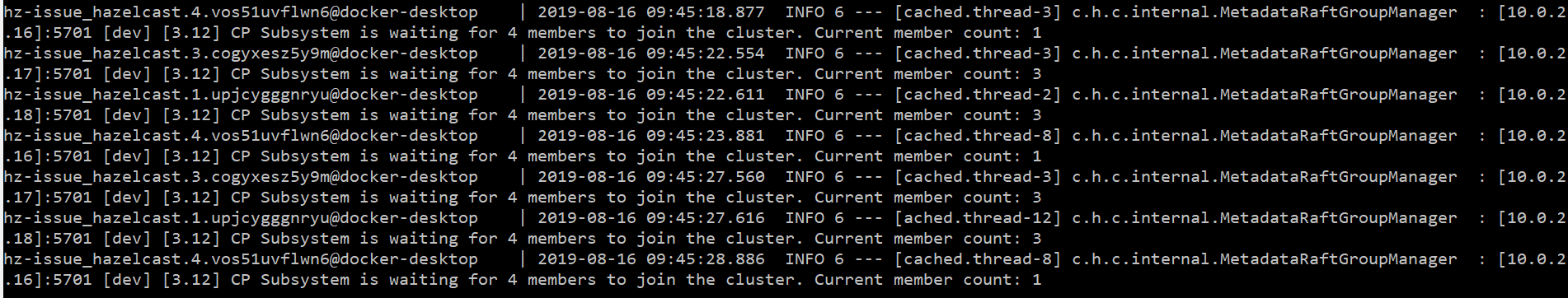

Sometimes, when the nodes start, the CPSubsystem cannot form a cluster. The 4 members can split into 2 clusters (either 2 clusters of 2 members each, or 1 cluster with 1 member and 1 with 3 members), and then no lock can be acquired. You will see a lot of `CP Subsystem is waiting for 4 members to join the cluster. Current member count: 2` log messages, or messages like this:

It seems that Hazelcast can never recover from a state like this.
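The failure can be reasoned about with a deliberately simplified model (an assumption, not Hazelcast's actual discovery code): a sub-cluster finishes initial CP discovery only once it sees at least `cpMemberCount` members, which matches the `waiting for 4 members` log message. With `cpMemberCount = 4`, neither a 2+2 nor a 1+3 split ever reaches 4, even though the 3-member side would have enough members for a majority of a 3-member group. `CpDiscoveryModel` and its methods are hypothetical.

```java
import java.util.List;

public class CpDiscoveryModel {
    /** Simplified rule: a sub-cluster can finish initial CP discovery only with at least cpMemberCount members. */
    static boolean canDiscover(int subClusterSize, int cpMemberCount) {
        return subClusterSize >= cpMemberCount;
    }

    /** Majority needed by a Raft group of the given size, e.g. 2 of 3. */
    static int majority(int groupSize) {
        return groupSize / 2 + 1;
    }

    /** True if any sub-cluster of the split can complete discovery. */
    static boolean anyCanDiscover(List<Integer> split, int cpMemberCount) {
        return split.stream().anyMatch(size -> canDiscover(size, cpMemberCount));
    }

    public static void main(String[] args) {
        int cpMemberCount = 4; // CP member count configured in Application
        int groupSize = 3;     // CP group size configured in Application
        for (List<Integer> split : List.of(List.of(4), List.of(2, 2), List.of(1, 3))) {
            // prints "[4] -> discovery possible: true", then false for [2, 2] and [1, 3]
            System.out.println(split + " -> discovery possible: " + anyCanDiscover(split, cpMemberCount));
        }
        System.out.println("majority of a group of " + groupSize + ": " + majority(groupSize)); // prints 2
    }
}
```

In this model only a single 4-member cluster can proceed, so the outcome depends entirely on whether all nodes find each other at startup.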