Prepare for trainning issues

Closed this issue · 5 comments

In fork branch, not find MSDeformAttn in from ops.modules import MSDeformAttn. So do I need to install additional libraries if I want to run the code?

Looking forward to your reply~

You may refer to #1.

You may refer to #1.

Thanks,I will try~

@zehuichen123. When I run the code, error happens:

2022-08-22 14:34:18,474 - mmdet - INFO - workflow: [('train', 1)], max: 20 epochs

2022-08-22 14:34:18,474 - mmdet - INFO - Checkpoints will be saved to /data/xu-lidar/two/AutoAlignV2/work_dirs/centerpoint_voxel_nus_8subset_bs4_img1_nuimg_detach_deform_multipts by HardDiskBackend.

/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/fusion_layers/coord_transform.py:33: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

torch.tensor(img_meta['pcd_rotation'], dtype=dtype, device=device)

/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/fusion_layers/multi_voxel_deform_fusion.py:103: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than tensor.new_tensor(sourceTensor).

return points.new_tensor(grid), valid_idx

Traceback (most recent call last):

File "tools/train.py", line 224, in <module>

main()

File "tools/train.py", line 213, in main

train_model(

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/apis/train.py", line 28, in train_model

train_detector(

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmdet/apis/train.py", line 170, in train_detector

runner.run(data_loaders, cfg.workflow)

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmcv/runner/epoch_based_runner.py", line 127, in run

epoch_runner(data_loaders[i], **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmcv/runner/epoch_based_runner.py", line 50, in train

self.run_iter(data_batch, train_mode=True, **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmcv/runner/epoch_based_runner.py", line 29, in run_iter

outputs = self.model.train_step(data_batch, self.optimizer,

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmcv/parallel/data_parallel.py", line 75, in train_step

return self.module.train_step(*inputs[0], **kwargs[0])

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmdet/models/detectors/base.py", line 237, in train_step

losses = self(**data)

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmcv/runner/fp16_utils.py", line 98, in new_func

return old_func(*args, **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/detectors/base.py", line 59, in forward

return self.forward_train(**kwargs)

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/detectors/centerpoint_fusion.py", line 128, in forward_train

img_feats, pts_feats = self.extract_feat(

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/detectors/centerpoint_fusion.py", line 90, in extract_feat

pts_feats = self.extract_pts_feat(points, img_feats, img_metas)

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/detectors/centerpoint_fusion.py", line 155, in extract_pts_feat

voxel_features, feature_coors = self.pts_voxel_encoder(

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/mmcv/runner/fp16_utils.py", line 186, in new_func

return old_func(*args, **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/voxel_encoders/voxel_fusion_encoder.py", line 201, in forward

voxel_feats = self.fusion_layer(img_feats, voxel_coors, voxel_feats, \

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/fusion_layers/multi_voxel_deform_fusion.py", line 250, in forward

img_pts = self.obtain_mlvl_feats(img_feats, voxel_coors, voxel_feats,\

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/fusion_layers/multi_voxel_deform_fusion.py", line 302, in obtain_mlvl_feats

self.sample_single(img_ins[level][i * num_camera: (i + 1) * num_camera],

File "/data/xu-lidar/two/AutoAlignV2/mmdet3d/models/fusion_layers/multi_voxel_deform_fusion.py", line 369, in sample_single

img_pts = self.deform_layers[level_num](pts_feats, ref_points, \

File "/data/xu-lidar/two/AutoAlignV2/envs/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

TypeError: forward() missing 1 required positional argument: 'level_start_index'

I find the DeformTransLayer in multi_voxel_deform_fusion.py is not match it in deform_layer.py and missing parameter src_feat or query_feat.

So are these two parameters the same?

Besides, I find the parameter exists in multi_voxel_deform_fusion_v3.py. So should I call the V3 file?

Thanks~

emmm I don't have multi_voxel_deform_fusion.py file, but only multi_voxel_deformfusion.py file. and there exists level_start_index:

if torch.cuda.is_available() and value_flatten.is_cuda:

output = MultiScaleDeformableAttnFunction.apply(

value_flatten, spatial_shapes, level_start_index, sampling_locations,

attention_weights, self.im2col_step)

else:

# WON'T REACH HERE

print("Won't Reach Here")

output = multi_scale_deformable_attn_pytorch(

value_flatten, spatial_shapes, sampling_locations, attention_weights)

emmm I don't have multi_voxel_deform_fusion.py file, but only multi_voxel_deformfusion.py file. and there exists level_start_index:

if torch.cuda.is_available() and value_flatten.is_cuda: output = MultiScaleDeformableAttnFunction.apply( value_flatten, spatial_shapes, level_start_index, sampling_locations, attention_weights, self.im2col_step) else: # WON'T REACH HERE print("Won't Reach Here") output = multi_scale_deformable_attn_pytorch( value_flatten, spatial_shapes, sampling_locations, attention_weights)

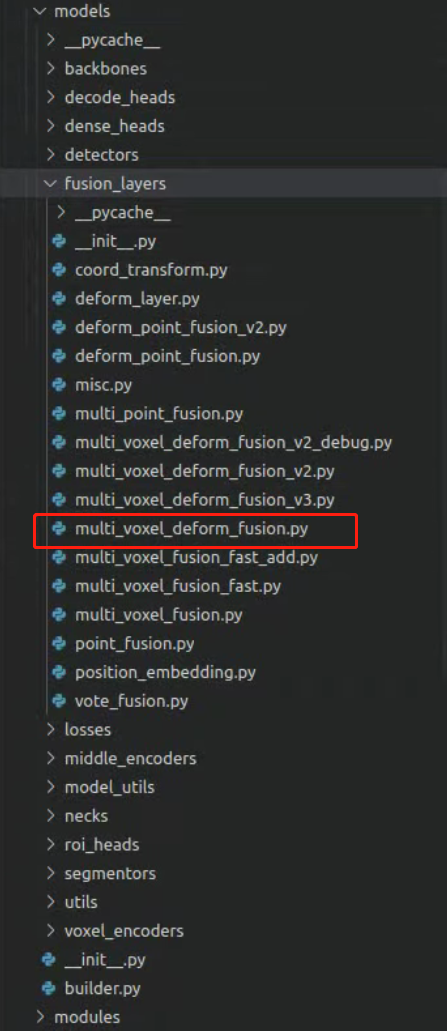

@zehuichen123 .Thanks for your prompt replay. But I fork the https://github.com/CV-Det/AutoAlignV2 branch. The structure of directory is:

we can find the multi_voxel_deform_fusion.py file, not find multi_voxel_deformfusion.py file. Did I download the wrong repository?

Thanks~