This project combines LangChain, AWS OpenSearch, and the Llama-2-7B-Chat LLM to build a chatbot for querying documents. It comprises two models: Ingest and Query. Ingest reads documents from an S3 bucket and stores them as vector embeddings in a user-specified OpenSearch index. Query retrieves relevant documents from that index and uses them to generate responses to your queries.

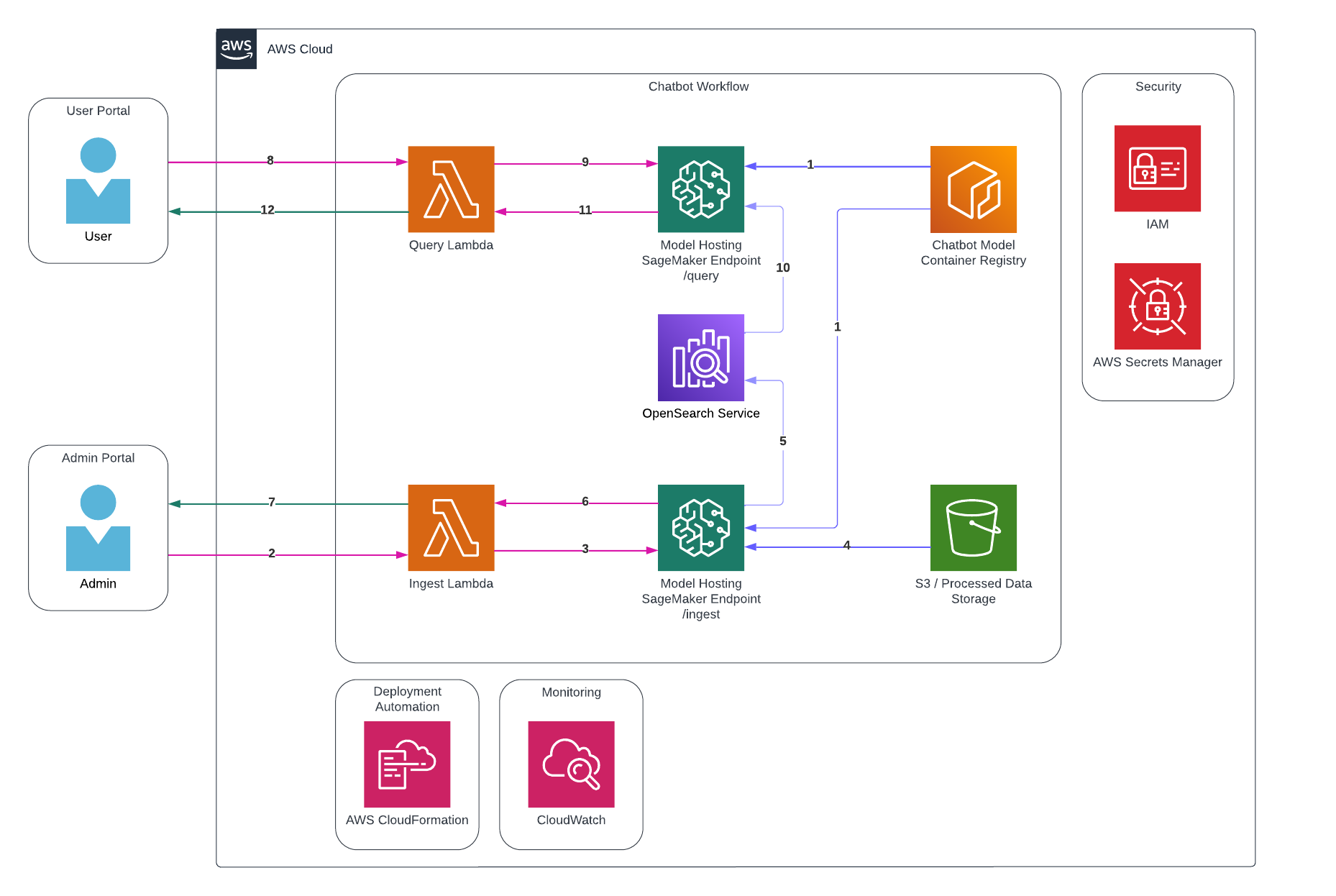

The diagram above outlines the AWS solution for deploying the chatbot with two distinct routes: data ingestion and data querying. The process works as follows:

- The data ingestion and querying endpoints are deployed on Amazon SageMaker, from a specified container registry.

- The user initiates the data ingestion process by making a request to the Ingest Lambda function through its designated function URL.

- The Ingest Lambda function, upon receiving the user's request, invokes the SageMaker endpoint, targeting the '/ingest' route.

- The SageMaker model retrieves the required, already processed data from Amazon S3 and creates embeddings from it.

- The generated embeddings are stored in an OpenSearch vector database for efficient access and retrieval.

- Upon completion, the SageMaker model sends a response back to the Lambda function.

- The user is promptly notified of the ingestion process status through the response received from the Lambda function.

- To perform a data query, the user sends a request to the Query Lambda function using the designated function URL.

- The Query Lambda function, upon receiving the user's query request, invokes the SageMaker endpoint, targeting the '/query' route.

- The SageMaker model processes the user's query, converting it into embeddings, and initiates a similarity search using OpenSearch. The search retrieves data related to the query.

- The model combines the retrieved data with the original query to build a prompt, which is then passed to the large language model (LLM) to generate a response.

- The Lambda function sends back the generated response to the user, completing the query process.
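The retrieval and prompt-building steps of the query route can be illustrated with a minimal, self-contained sketch. It is not the project's implementation: an in-memory cosine-similarity search stands in for the OpenSearch similarity search, the two-dimensional embeddings are hand-written rather than produced by a model, and every name (`IndexedChunk`, `topK`, `buildPrompt`) and the prompt wording are hypothetical.

```typescript
// Illustrative sketch only: names, data, and prompt wording are hypothetical.
// An in-memory search stands in for the OpenSearch similarity search.

interface IndexedChunk {
  text: string;
  embedding: number[];
}

// Cosine similarity between two equal-length vectors (0 if either is all zeros).
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks whose embeddings are most similar to the query embedding.
function topK(queryEmbedding: number[], chunks: IndexedChunk[], k: number): IndexedChunk[] {
  return [...chunks]
    .sort(
      (x, y) =>
        cosineSimilarity(queryEmbedding, y.embedding) -
        cosineSimilarity(queryEmbedding, x.embedding)
    )
    .slice(0, k);
}

// Combine the retrieved chunks and the original query into a single prompt string.
function buildPrompt(query: string, context: IndexedChunk[]): string {
  const contextText = context.map((c) => c.text).join("\n");
  return `Use the following context to answer the question.\n\nContext:\n${contextText}\n\nQuestion: ${query}\nAnswer:`;
}

// Hand-written example data; a real system would get embeddings from a model.
const chunks: IndexedChunk[] = [
  { text: "Invoices are due within 30 days.", embedding: [1, 0] },
  { text: "The office is closed on Sundays.", embedding: [0, 1] },
];
const retrieved = topK([0.9, 0.1], chunks, 1);
const prompt = buildPrompt("When are invoices due?", retrieved);
```

In this sketch the prompt contains only the invoice sentence, because its embedding is closest to the query embedding. In the deployed system the resulting prompt would be sent to the LLM rather than kept in a variable.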

To get started:

- Clone the repository: `git clone https://github.com/HadeelAdal/chatbot-cdk.git`

- Navigate to the project directory: `cd chatbot-cdk`

To deploy the project, use the following command:

`cdk deploy --parameters opensearchUsername={USERNAME} --parameters opensearchPassword={PASSWORD}`

Ingest payload: `{"index": "{INDEX_NAME}", "bucket": "{BUCKET_NAME}", "key": "{FOLDER_KEY}"}`

Query payload: `{"index": "{INDEX_NAME}", "prompt": "{QUERY}"}`
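As a rough sketch of how a client might prepare these requests, the helper below builds a JSON request description for a Lambda function URL. It assumes the ingest request carries `index`, `bucket`, and `key`, and the query request carries `index` and `prompt`. The POST method, the `Content-Type` header, the example URLs, and all names (`buildJsonPost`, `IngestPayload`, `QueryPayload`) are assumptions for illustration, not taken from the repository.

```typescript
// Hypothetical helpers: only the payload field names come from this README.
// The HTTP method, headers, URLs, and example values are assumptions.

interface IngestPayload {
  index: string;  // OpenSearch index to write embeddings to
  bucket: string; // S3 bucket holding the processed documents
  key: string;    // folder key inside the bucket
}

interface QueryPayload {
  index: string;  // OpenSearch index to search
  prompt: string; // the user's question
}

interface JsonPostRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Describe a JSON POST request without sending it.
function buildJsonPost(
  functionUrl: string,
  payload: IngestPayload | QueryPayload
): JsonPostRequest {
  return {
    url: functionUrl,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Placeholder URLs and values; substitute the function URLs from your deployment.
const ingestRequest = buildJsonPost("https://ingest.example.invalid/", {
  index: "my-docs",
  bucket: "my-bucket",
  key: "reports/",
});

const queryRequest = buildJsonPost("https://query.example.invalid/", {
  index: "my-docs",
  prompt: "What does the quarterly report say about revenue?",
});
```

The resulting objects can be handed to any HTTP client. In Node.js 18 or later, for example, `fetch(queryRequest.url, { method: queryRequest.method, headers: queryRequest.headers, body: queryRequest.body })` would send the query.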

Useful commands:

- `npm run build`: compile TypeScript to JS
- `npm run watch`: watch for changes and compile
- `npm run test`: perform the Jest unit tests
- `cdk deploy`: deploy this stack to your default AWS account/region
- `cdk diff`: compare deployed stack with current state
- `cdk synth`: emits the synthesized CloudFormation template