💡 This is the official implementation of the paper "RealNet: A Feature Selection Network with Realistic Synthetic Anomaly for Anomaly Detection (CVPR 2024)" [arxiv]

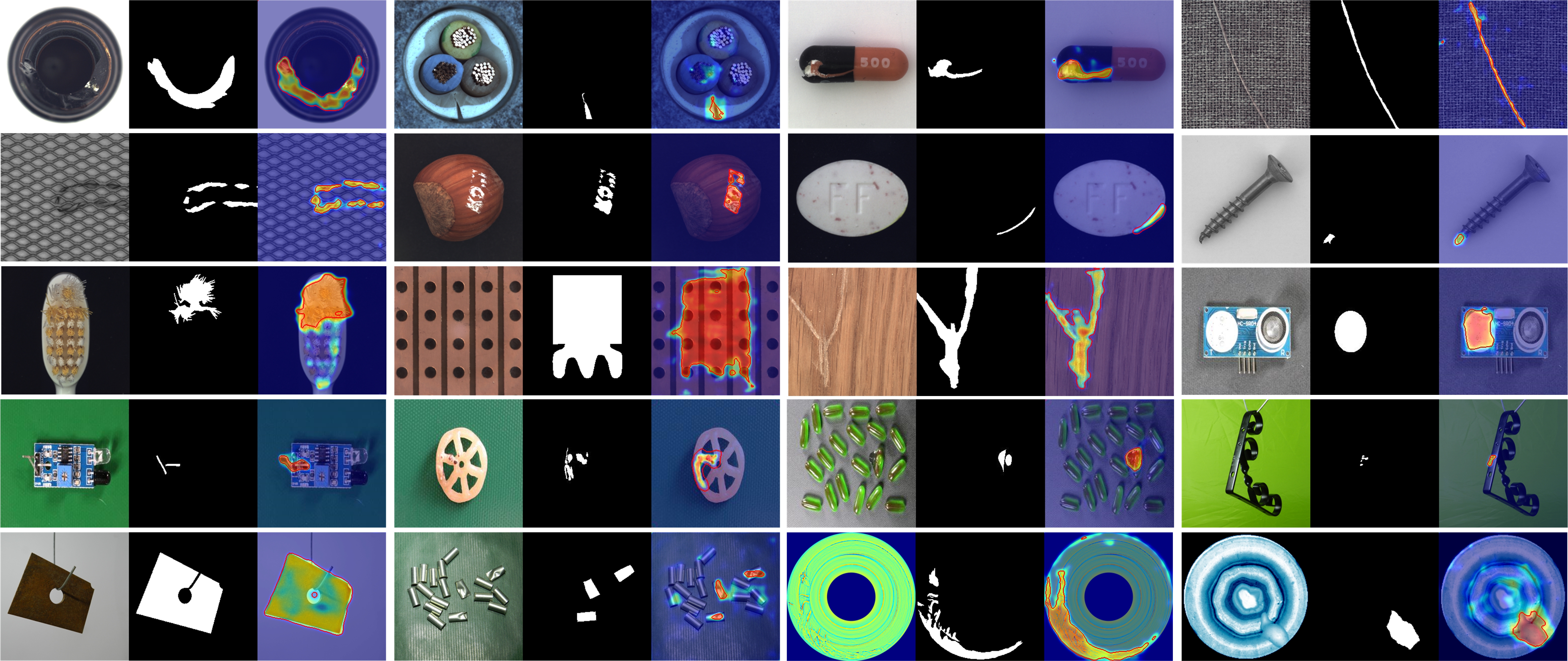

RealNet is a simple yet effective framework that incorporates three key innovations: First, we propose Strength-controllable Diffusion Anomaly Synthesis (SDAS), a diffusion process-based synthesis strategy capable of generating samples with varying anomaly strengths that mimic the distribution of real anomalous samples. Second, we develop Anomaly-aware Features Selection (AFS), a method for selecting representative and discriminative pre-trained feature subsets to improve anomaly detection performance while controlling computational costs. Third, we introduce Reconstruction Residuals Selection (RRS), a strategy that adaptively selects discriminative residuals for comprehensive identification of anomalous regions across multiple levels of granularity.

We use the diffusion model for anomaly synthesis and provide 360k synthetic anomaly images for training anomaly detection models across four datasets (MVTec-AD, MPDD, BTAD, and VisA). [Download]

We also release the diffusion model [Download] and the optional guided classifier [Download] trained on the MVTec-AD, MPDD, BTAD, and VisA datasets.

| Dataset | Image AUROC | Pixel AUROC |
|---|---|---|
| MVTec-AD | 99.6 | 99.0 |
| MPDD | 96.3 | 98.2 |
| BTAD | 96.1 | 97.9 |
| VisA | 97.8 | 98.8 |

To run experiments, first clone the repository and install the dependencies listed in requirements.txt.

$ git clone https://github.com/cnulab/RealNet.git

$ cd RealNet

$ pip install -r requirements.txt

Download the following datasets:

- MVTec-AD [Official] or [Our Link]

- MPDD [Official] or [Our Link]

- BTAD [Official] or [Our Link]

- VisA [Official] or [Our Link]

For the VisA dataset, we have reformatted the files so that they follow the same layout as the other datasets. We strongly recommend downloading it from our link.

If you use the optional DTD dataset for anomaly synthesis, please download:

- DTD [Official] or [Our Link]

Unzip them into the data folder. Please refer to data/README.

We load the diffusion model weights pre-trained on ImageNet, as follows:

- Pre-trained Diffusion [Official] or [Our Link]

We use the guided classifier to improve image quality (optional):

- Pre-trained Guided Classifier [Official] or [Our Link]

Download them and place them in the pretrain folder.
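Before launching a long training run, it can help to confirm that the downloads landed where the scripts expect them. The following is a minimal sketch, not part of the repository: the helper name `missing_or_empty` is hypothetical, and it only checks that the `data` and `pretrain` folders named above exist and are non-empty. It does not validate the layout described in data/README.

```python
from pathlib import Path
from typing import Iterable, List


def missing_or_empty(root: Path, folders: Iterable[str]) -> List[str]:
    """Return the folders under `root` that are absent or contain no entries."""
    problems = []
    for name in folders:
        folder = root / name
        # A folder counts as a problem if it does not exist or has nothing in it.
        if not folder.is_dir() or not any(folder.iterdir()):
            problems.append(name)
    return problems


if __name__ == "__main__":
    # "data" and "pretrain" are the folder names used in this README.
    for name in missing_or_empty(Path("."), ["data", "pretrain"]):
        print(f"{name}/ is missing or empty")
```

Run it from the repository root. An empty output means both folders contain at least one entry.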

Train the diffusion model on the MVTec-AD dataset:

$ python -m torch.distributed.launch --nproc_per_node=4 train_diffusion.py --dataset MVTec-AD

Train the guided classifier on the MVTec-AD dataset:

$ python -m torch.distributed.launch --nproc_per_node=2 train_classifier.py --dataset MVTec-AD

Training the diffusion model takes 48 hours on 4 A40 GPUs, and training the guided classifier takes 3 hours on 2 RTX 3090 GPUs.

We provide the following checkpoints:

- MVTec-AD: [Diffusion], [Guided Classifier]

- MPDD: [Diffusion], [Guided Classifier]

- BTAD: [Diffusion], [Guided Classifier]

- VisA: [Diffusion], [Guided Classifier]

Download them into the experiments folder. Please refer to experiments/README.

Sample anomaly images using 1*RTX3090 GPU:

$ python -m torch.distributed.launch --nproc_per_node=1 sample.py --dataset MVTec-AD

We provide 10k sampled anomaly images at a resolution of 256×256 for each category, which can be downloaded through the following links:

- MVTec-AD [Download]

- MPDD [Download]

- BTAD [Download]

- VisA [Download]
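The 10k-per-category figure is consistent with the 360k total quoted earlier. The short check below shows the arithmetic. The per-dataset category counts are taken from the public descriptions of the four datasets, not from this README, so treat them as an assumption.

```python
# Number of object/texture categories per dataset (assumed from the
# datasets' public descriptions, not stated in this README).
categories_per_dataset = {"MVTec-AD": 15, "MPDD": 6, "BTAD": 3, "VisA": 12}

# 10k sampled anomaly images are provided for each category.
images_per_category = 10_000

total_images = images_per_category * sum(categories_per_dataset.values())
print(total_images)  # 360000
```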

Train RealNet using 1*RTX3090 GPU:

$ python -m torch.distributed.launch --nproc_per_node=1 train_realnet.py --dataset MVTec-AD --class_name bottle

realnet.yaml contains the configuration options used during training.

More commands can be found in run.sh.
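To train several categories in sequence, the command above can be wrapped in a small driver. This is a sketch under stated assumptions rather than a replacement for run.sh: the helpers `build_train_command` and `train_all` are hypothetical, only the flags shown in this README are used, and any class name other than `bottle` must match a category folder in your dataset.

```python
import subprocess
from typing import List, Sequence


def build_train_command(dataset: str, class_name: str, nproc: int = 1) -> List[str]:
    """Build the argv list for the train_realnet.py command shown in this README."""
    return [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={nproc}",
        "train_realnet.py",
        "--dataset", dataset,
        "--class_name", class_name,
    ]


def train_all(dataset: str, class_names: Sequence[str], dry_run: bool = True) -> List[List[str]]:
    """Print (dry run) or execute one training command per class name."""
    commands = [build_train_command(dataset, name) for name in class_names]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the loop as soon as one training run fails.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Dry run by default: only prints the command for the "bottle" category.
    train_all("MVTec-AD", ["bottle"])
```

Keeping `dry_run=True` as the default lets you inspect the generated commands before committing GPU time.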

Calculate Image AUROC, Pixel AUROC, and PRO, and generate qualitative results for anomaly localization:

$ python evaluation_realnet.py --dataset MVTec-AD --class_name bottle
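For readers unfamiliar with the two AUROC metrics, here is a minimal, dependency-free sketch of how they are commonly computed. It is not the code in evaluation_realnet.py. The `auroc` helper is hypothetical, the toy maps and masks are made up, and taking the maximum of an anomaly map as the image-level score is an assumption (a common convention, not confirmed by this README). PRO is not covered.

```python
from typing import List, Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via the rank-sum (Mann-Whitney U) formulation."""
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    rank_sum_pos = 0.0
    n_pos = 0
    i = 0
    while i < n:
        # Find the run of tied scores starting at i and give each the average rank.
        j = i
        while j < n and pairs[j][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            if pairs[k][1] == 1:
                rank_sum_pos += avg_rank
                n_pos += 1
        i = j
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUROC needs at least one positive and one negative label")
    u = rank_sum_pos - n_pos * (n_pos + 1) / 2.0
    return u / (n_pos * n_neg)


# Toy data: two 2x2 anomaly maps and their ground-truth masks (1 = anomalous pixel).
maps: List[List[List[float]]] = [
    [[0.1, 0.2], [0.1, 0.3]],   # normal image
    [[0.2, 0.9], [0.25, 0.8]],  # anomalous image
]
masks: List[List[List[int]]] = [
    [[0, 0], [0, 0]],
    [[0, 1], [1, 1]],
]

# Image level: an image is anomalous if its mask has any positive pixel;
# its score is the maximum of the anomaly map (assumed convention).
image_labels = [int(any(any(row) for row in m)) for m in masks]
image_scores = [max(max(row) for row in m) for m in maps]
image_auroc = auroc(image_labels, image_scores)

# Pixel level: flatten all maps and masks and score every pixel.
pixel_scores = [v for m in maps for row in m for v in row]
pixel_labels = [v for m in masks for row in m for v in row]
pixel_auroc = auroc(pixel_labels, pixel_scores)

print(f"Image AUROC: {image_auroc:.4f}")
print(f"Pixel AUROC: {pixel_auroc:.4f}")
```

In the toy data one anomalous pixel (0.25) scores below one normal pixel (0.3), so 14 of the 15 positive-negative pixel pairs are ranked correctly and the pixel AUROC is slightly below 1.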

We also provide some generated normal images for each category (obtained by setting the anomaly strength to 0, as described in the paper), which can be downloaded through the following links:

- MVTec-AD [Download]

- BTAD [Download]

- VisA [Download]

Additional files for this repository are available at:

- [Google Drive]

- [Baidu Cloud] (pwd 6789)

Code reference: UniAD and BeatGans.

If this work is helpful to you, please cite it as:

@inproceedings{zhang2024realnet,

title={RealNet: A Feature Selection Network with Realistic Synthetic Anomaly for Anomaly Detection},

author={Ximiao Zhang and Min Xu and Xiuzhuang Zhou},

year={2024},

eprint={2403.05897},

archivePrefix={arXiv},

primaryClass={cs.CV}

}