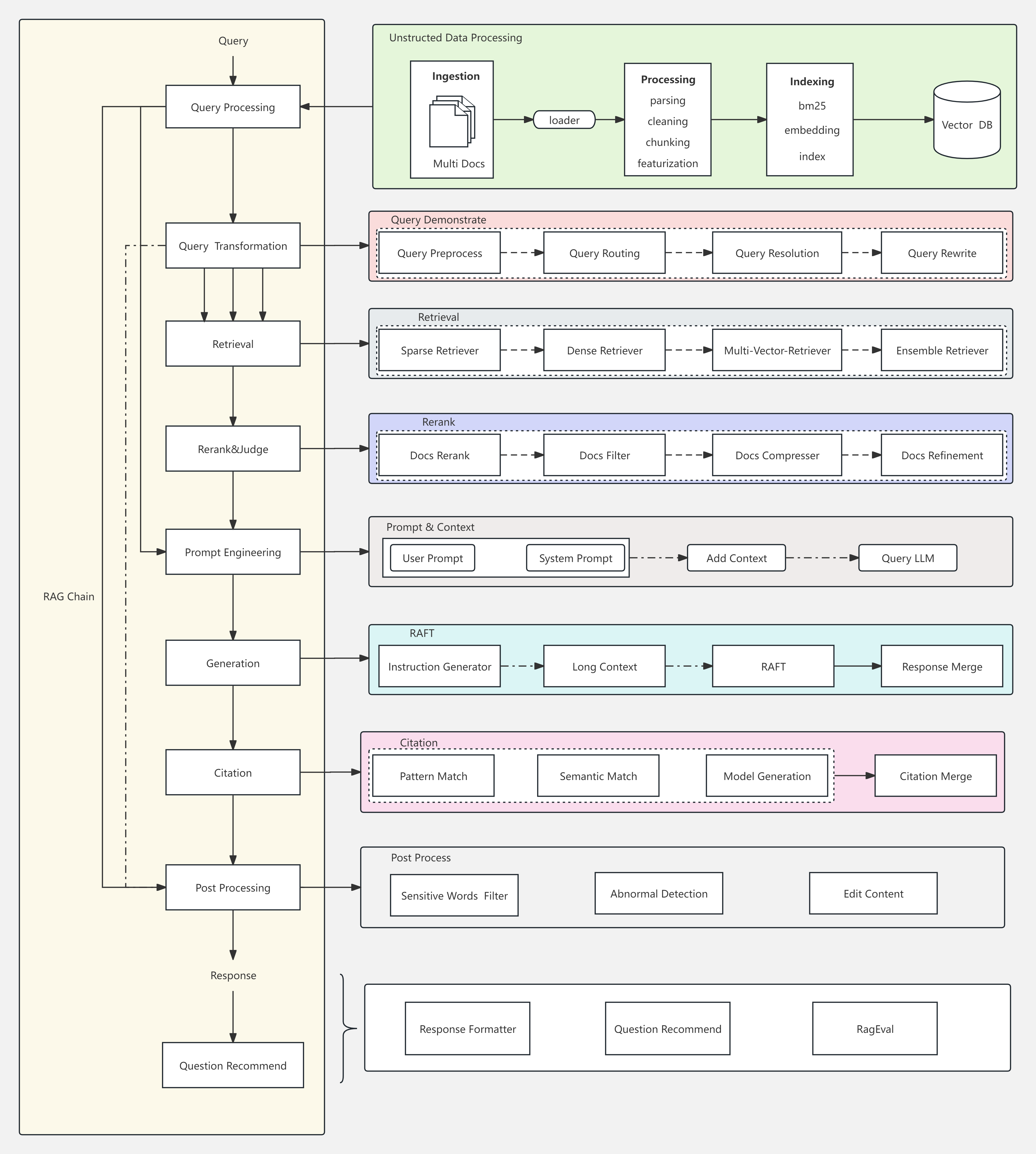

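A configurable, modular RAG framework.

GoMate is a configurable, modular Retrieval-Augmented Generation (RAG) framework. It aims to provide reliable input and trusted output, so that users get high-quality, trustworthy results in retrieval-based question answering.

GoMate's design centers on configurability and modularity: users can adjust and tune individual components to fit the requirements of a given application.

"Reliable input, Trusted output"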

- RAPTOR: an implementation of a recursive tree retriever

Install the dependencies:

    pip install -r requirements.txt

Import the modules and parse the documents into chunks:

    from gomate.modules.document.parset import TextParser
    from gomate.modules.store import VectorStore

    docs = TextParser('./data/docs').get_content(max_token_len=600, cover_content=150)
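The `max_token_len` and `cover_content` arguments suggest fixed-size chunks in which neighbouring chunks share some overlapping text. The sketch below illustrates that idea only and is not GoMate's parser: it counts characters rather than tokens, and `split_with_overlap` is a hypothetical helper introduced for this example.

```python
from typing import List


def split_with_overlap(text: str, max_len: int = 600, overlap: int = 150) -> List[str]:
    """Split text into chunks of at most max_len characters.

    Consecutive chunks share `overlap` characters, so a sentence cut at a
    chunk boundary still appears intact in one of the two chunks.
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must satisfy 0 <= overlap < max_len")

    step = max_len - overlap  # how far the window advances each time
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + max_len])
        if start + max_len >= len(text):
            break  # the last window already reached the end of the text
        start += step
    return chunks


# Example: 1400 characters, 600-character windows, 150 characters of overlap.
sample = "".join(str(i % 10) for i in range(1400))
sample_chunks = split_with_overlap(sample, max_len=600, overlap=150)
print([len(c) for c in sample_chunks])
```

A larger overlap reduces the chance that an answer is split across two chunks, at the cost of storing and embedding more duplicated text.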

    from gomate.modules.generator.llm import GLMChat
    from gomate.modules.retrieval.embedding import BgeEmbedding

    vector = VectorStore(docs)
    embedding = BgeEmbedding("BAAI/bge-large-zh-v1.5")  # create the embedding model
    vector.get_vector(EmbeddingModel=embedding)
    # Save the vectors and document contents under storage/ so the local
    # database can be loaded directly next time.
    vector.persist(path='storage')
    vector.load_vector(path='storage')  # load the local database

    question = '伊朗坠机事故原因是什么?'  # "What caused the plane crash in Iran?"
    contents = vector.query(question, EmbeddingModel=embedding, k=1)
    content = '\n'.join(contents[:5])
    print(contents)
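`vector.query(..., k=1)` returns the `k` stored passages closest to the question. A common way to rank passages is cosine similarity between the question embedding and each document embedding; whether GoMate's `VectorStore` uses exactly this metric is an assumption here. The sketch uses small hand-written vectors in place of real `BgeEmbedding` output, and `cosine_similarity` and `top_k` are hypothetical helpers.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float],
          doc_vecs: Sequence[Sequence[float]],
          passages: Sequence[str],
          k: int = 1) -> List[str]:
    """Return the k passages whose vectors are most similar to query_vec."""
    scored = sorted(
        zip(passages, doc_vecs),
        key=lambda pair: cosine_similarity(query_vec, pair[1]),
        reverse=True,
    )
    return [passage for passage, _ in scored[:k]]


# Toy data: three passages with made-up 3-dimensional "embeddings".
passages = ["passage about aviation", "passage about the economy", "passage about sports"]
doc_vecs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.0, 0.2, 0.9]]
query_vec = [0.2, 0.8, 0.0]

print(top_k(query_vec, doc_vecs, passages, k=1))
```

A linear scan like this is fine for a few thousand passages; larger collections usually call for an approximate nearest-neighbour index.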

    chat = GLMChat(path='THUDM/chatglm3-6b')
    print(chat.chat(question, [], content))

    # Add a new document to the existing store, then query it.
    docs = TextParser.get_content_by_file(file='data/docs/伊朗问题.txt', max_token_len=600, cover_content=150)
    vector.add_documents('storage', docs, embedding)

    question = '如今伊朗人的经济生活状况如何?'  # "How is economic life for Iranians today?"
    contents = vector.query(question, EmbeddingModel=embedding, k=1)
    content = '\n'.join(contents[:5])
    print(contents)
    print(chat.chat(question, [], content))

Building a custom RAG application

    from gomate.modules.document.reader import ReadFiles
    from gomate.modules.generator.llm import GLMChat
    from gomate.modules.retrieval.embedding import BgeEmbedding
    from gomate.modules.store import VectorStore


    class RagApplication:
        def __init__(self, config):
            pass

        def init_vector_store(self):
            pass

        def load_vector_store(self):
            pass

        def add_document(self, file_path):
            pass

        def chat(self, question: str = '', topk: int = 5):
            pass

See rag.py for the module.
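One way the skeleton's methods could fit together is sketched below. It only illustrates the retrieve-then-generate control flow and is not the contents of rag.py: `KeywordStore` and `EchoLLM` are hypothetical in-memory stand-ins for `VectorStore` and `GLMChat`, so the example runs without any models, and `RagSketch` is likewise a made-up name. The `chat(question, history, content)` argument order follows the quickstart calls.

```python
from typing import List


class KeywordStore:
    """Hypothetical stand-in for VectorStore: ranks documents by shared words."""

    def __init__(self) -> None:
        self.docs: List[str] = []

    def add_documents(self, docs: List[str]) -> None:
        self.docs.extend(docs)

    def query(self, question: str, k: int = 1) -> List[str]:
        words = set(question.lower().split())
        ranked = sorted(
            self.docs,
            key=lambda d: len(words & set(d.lower().split())),
            reverse=True,
        )
        return ranked[:k]


class EchoLLM:
    """Hypothetical stand-in for GLMChat: returns the prompt it would answer from."""

    def chat(self, question: str, history: list, content: str) -> str:
        return f"Q: {question}\nContext: {content}"


class RagSketch:
    """Wires a store and a generator together (illustrative only)."""

    def __init__(self, store: KeywordStore, llm: EchoLLM) -> None:
        self.store = store
        self.llm = llm

    def add_document(self, docs: List[str]) -> None:
        self.store.add_documents(docs)

    def chat(self, question: str = '', topk: int = 5) -> str:
        contents = self.store.query(question, k=topk)  # 1. retrieve
        content = '\n'.join(contents)                  # 2. build the context
        return self.llm.chat(question, [], content)    # 3. generate


app = RagSketch(KeywordStore(), EchoLLM())
app.add_document(["the economy grew last year", "the match ended in a draw"])
answer = app.chat("how is the economy", topk=1)
print(answer)
```

Passing the store and the generator into the constructor keeps each component replaceable, which matches the modular intent described at the top of this README.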

Local model paths can be configured:

    class ApplicationConfig:
        # local model files or a Hugging Face remote repository
        llm_model_name = '/data/users/searchgpt/pretrained_models/chatglm3-6b'
        # retrieval model files or a Hugging Face remote repository
        embedding_model_name = '/data/users/searchgpt/pretrained_models/bge-reranker-large'
        vector_store_path = './storage'
        docs_path = './data/docs'

Start the application:

    python app.py