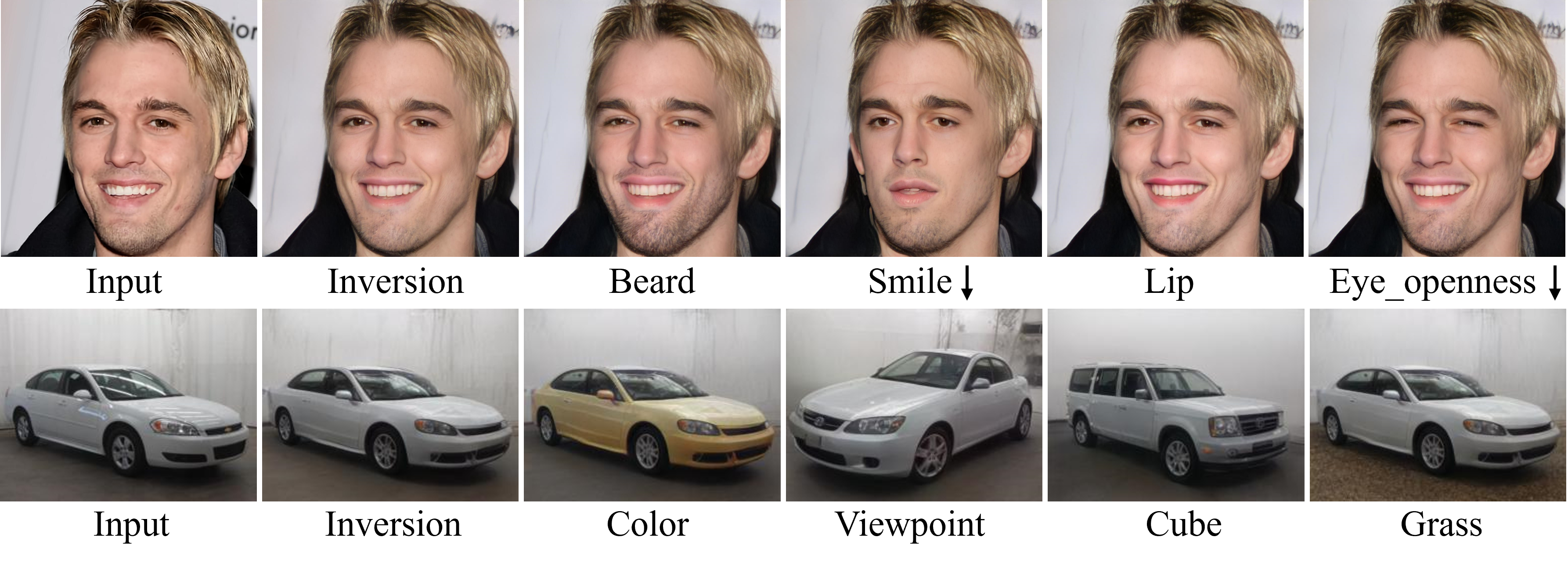

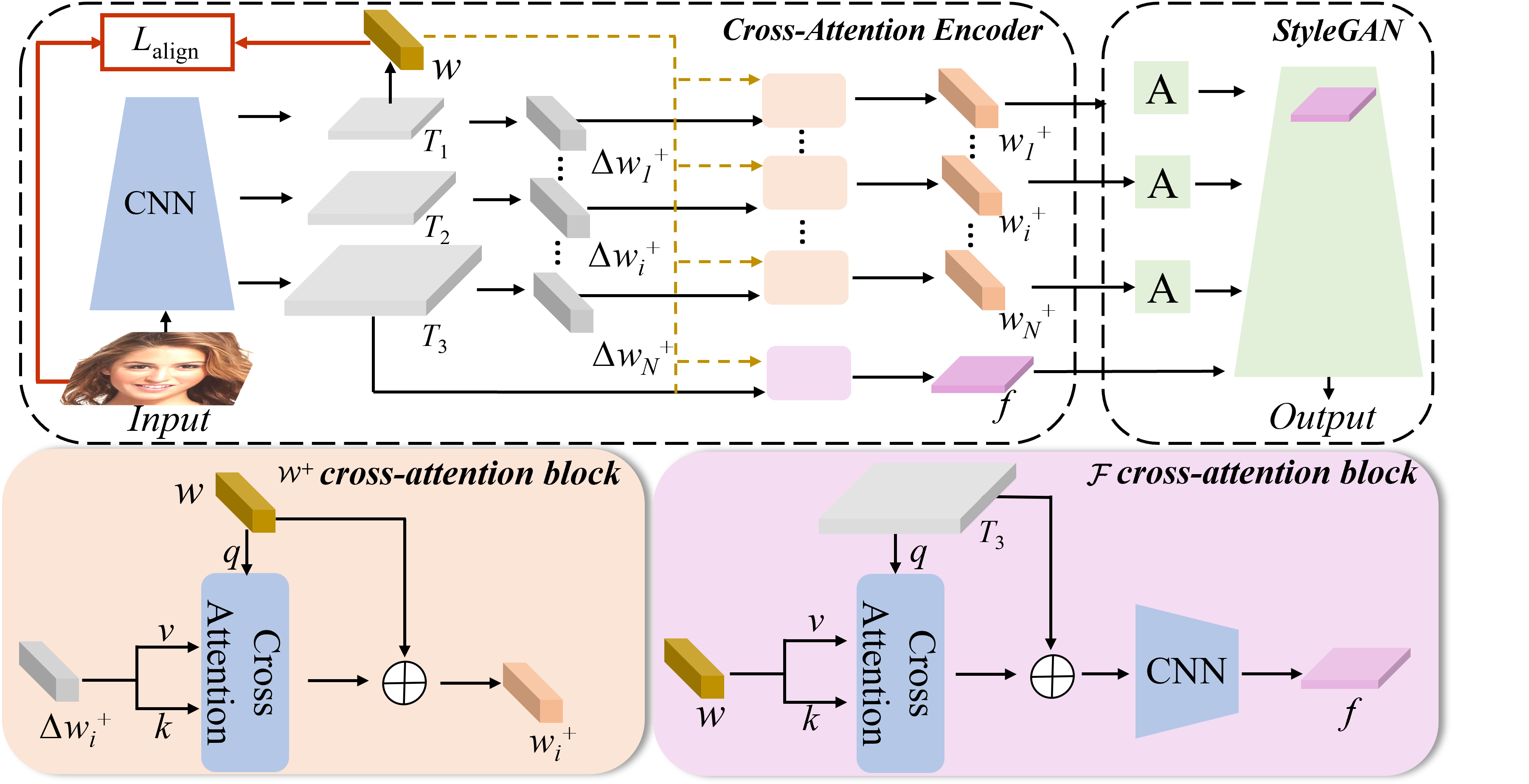

Delving StyleGAN Inversion for Image Editing: A Foundation Latent Space Viewpoint, CVPR2023 (Official PyTorch Implementation)

Hongyu Liu<sup>1</sup>, Yibing Song<sup>2</sup>, Qifeng Chen<sup>1</sup>

<sup>1</sup>HKUST, <sup>2</sup>AI<sup>3</sup> Institute, Fudan University

- Linux or macOS

- NVIDIA GPU + CUDA CuDNN (CPU may be possible with some modifications, but is not inherently supported)

- Python 3

- Clone this repo:

git clone https://github.com/KumapowerLIU/CLCAE.git

cd CLCAE

- Dependencies:

We recommend running this repository using Anaconda. All dependencies for defining the environment are provided in environment/clcae_env.yaml.

Please download the pre-trained models from the following links. Each CLCAE model contains the entire architecture, including the encoder and decoder weights.

| Path | Description |
|---|---|
| FFHQ_Inversion | CLCAE trained with the FFHQ dataset for StyleGAN inversion. |
| Car_Inversion | CLCAE trained with the Car dataset for StyleGAN inversion. |

If you wish to use one of the pretrained models for training or inference, you may do so using the flag --checkpoint_path_af.

In addition, we provide various auxiliary models needed for training your own pSp model from scratch as well as pretrained models needed for computing the ID metrics reported in the paper.

| Path | Description |
|---|---|
| Contrastive model and data for FFHQ | Contrastive model for FFHQ as mentioned in our paper. |
| Contrastive model and data for Car | Contrastive model for Car as mentioned in our paper. |
| FFHQ StyleGAN | StyleGAN model pretrained on FFHQ taken from rosinality with 1024x1024 output resolution. |
| Car StyleGAN | StyleGAN model pretrained on the Car dataset taken from rosinality with 512x512 output resolution. |
| IR-SE50 Model | Pretrained IR-SE50 model taken from TreB1eN for use in our ID loss during pSp training. |
| MoCo ResNet-50 | Pretrained ResNet-50 model trained using MOCOv2 for computing MoCo-based similarity loss on non-facial domains. The model is taken from the official implementation. |
| CurricularFace Backbone | Pretrained CurricularFace model taken from HuangYG123 for use in ID similarity metric computation. |
| MTCNN | Weights for MTCNN model taken from TreB1eN for use in ID similarity metric computation. (Unpack the tar.gz to extract the 3 model weights.) |

By default, we assume that all auxiliary models are downloaded and saved to the directory pretrained_models. However, you may use your own paths by changing the necessary values in configs/paths_config.py.
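
As a rough pre-flight check for the paragraph above, the sketch below reports which auxiliary files are absent from the default directory. The dictionary keys and file names are hypothetical placeholders, not the actual entries of the repository's path configuration; adjust them to match the files you downloaded.

```python
from pathlib import Path

# Default location assumed by this README; change it if you store models elsewhere.
PRETRAINED_DIR = Path("pretrained_models")

# Hypothetical name -> file mapping (illustrative only; the real keys and
# file names are defined in the repository's path configuration).
model_paths = {
    "stylegan_ffhq": PRETRAINED_DIR / "stylegan_ffhq.pt",
    "ir_se50": PRETRAINED_DIR / "ir_se50.pth",
    "moco": PRETRAINED_DIR / "moco_resnet50.pt",
}


def missing_models(paths):
    """Return the sorted names whose files do not exist on disk."""
    return sorted(name for name, path in paths.items() if not Path(path).is_file())


if __name__ == "__main__":
    for name in missing_models(model_paths):
        print(f"missing: {name} -> {model_paths[name]}")
```

Running the check before training makes a wrong or incomplete download visible early, instead of failing partway through model construction.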

- Currently, we provide support for numerous datasets and experiments (inversion and contrastive learning).
  - Refer to configs/paths_config.py to define the necessary data paths and model paths for training and evaluation.
  - Refer to configs/transforms_config.py for the transforms defined for each dataset/experiment.
  - Finally, refer to configs/data_configs.py for the source/target data paths for the train and test sets as well as the transforms.
- If you wish to experiment with your own dataset, you can simply make the necessary adjustments in
  - data_configs.py to define your data paths.
  - transforms_config.py to define your own data transforms.
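
The adjustments described above might look like the following sketch for a custom dataset. The entry name `my_dataset_encode`, the directory paths, the transform name, and the key names are all assumptions modeled on the description above (source/target roots for the train and test sets, plus a transform); check them against the actual contents of configs/data_configs.py before use.

```python
# Hypothetical dataset roots; replace them with your own directories.
dataset_paths = {
    "my_train": "/path/to/my_dataset/train",
    "my_test": "/path/to/my_dataset/test",
}

# Keys each entry is assumed to need: a transform plus source/target roots.
REQUIRED_KEYS = (
    "transforms",
    "train_source_root",
    "train_target_root",
    "test_source_root",
    "test_target_root",
)

DATASETS = {
    "my_dataset_encode": {
        # Placeholder for the transform class you define in the transforms config.
        "transforms": "MyEncodeTransforms",
        # Source and target are assumed identical here, since inversion
        # reconstructs the input image.
        "train_source_root": dataset_paths["my_train"],
        "train_target_root": dataset_paths["my_train"],
        "test_source_root": dataset_paths["my_test"],
        "test_target_root": dataset_paths["my_test"],
    },
}


def missing_keys(entry):
    """Return the required keys that a dataset entry does not define."""
    return [key for key in REQUIRED_KEYS if key not in entry]


if __name__ == "__main__":
    for name, entry in DATASETS.items():
        print(name, "missing keys:", missing_keys(entry))
```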

The main training script can be found in scripts/train.py.

Intermediate training results are saved to opts.exp_dir. This includes checkpoints, train outputs, and test outputs.

Additionally, if you have tensorboard installed, you can visualize tensorboard logs in opts.exp_dir/logs.

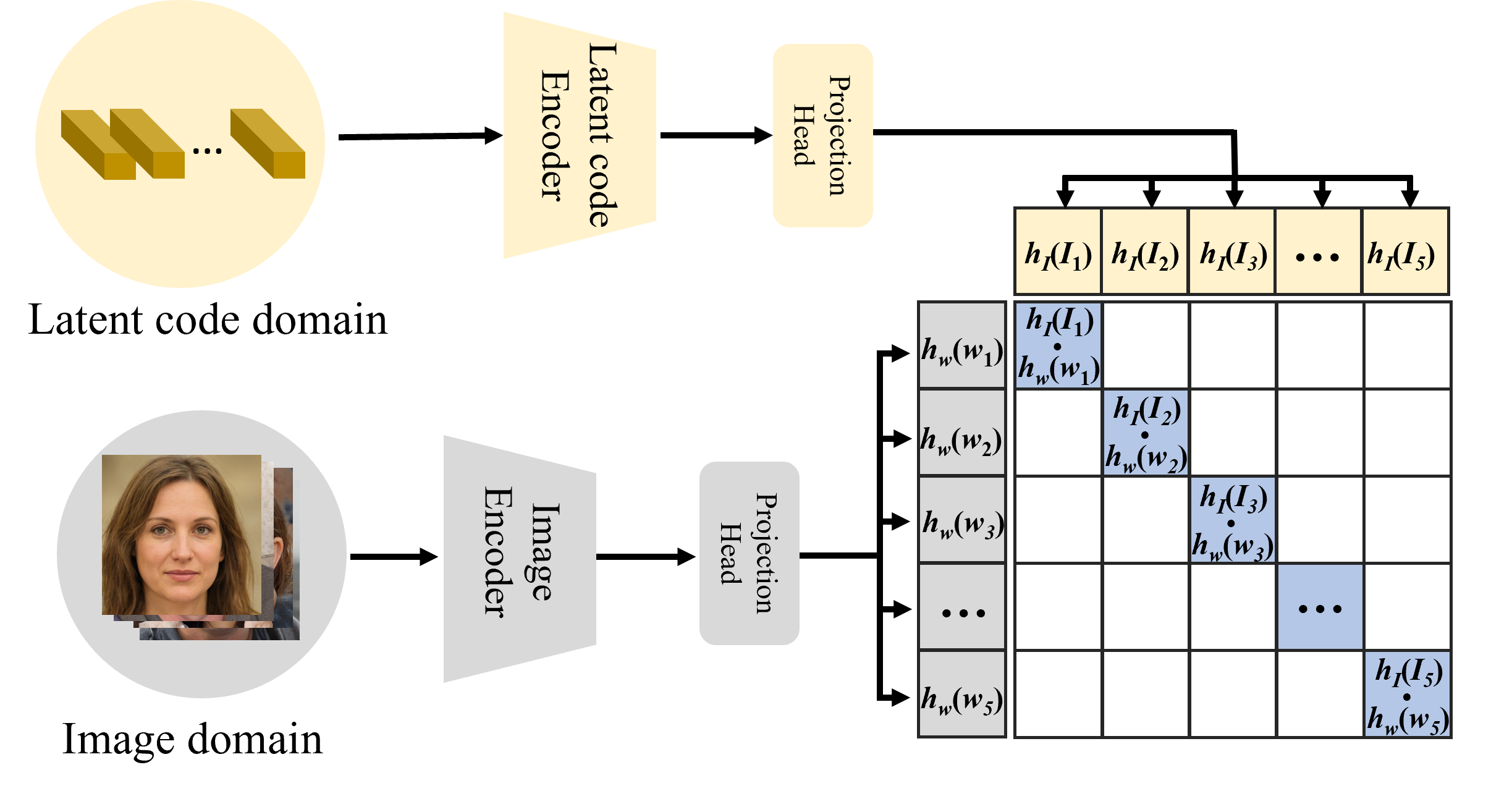

For contrastive learning, you need to generate the latent-image pair data with a pre-trained StyleGAN model, as mentioned in our paper.

For the FFHQ:

python3 -m torch.distributed.launch --nproc_per_node GPU_NUM --use_env \

./scripts/train.py \

--exp_dir ./checkpoints/contrastive \

--use_norm --use_ddp --val_interval 2500 \

--save_interval 5000 --workers 8 --batch_size $batchsize_num --test_batch_size $batchsize_num \

--dataset_type ffhq_encode_contrastive --train_contrastive True

For the Car:

python3 -m torch.distributed.launch --nproc_per_node GPU_NUM --use_env \

./scripts/train.py \

--exp_dir ./checkpoints/contrastive \

--use_norm --use_ddp --val_interval 2500 \

--save_interval 5000 --workers 8 --batch_size $batchsize_num --test_batch_size $batchsize_num \

--dataset_type car_encode_contrastive --train_contrastive True
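
If you prefer launching from Python, the two commands above can be assembled programmatically. This is a minimal sketch: the helper name `build_contrastive_cmd` is hypothetical, the GPU count and batch size in the usage lines are arbitrary example values, and the helper only reuses the flags shown above while filling in the GPU_NUM and $batchsize_num placeholders.

```python
import shlex


def build_contrastive_cmd(dataset_type, gpu_num, batch_size,
                          exp_dir="./checkpoints/contrastive"):
    """Assemble the contrastive-training launch command as an argument list."""
    return [
        "python3", "-m", "torch.distributed.launch",
        "--nproc_per_node", str(gpu_num), "--use_env",
        "./scripts/train.py",
        "--exp_dir", exp_dir,
        "--use_norm", "--use_ddp",
        "--val_interval", "2500",
        "--save_interval", "5000",
        "--workers", "8",
        # The same value is used for training and testing, as in the commands above.
        "--batch_size", str(batch_size),
        "--test_batch_size", str(batch_size),
        "--dataset_type", dataset_type,
        "--train_contrastive", "True",
    ]


if __name__ == "__main__":
    cmd = build_contrastive_cmd("ffhq_encode_contrastive", gpu_num=1, batch_size=8)
    # Print the command for inspection; to launch it, pass the list to
    # subprocess.run(cmd, check=True) from the repository root.
    print(shlex.join(cmd))
```

Building the command as a list avoids the shell line-continuation mistakes that are easy to make when editing long multi-line commands by hand.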

For inversion training on FFHQ:

python3 -m torch.distributed.launch --nproc_per_node GPU_NUM --use_env \

./scripts/train.py \

--exp_dir ./checkpoints/ffhq_inversion \

--use_norm --use_ddp --val_interval 2500 --save_interval 5000 --workers 8 --batch_size 2 --test_batch_size 2 \

--lpips_lambda=0.2 --l2_lambda=1 --id_lambda=0.1 \

--feature_matching_lambda=0.01 --contrastive_lambda=0.1 --learn_in_w --output_size 1024 \

--dataset_type ffhq_encode_inversion --train_inversion True

For the Car:

python3 -m torch.distributed.launch --nproc_per_node GPU_NUM --use_env \

./scripts/train.py \

--exp_dir ./checkpoints/car_inversion \

--use_norm --use_ddp --val_interval 2500 --save_interval 5000 --workers 8 --batch_size 2 --test_batch_size 2 \

--lpips_lambda=0.2 --l2_lambda=1 --id_lambda=0.1 \

--feature_matching_lambda=0.01 --contrastive_lambda=0.1 --learn_in_w --output_size 512 \

--dataset_type car_encode_inversion --train_inversion True --contrastive_model_image contrastive_car_image \

--contrastive_model_latent contrastive_car_latent
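
The `*_lambda` flags in the inversion commands above weight the individual loss terms. As an illustration only, the sketch below assumes the total objective is a plain weighted sum of those terms, which is the usual convention in pSp-style encoders; the actual combination is defined in the training code and may differ. The per-term loss values in the usage lines are made-up numbers.

```python
# Weights taken from the inversion commands above.
LAMBDAS = {
    "l2": 1.0,
    "lpips": 0.2,
    "id": 0.1,
    "feature_matching": 0.01,
    "contrastive": 0.1,
}


def total_loss(terms, lambdas=LAMBDAS):
    """Weighted sum of loss terms; terms absent from `terms` contribute zero."""
    return sum(weight * terms.get(name, 0.0) for name, weight in lambdas.items())


if __name__ == "__main__":
    # Made-up per-term values, only to show how the weights scale each term.
    example_terms = {
        "l2": 0.05,
        "lpips": 0.30,
        "id": 0.40,
        "feature_matching": 1.5,
        "contrastive": 2.0,
    }
    print(f"total loss: {total_loss(example_terms):.4f}")
```

Under this assumption, a term with a small weight, such as feature matching at 0.01, influences the total only when its raw value is large relative to the others.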

Having trained your model, you can use scripts/inference_inversion.py to apply the model on a set of images.

For example,

python3 scripts/inference_inversion.py \

--exp_dir=./results \

--checkpoint_path_af=/path/to/pretrained_model \

--data_path=/path/to/test_images_folder \

--test_batch_size=1 \

--test_workers=1 \

--couple_outputs \

--resize_outputs

For image editing, see scripts/inference_edit.py and scripts/inference_edit_not_interface.py.

This code borrows heavily from pSp, e4e, and FeatureStyleEncoder.

If you find our work useful for your research, please consider citing the following paper :)

@InProceedings{Liu_2023_CVPR,

author = {Liu, Hongyu and Song, Yibing and Chen, Qifeng},

title = {Delving StyleGAN Inversion for Image Editing: A Foundation Latent Space Viewpoint},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

pages = {10072-10082}

}

The code and the pretrained models in this repository are under the MIT license as specified by the LICENSE file.