Dense object grasp label annotation based on graspnetAPI. The labels use the same annotation format as the GraspNet1-billion dataset.

- graspnetAPI

Get the code.

git clone https://github.com/rhett-chen/grasp-auto-annotation.git

cd grasp-auto-annotation

Install packages via pip.

pip install -r requirements.txt

Compile the sdf generator (only needed if you have to generate object .sdf files).

cd sdf-gen

mkdir build && cd build && cmake ..

make

Organize your data in the GraspNet1-billion format. Taking the OCRTOC dataset as an example:

    | -- ocrtoc
        | -- models
            | -- objectname1
                | -- textured.obj
                | -- textured.ply
                | -- textured.sdf
            | -- objectname2
            | -- ... ...
        | -- grasp_labels (to be generated)
            | -- objectname1_labels.npz
        | -- scenes

If an object model does not contain a .sdf file, generate it from the .obj file with the generate_sdf() or batch_generate_sdf() function in auto_annotation/annotation_utils.py.
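Before calling the sdf functions, it can help to know which objects actually need a .sdf file. The following is a minimal sketch, assuming the `models/<objectname>/textured.*` layout from the example above. The helper name `find_models_missing_sdf` is hypothetical and not part of this repository; it only inspects the file system and does not call generate_sdf() itself.

```python
from pathlib import Path


def find_models_missing_sdf(models_dir):
    """Return names of object folders that have textured.obj but no textured.sdf.

    Hypothetical helper for illustration; it is not part of annotation_utils.py.
    """
    missing = []
    for obj_dir in sorted(Path(models_dir).iterdir()):
        if not obj_dir.is_dir():
            continue  # skip stray files next to the object folders
        has_obj = (obj_dir / "textured.obj").is_file()
        has_sdf = (obj_dir / "textured.sdf").is_file()
        if has_obj and not has_sdf:
            missing.append(obj_dir.name)
    return missing


if __name__ == "__main__":
    # "ocrtoc/models" follows the example layout; adjust it to your dataset root.
    models_dir = Path("ocrtoc/models")
    if models_dir.is_dir():
        for name in find_models_missing_sdf(models_dir):
            print(f"{name}: textured.sdf missing, generate it from textured.obj")
```

The returned names can then be passed, one by one or as a batch, to whichever of the two sdf functions fits your workflow.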

Set the relevant arguments in auto_annotation/options.py. The default values are the recommended ones.

Generate object-level grasp pose labels.

cd auto_annotation

python main.py

Load, save, and visualize object grasp poses. You also need to set the relevant arguments in options.py. The loaded grasp poses use the same format as GraspNetAPI: an array of shape (num_grasp, 17).
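To make the 17-value layout concrete, here is a minimal sketch that unpacks a single grasp row. It assumes the column order documented for graspnetAPI's GraspGroup array (score, width, height, depth, a row-major 3x3 rotation matrix, translation, object id); verify this against your installed graspnetAPI version. The `Grasp` dataclass and `unpack_grasp` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Grasp:
    score: float
    width: float
    height: float
    depth: float
    rotation: List[List[float]]  # 3x3 rotation matrix, row-major
    translation: List[float]     # x, y, z of the grasp center
    object_id: int


def unpack_grasp(row: Sequence[float]) -> Grasp:
    """Split one 17-value grasp row into named fields (assumed column order)."""
    if len(row) != 17:
        raise ValueError(f"expected 17 values per grasp, got {len(row)}")
    values = [float(v) for v in row]
    return Grasp(
        score=values[0],
        width=values[1],
        height=values[2],
        depth=values[3],
        rotation=[values[4:7], values[7:10], values[10:13]],
        translation=values[13:16],
        object_id=int(values[16]),
    )


# Made-up example row: identity rotation, grasp center at (0.1, 0.2, 0.3).
example_row = [0.9, 0.08, 0.02, 0.03,
               1, 0, 0, 0, 1, 0, 0, 0, 1,
               0.1, 0.2, 0.3, 5]
grasp = unpack_grasp(example_row)
print(grasp.score, grasp.translation, grasp.object_id)
```

The function accepts any sequence of 17 numbers, so a single row of a loaded NumPy array can be passed in directly.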

cd auto_annotation

python load_grasp.py

Generate pickle object dexnet model for fast loading.

cd auto_annotation

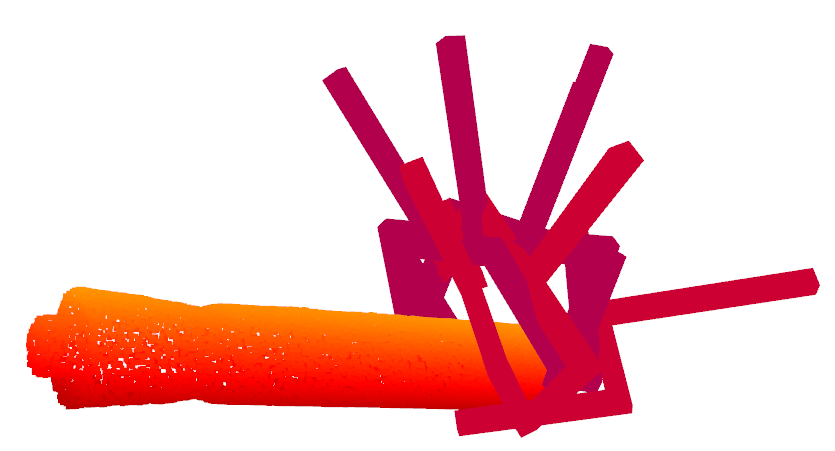

python gen_pickle_dexmodel.pyWe show the grasp label annotation results of large_marker object in OCRTOC dataset. When annotating this object, we set num_views=100, num_angles=3, num_depth=3, and we randomly select only one grasp point for annotaion in the example.
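For a sense of scale, the settings quoted for the large_marker example can be turned into a candidate count. This is a small sketch under the assumption that, as in GraspNet-style labels, every combination of view, in-plane angle, and depth is evaluated for each grasp point; the function name `candidates_per_point` is hypothetical.

```python
def candidates_per_point(num_views, num_angles, num_depth):
    """Number of grasp candidates per grasp point, assuming a full
    view x angle x depth grid is evaluated (an assumption, see above)."""
    return num_views * num_angles * num_depth


# Values taken from the large_marker example.
settings = {"num_views": 100, "num_angles": 3, "num_depth": 3}
print(candidates_per_point(**settings))  # 900
```

With a single grasp point selected, the example therefore covers at most 900 candidate grasps under this assumption; annotating more points scales the count linearly.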

The grasp pose annotation process is based on GraspNet1-billion, and many function implementations are based on GraspNetAPI.