conda create -n torch python=3.8

conda activate torch

conda install pytorch==1.6.0 torchvision==0.7.0 cudatoolkit=10.1 -c pytorch

pip install pytorch-lightning==1.0.2 opencv-python matplotlib joblib scikit-image torchsummary webdataset albumentations more_itertools

Setup |

||

Train |

make train CODE=scripts/animegan_pretrain.py CFG=configs/animegan_pretrain.yaml

make train CODE=scripts/animeganv2.py CFG=configs/animeganv2.yaml

make tensorboard LOGDIR=logs/animeganv2/

|

Test |

make infer CODE=scripts/animeganv2.py \
    CKPT=logs/animeganv2/version_0/checkpoints/epoch=17.ckpt \
    EXTRA=image_path:asset/animegan_test2.jpg # (1)

make infer CODE=scripts/animeganv2.py \
    CKPT=logs/animeganv2/version_0/checkpoints/epoch=17.ckpt \
    EXTRA=help # (2)

|

|
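The `CKPT` values in the commands above follow the layout `logs/<run>/version_<n>/checkpoints/epoch=<e>.ckpt`. As a minimal sketch, assuming that layout holds for your runs, the helper below picks the checkpoint with the highest version and epoch. The function name `latest_checkpoint` is hypothetical and not part of this repository.

```python
import re
from pathlib import Path
from typing import Optional

# Assumed layout, taken from the CKPT paths shown in this README:
#   <log_dir>/version_<n>/checkpoints/epoch=<e>.ckpt
CKPT_PATTERN = re.compile(r"version_(\d+)/checkpoints/epoch=(\d+)\.ckpt$")


def latest_checkpoint(log_dir: str) -> Optional[Path]:
    """Return the checkpoint with the highest (version, epoch), or None."""
    best_key, best_path = None, None
    for path in Path(log_dir).glob("version_*/checkpoints/epoch=*.ckpt"):
        match = CKPT_PATTERN.search(path.as_posix())
        if match is None:
            continue  # skip files that do not match the assumed naming
        key = (int(match.group(1)), int(match.group(2)))
        if best_key is None or key > best_key:
            best_key, best_path = key, path
    return best_path


if __name__ == "__main__":
    # Hypothetical usage: print a path that can be passed as CKPT=...
    print(latest_checkpoint("logs/animeganv2"))
```

Versions and epochs are compared as integers, so `epoch=17` ranks above `epoch=4`, which a plain string sort would get wrong.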

Result |

||


Setup |

||

Train |

make train CODE=scripts/whiteboxgan_pretrain.py CFG=configs/whitebox_pretrain.yaml

make train CODE=scripts/whiteboxgan.py CFG=configs/whitebox.yaml

make tensorboard LOGDIR=logs/whitebox

|

Test |

make infer CODE=scripts/whiteboxgan.py \
    CKPT=logs/whitebox/version_0/checkpoints/epoch=4.ckpt \
    EXTRA=image_path:asset/whitebox_test.jpg # (1)

make infer CODE=scripts/whiteboxgan.py \
    CKPT=logs/whitebox/version_0/checkpoints/epoch=4.ckpt \
    EXTRA=image_path:tests/test.flv,device:cuda,batch_size:4 # (2)

# ffmpeg -i xx.mp4 -vcodec libx265 -crf 28 xxx.mp4

make infer CODE=scripts/whiteboxgan.py \
    CKPT=logs/whitebox/version_0/checkpoints/epoch=4.ckpt \
    EXTRA=help # (3)

|

|
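The `EXTRA` argument in the commands above packs options as comma-separated `key:value` pairs, for example `image_path:tests/test.flv,device:cuda,batch_size:4`, or a bare word such as `help`. The sketch below only illustrates how a string of that shape could be split into a dictionary. It is not the repository's own parser, and the function name `parse_extra` is hypothetical.

```python
from typing import Dict


def parse_extra(extra: str) -> Dict[str, str]:
    """Split 'key:value,key:value' into a dict of strings.

    A bare token without ':' (such as 'help') maps to an empty string.
    This convention is an assumption made for illustration only.
    """
    options: Dict[str, str] = {}
    for item in extra.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate trailing or doubled commas
        # Split on the first ':' only, so values may themselves contain ':'.
        key, _, value = item.partition(":")
        options[key.strip()] = value.strip()
    return options


if __name__ == "__main__":
    print(parse_extra("image_path:tests/test.flv,device:cuda,batch_size:4"))
```

All values stay strings here; converting `batch_size` to an integer would be a job for whatever code consumes the options.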

Result |

||


Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation (minivision)

Setup |

||

Train |

make train CODE=scripts/uagtit.py CFG=configs/uagtit.yaml

make tensorboard LOGDIR=logs/uagtit

|

Test |

python tools/face_crop_and_mask.py \
    --data_path test/model_image \
    --save_path test/model_image_faces \
    --use_face_crop True \
    --use_face_algin False \
    --face_crop_ratio 1.3

make infer CODE=scripts/uagtit.py \
    CKPT=logs/uagtit/version_13/checkpoints/epoch=15.ckpt \
    EXTRA=image_path:asset/uagtit_test.png

|

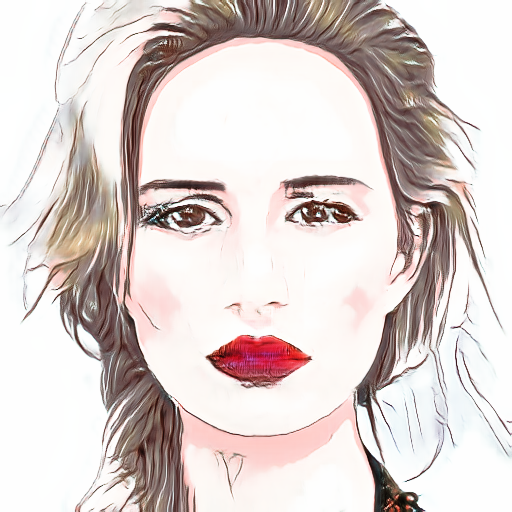

Result |

||


| Path | Description |
| :--- | :--- |
| AnimeStylized | Repository root folder |
| ├ asset | Folder containing README image assets |
| ├ configs | Folder containing configs that define model/data parameters and training hyperparameters |
| ├ datamodules | Folder with various dataset objects and transforms |
| ├ losses | Folder containing loss functions for training; only widely used, general-purpose losses are added here |
| ├ models | Folder containing all the models and training objects |
| ├ optimizers | Folder with commonly used optimizers |
| ├ scripts | Folder with running scripts for training and inference |
| ├ utils | Folder with various utility functions |

- Add a custom `LightningDataModule` object as `xxxds.py` in the `datamodules` dir.
- Add a custom `Module` object (the model architecture) as `xxxnet.py` in the `networks` dir.
- Add a custom `LightningModule` training script as `xxx.py` in the `scripts` dir.
- Add a config file in the `configs` dir; its parameters follow your custom `LightningModule` and `LightningDataModule`.
- Train your algorithm.
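To make the naming scheme in these steps concrete, here is a minimal sketch that maps an algorithm name to the files the steps ask for and to the matching `make train` command. The helper names `plugin_files` and `train_command` are hypothetical, and the config file name `<name>.yaml` is an assumption based on the `configs/*.yaml` files used elsewhere in this README.

```python
from pathlib import Path
from typing import Dict


def plugin_files(name: str, root: str = ".") -> Dict[str, Path]:
    """Return the files to create for a new algorithm called `name`."""
    base = Path(root)
    return {
        "datamodule": base / "datamodules" / f"{name}ds.py",  # LightningDataModule
        "network": base / "networks" / f"{name}net.py",       # Module architecture
        "script": base / "scripts" / f"{name}.py",            # training script
        "config": base / "configs" / f"{name}.yaml",          # assumed config name
    }


def train_command(name: str) -> str:
    """Build the train command in the form used throughout this README."""
    files = plugin_files(name)
    return (
        f"make train CODE={files['script'].as_posix()} "
        f"CFG={files['config'].as_posix()}"
    )


if __name__ == "__main__":
    # "mygan" is a placeholder algorithm name.
    for role, path in plugin_files("mygan").items():
        print(f"{role:<10} {path.as_posix()}")
    print(train_command("mygan"))
```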