📔 Code for Paper: Exploring Effective Priors and Efficient Models for Weakly-Supervised Change Detection [arXiv]

| ⚡ | Higher-performing TransWCD baselines have been released, with F1 score gains of +2.47 on LEVIR-CD and +5.72 on DSIFN-CD over the results reported in our paper. |
|---|---|

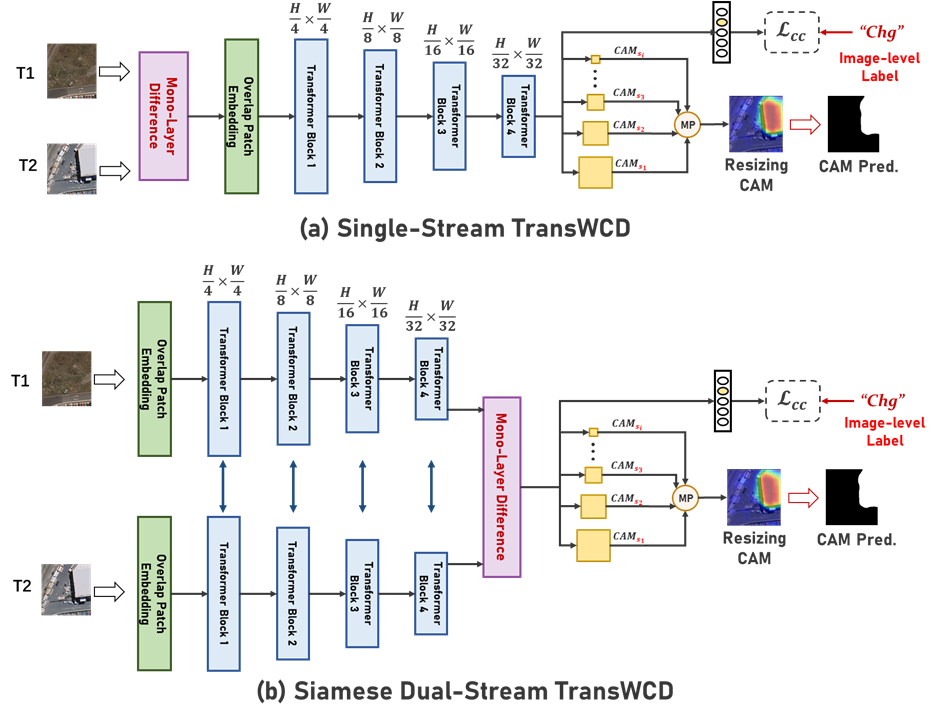

Weakly-supervised change detection (WSCD) aims to detect pixel-level changes with only image-level (i.e., scene-level) annotations. We develop TransWCD, a simple yet powerful transformer-based model, showcasing the potential of weakly-supervised learning in change detection.

You can download WHU-CD, DSIFN-CD, LEVIR-CD, and other CD datasets, then use our `data_and_label_processing` to convert these raw change detection datasets into cropped weakly-supervised change detection datasets.

Or use the processed weakly-supervised datasets from here. Please cite their papers and ours.

WSCD dataset with image-level labels:

    ├─A
    ├─B
    ├─label
    ├─imagelevel_labels.npy
    └─list

Download the pre-trained weights from SegFormer and move them to `transwcd/pretrained/`.

    conda create --name transwcd python=3.6
    conda activate transwcd
    pip install -r requirments.txt

    # train
    python train_transwcd.py

You can modify the corresponding implementation settings `WHU.yaml`, `LEVIR.yaml`, and `DSIFN.yaml` in `train_transwcd.py` for different datasets.

    # test
    python test.py

Please remember to modify the corresponding configurations in `test.py`, and the visual results can be found at `transwcd/results/`.
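Before training, it can help to confirm that a dataset folder contains the entries named in the WSCD layout listed above. The following is a minimal sketch and not part of the repository: the function names `missing_entries` and `check_layout` are hypothetical, and the check only tests that each entry exists, since whether `list` and the other entries are files or folders is an assumption this sketch avoids making.

```python
from pathlib import Path

# Entries named in the WSCD dataset layout above. Only presence is checked;
# no assumption is made about which entries are files and which are folders.
EXPECTED_ENTRIES = ("A", "B", "label", "imagelevel_labels.npy", "list")


def missing_entries(dataset_root):
    """Return the expected entries that are absent from dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_ENTRIES if not (root / name).exists()]


def check_layout(dataset_root):
    """Print a short report and return True when nothing is missing."""
    missing = missing_entries(dataset_root)
    if missing:
        print(f"{dataset_root}: missing {', '.join(missing)}")
        return False
    print(f"{dataset_root}: layout looks complete")
    return True
```

For example, `check_layout("path/to/WHU-CD")` (a placeholder path) reports any entry that still needs to be generated or downloaded.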

| TransWCD | WHU-CD | LEVIR-CD | DSIFN-CD |
|---|---|---|---|
| Single-Stream | 67.81 / Best model | 51.06 / Best model | 57.28 / Best model |
| Dual-Stream | 68.73 / Best model | 62.55 / Best model | 59.13 / Best model |

*Average F1 score / Best model

On both the WHU-CD and LEVIR-CD datasets, the test performance closely matches the validation performance, differing by less than 3% in F1 score.
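For readers less familiar with the metric, the F1 scores reported above follow the standard definition, the harmonic mean of precision and recall. The sketch below computes it from pixel counts for the change class. It is an illustration only: the repository's own evaluation code is not shown here, the function name `f1_score` and the example counts are hypothetical, and returning 0.0 when there are no positive pixels at all is an assumed convention.

```python
def f1_score(tp, fp, fn):
    """F1 from true-positive, false-positive, and false-negative pixel counts.

    Equivalent to 2 * precision * recall / (precision + recall).
    Returns 0.0 when all three counts are zero (assumed convention).
    """
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical counts, not taken from any dataset in this repository.
example = f1_score(tp=80, fp=20, fn=20)  # 160 / 200 = 0.8
```

Multiplying the result by 100 gives a value on the same scale as the table.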

If it's helpful to your research, please kindly cite. Here is an example BibTeX entry:

    @article{zhao2023exploring,
      title={Exploring Effective Priors and Efficient Models for Weakly-Supervised Change Detection},
      author={Zhao, Zhenghui and Ru, Lixiang and Wu, Chen},
      journal={arXiv preprint arXiv:2307.10853},
      year={2023}
    }

Thanks to these brilliant works BGMix, ChangeFormer, and SegFormer!