This is the official implementation of "Is Synthetic Data From Diffusion Models Ready for Knowledge Distillation?"

In this paper, we extensively study whether and how synthetic images produced from state-of-the-art diffusion models can be used for knowledge distillation without access to real images, and obtain three key conclusions: (1) synthetic data from diffusion models can easily lead to state-of-the-art performance among existing synthesis-based distillation methods, (2) low-fidelity synthetic images are better teaching materials, and (3) relatively weak classifiers are better teachers.

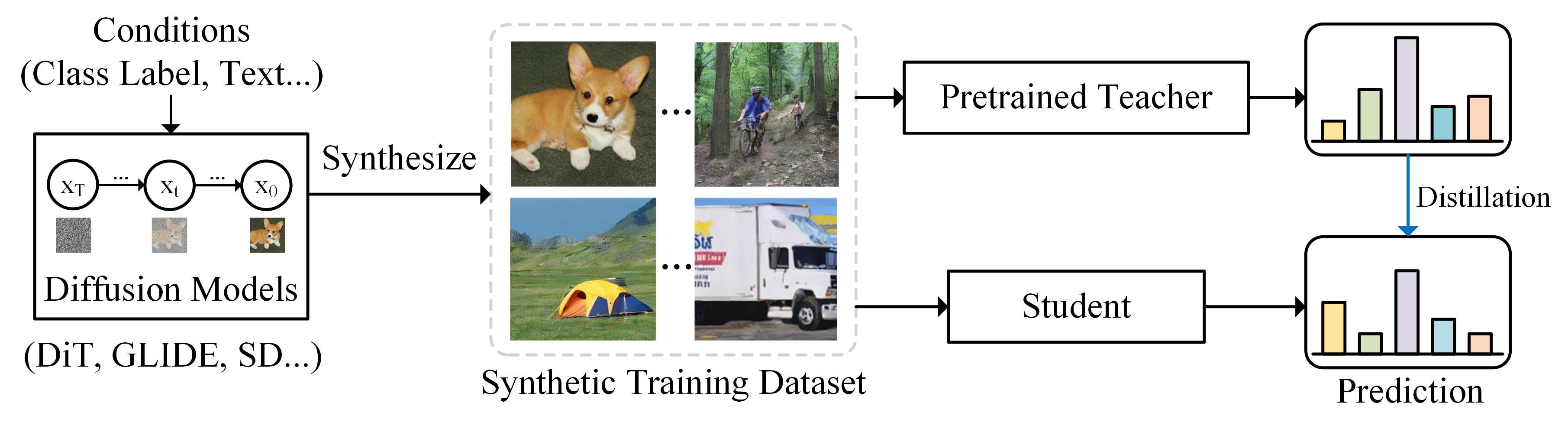

We propose to use the diffusion model to synthesize images given the target label space when the real dataset is not available. The student model is optimized to minimize the prediction discrepancy between itself and the teacher model on the synthetic dataset.
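To make "prediction discrepancy" concrete, the sketch below computes one common choice of distillation objective: the KL divergence between temperature-softened teacher and student predictions. It is framework-free and purely illustrative. The function names, the temperature value, and the choice of KL divergence are assumptions for this example, not necessarily the exact loss used in this repository.

```python
import math

def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of `logits` softened by `temperature`."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum_exp for z in scaled]

def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened predictions, scaled by temperature^2.

    Assumption: the standard soft-label distillation loss; the temperature
    default is a hypothetical value chosen for illustration.
    """
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    kl = sum(
        math.exp(lt) * (lt - ls)
        for lt, ls in zip(log_p_teacher, log_p_student)
    )
    return kl * temperature ** 2

# Logits for one synthetic image over three classes (made-up numbers).
teacher = [2.0, 0.5, -1.0]
loss_same = kd_loss(teacher, teacher)          # student matches the teacher
loss_diff = kd_loss([0.0, 0.0, 0.0], teacher)  # student predicts uniformly
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so no ground-truth labels are needed for the synthetic images.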

On CIFAR-100:

| Method | Syn Method | T:RN-34 S:RN-18 | T:VGG-11 S:RN-18 | T:WRN-40-2 S:WRN-16-1 | T:WRN-40-2 S:WRN-40-1 | T:WRN-40-2 S:WRN-16-2 |
|---|---|---|---|---|---|---|
| Teacher | - | 78.05 | 71.32 | 75.83 | 75.83 | 75.83 |
| Student | - | 77.10 | 77.10 | 65.31 | 72.19 | 73.56 |
| KD | - | 77.87 | 75.07 | 64.06 | 68.58 | 70.79 |
| DeepInv | Inversion | 61.32 | 54.13 | 53.77 | 61.33 | 61.34 |
| DAFL | Inversion | 74.47 | 54.16 | 20.88 | 42.83 | 43.70 |
| DFQ | Inversion | 77.01 | 66.21 | 51.27 | 54.43 | 64.79 |
| FastDFKD | Inversion | 74.34 | 67.44 | 54.02 | 63.91 | 65.12 |
| One-Image | Augmentation | 74.56 | 68.51 | 34.62 | 52.39 | 54.71 |
| DM-KD (Ours) | Diffusion | 76.58 | 70.83 | 56.29 | 65.01 | 66.89 |

On ImageNet-1K:

| Method | Syn Method | Data Amount | Epoch | T:RN-50/18 S:RN-50 | T:RN-50/18 S:RN-18 |
|---|---|---|---|---|---|
| Place365+KD | - | 1.8M | 200 | 55.74 | 45.53 |
| BigGAN | GAN | 215K | 90 | 64.00 | - |
| DeepInv | Inversion | 140K | 90 | 68.00 | - |
| FastDFKD | Inversion | 140K | 200 | 68.61 | 53.45 |
| One-Image | Augmentation | 1.28M | 200 | 66.20 | - |
| DM-KD (Ours) | Diffusion | 140K | 200 | 66.74 | 60.10 |
| DM-KD (Ours) | Diffusion | 200K | 200 | 68.63 | 61.61 |
| DM-KD (Ours) | Diffusion | 1.28M | 200 | 72.43 | 68.25 |

On other datasets:

| Datasets | Categories (#Classes) | Teacher T:RN-18 | One-Image S:RN-50 | Ours S:RN-50 |
|---|---|---|---|---|
| ImageNet-100 | Objects (100) | 89.6 | 84.4 | 85.9 |
| Flowers-102 | Flower types (102) | 87.9 | 81.5 | 85.4 |

- Synthesize data using diffusion models.

In this paper, we use DiT as our default image generator. There are two ways to create synthetic data for distillation.

- You can synthesize your own data with the official DiT implementation, using the DiT-XL/2 model at an image resolution of 256×256.

After the synthesis is complete, put the synthesized data into the syn_dataset folder.

- We provide offline synthesized datasets for experiments.

| Synthetic Dataset | Data Amount | Size | Download |
|---|---|---|---|
| Low Fidelity (s=2, T=100) | 200K | ~20GB | [Baidu Yun] |
| High Fidelity (s=4, T=250) | 200K | ~20GB | [Baidu Yun] |

Download the synthesized dataset and put the dataset into the syn_dataset folder. Then unzip the dataset in the current folder.
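The two settings in the table differ only in `s` and `T`. Assuming `s` is the classifier-free guidance scale and `T` the number of sampling steps (the usual meaning in diffusion samplers such as DiT; this README does not define them), the sketch below shows how a guidance scale typically enters each denoising step. The function and dictionary names are hypothetical and the noise values are made up.

```python
# Settings copied from the table above; the key names are illustrative.
FIDELITY_PRESETS = {
    "low_fidelity": {"s": 2, "T": 100},
    "high_fidelity": {"s": 4, "T": 250},
}

def guided_noise(eps_uncond, eps_cond, s):
    """Classifier-free guidance on per-element noise predictions.

    Starts from the unconditional prediction and moves towards the
    class-conditional one by a factor `s`; s = 1 returns eps_cond unchanged.
    """
    return [u + s * (c - u) for u, c in zip(eps_uncond, eps_cond)]

# Toy noise predictions for a two-element latent.
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 3.0]
low = guided_noise(eps_uncond, eps_cond, FIDELITY_PRESETS["low_fidelity"]["s"])
high = guided_noise(eps_uncond, eps_cond, FIDELITY_PRESETS["high_fidelity"]["s"])
```

Under this reading, a larger `s` pushes samples harder towards the class condition, and a larger `T` spends more denoising steps per image, which matches the table's low-fidelity versus high-fidelity labels.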

- Download the true ImageNet validation dataset and put it into the true_dataset folder. Then unzip the dataset in the current folder. You can download the true ImageNet-1K dataset using our links ([Baidu Yun]) or from the official website.

- Download the pretrained teacher models and put them into the save/models folder.

| Teacher models | Download |
|---|---|
| ImageNet | [Baidu Yun] |

Note that the pretrained teacher models are also available in torchvision, so you can download them from the official website instead.
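Before launching training, it can help to confirm that the three folders named above exist and are not empty. The helper below is a hypothetical convenience check, not part of this repository, and it assumes the folders sit directly under the repository root.

```python
from pathlib import Path

# Folder names taken from the setup steps above.
EXPECTED_DIRS = ("syn_dataset", "true_dataset", "save/models")

def missing_dirs(repo_root="."):
    """Return the expected folders that are absent or empty under `repo_root`."""
    root = Path(repo_root)
    missing = []
    for name in EXPECTED_DIRS:
        folder = root / name
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing

if __name__ == "__main__":
    problems = missing_dirs()
    if problems:
        print("Missing or empty folders:", ", ".join(problems))
    else:
        print("All expected folders are populated.")
```

The check only looks at folder presence; it does not verify archive contents or the number of images per class.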

- To train on the 200K synthesized ImageNet-1K dataset, run the script:

sh scripts/imagenet_distill.sh

The files produced by the run will be saved in the save/student_model folder.